
Round Trip Time (RTT)

What Is Round Trip Time?

Round-trip time (RTT) is the duration, measured in milliseconds, from when a browser sends a request to when it receives a response from a server. It’s a key performance metric for web applications and one of the main factors, along with Time to First Byte (TTFB), when measuring page load time and network latency.

Using a Ping to Measure Round Trip Time

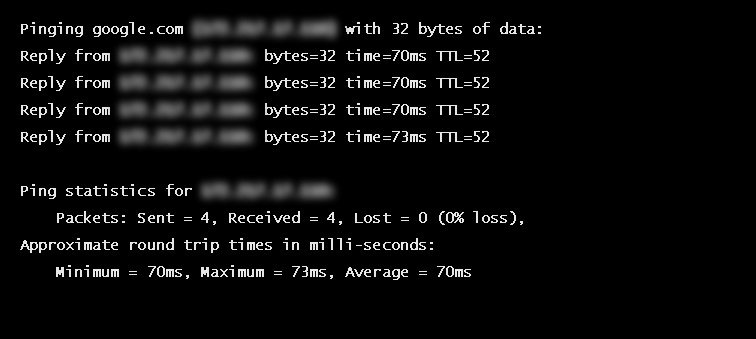

RTT is typically measured using a ping, a command-line tool that bounces a request off a server and calculates the time taken for the reply to reach the user’s device. Actual RTT may be higher than that measured by the ping due to server throttling and network congestion.

Example of a ping to google.com
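Where ICMP is blocked, or a script is more convenient than the command line, timing a TCP handshake can serve as a rough stand-in for ping. The sketch below illustrates that alternative technique rather than ping itself; the function names are made up for this example, and the measured time also includes name resolution when a hostname is passed instead of an IP address.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Return the time in milliseconds needed to complete a TCP handshake."""
    start = time.perf_counter()
    # create_connection() returns once the three-way handshake has completed,
    # which takes roughly one network round trip.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def measure(host: str, port: int, samples: int = 4) -> dict:
    """Take several samples and summarize them the way ping does."""
    values = [tcp_connect_rtt_ms(host, port) for _ in range(samples)]
    return {
        "min": min(values),
        "avg": sum(values) / len(values),
        "max": max(values),
    }
```

Calling `measure("example.com", 443)` would return the minimum, average, and maximum handshake time in milliseconds for that host.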

Factors Influencing RTT

Actual round trip time can be influenced by:

- Distance – The length a signal has to travel correlates with the time taken for a request to reach a server and a response to reach a browser.

- Transmission medium – The medium used to route a signal (e.g., copper wire, fiber optic cables) can impact how quickly a request is received by a server and routed back to a user.

- Number of network hops – Intermediate routers or servers take time to process a signal, increasing RTT. The more hops a signal has to travel through, the higher the RTT.

- Traffic levels – RTT typically increases when a network is congested with high levels of traffic. Conversely, low traffic times can result in decreased RTT.

- Server response time – The time taken for a target server to respond to a request depends on its processing capacity, the number of requests being handled and the nature of the request (i.e., how much server-side work is required). A longer server response time increases RTT.
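The distance factor sets a hard floor on RTT, because a signal cannot travel faster than light in the medium. The following sketch estimates that floor; it assumes a propagation speed of roughly two thirds of the speed of light, a common approximation for optical fiber, and ignores hops, congestion, and server response time. The function and constant names are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 2 / 3      # assumption: approximate factor for glass fiber


def min_rtt_ms(distance_km: float,
               velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Lower bound on RTT from propagation delay alone (there and back)."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    # A server 1,000 km away cannot answer in much less than about 10 ms.
    print(f"{min_rtt_ms(1000):.1f} ms")
```

Any RTT measured in practice will be higher than this bound, since the other factors in the list add to it.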


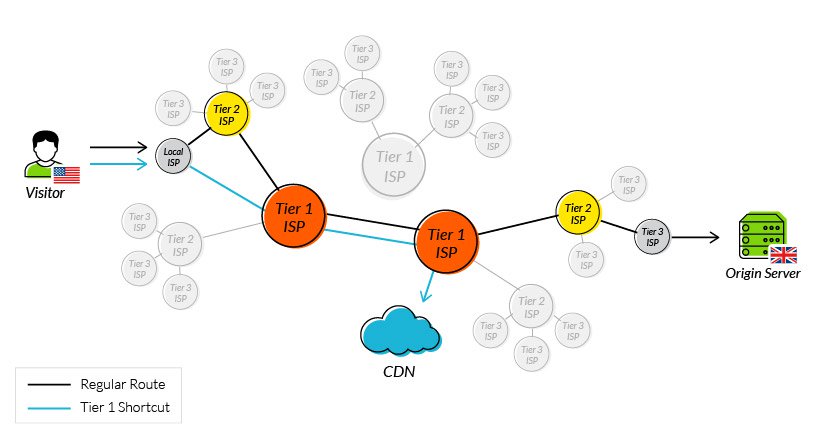

Reducing RTT Using a CDN

A CDN is a network of strategically placed servers, each holding a copy of a website’s content. It’s able to address the factors influencing RTT in the following ways:

- Points of Presence (PoPs) – A CDN maintains a network of geographically dispersed PoPs—data centers, each containing cached copies of site content, which are responsible for communicating with site visitors in their vicinity. They reduce the distance a signal has to travel and the number of network hops needed to reach a server.

- Web caching – A CDN caches HTML, media, and even dynamically generated content on a PoP in a user’s geographical vicinity. In many cases, a user’s request can be addressed by a local PoP and does not need to travel to an origin server, thereby reducing RTT.

- Load distribution – During high traffic times, CDNs route requests through backup servers with lower network congestion, speeding up server response time and reducing RTT.

- Scalability – A CDN service operates in the cloud, enabling high scalability and the ability to process a very large number of user requests. This greatly reduces the likelihood of server-side bottlenecks.

Using tier 1 access to reduce network hops

One of the original issues CDNs were designed to solve was how to reduce round trip time. By addressing the points outlined above, they have been largely successful, and it’s now reasonable to expect a decrease in your RTT of 50% or more after onboarding a CDN service.


Determining TCP Initial Round Trip Time


I was sitting in the back in Landis TCP Reassembly talk at Sharkfest 2014 (working on my slides for my next talk) when at the end one of the attendees approached me and asked me to explain determining TCP initial RTT to him again. I asked him for a piece of paper and a pen, and coached him through the process. This is what I did.

What is the Round Trip Time?

The round trip time is an important factor when determining application performance if there are many request/reply pairs being sent one after another, because each time the packets have to travel back and forth, adding delay until results are final. This applies mostly to database and remote desktop applications, but not that much for file transfers.

What is Initial RTT, and why bother?

Initial RTT is the round trip time that is determined by looking at the TCP Three Way Handshake. It is good to know the base latency of the connection, and the packets of the handshake are very small. This means that they have a good chance of getting through at maximum speed, because larger packets are often buffered somewhere before being passed on to the next hop. Another point is that the handshake packets are handled by the TCP stack of the operating system, so there is no application interference/delay at all. As a bonus, each TCP session starts with these packets, so they’re easy to find (if the capture was started early enough to catch it, of course).

Knowing Initial RTT is necessary to calculate the optimum TCP window size of a connection, in case it is performing poorly due to bad window sizes. It is also important to know when analyzing packet loss and out of order packets, because it helps to determine if the sender could even have known about packet loss. Otherwise a packet marked as retransmission could just be an out of order arrival.
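The link between RTT and window size is usually expressed as the bandwidth-delay product: the amount of data that must be in flight to keep the path full. A minimal sketch, with illustrative function names and example figures that do not come from the article:

```python
def bandwidth_delay_product_bytes(bandwidth_bits_per_s: float, rtt_s: float) -> float:
    """Bytes that must be in flight to fill a path with the given bandwidth and RTT."""
    return bandwidth_bits_per_s * rtt_s / 8


def max_throughput_bits_per_s(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 / rtt_s


if __name__ == "__main__":
    # Example figures only: a 100 Mbit/s path with 50 ms initial RTT.
    print(bandwidth_delay_product_bytes(100e6, 0.050))  # 625000.0 bytes
    # A 65,535-byte window on the same path caps throughput near 10.5 Mbit/s.
    print(max_throughput_bits_per_s(65_535, 0.050))
```

If the advertised window is much smaller than the first value, the connection cannot use the available bandwidth, however fast the link is.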

Determining Initial RTT

The problem with capture device placement

One of the rules of creating good captures is that you should never capture on the client or the server. But if the capture is taken somewhere between client and server we have a problem: how do we determine Initial RTT? Take a look at the next diagram and the solution should be obvious:

Instead of just looking at SYN to SYN/ACK or SYN/ACK to ACK, we always look at all three TCP handshake packets. The capture device only sees the SYN after it has already traveled the distance from the client to the capture spot. The same applies to the SYN/ACK from the server, which has already traveled from the server to the capture spot. The colored lines tell us that by looking at all three packets we get the full RTT if we add the timings.
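The arithmetic can be sketched in a few lines. The timestamps below are hypothetical capture times in seconds, and the class and function names are made up for illustration; the point is that the two partial timings always add up to the time between the first and third handshake packet.

```python
from dataclasses import dataclass


@dataclass
class Handshake:
    syn: float      # capture time of the client's SYN
    syn_ack: float  # capture time of the server's SYN/ACK
    ack: float      # capture time of the client's final ACK


def initial_rtt(h: Handshake) -> tuple:
    """Return (server_side, client_side, total) delays in seconds."""
    server_side = h.syn_ack - h.syn   # capture spot -> server -> capture spot
    client_side = h.ack - h.syn_ack   # capture spot -> client -> capture spot
    return server_side, client_side, server_side + client_side


if __name__ == "__main__":
    # Hypothetical timestamps taken somewhere between client and server.
    server_side, client_side, total = initial_rtt(Handshake(0.000, 0.030, 0.042))
    print(f"server side {server_side * 1000:.0f} ms, "
          f"client side {client_side * 1000:.0f} ms, "
          f"iRTT {total * 1000:.0f} ms")
```

Moving the capture point only shifts time between the two partial values; the total stays the same.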

Frequently asked questions

Question: can I also look at Ping packets (ICMP Echo Request/Reply)? Answer: no, unless you captured on the client sending the ping. Take a look at the dual colored graph again – if the capture device is in the middle you’re not going to be able to determine the full RTT from partial request and reply timings.

Discussions — 18 Responses

Good article. I’ll have to point a few friends here for reference.

Just wanted to mention that I’ve seen intermediate devices like load balancers or proxies that immediately respond to the connection request throw people off. Make sure you know what’s in your path and always double/triple check the clients.

Thanks, Ty. I agree, you have to know what’s in the path between client and server. Devices sitting in the middle that accept the client connection and open another connection towards the server will lead to partial results, so you have to be aware of them. Good point. They’re usually simple to spot though – if you have an outgoing connection where you know that the packets are leaving your LAN and you get Initial RTT of less than a millisecond it’s a strong hint for a proxy (or similar device).

I really liked your article. I have one question:

Let’s say we have the below deployment:

Client ——> LoadBalancer ——> Proxy ——–> Internet/Server

In case we “terminate” the TCP connection on the Proxy and establish a new TCP connection from the Proxy to the Server, should we measure 2 RTTs, one for each connection, with their sum being the final RTT? What should we measure in order to determine the speed in such deployments?

Regards, Andreas

Proxies can be difficult, because there is often only one connection per client but many outgoing connections to various servers. It usually complicates analysis of performance issues because you have to find and match requests first.

Luckily, for TCP analysis you only need to care about the RTT of each connection, because packet loss and delays are only relevant to those.

Of course, if you’re interested in the total RTT you can add the 2 partial RTTs to see where the time is spent.

Thanks a lot for your response 🙂

good article

Thanks for mentioning REF, which is a great help.

so where we need to capture the tcp packet actually to know the RTT? for ex if its like :

server—-newyork—-chicago—-california—–washington—-server and if i need to know the RTT between server in newyork to washington Please let me know

You can capture anywhere between the two servers and read the RTT between them from SYN to ACK. By reading the time between the first and third handshake packet it doesn’t matter where you capture, which is the beauty of it. But keep in mind that the TCP connection needs to be end-to-end, so if anything is proxying the connection you have to capture at least twice, on each side of the proxying device.

How can find packet travelling time from client to server are on different system.

To determine the round trip time you always need packets that are exchanged between the two systems. So if I understand your question correctly and you don’t have access to packets of a communication between the client and the server you can’t determine iRTT.

Can u please tell how to solve these type of problems 2. If originally RTTs = 14 ms and a is set to 0.2, calculate the new RTT s after the following events (times are relative to event 1): 1. Event 1: 00 ms Segment 1 was sent. 2. Event 2: 06 ms Segment 2 was sent. 3. Event 3: 16 ms Segment 1 was timed-out and resent. 4. Event 4: 21 ms Segment 1 was acknowledged. 5. Event 5: 23 ms Segment 2 was acknowledged

I’m sorry, but I’m not exactly sure what this is supposed to be. What is it good for, and what is this a set to 0.2? The only thing that may make sense is measuring the time it took to ACK each segment (1 -> 21ms if measuring the time from the original segment, 5 otherwise, 2 -> 17ms), but I have no idea what to use “a” for 🙂
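One possible reading of the exercise above, offered as an assumption rather than a confirmed answer: “a” is the smoothing factor α of TCP’s smoothed RTT estimator, which updates the estimate as (1 − α) · old + α · sample, and samples from retransmitted segments are skipped (Karn’s algorithm) because it is unclear which transmission was acknowledged. Under that reading, only segment 2 contributes a sample (23 ms − 6 ms = 17 ms). The function names below are illustrative.

```python
def update_srtt(srtt_ms: float, sample_ms: float, alpha: float) -> float:
    """Exponentially weighted moving average of RTT samples."""
    return (1 - alpha) * srtt_ms + alpha * sample_ms


def srtt_after_events(srtt_ms: float, alpha: float, segments: list) -> float:
    """segments: (sent_ms, acked_ms, was_retransmitted) tuples in ACK order."""
    for sent_ms, acked_ms, was_retransmitted in segments:
        if was_retransmitted:
            continue  # ambiguous sample, skipped under Karn's algorithm
        srtt_ms = update_srtt(srtt_ms, acked_ms - sent_ms, alpha)
    return srtt_ms


if __name__ == "__main__":
    segments = [
        (0, 21, True),   # segment 1: timed out and was resent, so no sample
        (6, 23, False),  # segment 2: clean 17 ms sample
    ]
    print(f"{srtt_after_events(14, 0.2, segments):.1f} ms")
```

With these inputs the estimate moves from 14 ms to 0.8 · 14 + 0.2 · 17 = 14.6 ms.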

how to calculate RTT using NS-2(network simulator-2)?

I haven’t played with NS-2 yet, but if you can capture packets it should be the same procedure.

ok..thank you sir

Hi, Subject: iRTT Field Missing in Wireshark Capture

We have two Ethernet boards, one board perfectly accomplishes an FTP file transfer the second does not and it eventually times out early on, mishandling the SYN SYN:ACK phase. We analyzed the two Wireshark captures and noticed that the good capture has the iRTT time stamp field included in the dump while the bad one does not. What is the significance of not seeing that iRTT in the bad capture? Is that indicative to why it fails the xfer?

The iRTT field can only be present if the TCP handshake was complete, meaning you have all three packets in the capture (SYN, SYN/ACK, ACK). If one is missing the iRTT can’t be calculated correctly, and the field won’t be present. So if the field is missing it means that the TCP connection wasn’t established, which also means that no data transfer was possible.


How to monitor and detect SQL Server round-trip performance issues using custom designed metrics

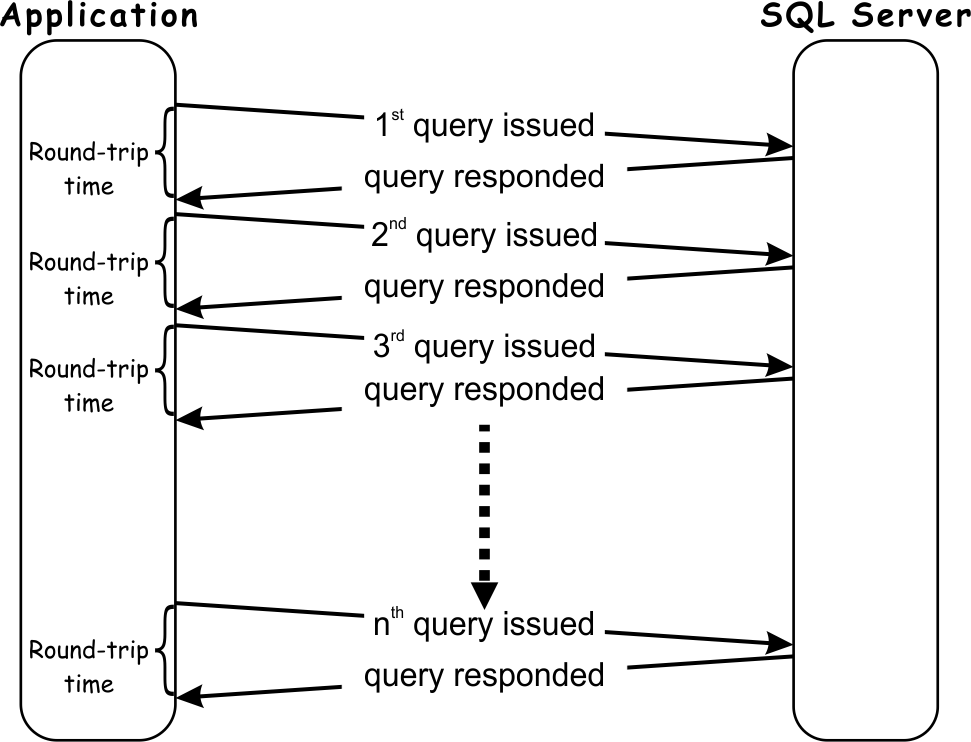

For every query issued by the application, time is needed for the request to reach SQL Server and then for the results to get back to the application. As all communication between an application and SQL Server goes via some network (LAN or WAN), network performance could be a critical factor affecting overall performance. Two factors affect network performance: latency and throughput.

Latency, the so-called SQL Server round-trip time (RTT), is often overlooked by DBAs and database developers, but excessive round-trip time can severely affect performance. However, to understand why and how the round trip affects SQL Server performance, it is first vital to understand the SQL protocol itself.

A talkative SQL protocol

The SQL protocol is designed to rely on intensive communication between the client and SQL Server. It is a very talkative protocol in which the client can create a series of requests to the database, and each request must wait for the response to the previous one before it can be sent.

Since applications tend to handle their data in a way that minimizes the data volume transferred in each interaction with the database, the result is usually that a significant number of requests and responses are needed for dealing with even modest data requests. In other words, an application that interacts with SQL Server is often forced to spend excessive time waiting for all the request/response pairs to cross the network.

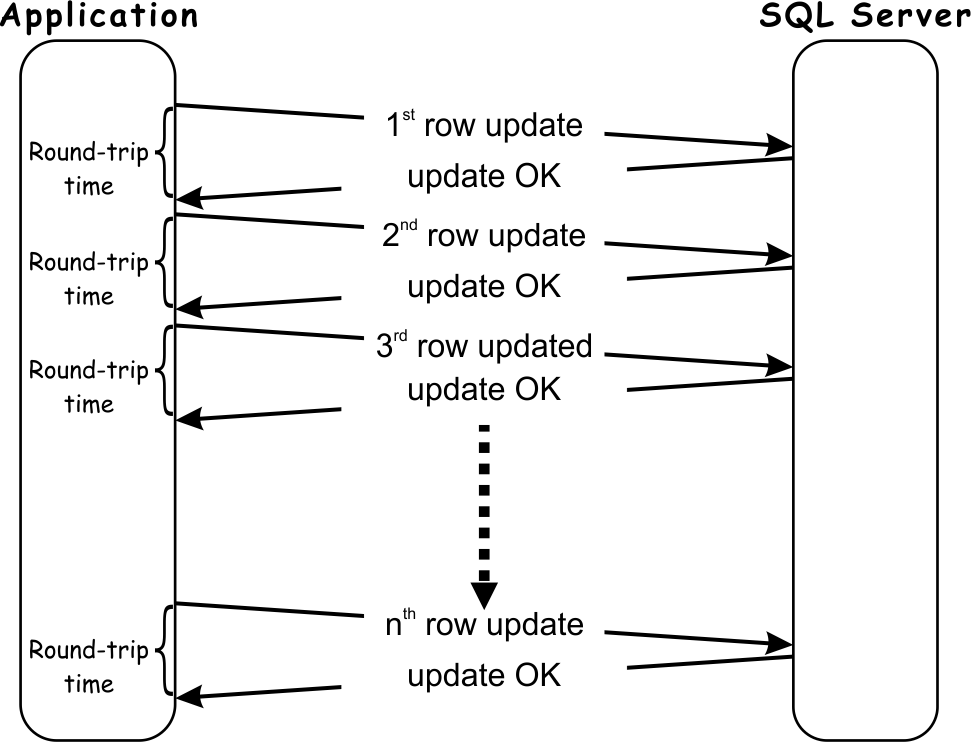

Looking at the diagram above, it is clear that even in an ideal scenario, where SQL Server query processing time is zero, the application must wait for at least the sum of the SQL Server round-trip times of all query executions. What’s more, when a single query has to return a substantial amount of data, that query can incur more than one round trip, depending on the underlying TCP protocol. The number of round trips in such cases can grow quickly, further increasing the total round-trip time.

The scenario where the inefficiency of the SQL protocol is most noticeable is update statements. Even when a single update SQL query is issued, the application must wait a minimum of one round-trip time for each row updated in the target table.

The more data that has to be updated, the longer the application must wait on the round trips.

It is now clear that application performance does not depend strictly and always on SQL Server, so let’s expand this a bit to get a better insight into scenarios when the high round-trip times could affect the application performance.

When developing the application, developers usually work in a controlled environment with high-end machines, often with the whole development environment on the same machine as SQL Server, and thus with close-to-zero delay in communication with the database. After development completes, the application has to be deployed in the customer environment, and this is where the problem starts.

Depending on the type of the application, it can be deployed in a LAN (Local Area Network), WAN (Wide Area Network) or combined LAN-WAN environment.

It can often be heard from developers that the application should use a LAN or WAN connection with high throughput to get satisfactory performance. However, it is not unusual that the application users start to complain that instead of getting results immediately or in a few seconds they have to wait tens of seconds or even minutes to get results. The problem here is that SQL Server applications are not throughput-based but transaction-based, meaning that they do not move a significant amount of data in a single transaction, but rather small chunks of data in numerous transactions. So for a SQL Server application, network round-trip time (latency) is often more critical for performance than network throughput.

Various network round-trip times:

With these network round-trip times, an impact on the application performance for a transaction that requires 500 round-trips can be calculated. For the calculation, some typical round-trip times were used:

So the difference and its impact on performance are noticeable. For an application deployed in a LAN, the total network round-trip time is 1.5 seconds (500 round trips at 3 ms each), while in a WAN it is 50 seconds (500 round trips at 100 ms each).

So it is now evident that round-trip time is an essential performance parameter to monitor, especially in modern environments that are often deployed over a WAN, either by deploying SQL Servers in the cloud or by using web or mobile-based applications.
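The calculation behind these totals is a single multiplication. In the sketch below, the per-trip values of 3 ms and 100 ms are inferred from the totals quoted above (1.5 s and 50 s for 500 round trips); the function name is illustrative.

```python
def total_round_trip_seconds(round_trips: int, rtt_ms: float) -> float:
    """Time spent purely waiting on the network, ignoring query processing."""
    return round_trips * rtt_ms / 1000.0


if __name__ == "__main__":
    for label, rtt_ms in (("LAN", 3), ("WAN", 100)):
        total = total_round_trip_seconds(500, rtt_ms)
        print(f"{label}: {total:.1f} s")
```

Because the cost scales with the number of round trips, batching several statements into one request reduces the wait far more than adding bandwidth does.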

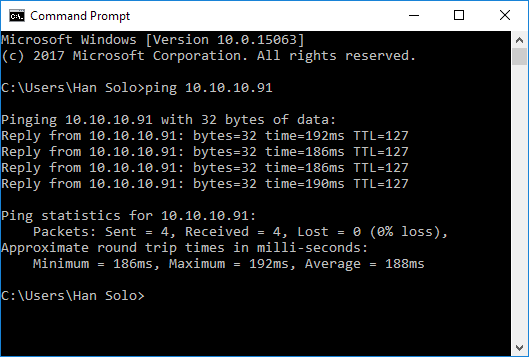

Monitoring the round-trip time – Ping

Using the system ping utility is the easiest way to measure network round-trip time between two machines. The ping measures the round-trip time (latency) for transferring data to another machine plus transferring data from that machine back to the first machine. Below is an example of measuring ping between two machines, where the destination machine is deployed in a WAN and the connection between the machines is established using a VPN.

So in this particular case, an average round-trip is 188 ms, which is not a particularly good result.

However, ping results are purely network-related, and for DBAs it is more important to measure how long it takes the database to respond to a single application request. For that purpose ping is not the best choice, but it can still be the first choice for initial troubleshooting.
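A simple way to approximate the database round trip from the application side is to time a trivial query several times. The helper below is a sketch under that assumption; `run_query` stands for any function that sends one request and waits for the reply, and both names are hypothetical rather than part of any SQL Server tool.

```python
import time
from statistics import mean
from typing import Callable


def time_round_trips(run_query: Callable[[], object], repeats: int = 10) -> dict:
    """Run the callable repeatedly and report min/avg/max duration in ms."""
    samples_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()  # one request/response pair
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    return {"min": min(samples_ms), "avg": mean(samples_ms), "max": max(samples_ms)}


if __name__ == "__main__":
    # Stand-in for a real query: a 10 ms sleep simulates the wait for a reply.
    print(time_round_trips(lambda: time.sleep(0.01), repeats=5))
```

In practice the callable would execute something as cheap as `SELECT 1` through the application’s own database driver, so that the measured time is dominated by the round trip and not by query processing.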

Monitoring the round-trip time – SQL Server Profiler

SQL Server Profiler is a handy tool that can provide much information about SQL Server, including the ability to track round-trip times. The most important events for measuring the round trip are the SQL:BatchCompleted and RPC:Completed events. However, Profiler can be invasive, and using it is sometimes the cause of degraded SQL Server performance on a highly active server. It is often a choice for quick troubleshooting, but not a long-term choice for monitoring the round trip and collecting historical data.

There are some other custom-built options for measuring the round-trip time, but many if not most of those require a significant investment of knowledge, time and energy and are often out of reach for new or less experienced database administrators.


What is round-trip time (RTT) and how to reduce it?

In this article:

- Factors affecting RTT

- How to calculate RTT using ping

- Normal RTT values

- How to reduce RTT

- I want to reduce RTT with CDN. What provider to choose?

Round-trip time (RTT) is the time it takes for the server to receive a data packet, process it, and send the client an acknowledgement that the request has been received. It is measured from the time the signal is sent until the response is received.

When a user clicks a button on a website, the request is sent to the server as a data packet. The server needs time (RTT) to process the data, generate a response, and send it back. Each action, like sending a form upon a click, may require multiple requests.

RTT determines the total network latency and helps monitor the state of data channels. A user cannot communicate with the server in less than one RTT, and the browser requires at least three round trip times to initiate a connection:

- to resolve the DNS name;

- to configure the TCP connection;

- to send an HTTP request and receive the first byte.
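The three steps above give a rough lower bound on the time to first byte. The sketch below adds them up under simplifying assumptions that are not from the article: the DNS lookup costs one round trip to the resolver, the other two steps cost one round trip each to the web server, there is no TLS handshake, and server processing time is zero. The function name and the example values are illustrative.

```python
def min_time_to_first_byte_ms(dns_rtt_ms: float, server_rtt_ms: float) -> float:
    """Lower bound: DNS lookup + TCP handshake + HTTP request/first byte."""
    dns_lookup = dns_rtt_ms         # resolve the DNS name
    tcp_handshake = server_rtt_ms   # configure the TCP connection
    http_exchange = server_rtt_ms   # send the request, receive the first byte
    return dns_lookup + tcp_handshake + http_exchange


if __name__ == "__main__":
    # Example values only: 20 ms to the resolver, 80 ms to the web server.
    print(min_time_to_first_byte_ms(20, 80))  # 180
```

Halving the RTT to the server therefore saves two round trips’ worth of time before any content arrives.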

In some latency-sensitive services, e.g., online games, the RTT is shown on the screen.

Factors affecting RTT

Distance and number of intermediate nodes. A node is a single device on the network that sends and receives data. The first node is the user’s computer. A home router or routers at the district, city, or country level are often intermediate nodes. The longer the distance between the client and server, the more intermediate nodes the data must pass through and the higher the RTT.

Server and intermediate node congestion. For example, a request may be sent to a fully loaded server that is concurrently processing other requests. It can’t accept this new request until the other ones are processed, which increases the RTT. The RTT includes the total time spent on sending and processing a request at each hop, so if one of the intermediate nodes is overloaded, the RTT increases.

You never know exactly to what extent the RTT will grow based on how the infrastructure is loaded; it depends on individual data links, intermediate node types, hardware settings, and underlying protocols.

Physical link types and interference. Physical data channels include copper, fiber optic, and radio channels. The RTT here is affected by the amount of interference. On the Wi-Fi operating frequency, noise and other signals interfere with the useful signal, which reduces the number of packets per second. The RTT is therefore likely to be higher over Wi-Fi than over fiber optics.

How to calculate RTT using ping

To measure the RTT, you can run the ping command in the command line, e.g., “ping site.com”.

Requests will be sent to the server using ICMP. Their default number is four, but it can be adjusted. The system will record the delay between sending each request and receiving a response and display it in milliseconds: minimum, maximum, and average.

The ping command shows the total RTT value. If you want to trace the route and measure the RTT at each individual node, you can use the tracert command (or traceroute on Linux or macOS). It can also be run from the command line.

Normal RTT values

Many factors affect RTT, making it difficult to define a normal value; the smaller the number, the better.

In online games, delays over 50 milliseconds are noticeable: players cannot accurately hit their targets due to network latency. Pings above 200 milliseconds matter even when users browse news feeds or place online orders: many pages open slowly and not always fully. A buyer is more likely to leave a slow website without making a purchase and never come back, which is what 79 percent of users do.

Let’s compare the pings of two sites: the US jewelry store Fancy and the German news portal Nachrichtenleicht.de. We will ping them from Germany.

The RTT of a German news portal is almost three times lower than that of a US store because we ping from Germany. There are fewer nodes between the user and the server, which are both in the same country, so the RTT is lower.

How to reduce RTT

Connect to a content delivery network (CDN). The hosting provider’s servers are usually located in the same region where most of the audience lives. But if the audience of the site grows or changes geographically, and content is requested by users who are far away from the server, RTT increases for them, and the site loading speed is slower. To increase the loading speed, use a CDN.

CDN (Content Delivery Network) is a service that caches (mostly static) content and stores it on servers in different regions. Therefore, only dynamic content is downloaded from the main source server, which is far from the user. Heavy static files—the main share of the website—are downloaded from the nearest CDN server, which reduces the RTT by up to 50 percent.

For example, the client requests content from a CDN-connected site. The resource recognizes that there is a caching server in the user’s region and that it has a cached copy of the requested content. To speed up the loading, the site substitutes links to files so that they are retrieved not from the hosting provider’s servers, but from the caching server instead since it is located closer. If the content is not in the cache, the CDN downloads it directly from the hosting server, passes it to the user, and stores it in the cache. Now a client on the same network can request the resource from another device and load the content faster without having to refer to the origin server.
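The hit-or-miss behavior described above can be sketched as a small pull-through cache. This is a simplified illustration with hypothetical names, not how any particular CDN is implemented; in particular it never expires entries, whereas real caching servers follow the caching rules set by the site.

```python
from typing import Callable, Dict


class EdgeCache:
    """Caching server that fetches from the origin only on a cache miss."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_requests = 0  # counts long round trips to the origin

    def get(self, path: str) -> bytes:
        if path in self._store:
            return self._store[path]          # hit: served from nearby
        self.origin_requests += 1
        body = self._fetch_from_origin(path)  # miss: go to the origin
        self._store[path] = body              # keep a copy for later requests
        return body


if __name__ == "__main__":
    cache = EdgeCache(lambda path: f"content of {path}".encode())
    cache.get("/logo.png")  # first request reaches the origin
    cache.get("/logo.png")  # second request is answered from the cache
    print(cache.origin_requests)  # 1
```

Only the first request for a file pays the long round trip to the origin; every later request in that region is served over the shorter path.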

Also, CDN is capable of load balancing: it routes requests through redundant servers if the load on the closest one is too high.

Optimize content and server apps. If your website has visitors from different countries/regions, you need a CDN to offset the increased RTT caused by long distances. In addition, the RTT is affected by the request processing time, which can be improved by the below content optimizations:

- Audit website pages for unnecessary scripts and functions, reduce them, if possible.

- Combine and simplify external CSS.

- Combine JavaScript files and load them with the async or defer attributes so that the HTML is parsed first and the scripts run later.

- Use JS and CSS for individual page types to reduce load times.

- Use the <link> tag instead of @import url("style.css") rules.

- Use advanced compression media technologies: WebP for images, HEVC for video.

- Use CSS-sprites: merge images into one and show its parts on the webpage. Use special services like SpriteMe.

I want to reduce RTT with CDN. What provider to choose?

For fast content delivery anywhere in the world, you need a reliable CDN with a large number of points of presence. Try Gcore CDN, a next-generation content delivery network with over 140 PoPs on 5 continents, 30 ms average latency worldwide, and many built-in web security features. It will help to accelerate the dynamic and static content of your websites or applications, significantly reduce RTT, and keep users satisfied.


Round Trip Time (RTT)

Round Trip Time (RTT) is the length of time it takes for a data packet to be sent to a destination plus the time it takes for an acknowledgment of that packet to be received back at the origin. The RTT between a network and server can be determined by using the ping command.

This will output something like:

In the above example, the average round trip time is shown on the final line as 26.8ms.
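When the average needs to be read by a script rather than by eye, the summary line can be parsed. The sketch below handles the summary formats printed by ping on Linux (`rtt min/avg/max/mdev = ...`) and on macOS/BSD (`round-trip min/avg/max/stddev = ...`); Windows prints a different summary that is not covered here. The sample line is made up for illustration, with only the 26.8 ms average echoing the example above, and the function name is hypothetical.

```python
import re
from typing import Optional

SUMMARY_RE = re.compile(
    r"(?:rtt|round-trip) min/avg/max/(?:mdev|stddev) = "
    r"([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_ping_summary(output: str) -> Optional[dict]:
    """Extract min/avg/max/deviation (ms) from ping output, or None if absent."""
    match = SUMMARY_RE.search(output)
    if match is None:
        return None
    minimum, average, maximum, deviation = (float(v) for v in match.groups())
    return {"min": minimum, "avg": average, "max": maximum, "dev": deviation}


if __name__ == "__main__":
    # Illustrative summary line, not real measurement data.
    sample = "rtt min/avg/max/mdev = 25.1/26.8/28.9/1.2 ms"
    print(parse_ping_summary(sample)["avg"])  # 26.8
```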


Provisioning the NetScaler virtual appliance by using OpenStack

Provisioning the NetScaler virtual appliance by using the Virtual Machine Manager

Configuring NetScaler virtual appliances to use SR-IOV network interface

Configure a NetScaler VPX on KVM hypervisor to use Intel QAT for SSL acceleration in SR-IOV mode

Configuring NetScaler virtual appliances to use PCI Passthrough network interface

Provisioning the NetScaler virtual appliance by using the virsh Program

Managing the NetScaler Guest VMs

Provisioning the NetScaler virtual appliance with SR-IOV on OpenStack

Configuring a NetScaler VPX instance on KVM to use OVS DPDK-Based host interfaces

Apply NetScaler VPX configurations at the first boot of the NetScaler appliance on the KVM hypervisor

Deploy a NetScaler VPX instance on AWS

AWS terminology

AWS-VPX support matrix

Limitations and usage guidelines

Prerequisites

Configure AWS IAM roles on NetScaler VPX instance

How a NetScaler VPX instance on AWS works

Deploy a NetScaler VPX standalone instance on AWS

Scenario: standalone instance

Download a NetScaler VPX license

Load balancing servers in different availability zones

How high availability on AWS works

Deploy a VPX HA pair in the same AWS availability zone

High availability across different AWS availability zones

Deploy a VPX high-availability pair with elastic IP addresses across different AWS zones

Deploy a VPX high-availability pair with private IP addresses across different AWS zones

Deploy a NetScaler VPX instance on AWS Outposts

Protect AWS API Gateway using the NetScaler Web Application Firewall

Add back-end AWS auto scaling service

Deploy NetScaler GSLB on AWS

Deploy NetScaler VPX on AWS

Configure a NetScaler VPX instance to use SR-IOV network interface

Configure a NetScaler VPX instance to use Enhanced Networking with AWS ENA

Upgrade a NetScaler VPX instance on AWS

Troubleshoot a VPX instance on AWS

Deploy a NetScaler VPX instance on Microsoft Azure

Azure terminology

Network architecture for NetScaler VPX instances on Microsoft Azure

Configure a NetScaler standalone instance

Configure multiple IP addresses for a NetScaler VPX standalone instance

Configure a high-availability setup with multiple IP addresses and NICs

Configure a high-availability setup with multiple IP addresses and NICs by using PowerShell commands

Deploy a NetScaler high-availability pair on Azure with ALB in the floating IP-disabled mode

Deploy the NetScaler for Azure DNS private zone

Configure a NetScaler VPX instance to use Azure accelerated networking

Configure HA-INC nodes by using the NetScaler high availability template with Azure ILB

Configure HA-INC nodes by using the NetScaler high availability template for internet-facing applications

Configure a high-availability setup with Azure external and internal load balancers simultaneously

Install a NetScaler VPX instance on Azure VMware solution

Configure a NetScaler VPX standalone instance on Azure VMware solution

Configure a NetScaler VPX high availability setup on Azure VMware solution

Configure Azure route server with NetScaler VPX HA pair

Add Azure autoscale settings

Azure tags for NetScaler VPX deployment

Configure GSLB on NetScaler VPX instances

Configure GSLB on an active-standby high availability setup

Deploy NetScaler GSLB on Azure

Deploy NetScaler Web App Firewall on Azure

Configure address pools (IIP) for a NetScaler Gateway appliance

Configure multiple IP addresses for a NetScaler VPX instance in standalone mode by using PowerShell commands

Additional PowerShell scripts for Azure deployment

Create a support ticket for the VPX instance on Azure

Deploy a NetScaler VPX instance on Google Cloud Platform

Deploy a VPX high-availability pair on Google Cloud Platform

Deploy a VPX high-availability pair with external static IP address on Google Cloud Platform

Deploy a single NIC VPX high-availability pair with private IP address on Google Cloud Platform

Deploy a VPX high-availability pair with private IP addresses on Google Cloud Platform

Install a NetScaler VPX instance on Google Cloud VMware Engine

Add back-end GCP Autoscaling service

VIP scaling support for NetScaler VPX instance on GCP

Troubleshoot a VPX instance on GCP

Jumbo frames on NetScaler VPX instances

Automate deployment and configurations of NetScaler

Allocate and apply a license

Data governance

Console Advisory Connect

Upgrade and downgrade a NetScaler appliance

Before you begin

Upgrade considerations for configurations with classic policies

Upgrade considerations for customized configuration files

Upgrade considerations - SNMP configuration

Download a NetScaler release package

Upgrade a NetScaler standalone appliance

Downgrade a NetScaler standalone appliance

Upgrade a high availability pair

In Service Software Upgrade support for high availability

Downgrade a high availability pair

Troubleshooting

Solutions for Telecom Service Providers

Large Scale NAT

Points to Consider before Configuring LSN

Configuration Steps for LSN

Sample LSN Configurations

Configuring Static LSN Maps

Configuring Application Layer Gateways

Logging and Monitoring LSN

TCP SYN Idle Timeout

Overriding LSN configuration with Load Balancing Configuration

Clearing LSN Sessions

Load Balancing SYSLOG Servers

Port Control Protocol

LSN44 in a cluster setup

Dual-Stack Lite

Points to Consider before Configuring DS-Lite

Configuring DS-Lite

Configuring DS-Lite Static Maps

Configuring Deterministic NAT Allocation for DS-Lite

Configuring Application Layer Gateways for DS-Lite

Logging and Monitoring DS-Lite

Port Control Protocol for DS-Lite

Large Scale NAT64

Points to Consider for Configuring Large Scale NAT64

Configuring DNS64

Configuring Large Scaler NAT64

Configuring Application Layer Gateways for Large Scale NAT64

Configuring Static Large Scale NAT64 Maps

Logging and Monitoring Large Scale NAT64

Port Control Protocol for Large Scale NAT64

LSN64 in a cluster setup

Mapping Address and Port using Translation

Telco subscriber management

Subscriber aware traffic steering

Subscriber aware service chaining

Subscriber aware traffic steering with TCP optimization

Policy based TCP profile selection

Load Balance Control-Plane Traffic that is based on Diameter, SIP, and SMPP Protocols

Provide DNS Infrastructure/Traffic Services, such as, Load Balancing, Caching, and Logging for Telecom Service Providers

Provide Subscriber Load Distribution Using GSLB Across Core-Networks of a Telecom Service Provider

Bandwidth Utilization Using Cache Redirection Functionality

NetScaler TCP Optimization

Getting Started

Management Network

High Availability

Gi-LAN Integration

TCP Optimization Configuration

Analytics and Reporting

Real-time Statistics

Technical Recipes

Scalability

Optimizing TCP Performance using TCP Nile

Troubleshooting Guidelines

Frequently Asked Questions

NetScaler Video Optimization

Configuring Video Optimization over TCP

Video Optimization over UDP

NetScaler URL Filtering

URL Categorization

Authentication, Authorization, and Auditing

Admin Partition

Connection Management

Content Switching

Integrated Caching

Installing, Upgrading, and Downgrading

Load Balancing

NetScaler GUI

Authentication, authorization, and auditing application traffic

How authentication, authorization, and auditing works

Basic components of authentication, authorization, and auditing configuration

Authentication virtual server

Authorization policies

Authentication profiles

Authentication policies

Users and groups

Authentication methods

Multi-Factor (nFactor) authentication

SAML authentication

OAuth authentication

LDAP authentication

RADIUS authentication

TACACS authentication

Client certificate authentication

Negotiate authentication

Web authentication

Forms based authentication

401 based authentication

reCaptcha for nFactor authentication

Native OTP support for authentication

Push notification for OTP

Authentication, authorization, and auditing configuration for commonly used protocols

Single sign-on types

NetScaler Kerberos single sign-on

Enable SSO for Basic, Digest, and NTLM authentication

Content Security Policy response header support for NetScaler Gateway and authentication virtual server generated responses

Self-service password reset

Web Application Firewall protection for VPN virtual servers and authentication virtual servers

Polling during authentication

Session and traffic management

Rate Limiting for NetScaler Gateway

Authorizing user access to application resources

Auditing authenticated sessions

NetScaler as an Active Directory Federation Service proxy

Web Services Federation protocol

Active Directory Federation Service Proxy Integration Protocol compliance

On-premises NetScaler Gateway as an identity provider to Citrix Cloud

Support for active-active GSLB deployments on NetScaler Gateway

Configuration support for SameSite cookie attribute

Handling authentication, authorization and auditing with Kerberos/NTLM

Troubleshoot authentication and authorization related issues

Admin partition

NetScaler configuration support in admin partition

Configure admin partitions

VLAN configuration for admin partitions

VXLAN support for admin partitions

SNMP support for admin partitions

Audit log support for admin partitions

Display configured PMAC addresses for shared VLAN configuration

Action analytics

Configure a selector

Configure a stream identifier

View statistics

Group records on attribute values

Clear a stream session

Configure policy for optimizing traffic

How to limit bandwidth consumption for user or client device

AppExpert applications

How AppExpert application works

Customize AppExpert configuration

Configure user authentication

Monitor NetScaler statistics

Delete an AppExpert application

Configure application authentication, authorization, and auditing

Set up a custom NetScaler application

NetScaler Gateway Applications

Enable AppQoE

AppQOE actions

AppQoE parameters

AppQoE policies

Entity templates

HTTP callouts

How an HTTP callout works

Notes on the format of HTTP requests and responses

Configure an HTTP callout

Verify the configuration

Invoke an HTTP callout

Avoid HTTP callout recursion

Cache HTTP callout responses

Use Case: Filter clients by using an IP blacklist

Use Case: ESI support for fetching and updating content dynamically

Use Case: Access control and authentication

Use Case: OWA-Based spam filtering

Use Case: Dynamic content switching

Pattern sets and data sets

How string matching works with pattern sets and data sets

Configure a pattern set

Configure a data set

Use Pattern sets and data sets

Sample usage

Configure and use variables

Use case for caching user privileges

Use case for limiting the number of sessions

Policies and expressions

Introduction to policies and expressions

Configuring advanced policy infrastructure

Configure advanced policy expression: Getting started

Advanced policy expressions: Evaluating text

Advanced policy expressions: Working with dates, times, and numbers

Advanced policy expressions: Parsing HTTP, TCP, and UDP data

Advanced policy expressions: Parsing SSL certificates

Advanced policy expressions: IP and MAC Addresses, Throughput, VLAN IDs

Advanced policy expressions: Stream analytics functions

Advanced policy expressions: DataStream

Typecasting data

Regular expressions

Summary examples of advanced policy expressions

Tutorial examples of advanced policies for rewrite

Rewrite and responder policy examples

Rate limiting

Configure a stream selector

Configure a traffic rate limit identifier

Configure and bind a traffic rate policy

View the traffic rate

Test a rate-based policy

Examples of rate-based policies

Sample use cases for rate-based policies

Rate limiting for traffic domains

Configure rate limit at packet level

Enable the responder feature

Configure a responder action

Configure a responder policy

Bind a responder policy

Set the default action for a responder policy

Responder action and policy examples

Diameter support for responder

RADIUS support for responder

DNS support for the responder feature

MQTT support for responder

How to redirect HTTP requests

Content-length header behavior in a rewrite policy

Rewrite action and policy examples

URL transformation

RADIUS support for the rewrite feature

Diameter support for rewrite

DNS support for the rewrite feature

MQTT support for rewrite

String maps

Getting started

Advanced policy expressions for URL evaluation

Configure URL set

URL pattern semantics

URL categories

Configuring the AppFlow Feature

Exporting Performance Data of Web Pages to AppFlow Collector

Session Reliability on NetScaler High Availability Pair

API Security

Import API Specification

API Specification Validation

Advanced Policy Expressions using API Specification

API Traffic Visibility using API Specification Validation

Application Firewall

FAQs and Deployment Guide

Introduction to Citrix Web App Firewall

Configuring the Application Firewall

Enabling the Application Firewall

The Application Firewall Wizard

Manual Configuration

Manual Configuration By Using the GUI

Manual Configuration By Using the Command Line Interface

Manually Configuring the Signatures Feature

Adding or Removing a Signatures Object

Configuring or Modifying a Signatures Object

Protecting JSON Applications using Signatures

Updating a Signatures Object

Signature Auto Update

Snort rule integration

Exporting a Signatures Object to a File

The Signatures Editor

Signature Updates in High-Availability Deployment and Build Upgrades

Overview of Security checks

Top-Level Protections

HTML Cross-Site Scripting Check

HTML SQL Injection Checks

SQL grammar-based protection for HTML and JSON payload

Command injection grammar-based protection for HTML payload

Relaxation and deny rules for handling HTML SQL injection attacks

HTML Command Injection Protection

Custom keyword support for HTML payload

XML External Entity Protection

Buffer Overflow Check

Application Firewall Support for Google Web Toolkit

Cookie Protection

Cookie Consistency Check

Cookie Hijacking Protection

SameSite cookie attribute

Data Leak Prevention Checks

Credit Card Check

Safe Object Check

Advanced Form Protection Checks

Field Formats Check

Form Field Consistency Check

CSRF Form Tagging Check

Managing CSRF Form Tagging Check Relaxations

URL Protection Checks

Start URL Check

Deny URL Check

XML Protection Checks

XML Format Check

XML Denial-of-Service Check

XML Cross-Site Scripting Check

XML SQL Injection Check

XML Attachment Check

Web Services Interoperability Check

XML Message Validation Check

XML SOAP Fault Filtering Check

JSON Protection Checks

JSON DOS Protection

JSON SQL Protection

JSON XSS Protection

JSON Command Injection Protection

Managing Content Types

Creating Application Firewall Profiles

Enforcing HTTP RFC Compliance

Configuring Application Firewall Profiles

Application Firewall Profile Settings

Changing an Application Firewall Profile Type

Exporting and Importing an Application Firewall Profile

Detailed troubleshooting with WAF logs

File Upload Protection

Configuring and Using the Learning Feature

Dynamic Profiling

Supplemental Information about Profiles

Custom error status and message for HTML, XML, or JSON error object

Policy Labels

Firewall Policies

Auditing Policies

Importing and Exporting Files

Global Configuration

Engine Settings

Confidential Fields

Field Types

XML Content Types

JSON Content Types

Statistics and Reports

Application Firewall Logs

PCRE Character Encoding Format

Whitehat WASC Signature Types for WAF Use

Streaming Support for Request Processing

Trace HTML Requests with Security Logs

Application Firewall Support for Cluster Configurations

Debugging and Troubleshooting

Large File Upload Failure

Miscellaneous

Use case - Binding Web App Firewall policy to a VPN virtual server

Signatures Alert Articles

Signature update version 128

Signature update version 127

Signature update version 126

Signature update version 125

Signature update version 124

Signature update version 123

Signature update version 122

Signature update version 121

Signature update version 120

Signature update version 119

Signature update version 118

Signature update version 117

Signature update version 116

Signature update version 115

Signature update version 114

Signature update version 113

Signature update version 112

Signature update version 111

Signature update version 110

Signature update version 109

Signature update version 108

Signature update version 107

Signature update version 106

Signature update version 105

Bot Management

Bot detection

Configure bot profile setting

Configure bot signature setting

Bot signature auto update

Bot troubleshooting

Bot signature alert articles

Bot signature update version 5

Bot signature update version 6

Bot signature update version 7

Bot signature update version 8

Bot signature update version 9

Bot signature update version 10

Bot signature update version 11

Bot signature update version 12

Bot signature update version 13

Bot signature update version 14

Bot signature update version 15

Bot signature update version 16

Cache Redirection

Cache redirection policies

Built-in cache redirection policies

Configure a cache redirection policy

Cache redirection configurations

Configure transparent redirection

Configure forward proxy redirection

Configure reverse proxy redirection

Selective cache redirection

Enable content switching

Configure a load balancing virtual server for the cache

Configure policies for content switching

Configure precedence for policy evaluation

Administer a cache redirection virtual server

View cache redirection virtual server statistics

Enable or disable a cache redirection virtual server

Direct policy hits to the cache instead of the origin

Back up a cache redirection virtual server

Manage client connections for a virtual server

Enable external TCP health check for UDP virtual servers

N-tier cache redirection

Configure the upper-tier NetScaler appliances

Configure the lower-tier NetScaler appliances

Translate destination IP address of a request to origin IP address

NetScaler configuration support in a cluster

Cluster overview

Synchronization across cluster nodes

Striped, partially striped, and spotted configurations

Communication in a cluster setup

Traffic distribution in a cluster setup

Cluster nodegroups

Cluster and node states

Routing in a cluster

IP addressing for a cluster

Configuring layer 3 clustering

Setting up a NetScaler cluster

Setting up inter-node communication

Creating a NetScaler cluster

Adding a node to the cluster

Viewing the details of a cluster

Distributing traffic across cluster nodes

Using Equal Cost Multiple Path (ECMP)

Using cluster link aggregation

Using USIP mode in cluster

Managing the NetScaler cluster

Configuring linksets

Nodegroups for spotted and partially-striped configurations

Configuring redundancy for nodegroups

Disabling steering on the cluster backplane

Synchronizing cluster configurations

Synchronizing time across cluster nodes

Synchronizing cluster files

Viewing the statistics of a cluster

Discovering NetScaler appliances

Disabling a cluster node

Removing a cluster node

Removing a node from a cluster deployed using cluster link aggregation

Detecting jumbo probe on a cluster

Route monitoring for dynamic routes in cluster

Monitoring cluster setup using SNMP MIB with SNMP link

Monitoring command propagation failures in a cluster deployment

Graceful shutdown of nodes

Graceful shutdown of services

IPv6 ready logo support for clusters

Managing cluster heartbeat messages

Configure secure heartbeats

Configuring owner node response status

Monitor Static Route (MSR) support for inactive nodes in a spotted cluster configuration

VRRP interface binding in a single node active cluster

Cluster setup and usage scenarios

Creating a two-node cluster

Migrating an HA setup to a cluster setup

Transitioning between a L2 and L3 cluster

Setting up GSLB in a cluster

Using cache redirection in a cluster

Using L2 mode in a cluster setup

Using cluster LA channel with linksets

Backplane on LA channel

Common interfaces for client and server and dedicated interfaces for backplane

Common switch for client, server, and backplane

Common switch for client and server and dedicated switch for backplane

Different switch for every node

Sample cluster configurations

Using VRRP in a cluster setup

Monitoring services in a cluster using path monitoring

Backup and restore of cluster setup

Upgrading or downgrading the NetScaler cluster

Operations supported on individual cluster nodes

Support for heterogeneous cluster

Troubleshooting the NetScaler cluster

Tracing the packets of a NetScaler cluster

Troubleshooting common issues

Configuring Basic Content Switching

Customizing the Basic Content Switching Configuration

Content Switching for Diameter Protocol

Protecting the Content Switching Setup against Failure

Managing a Content Switching Setup

Managing Client Connections

Persistence support for content switching virtual server

Configure database users

Configure a database profile

Configure load balancing for DataStream

Configure content switching for DataStream

Configure monitors for DataStream

Use Case 1: Configure DataStream for a primary/secondary database architecture

Use Case 2: Configure the token method of load balancing for DataStream

Use Case 3: Log MSSQL transactions in transparent mode

Use Case 4: Database specific load balancing

DataStream reference

Domain Name System

Configure DNS resource records

Create SRV records for a service

Create AAAA Records for a domain name

Create address records for a domain name

Create MX records for a mail exchange server

Create NS records for an authoritative server

Create CNAME records for a subdomain

Create NAPTR records for telecommunications domain

Create PTR records for IPv4 and IPv6 addresses

Create SOA records for authoritative information

Create TXT records for holding descriptive text

Create CAA records for a domain name

View DNS statistics

Configure a DNS zone

Configure the NetScaler as an ADNS server

Configure the NetScaler as a DNS proxy server

Configure the NetScaler as an end resolver

Configure the NetScaler as a forwarder

Configure NetScaler as a non-validating security aware stub-resolver

Jumbo frames support for DNS to handle responses of large sizes

Configure DNS logging

Configure DNS suffixes

DNS ANY query

Configure negative caching of DNS records

Caching of EDNS0 client subnet data when the NetScaler appliance is in proxy mode

Domain name system security extensions

Configure DNSSEC

Configure DNSSEC when the NetScaler is authoritative for a zone

Configure DNSSEC for a zone for which the NetScaler is a DNS proxy server

Configure DNSSEC for GSLB domain names

Zone maintenance

Offload DNSSEC operations to the NetScaler

Admin partition support for DNSSEC

Support for wildcard DNS domains

Mitigate DNS DDoS attacks

Use case - configure the automatic DNSSEC key management feature

Use Case - configure the automatic DNSSEC key management on GSLB deployment

Use Case - how to revoke a compromised active key

Firewall Load Balancing

Sandwich Environment

Enterprise Environment

Multiple-Firewall Environment

Global Server Load Balancing

GSLB deployment types

Active-active site deployment

Active-passive site deployment

Parent-child topology deployment using the MEP protocol

GSLB configuration entities

GSLB methods

GSLB algorithms

Static proximity

Dynamic round trip time method

Configure static proximity

Add a location file to create a static proximity database

Add custom entries to a static proximity database

Set location qualifiers

Specify proximity method

Synchronize GSLB static proximity database

Configure site-to-site communication

Configure metrics exchange protocol

Configure GSLB by using a wizard

Configure active-active site

Configure active-passive site

Configure parent-child topology

Configure GSLB entities individually

Configure an authoritative DNS service

Configure a basic GSLB site

Configure a GSLB service

Configure a GSLB service group

Configure a GSLB virtual server

Bind GSLB services to a GSLB virtual server

Bind a domain to a GSLB virtual server

Example of a GSLB setup and configuration

Synchronize the configuration in a GSLB setup

Manual synchronization between sites participating in GSLB

Real-time synchronization between sites participating in GSLB

View GSLB synchronization status and summary

SNMP traps for GSLB configuration synchronization

GSLB dashboard

Monitor GSLB services

How domain name system works with GSLB

Priority order for GSLB services

Upgrade recommendations for GSLB deployment

Use case: Deployment of domain name based autoscale service group

Use case: Deployment of IP address based autoscale service group

How-to articles

Customize your GSLB configuration

Configure persistent connections

Manage client connections

Configure GSLB for proximity

Protect the GSLB setup against failure

Configure GSLB for disaster recovery

Override static proximity behavior by configuring preferred locations

Configure GSLB service selection using content switching

Configure GSLB for DNS queries with NAPTR records

Configure GSLB for wildcard domain

Use the EDNS0 client subnet option for GSLB

Example of a complete parent-child configuration using the metrics exchange protocol

Link Load Balancing

Configuring a Basic LLB Setup

Configuring RNAT with LLB

Configuring a Backup Route

Resilient LLB Deployment Scenario

Monitoring an LLB Setup

How load balancing works

Set up basic load balancing

Load balance virtual server and service states

Support for load balancing profile

Load balancing algorithms

Least connection method

Round robin method

Least response time method

LRTM method

Hashing methods

Least bandwidth method

Least packets method

Custom load method

Static proximity method

Token method

Least request method

Configure a load balancing method that does not include a policy

Persistence and persistent connections

About Persistence

Source IP address persistence

HTTP cookie persistence

SSL session ID persistence

Diameter AVP number persistence

Custom server ID persistence

IP address persistence

SIP Call ID persistence

RTSP session ID persistence

Configure URL passive persistence

Configure persistence based on user-defined rules

Configure persistence types that do not require a rule

Configure backup persistence

Configure persistence groups

Share persistent sessions between virtual servers

Configure RADIUS load balancing with persistence

View persistence sessions

Clear persistence sessions

Override persistence settings for overloaded services

Insert cookie attributes to ADC generated cookies

Customize a load balancing configuration

Customize the hash algorithm for persistence across virtual servers

Configure the redirection mode

Configure per-VLAN wildcarded virtual servers

Assign weights to services

Configure the MySQL and Microsoft SQL server version setting

Multi-IP virtual servers

Limit the number of concurrent requests on a client connection

Configure diameter load balancing

Configure FIX load balancing

MQTT load balancing

Protect a load balancing configuration against failure

Redirect client requests to an alternate URL

Configure a backup load balancing virtual server

Configure spillover

Connection failover

Flush the surge queue

Manage a load balancing setup

Manage server objects

Manage services

Manage a load balancing virtual server

Load balancing visualizer

Manage client traffic
