Traveling Salesman Problem (TSP) Implementation

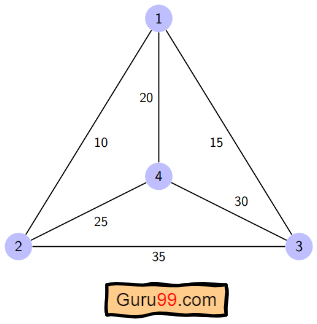

Travelling Salesman Problem (TSP): Given a set of cities and the distance between every pair of cities, find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between the Hamiltonian cycle problem and TSP. The Hamiltonian cycle problem asks whether a tour exists that visits every city exactly once. Here we know that a Hamiltonian tour exists (because the graph is complete), and in fact many such tours exist; the problem is to find a minimum-weight Hamiltonian cycle. For example, consider the graph shown in the figure. A TSP tour in the graph is 1-2-4-3-1, and its cost is 10+25+30+15 = 80. TSP is a famous NP-hard problem; no polynomial-time algorithm for it is known.


In this post, the implementation of a simple solution is discussed.

- Consider city 1 as the starting and ending point. Since the route is cyclic, we can consider any point as a starting point.

- Generate all (n-1)! permutations of cities.

- Calculate the cost of every permutation and keep track of the minimum cost permutation.

- Return the permutation with minimum cost.

Below is the implementation of the above idea
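A minimal Python sketch of the steps above, using `itertools.permutations` in place of the C++ `next_permutation` mentioned in the complexity note. The distance matrix is assumed to match the 4-city example used in this document (1-2 = 10, 1-3 = 15, 1-4 = 20, 2-3 = 35, 2-4 = 25, 3-4 = 30); `tsp_brute_force` and `DIST` are illustrative names, not the original listing.

```python
from itertools import permutations


def tsp_brute_force(dist, start=0):
    """Return (min_cost, tour) by checking every ordering of the other cities."""
    n = len(dist)
    others = [c for c in range(n) if c != start]
    best_cost, best_tour = float("inf"), None
    for perm in permutations(others):  # (n-1)! orderings
        tour = (start, *perm, start)
        # Sum the edge weights along consecutive pairs of the tour.
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


# Assumed distances for the 4-city example (cities 1..4 stored as indices 0..3).
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

cost, tour = tsp_brute_force(DIST)
print(cost, [c + 1 for c in tour])  # prints: 80 [1, 2, 4, 3, 1]
```

The reverse tour 1-3-4-2-1 has the same cost; the sketch keeps the first minimum it encounters.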

Time complexity: O(n!), where n is the number of vertices in the graph, because the algorithm uses the next_permutation function to generate every permutation of the vertex set and evaluates each one.

Auxiliary space: O(n), as a vector is used to store all the vertices.

Travelling Salesman Problem (Greedy Approach)

Travelling Salesperson Algorithm

The travelling salesman problem is a graph problem in which the salesman must visit every city in a list (represented by nodes in a graph) exactly once, and the distances between all these cities (represented by weighted edges in the graph) are known. The solution sought is the shortest possible route in which the salesman visits all the cities and returns to the origin city.

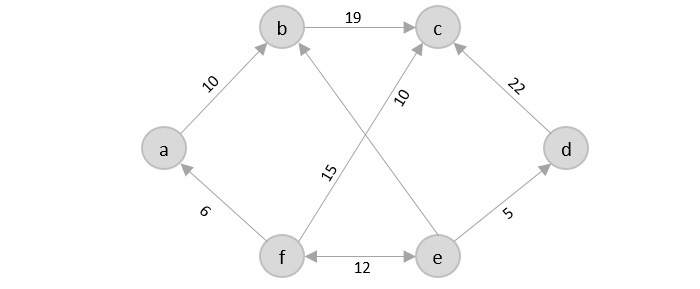

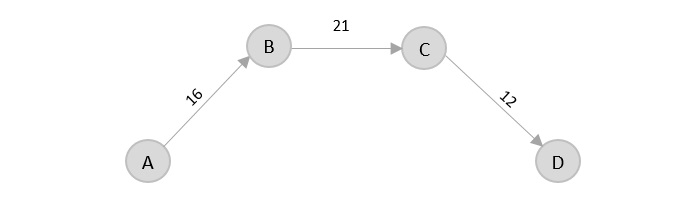

If you look at the graph below, considering that the salesman starts from the vertex ‘a’, they need to travel through all the remaining vertices b, c, d, e, f and get back to ‘a’ while making sure that the cost taken is minimum.

There are various approaches to find the solution to the travelling salesman problem: naive approach, greedy approach, dynamic programming approach, etc. In this tutorial we will be learning about solving travelling salesman problem using greedy approach.

A greedy approach makes the locally best choice at each step in the hope of reaching a globally optimal solution; for the travelling salesman problem this is a heuristic and does not guarantee the shortest tour. The input to the algorithm is the graph G {V, E}, where V is the set of vertices and E is the set of edges. The output is a short route through G that starts at one vertex and returns to the same vertex.

The algorithm takes a graph G {V, E} as input and builds another graph (say G’) as output, which records the path the salesman takes from one node to the next.

The algorithm begins by sorting all the edges in the input graph G from the least distance to the largest distance.

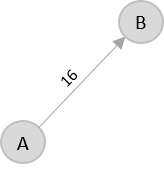

The first edge selected is the edge with the least distance; one of its two vertices (say A and B) is taken as the origin node (say A).

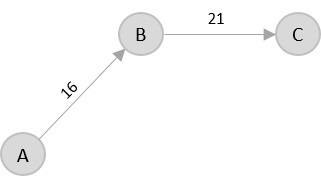

Then, among the edges adjacent to the other endpoint (B), find the least-cost edge that leads to an unvisited node and add it to the output graph.

Continue the process with further nodes, making sure that no cycle is formed in the output graph before every node has been visited, and that the path finally returns to the origin node A.

However, if the origin is mentioned in the given problem, then the solution must always start from that node only. Let us look at some example problems to understand this better.

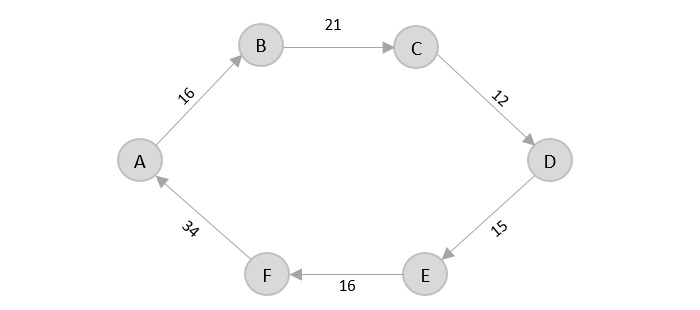

Consider the following graph with six cities and the distances between them −

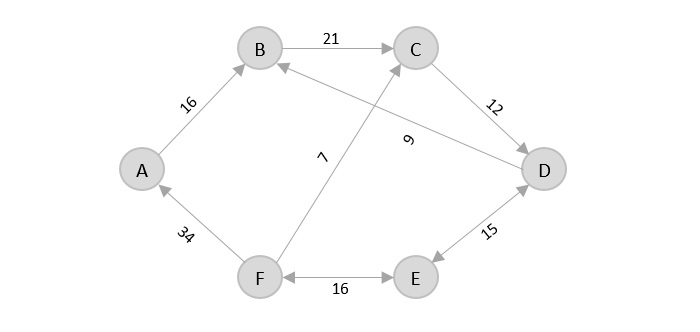

Since the origin is already specified in the given graph, the solution must start from that node. Among the edges leading from A, A → B has the shortest distance.

Then B → C is the shortest, and only, edge leading onward from B, so it is included in the output graph.

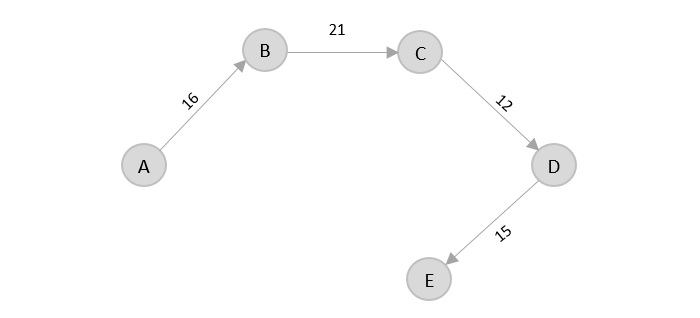

There is only one edge leading onward from C, namely C → D, so it is added to the output graph.

There are two outward edges from D. Even though D → B has a lower distance than D → E, B has already been visited and adding D → B would form a cycle in the output graph. Therefore, D → E is added to the output graph.

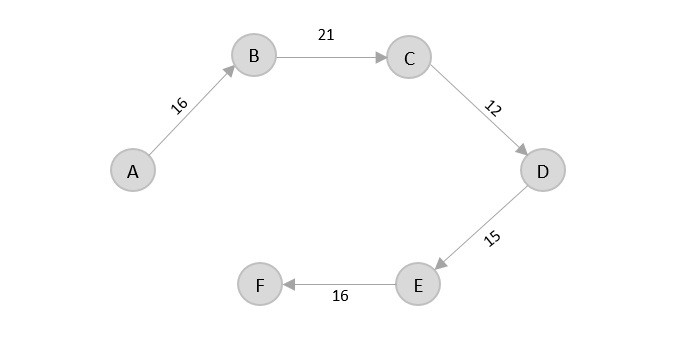

There is only one edge from E, namely E → F. Therefore, it is added to the output graph.

Again, even though F → C has a lower distance than F → A, C has already been visited and adding F → C would form a cycle. Therefore, F → A is added to the output graph.

The path found by the greedy approach, which originates and ends at A, is A → B → C → D → E → F → A

The cost of the path is: 16 + 21 + 12 + 15 + 16 + 34 = 114.

The cost of the path could be lower if it originated from another node, but the problem fixes A as the origin.

The complete implementation of Travelling Salesman Problem using Greedy Approach is given below −
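A minimal sketch of the greedy idea in Python, assuming the nearest-neighbour rule (from the current city, always move to the closest unvisited city). Because the text does not list every edge weight of the six-city graph, the example uses the 4-city distances from the dynamic programming walkthrough elsewhere in this document; `nearest_neighbour_tour` and `DIST` are illustrative names.

```python
def nearest_neighbour_tour(dist, start=0):
    """Greedy tour: repeatedly move to the closest unvisited city, then return home."""
    n = len(dist)
    tour = [start]
    visited = {start}
    total = 0
    current = start
    while len(visited) < n:
        # Pick the closest city that has not been visited yet.
        nxt = min(
            (c for c in range(n) if c not in visited),
            key=lambda c: dist[current][c],
        )
        total += dist[current][nxt]
        tour.append(nxt)
        visited.add(nxt)
        current = nxt
    total += dist[current][start]  # close the tour
    tour.append(start)
    return total, tour


# Assumed distances for the 4-city example (cities 1..4 stored as indices 0..3).
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

print(nearest_neighbour_tour(DIST, start=0))  # prints: (80, [0, 1, 3, 2, 0])
print(nearest_neighbour_tour(DIST, start=3))  # prints: (95, [3, 0, 1, 2, 3])
```

Starting from index 0 the heuristic happens to find the optimal cost of 80, while starting from index 3 it returns 95, which illustrates that the greedy result depends on the origin and is not guaranteed to be optimal.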


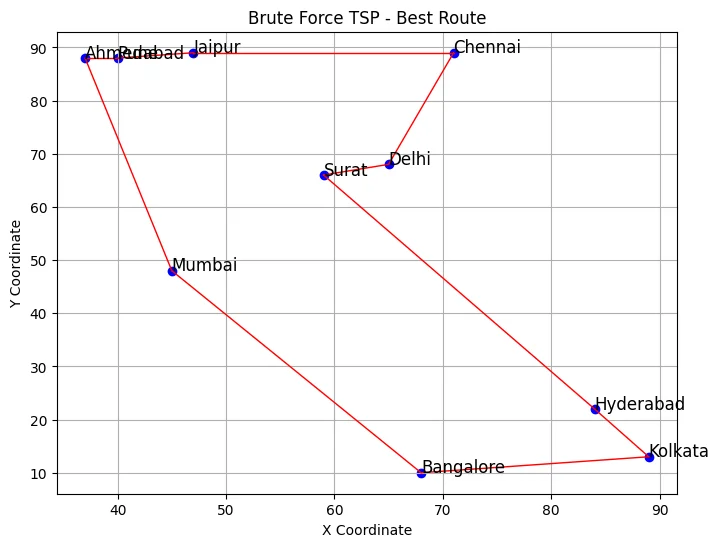

Travelling Salesman Problem: Python, C++ Algorithm

What is the Travelling Salesman Problem (TSP)?

Travelling Salesman Problem (TSP) is a classic combinatorics problem of theoretical computer science. The problem asks for the shortest route in a graph that visits every node exactly once and returns to the origin city.

The problem statement gives a list of cities along with the distance between each pair of cities.

Objective: To start from the origin city, visit other cities only once, and return to the original city again. Our target is to find the shortest possible path to complete the round-trip route.

Example of TSP

Here a graph is given where 1, 2, 3, and 4 represent the cities, and the weight associated with every edge represents the distance between those cities.

The goal is to find the shortest possible path for the tour that starts from the origin city, traverses the graph while only visiting the other cities or nodes once, and returns to the origin city.

For the above graph, the optimal route is to follow the minimum cost path: 1-2-4-3-1. This shortest route costs 10+25+30+15 = 80.

Different Solutions to Travelling Salesman Problem

Travelling Salesman Problem (TSP) is an NP-hard problem, and no polynomial-time algorithm for it is known. The running time of the known exact algorithms grows exponentially with the number of cities.

There are multiple ways to solve the travelling salesman problem (TSP). Some popular solutions are:

The brute force approach is the naive method for solving the travelling salesman problem. In this approach, we first calculate all possible paths and then compare them. With a fixed starting city, a graph of n cities has (n − 1)! possible orderings of the remaining cities, so the running time is O(n!). Solving the travelling salesman problem this way is computationally very expensive.

The branch-and-bound method: The problem is broken down into sub-problems, and bounds on the cost of each sub-problem are used to discard those that cannot contain an optimal tour. Solving the remaining sub-problems yields an optimal solution.

This tutorial will demonstrate a dynamic programming approach, which also breaks the problem into sub-problems but stores the result of each sub-problem so that it is computed only once.

Dynamic programming finds an optimal solution by evaluating all possible routes through their shared sub-problems. It is an exact method, so it costs more to run than the greedy methods, which provide only a near-optimal solution.

The computational complexity of this approach is O(N^2 * 2^N) which is discussed later in this article.

The nearest neighbor method is a heuristic-based greedy approach where we choose the nearest neighbor node. This approach is computationally less expensive than the dynamic approach. But it does not provide the guarantee of an optimal solution. This method is used for near-optimal solutions.

Algorithm for Traveling Salesman Problem

We will use the dynamic programming approach to solve the Travelling Salesman Problem (TSP).

Before starting the algorithm, let’s get acquainted with some terminology:

- A graph G=(V, E), which is a set of vertices and edges.

- V is the set of vertices.

- E is the set of edges.

- Vertices are connected through edges.

- Dist(i,j) denotes the non-negative distance between two vertices, i and j.

Let S be a subset of the cities {1, 2, 3, …, n}, where 1, 2, 3, …, n are the cities, and let i and j be two cities. Then cost(i, S, j) is defined as the length of the shortest path that starts at i, visits every node in S exactly once, and ends at j.

For example, cost (1, {2, 3, 4}, 1) denotes the length of the shortest path where:

- Starting city is 1

- Cities 2, 3, and 4 are visited only once

- The ending point is 1

The dynamic programming algorithm would be:

- Set cost(i, Φ, i) = 0, which means we start and end at i without visiting any other city, so the cost is 0.

- When |S| > 1, we initialize cost(i, S, 1) = ∞ where i != 1, because initially we do not know the exact cost of reaching city 1 from city i through the other cities.

- Now, we need to start at 1 and complete the tour. We select the next city k according to the recurrence:

cost(i, S, 1) = min over k ∈ S of [ dist(i, k) + cost(k, S − {k}, 1) ], where i ∉ S, with the base case cost(i, Φ, 1) = dist(i, 1)

For the given figure, the adjacency matrix would be the following:

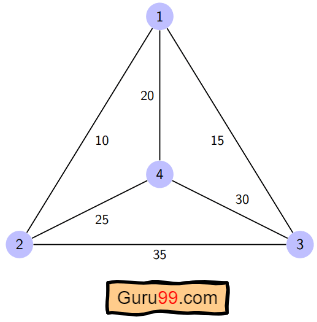

Let’s see how our algorithm works:

Step 1) We are considering our journey starting at city 1, visit other cities once and return to city 1.

Step 2) S is the subset of cities. According to our algorithm, for all |S| > 1, we will set the distance cost(i, S, 1) = ∞. Here cost(i, S, j) means we are starting at city i, visiting the cities of S once, and now we are at city j. We set this path cost as infinity because we do not know the distance yet. So the values will be the following:

cost(2, {3, 4}, 1) = ∞ ; the notation denotes we are starting at city 2, going through cities 3, 4, and reaching 1. And the path cost is infinity. Similarly-

cost(3, {2, 4}, 1) = ∞

cost(4, {2, 3}, 1) = ∞

Step 3) Now, for every subset S, we need to find the following:

cost(i, S, 1) = min over k ∈ S of [ dist(i, k) + cost(k, S − {k}, 1) ], where i ∉ S

This is the minimum cost of a path that starts at i, passes through every city in S exactly once, and ends at city 1. Considering that the journey starts at city 1, the optimal path cost would be cost(1, {other cities}, 1).

Let’s find out how we could achieve that:

Now S = {1, 2, 3, 4}. There are four elements. Hence the number of subsets will be 2^4 or 16. Those subsets are-

1) |S| = 0:

{Φ}

2) |S| = 1:

{{1}, {2}, {3}, {4}}

3) |S| = 2:

{{1, 2}, {1, 3}, {1, 4}, {2, 3}, {2, 4}, {3, 4}}

4) |S| = 3:

{{1, 2, 3}, {1, 2, 4}, {2, 3, 4}, {1, 3, 4}}

5) |S| = 4:

{{1, 2, 3, 4}}

As we are starting at 1, we could discard the subsets containing city 1.

The algorithm calculation:

1) S = Φ:

cost(2, Φ, 1) = dist(2, 1) = 10

cost(3, Φ, 1) = dist(3, 1) = 15

cost(4, Φ, 1) = dist(4, 1) = 20

2) |S| = 1:

cost(2, {3}, 1) = dist(2, 3) + cost(3, Φ, 1) = 35+15 = 50

cost(2, {4}, 1) = dist(2, 4) + cost(4, Φ, 1) = 25+20 = 45

cost(3, {2}, 1) = dist(3, 2) + cost(2, Φ, 1) = 35+10 = 45

cost(3, {4}, 1) = dist(3, 4) + cost(4, Φ, 1) = 30+20 = 50

cost(4, {2}, 1) = dist(4, 2) + cost(2, Φ, 1) = 25+10 = 35

cost(4, {3}, 1) = dist(4, 3) + cost(3, Φ, 1) = 30+15 = 45

3) |S| = 2:

cost(2, {3, 4}, 1) = min [ dist(2, 3) + cost(3, {4}, 1) = 35+50 = 85, dist(2, 4) + cost(4, {3}, 1) = 25+45 = 70 ] = 70

cost(3, {2, 4}, 1) = min [ dist(3, 2) + cost(2, {4}, 1) = 35+45 = 80, dist(3, 4) + cost(4, {2}, 1) = 30+35 = 65 ] = 65

cost(4, {2, 3}, 1) = min [ dist(4, 2) + cost(2, {3}, 1) = 25+50 = 75, dist(4, 3) + cost(3, {2}, 1) = 30+45 = 75 ] = 75

4) |S| = 3:

cost(1, {2, 3, 4}, 1) = min [ dist(1, 2) + cost(2, {3, 4}, 1) = 10+70 = 80, dist(1, 3) + cost(3, {2, 4}, 1) = 15+65 = 80, dist(1, 4) + cost(4, {2, 3}, 1) = 20+75 = 95 ] = 80

So the optimal solution would be 1-2-4-3-1, with a total cost of 80.

Pseudo-code

Implementation in C/C++

Here’s the implementation in C++ :

Implementation in Python
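A minimal Python sketch of the dynamic programming calculation worked through above, written as a memoized recursion (the standard Held-Karp formulation, which may differ in detail from the article's own listing). City 1 is stored as index 0, `best(i, remaining)` plays the role of cost(i, S, 1), and `tsp_dp` and `DIST` are illustrative names.

```python
from functools import lru_cache


def tsp_dp(dist):
    """Return (min_cost, tour) for a tour that starts and ends at index 0."""
    n = len(dist)

    @lru_cache(maxsize=None)
    def best(i, remaining):
        # Cheapest way to go from i through every city in `remaining`, ending at 0.
        if not remaining:
            return dist[i][0], (i, 0)
        options = []
        for k in sorted(remaining):
            sub_cost, sub_path = best(k, remaining - {k})
            options.append((dist[i][k] + sub_cost, (i,) + sub_path))
        return min(options)  # lowest cost; ties broken by the path tuple

    return best(0, frozenset(range(1, n)))


# Distances from the worked example (cities 1..4 stored as indices 0..3).
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

cost, tour = tsp_dp(DIST)
print(cost, [c + 1 for c in tour])  # prints: 80 [1, 2, 4, 3, 1]
```

Using a `frozenset` keeps each subset hashable so that `lru_cache` can store one result per (city, subset) pair; a bitmask would serve the same purpose with less memory.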

Academic Solutions to TSP

Computer scientists have spent years searching for a polynomial-time algorithm for the Travelling Salesman Problem. So far none has been found, and the problem remains NP-hard.

Nevertheless, some of the following solutions published in recent years have reduced the complexity to a certain degree:

- The classical symmetric TSP is solved by the Zero Suffix Method.

- The Biogeography‐based Optimization Algorithm is based on the migration strategy to solve the optimization problems that can be planned as TSP.

- Multi-Objective Evolutionary Algorithm is designed for solving multiple TSP based on NSGA-II.

- The Multi-Agent System solves the TSP of N cities with fixed resources.

Application of Traveling Salesman Problem

Travelling Salesman Problem (TSP) is applied in the real world in both its purest and modified forms. Some of those are:

- Planning, logistics, and manufacturing microchips: Chip insertion problems naturally arise in the microchip industry. Those problems can be planned as traveling salesman problems.

- DNA sequencing: Slight modification of the traveling salesman problem can be used in DNA sequencing. Here, the cities represent the DNA fragments, and the distance represents the similarity measure between two DNA fragments.

- Astronomy: The Travelling Salesman Problem is applied by astronomers to minimize the time spent observing various sources.

- Optimal control problem: Travelling Salesman Problem formulation can be applied in optimal control problems. There might be several other constraints added.

Complexity Analysis of TSP

- Time Complexity: There are 2^N subsets for each of the N nodes, giving N * 2^N sub-problems, and each sub-problem takes O(N) time to solve. So the total time complexity for an optimal solution is O(N^2 * 2^N).

- Space Complexity: The dynamic programming approach uses memory to store C(S, i), where S is a subset of the vertex set and i is a node. There is a total of 2^N subsets for each of the N nodes, so the space complexity is O(N * 2^N).
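To get a feel for these bounds, the short Python sketch below compares the number of tours a brute-force search would examine, (N − 1)!, with the N^2 * 2^N step count of the dynamic programming approach. The function names are illustrative, and the figures are raw counts that ignore constant factors.

```python
from math import factorial


def brute_force_tours(n):
    """Orderings of the other n - 1 cities when the start city is fixed."""
    return factorial(n - 1)


def dp_steps(n):
    """The N^2 * 2^N bound for the dynamic programming approach."""
    return n * n * 2 ** n


for n in (4, 10, 15, 20):
    print(n, brute_force_tours(n), dp_steps(n))
```

For very small inputs the brute-force count is actually the smaller of the two (6 versus 256 for N = 4), but the factorial overtakes the exponential bound quickly as N grows.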


Traveling Salesperson Problem

This section presents an example that shows how to solve the Traveling Salesperson Problem (TSP) for the locations shown on the map below.

The following sections present programs in Python, C++, Java, and C# that solve the TSP using OR-Tools.

Create the data

The code below creates the data for the problem.

The distance matrix is an array whose i, j entry is the distance from location i to location j in miles, where the array indices correspond to the locations in the following order:

The data also includes:

- The number of vehicles in the problem, which is 1 because this is a TSP. (For a vehicle routing problem (VRP), the number of vehicles can be greater than 1.)

- The depot: the start and end location for the route. In this case, the depot is 0, which corresponds to New York.

Other ways to create the distance matrix

In this example, the distance matrix is explicitly defined in the program. It's also possible to use a function to calculate distances between locations: for example, the Euclidean formula for the distance between points in the plane. However, it's still more efficient to pre-compute all the distances between locations and store them in a matrix, rather than compute them at run time. See Example: drilling a circuit board for an example that creates the distance matrix this way.

Another alternative is to use the Google Maps Distance Matrix API to dynamically create a distance (or travel time) matrix for a routing problem.

Create the routing model

The following code in the main section of the programs creates the index manager (manager) and the routing model (routing). The method manager.IndexToNode converts the solver's internal indices (which you can safely ignore) to the numbers for locations. Location numbers correspond to the indices for the distance matrix.

The inputs to RoutingIndexManager are:

- The number of rows of the distance matrix, which is the number of locations (including the depot).

- The number of vehicles in the problem.

- The node corresponding to the depot.

Create the distance callback

To use the routing solver, you need to create a distance (or transit) callback: a function that takes any pair of locations and returns the distance between them. The easiest way to do this is using the distance matrix.

The following function creates the callback and registers it with the solver as transit_callback_index.

The callback accepts two indices, from_index and to_index, and returns the corresponding entry of the distance matrix.

Set the cost of travel

The arc cost evaluator tells the solver how to calculate the cost of travel between any two locations — in other words, the cost of the edge (or arc) joining them in the graph for the problem. The following code sets the arc cost evaluator.

In this example, the arc cost evaluator is the transit_callback_index, which is the solver's internal reference to the distance callback. This means that the cost of travel between any two locations is just the distance between them. However, in general the costs can involve other factors as well.

You can also define multiple arc cost evaluators that depend on which vehicle is traveling between locations, using the method routing.SetArcCostEvaluatorOfVehicle(). For example, if the vehicles have different speeds, you could define the cost of travel between locations to be the distance divided by the vehicle's speed (in other words, the travel time).

Set search parameters

The following code sets the default search parameters and a heuristic method for finding the first solution:

The code sets the first solution strategy to PATH_CHEAPEST_ARC, which creates an initial route for the solver by repeatedly adding edges with the least weight that don't lead to a previously visited node (other than the depot). For other options, see First solution strategy.

Add the solution printer

The function that displays the solution returned by the solver is shown below. The function extracts the route from the solution and prints it to the console.

The function displays the optimal route and its distance, which is given by ObjectiveValue().

Solve and print the solution

Finally, you can call the solver and print the solution:

This returns the solution and displays the optimal route.

Run the programs

When you run the programs, they display the following output.

In this example, there's only one route because it's a TSP. But in more general vehicle routing problems, the solution contains multiple routes.

Save routes to a list or array

As an alternative to printing the solution directly, you can save the route (or routes, for a VRP) to a list or array. This has the advantage of making the routes available in case you want to do something with them later. For example, you could run the program several times with different parameters and save the routes in the returned solutions to a file for comparison.

The following functions save the routes in the solution to any VRP (possibly with multiple vehicles) as a list (Python) or an array (C++).

You can use these functions to get the routes in any of the VRP examples in the Routing section.

The following code displays the routes.

For the current example, this code returns the following route:

As an exercise, modify the code above to format the output the same way as the solution printer for the program.

Complete programs

The complete TSP programs are shown below.

Example: drilling a circuit board

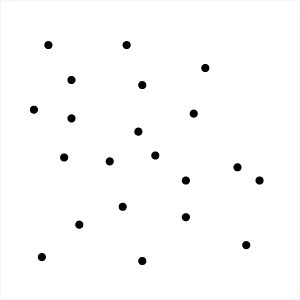

The next example involves drilling holes in a circuit board with an automated drill. The problem is to find the shortest route for the drill to take on the board in order to drill all of the required holes. The example is taken from TSPLIB, a library of TSP problems.

Here's a scatter chart of the locations for the holes:

The following sections present programs that find a good solution to the circuit board problem, using the solver's default search parameters. After that, we'll show how to find a better solution by changing the search strategy.

The data for the problem consist of 280 points in the plane, shown in the scatter chart above. The program creates the data in an array of ordered pairs corresponding to the points in the plane, as shown below.

Compute the distance matrix

The function below computes the Euclidean distance between any two points in the data and stores it in an array. Because the routing solver works over the integers, the function rounds the computed distances to integers. Rounding doesn't affect the solution in this example, but might in other cases. See Scaling the distance matrix for a way to avoid possible rounding issues.
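As a rough illustration of that step, here is a minimal Python sketch that builds a rounded Euclidean distance matrix from a list of points. The function name `euclidean_distance_matrix` and the three sample coordinates are assumptions made for this example; they are not the TSPLIB data or the original listing.

```python
import math


def euclidean_distance_matrix(points):
    """Return an n x n matrix of Euclidean distances rounded to integers."""
    n = len(points)
    matrix = [[0] * n for _ in range(n)]
    for i, (x1, y1) in enumerate(points):
        for j, (x2, y2) in enumerate(points):
            if i != j:
                # The routing solver works over the integers, so round each distance.
                matrix[i][j] = int(round(math.hypot(x1 - x2, y1 - y2)))
    return matrix


# Hypothetical coordinates chosen so the distances are easy to check by hand.
points = [(0, 0), (3, 4), (6, 8)]
matrix = euclidean_distance_matrix(points)
print(matrix)  # prints: [[0, 5, 10], [5, 0, 5], [10, 5, 0]]
```

Pre-computing the matrix once trades O(n^2) memory for constant-time lookups in the distance callback.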

Add the distance callback

The code that creates the distance callback is almost the same as in the previous example. However, in this case the program calls the function that computes the distance matrix before adding the callback.

Solution printer

The following function prints the solution to the console. To keep the output more compact, the function displays just the indices of the locations in the route.

Main function

The main function is essentially the same as the one in the previous example, but also includes a call to the function that creates the distance matrix.

Running the program

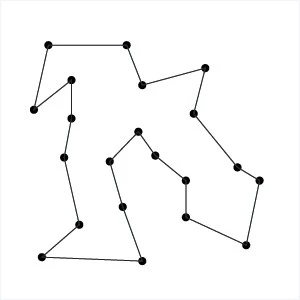

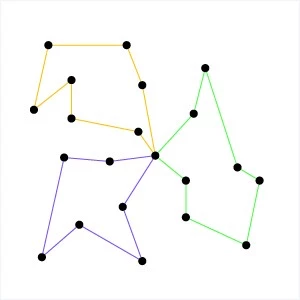

The complete programs are shown in the next section. When you run the program, it displays the following route:

Here's a graph of the corresponding route:

The OR-Tools library finds the above tour very quickly: in less than a second on a typical computer. The total length of the above tour is 2790.

Here are the complete programs for the circuit board example.

Changing the search strategy

The routing solver does not always return the optimal solution to a TSP, because routing problems are computationally intractable. For instance, the solution returned in the previous example is not the optimal route.

To find a better solution, you can use a more advanced search strategy, called guided local search, which enables the solver to escape a local minimum, that is, a solution that is shorter than all nearby routes but is not the global minimum. After moving away from the local minimum, the solver continues the search.

The examples below show how to set a guided local search for the circuit board example.

For other local search strategies, see Local search options.

The examples above also enable logging for the search. While logging isn't required, it can be useful for debugging.

When you run the program after making the changes shown above, you get the following solution, which is shorter than the solution shown in the previous section.

For more search options, see Routing Options.

The best algorithms can now routinely solve TSP instances with tens of thousands of nodes. (The record at the time of writing is the pla85900 instance in TSPLIB, a VLSI application with 85,900 nodes. For certain instances with millions of nodes, solutions have been found guaranteed to be within 1% of an optimal tour.)

Scaling the distance matrix

Since the routing solver works over the integers, if your distance matrix has non-integer entries, you have to round the distances to integers. If some distances are small, rounding can affect the solution.

To avoid any issue with rounding, you can scale the distance matrix: multiply all entries of the matrix by a large number, say 100. This multiplies the length of any route by a factor of 100, but it doesn't change the solution. The advantage is that now when you round the matrix entries, the rounding amount (which is at most 0.5) is very small compared to the distances, so it won't affect the solution significantly.

If you scale the distance matrix, you also need to change the solution printer to divide the scaled route lengths by the scaling factor, so that it displays the unscaled distances of the routes.
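The scaling and unscaling steps can be sketched in plain Python, independent of any solver. The helper names `scale_distance_matrix` and `unscale_length`, the factor of 100, and the sample distances are illustrative assumptions, not part of the routing library.

```python
def scale_distance_matrix(matrix, factor=100):
    """Multiply every entry by `factor`, then round to the nearest integer."""
    return [[int(round(d * factor)) for d in row] for row in matrix]


def unscale_length(scaled_length, factor=100):
    """Convert a route length measured on the scaled matrix back to original units."""
    return scaled_length / factor


# Hypothetical non-integer distances between three locations.
distances = [
    [0.0, 1.4, 2.6],
    [1.4, 0.0, 0.3],
    [2.6, 0.3, 0.0],
]

scaled = scale_distance_matrix(distances)

# Length of the closed route 0 -> 1 -> 2 -> 0, computed on the integer matrix.
route = [0, 1, 2, 0]
scaled_length = sum(scaled[a][b] for a, b in zip(route, route[1:]))

print(scaled_length, unscale_length(scaled_length))
```

Rounding the unscaled matrix directly would turn the 0.3 entry into 0, which is the kind of distortion the scaling step is meant to avoid.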

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2023-01-16 UTC.


Traveling Salesperson Problem


A salesperson needs to visit a set of cities to sell their goods. They know how many cities they need to go to and the distances between each city. In what order should the salesperson visit each city exactly once so that they minimize their travel time and so that they end their journey in their city of origin?

The traveling salesperson problem is one of the oldest and most studied problems in computer science, and it is closely related to the Hamiltonian Circuit Problem. It has important implications in complexity theory and the P versus NP problem because its decision version is NP-Complete (the optimization version is NP-Hard). This means that no polynomial-time algorithm for it is known, and none exists unless \(P = NP\). In practice, the running time of every known exact algorithm grows exponentially, or worse, as the number of vertices increases.

The following image is a simple example of a network of cities connected by edges of a specific distance. The origin city is also marked.

Network of cities

Here is the solution for that network; it has a distance traveled of only 14. Any other route that the salesperson can take has a length of at least 14.

Relationship to Graphs


The traveling salesperson problem can be modeled as a graph. Specifically, it is typically a directed, weighted graph. Each city acts as a vertex and each path between cities is an edge. Each edge has a weight associated with it, which plays the role of the distance. In this model, the goal of the traveling salesperson problem can be defined as finding a cycle that visits every vertex exactly once, returns to the original vertex, and minimizes total weight.

To that end, many graph algorithms can be used on this model. Search algorithms like breadth-first search (BFS), depth-first search (DFS), and Dijkstra's shortest path algorithm can certainly be used; however, they do not take into consideration the fact that every vertex must be visited.

The Traveling Salesperson Problem (TSP) is notoriously hard to solve exactly. The most direct method, brute force, is computationally intensive: it tries every single possible path and sees which one is the shortest. Try this conceptual question to see if you have a grasp of how hard it is to solve.

For a fully connected map with \(n\) cities, how many total paths are possible for the traveling salesperson?

Answer: There are \((n-1)!\) total paths the salesperson can take. The computation needed to solve this problem in this way grows far too quickly to be a reasonable solution. If this map has only 5 cities, there are \(4!\), or 24, paths. However, if the size of this map is increased to 20 cities, there will be \(1.22 \cdot 10^{17}\) paths!

The brute-force approach to TSP would go like this:

- Find all possible paths.

- Find the cost of every path.

- Choose the path with the lowest cost.

A greedy approach, by contrast, would be: at every step in the algorithm, choose the best available next edge. This version runs much faster, but it's not guaranteed to find the best answer, or an answer at all, since it might hit a dead end when the graph is not fully connected.

For NP-Hard problems like TSP (every NP-Complete problem is also NP-Hard), exact solutions can only be computed in a reasonable amount of time for small input sizes (maps with few cities). Otherwise, the best we can do is use a heuristic that steers the search toward good solutions. However, heuristic solutions generally cannot be proven to be optimal; they trade guaranteed optimality for speed.

Small input sizes

As described in a previous section, the brute-force approach to this problem has a complexity of \(O(n!)\). However, there are some approaches that decrease this computation time.

The Held-Karp Algorithm is one of the earliest applications of dynamic programming. Its complexity, \(O(n^2 2^n)\), is much lower than that of the brute-force approach. The key observation is that every subpath along an optimal path is itself an optimal path. So an optimal path can be built up from optimal solutions to many smaller subproblems, each of which is computed only once.
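A compact sketch of this dynamic program is shown below. It assumes the cities are numbered from 0, the tour starts and ends at city 0, and `dist` is a full distance matrix; the function name `held_karp` and the sample matrix are illustrative.

```python
from itertools import combinations


def held_karp(dist):
    """Return the length of a shortest tour that starts and ends at city 0."""
    n = len(dist)
    if n == 1:
        return 0
    # best[(mask, j)] is the length of the shortest path that starts at city 0,
    # visits exactly the cities whose bits are set in mask, and ends at city j.
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev_mask = mask & ~(1 << j)
                # Reuse the stored optimal subpaths that end at each possible
                # predecessor k of j.
                best[(mask, j)] = min(
                    best[(prev_mask, k)] + dist[k][j] for k in subset if k != j
                )
    full = (1 << n) - 2  # bitmask of every city except city 0
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))


# Hypothetical symmetric distances between four cities.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(held_karp(dist))  # 80
```

The table has on the order of \(n 2^n\) entries and each entry takes \(O(n)\) work, which is where the \(O(n^2 2^n)\) bound comes from.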

Heuristics are a way of ranking possible next steps in an algorithm in the hopes of cutting down computation time for the entire algorithm. They are often a tradeoff of some attribute - such as completeness, accuracy, or precision - in favor of speed. Heuristics exist for the traveling salesperson problem as well.

The simplest heuristic for this problem is the greedy heuristic. This heuristic simply says, at each step of the network traversal, choose the best next step. In other words, always choose the closest city that you have not yet visited. This heuristic seems like a good one because it is simple and intuitive, and it is even used in practice sometimes; however, there are heuristics that are proven to be more effective.

Christofides' algorithm is another heuristic. For instances that satisfy the triangle inequality, it produces a tour of at most 1.5 times the optimal weight. The algorithm first finds a minimum spanning tree for the network. Next, it computes a minimum-weight perfect matching on the cities that have odd degree in that tree (meaning an odd number of tree edges meet at them), finds an Eulerian circuit in the combined graph, and converts that circuit into a TSP tour by skipping repeated cities.

Even though it is typically infeasible to solve large TSP instances optimally, some special cases of TSP admit solutions with provable quality guarantees when certain conditions hold.

The metric-TSP is an instance of TSP that satisfies this condition: The distance from city A to city B is less than or equal to the distance from city A to city C plus the distance from city C to city B. Or,

\[distance_{AB} \leq distance_{AC} + distance_{CB}\]

This condition holds for straight-line distances in the real world, but it can't be expected to hold for every TSP instance. With this inequality in place, simple approximation algorithms produce a path that is no more than twice the length of the optimal path. Even better, we can bound the solution to a \(3/2\) approximation by using Christofides' algorithm.

The euclidean-TSP has an even stricter constraint on the TSP input. It requires that all edge weights in the network be euclidean distances between the cities. Euclidean-TSP is still NP-hard, but approximation algorithms that use euclidean minimum spanning trees have reduced the running time needed to find good tours. In practice, though, simpler heuristics are still used.
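One well-known way to get a factor-of-two guarantee under the triangle inequality is to walk a minimum spanning tree in preorder; whether the text has exactly this method in mind is an assumption. The sketch below uses a simple \(O(n^2)\) version of Prim's algorithm, and the function name and sample distances are illustrative.

```python
def mst_preorder_tour(dist):
    """Build a tour by visiting the vertices of a minimum spanning tree in preorder."""
    n = len(dist)
    in_tree = [False] * n
    parent = [None] * n
    key = [float("inf")] * n
    key[0] = 0
    children = {v: [] for v in range(n)}
    for _ in range(n):
        # Prim's algorithm: add the cheapest vertex that is not yet in the tree.
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: key[v])
        in_tree[u] = True
        if parent[u] is not None:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and dist[u][v] < key[v]:
                key[v] = dist[u][v]
                parent[v] = u
    # A preorder walk lists each vertex once; skipping already-seen vertices
    # never lengthens the walk when the triangle inequality holds.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    return tour + [0]


# Distances between the corners of a 3-by-4 rectangle (a metric instance).
dist = [
    [0, 3, 5, 4],
    [3, 0, 4, 5],
    [5, 4, 0, 3],
    [4, 5, 3, 0],
]
tour = mst_preorder_tour(dist)
length = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
print(tour, length)
```

The bound follows because the spanning tree weighs no more than the optimal tour, and the preorder walk costs at most twice the tree.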

The P versus NP problem is one of the leading questions in modern computer science. It asks whether or not every problem whose solution can be verified in polynomial time by a computer can also be solved in polynomial time by a computer. TSP, for example, is not known to be solvable in polynomial time (and is widely believed not to be). However, a proposed answer can be verified in polynomial time when the problem is phrased as a decision problem: Given a graph and an integer \(x\), decide if there is a tour of length \(x\) or less. Given a proposed tour, it is simple to add up its edge weights and check whether the total is less than or equal to \(x\).
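The verification step can be sketched in a few lines. The function below assumes the graph is given as a full distance matrix and that a proposed tour is a list of city indices that starts and ends at the same city; the name `verify_tour` and the sample data are illustrative.

```python
def verify_tour(dist, tour, x):
    """Check, in polynomial time, that `tour` is a valid closed tour of length at most x."""
    n = len(dist)
    # The tour must return to its starting city and contain n + 1 entries.
    if len(tour) != n + 1 or tour[0] != tour[-1]:
        return False
    # Every city must appear exactly once before the return to the start.
    if sorted(tour[:-1]) != list(range(n)):
        return False
    return sum(dist[a][b] for a, b in zip(tour, tour[1:])) <= x


# Hypothetical symmetric distances between four cities.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(verify_tour(dist, [0, 1, 3, 2, 0], 80))  # True: the tour has length 80
print(verify_tour(dist, [0, 1, 3, 2, 0], 79))  # False: the tour is too long
```

Checking a proposed tour is cheap; the hard part is finding one that passes the check.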

The traveling salesperson problem, like other problems that are NP-Complete, is very important to this debate. That is because if a polynomial-time solution can be found to this problem, then \(P = NP\). As it stands, most scientists believe that \(P \ne NP\).

The traveling salesperson problem has many applications. The obvious ones are in the transportation space. Planning delivery routes or flight patterns, for example, would benefit immensely from breakthroughs in this problem or in the P versus NP problem.

However, this same logic can be applied to many facets of planning as well. In robotics, for instance, planning the order in which to drill holes in a circuit board is a complex task due to the sheer number of holes that must be drilled.

The best and most important application of TSP, however, comes from the fact that it is an NP-Complete problem. That means that its practical applications amount to the applications of any problem that is NP-Complete. So, if there are significant breakthroughs for TSP, those exact same breakthroughs can be applied to any problem in the NP-Complete class.


Traveling Salesman Problem

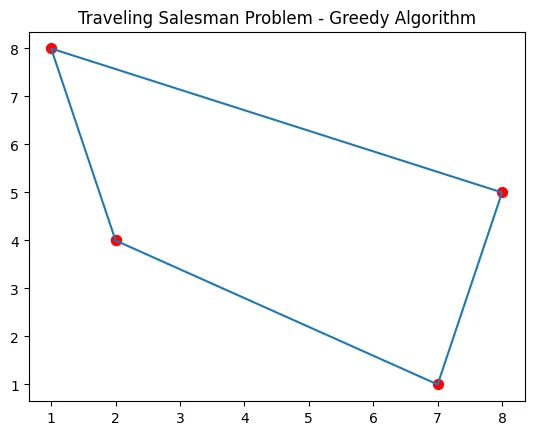

Greedy Algorithms for Graphs: Traveling Salesman Problem

Introduction

The Traveling Salesman Problem (TSP) is a classic optimization problem in graph theory. Given a set of cities and the distances between them, the task is to find the shortest possible route that allows a salesman to visit each city exactly once and return to the starting city. The TSP is known to be an NP-hard problem, meaning there is no known efficient algorithm to solve it optimally for large inputs. However, greedy algorithms offer a promising approach to finding approximate solutions.

Greedy Algorithms

A greedy algorithm is one that makes locally optimal choices at each step in hopes of finding a globally optimal solution. In the context of the TSP, a greedy algorithm constructs a tour by iteratively adding the nearest unvisited city to the current tour. Let's dive into the details and code examples to better understand how this works.

Algorithm Steps

The greedy algorithm for the TSP can be summarized in the following steps:

- Start with an empty tour and select an arbitrary starting city.

- Find the nearest unvisited city from the current city.

- Add this city to the tour.

- Mark the city as visited.

- Set the current city to the newly added city.

- Repeat the previous four steps until every city has been visited.

- Add the starting city back to the tour.

- The resulting tour is the approximate solution to the TSP.

Implementing the Algorithm

To illustrate the implementation of the greedy algorithm for the TSP, let's consider a small example using the following distance matrix:

| | City A | City B | City C |
|-------|--------|--------|--------|
| City A| 0 | 2 | 9 |
| City B| 2 | 0 | 4 |
| City C| 9 | 4 | 0 |

Example Run

Let's run our algorithm on the given distance matrix to find an approximate solution to the TSP:
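A minimal Python sketch of the steps above, run on this matrix, might look as follows. The function name `nearest_neighbor_tour` and the choice of City A as the starting city are illustrative assumptions.

```python
def nearest_neighbor_tour(dist, start=0):
    """Build a tour by repeatedly moving to the nearest unvisited city."""
    n = len(dist)
    visited = [False] * n
    visited[start] = True
    tour = [start]
    current = start
    for _ in range(n - 1):
        # Scan all unvisited cities and pick the one closest to the current city.
        nearest = min(
            (city for city in range(n) if not visited[city]),
            key=lambda city: dist[current][city],
        )
        visited[nearest] = True
        tour.append(nearest)
        current = nearest
    tour.append(start)  # return to the starting city
    return tour


cities = ["City A", "City B", "City C"]
dist = [
    [0, 2, 9],
    [2, 0, 4],
    [9, 4, 0],
]

tour = nearest_neighbor_tour(dist)
length = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
print(" -> ".join(cities[i] for i in tour), "| length:", length)
# City A -> City B -> City C -> City A | length: 15
```

With only three cities every tour uses the same three edges, so the greedy result here is also optimal; on larger instances that is not guaranteed.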

The time complexity of the greedy algorithm for the TSP is O(n^2), where n is the number of cities. This is because, in each step, we iterate through all unvisited cities to find the nearest one. Despite being an approximate solution, the greedy algorithm often provides reasonable results and runs quickly for practical instances.

In this tutorial, we explored greedy algorithms for graphs, focusing specifically on the Traveling Salesman Problem. We learned the steps involved in the greedy algorithm for the TSP and implemented it in Python. By making locally optimal choices, the greedy algorithm offers an approach to finding approximate solutions for the TSP. While not always optimal, the greedy algorithm provides a good trade-off between efficiency and solution quality.


The Traveling Salesman Problem

The Traveling Salesman Problem states that you are a salesperson and you must visit a number of cities or towns.

Rules : Visit every city only once, then return back to the city you started in.

Goal : Find the shortest possible route.

No algorithm is known that finds the shortest route efficiently for every input. The Held-Karp algorithm (which is quite advanced and still time consuming at \(O(2^n n^2)\), and will not be described here) is faster than checking every route, but the simplest way to be sure of finding the shortest route is to check all possible routes.

This means that the time complexity of this approach is \(O(n!)\): 720 routes need to be checked for 6 cities, 40,320 routes must be checked for 8 cities, and if you have 10 cities to visit, more than 3.6 million routes must be checked!

Note: "!", or "factorial", is a mathematical operation used in combinatorics to find out how many possible ways something can be done. If there are 4 cities, each city is connected to every other city, and we must visit every city exactly once, there are \(4!= 4 \cdot 3 \cdot 2 \cdot 1 = 24\) different routes we can take to visit those cities.

The Traveling Salesman Problem (TSP) is interesting to study because it is very practical, but so time consuming to solve that, when checking all routes, it becomes nearly impossible to find the shortest route even in a graph with just 20-30 vertices.

If we had an efficient algorithm for solving The Traveling Salesman Problem, the consequences would be very big in many sectors, for example chip design, vehicle routing, telecommunications, and urban planning.

Checking All Routes to Solve The Traveling Salesman Problem

To find the optimal solution to The Traveling Salesman Problem, we will check all possible routes, and every time we find a shorter route, we will store it, so that in the end we will have the shortest route.

Good: Finds the overall shortest route.

Bad: Requires an awful lot of calculation, especially for a large number of cities, which means it is very time consuming.

How it works:

- Check the length of every possible route, one route at a time.

- Is the current route shorter than the shortest route found so far? If so, store the new shortest route.

- After checking all routes, the stored route is the shortest one.

Such a way of finding the solution to a problem is called brute force .

Brute force is not really an algorithm, it just means finding the solution by checking all possibilities, usually because of a lack of a better way to do it.


Finding the shortest route in The Traveling Salesman Problem by checking all routes (brute force).


The reason why the brute force approach of finding the shortest route (as shown above) is so time consuming is that we are checking all routes, and the number of possible routes increases really fast when the number of cities increases.

Finding the optimal solution to the Traveling Salesman Problem by checking all possible routes (brute force):
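A minimal Python sketch of this brute force search, assuming the distances are given as a matrix and the route starts and ends at city 0, could look like this. The function name `brute_force_tsp` and the sample distances are illustrative.

```python
from itertools import permutations


def brute_force_tsp(dist):
    """Check every route that starts and ends at city 0 and keep the shortest one."""
    n = len(dist)
    best_route, best_length = None, float("inf")
    for middle in permutations(range(1, n)):
        route = (0,) + middle + (0,)
        length = sum(dist[a][b] for a, b in zip(route, route[1:]))
        # Store the route only if it is shorter than the best one found so far.
        if length < best_length:
            best_route, best_length = route, length
    return list(best_route), best_length


# Hypothetical symmetric distances between four cities.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
route, length = brute_force_tsp(dist)
print(route, length)  # [0, 1, 3, 2, 0] 80
```

The loop body runs once per ordering of the remaining cities, so the work grows factorially with the number of cities.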

Using A Greedy Algorithm to Solve The Traveling Salesman Problem

Since checking every possible route to solve the Traveling Salesman Problem (like we did above) is so incredibly time consuming, we can instead find a short route by just going to the nearest unvisited city in each step, which is much faster.

Good: Finds a solution to the Traveling Salesman Problem much faster than by checking all routes.

Bad: Does not find the overall shortest route, it just finds a route that is much shorter than an average random route.

- Visit every city.

- The next city to visit is always the nearest of the unvisited cities from the city you are currently in.

- After visiting all cities, go back to the city you started in.

This way of finding an approximation to the shortest route in the Traveling Salesman Problem, by just going to the nearest unvisited city in each step, is called a greedy algorithm .

Finding an approximation to the shortest route in The Traveling Salesman Problem by always going to the nearest unvisited neighbor (greedy algorithm).

As you can see by running this simulation a few times, the routes that are found are not completely unreasonable. Except for a few times when the lines cross perhaps, especially towards the end of the algorithm, the resulting route is a lot shorter than we would get by choosing the next city at random.

Finding a near-optimal solution to the Traveling Salesman Problem using the nearest-neighbor algorithm (greedy):

Other Algorithms That Find Near-Optimal Solutions to The Traveling Salesman Problem

In addition to using a greedy algorithm to solve the Traveling Salesman Problem, there are also other algorithms that can find approximations to the shortest route.

These algorithms are popular because they are much more efficient than actually checking all possible solutions, but as with the greedy algorithm above, they do not necessarily find the overall shortest route.

Algorithms used to find a near-optimal solution to the Traveling Salesman Problem include:

- 2-opt Heuristic: An algorithm that improves the solution step-by-step, in each step removing two edges and reconnecting the two paths in a different way to reduce the total path length.

- Genetic Algorithm: This is a type of algorithm inspired by the process of natural selection. It uses techniques such as selection, mutation, and crossover to evolve solutions to problems, including the TSP.

- Simulated Annealing: This method is inspired by the process of annealing in metallurgy. It involves heating and then slowly cooling a material to decrease defects. In the context of TSP, it's used to find a near-optimal solution by exploring the solution space in a way that allows for occasional moves to worse solutions, which helps to avoid getting stuck in local minima.

- Ant Colony Optimization: This algorithm is inspired by the behavior of ants in finding paths from the colony to food sources. It's a more complex probabilistic technique for solving computational problems which can be mapped to finding good paths through graphs.
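As an illustration of the first item in this list, a basic 2-opt improvement loop can be sketched as follows. It assumes a closed tour given as a list of city indices whose first and last entries are the starting city; the function names and sample distances are illustrative, and the sketch recomputes full tour lengths for clarity rather than speed.

```python
def tour_length(dist, tour):
    """Total length of a closed tour given as a list of city indices."""
    return sum(dist[a][b] for a, b in zip(tour, tour[1:]))


def two_opt(dist, tour):
    """Repeatedly reverse a segment of the tour whenever that shortens it."""
    best = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 2):
            for j in range(i + 1, len(best) - 1):
                # Removing the two edges around best[i..j] and reconnecting the
                # pieces is the same as reversing that segment.
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(dist, candidate) < tour_length(dist, best):
                    best = candidate
                    improved = True
    return best


# Distances between the corners of a 3-by-4 rectangle.
dist = [
    [0, 3, 5, 4],
    [3, 0, 4, 5],
    [5, 4, 0, 3],
    [4, 5, 3, 0],
]
crossing_tour = [0, 2, 1, 3, 0]  # uses both diagonals, length 18
improved_tour = two_opt(dist, crossing_tour)
print(improved_tour, tour_length(dist, improved_tour))  # [0, 1, 2, 3, 0] 14
```

A 2-opt pass is often applied to the output of a greedy construction, since it removes crossing edges like the two diagonals in this example.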

Time Complexity for Solving The Traveling Salesman Problem

To get a near-optimal solution fast, we can use a greedy algorithm that just goes to the nearest unvisited city in each step, like in the second simulation on this page.

Solving The Traveling Salesman Problem in a greedy way like that, means that at each step, the distances from the current city to all other unvisited cities are compared, and that gives us a time complexity of \(O(n^2) \).

But finding the shortest route of them all requires a lot more operations, and the time complexity for that is \(O(n!)\), like mentioned earlier, which means that for 4 cities, there are 4! possible routes, which is the same as \(4 \cdot 3 \cdot 2 \cdot 1 = 24\). And for just 12 cities for example, there are \(12! = 12 \cdot 11 \cdot 10 \cdot \; ... \; \cdot 2 \cdot 1 = 479,001,600\) possible routes!

See the time complexity for the greedy algorithm \(O(n^2)\), versus the time complexity for finding the shortest route by comparing all routes \(O(n!)\), in the image below.

But there are two things we can do to reduce the number of routes we need to check.

In the Traveling Salesman Problem, the route starts and ends in the same place, which makes a cycle. This means that the length of the shortest route will be the same no matter which city we start in. That is why we have chosen a fixed city to start in for the simulation above, and that reduces the number of possible routes from \(n!\) to \((n-1)!\).

Also, because these routes go in cycles, a route has the same distance if we go in one direction or the other, so we actually just need to check the distance of half of the routes, because the other half will just be the same routes in the opposite direction, so the number of routes we need to check is actually \( \frac{(n-1)!}{2}\).

But even if we can reduce the number of routes we need to check to \( \frac{(n-1)!}{2}\), the time complexity is still \( O(n!)\), because for very big \(n\), reducing \(n\) by one and dividing by 2 does not make a significant change in how the time complexity grows when \(n\) is increased.
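The three counts can be compared directly with a short Python sketch; the function name `routes_to_check` is illustrative.

```python
from math import factorial


def routes_to_check(n):
    """Return (n!, (n-1)!, (n-1)!/2) for n cities, with n >= 3."""
    all_orders = factorial(n)        # every ordering of the n cities
    fixed_start = factorial(n - 1)   # orderings once the start city is fixed
    one_direction = fixed_start // 2 # a route and its reverse counted once
    return all_orders, fixed_start, one_direction


for n in (4, 6, 8, 10, 12):
    print(n, routes_to_check(n))
# For n = 12 this prints: 12 (479001600, 39916800, 19958400)
```

Even the smallest of the three counts is close to 20 million for 12 cities, which is why the reductions do not change the overall growth.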

To better understand how time complexity works, go to this page .

Actual Traveling Salesman Problems Are More Complex

The edge weight in a graph in this context of The Traveling Salesman Problem tells us how hard it is to go from one point to another, and it is the total edge weight of a route we want to minimize.

So far on this page, the edge weight has been the distance in a straight line between two points. And that makes it much easier to explain the Traveling Salesman Problem, and to display it.

But in the real world there are many other things that affect the edge weight:

- Obstacles: When moving from one place to another, we normally try to avoid obstacles like trees, rivers, houses for example. This means it is longer and takes more time to go from A to B, and the edge weight value needs to be increased to factor that in, because it is not a straight line anymore.

- Transportation Networks: We usually follow a road or use public transport systems when traveling, and that also affects how hard it is to go (or send a package) from one place to another.

- Traffic Conditions: Travel congestion also affects the travel time, so that should also be reflected in the edge weight value.

- Legal and Political Boundaries: Crossing a border, for example, might make one route harder to choose than another, which means the shortest straight line route might be slower, or more costly.

- Economic Factors: Using fuel, using the time of employees, maintaining vehicles, all these things cost money and should also be factored into the edge weights.

As you can see, just using the straight line distances as the edge weights, might be too simple compared to the real problem. And solving the Traveling Salesman Problem for such a simplified problem model would probably give us a solution that is not optimal in a practical sense.

It is not easy to visualize a Traveling Salesman Problem when the edge length is not just the straight line distance between two points anymore, but the computer handles that very well.


12.9 Traveling Salesperson Problem

Learning Objectives

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths . In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semi-conductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.187. Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of \(10 + 2 + 11 + 13 = 36\).

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A: \(10 + 2 + 11 + 13 = 36\)

B: \(10 + 2 + 11 + 8 = 31\)

C: \(10 + 2 + 15 + 1 = 28\)

D: \(10 + 2 + 15 + 6 = 33\)

E: \(10 + 7 + 3 + 20 = 40\)

F: \(10 + 7 + 3 + 14 = 34\)

G: \(10 + 7 + 4 + 11 = 32\)

H: \(10 + 7 + 4 + 5 = 26\)

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
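The two processes can be compared in a short Python sketch. The nested tuples below are a transcription of the tree implied by the branch sums A through H, since the figure itself is not reproduced here; the function names are illustrative.

```python
# Each node is (value, [children]); leaves have an empty list of children.
tree = (10, [
    (2, [
        (11, [(13, []), (8, [])]),
        (15, [(1, []), (6, [])]),
    ]),
    (7, [
        (3, [(20, []), (14, [])]),
        (4, [(11, []), (5, [])]),
    ]),
])


def brute_force_best(node):
    """Explore every branch and return the largest total sum."""
    value, children = node
    if not children:
        return value
    return value + max(brute_force_best(child) for child in children)


def greedy_sum(node):
    """At each phase, follow the child with the largest value."""
    value, children = node
    total = value
    while children:
        value, children = max(children, key=lambda child: child[0])
        total += value
    return total


print(brute_force_best(tree))  # 40, branch E
print(greedy_sum(tree))        # 32, branch G
```

The greedy walk commits to 4 over 3 at the second phase and so never sees the 20 that makes branch E the best.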

Example 12.42

Distinguishing between Brute Force and Greedy Algorithms

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
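The cashier's procedure can be written as a short Python sketch that works in cents. The function name `greedy_change` is illustrative, and the second call uses a hypothetical coin system without nickels to show a case where the greedy choice is not the best one.

```python
def greedy_change(amount_cents, coins=(25, 10, 5, 1)):
    """Repeatedly take the highest-value coin that does not exceed the remainder."""
    used = []
    for coin in coins:  # coins are listed from highest to lowest value
        while amount_cents >= coin:
            used.append(coin)
            amount_cents -= coin
    return used


print(greedy_change(37))  # [25, 10, 1, 1], the cashier's four coins

# Hypothetical coin system with no nickels: greedy uses six coins for 30 cents,
# although three dimes would do.
print(greedy_change(30, coins=(25, 10, 1)))  # [25, 1, 1, 1, 1, 1]
```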

Your Turn 12.42

The Traveling Salesperson Problem

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles , the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.192 representing the four U.S. Air Force bases: Vandenberg, Edwards, Los Angeles, and Beal and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.193 .

The possible distances are:

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with \(n\) vertices,

- The number of distinct Hamilton cycles is \((n-1)!\).

- There are at most \(\frac{(n-1)!}{2}\) different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating Possible Weights of Hamilton Cycles

Suppose you have a complete weighted graph with vertices N, M, O , and P .

- Use the formula \((n-1)!\) to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula \(\frac{(n-1)!}{2}\) to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know?
  Cycle 1: N → M → O → P → N
  Cycle 2: N → M → P → O → N
  Cycle 3: N → O → M → P → N
  Cycle 4: N → O → P → M → N
  Cycle 5: N → P → M → O → N
  Cycle 6: N → P → O → M → N

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, \(n = 4\). This means there are \((n-1)! = (4-1)! = 3 \cdot 2 \cdot 1 = 6\) distinct Hamilton cycles beginning at any given vertex.

- Since \(n = 4\), there are \(\frac{(n-1)!}{2} = \frac{(4-1)!}{2} = \frac{6}{2} = 3\) possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.
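The listing and pairing in this example can be reproduced with a short Python sketch. It assumes the cycles all start at N and that the middle vertices are arranged in the same systematic order as the list above; the function name `hamilton_cycles` is illustrative.

```python
from itertools import permutations


def hamilton_cycles(start, others):
    """List every Hamilton cycle of a complete graph that begins and ends at `start`."""
    return [(start,) + middle + (start,) for middle in permutations(others)]


cycles = hamilton_cycles("N", ["M", "O", "P"])
for number, cycle in enumerate(cycles, start=1):
    print(f"Cycle {number}:", " → ".join(cycle))

# Pair each cycle with the cycle that follows the same route in reverse.
pairs = sorted(
    {tuple(sorted((i + 1, cycles.index(cycle[::-1]) + 1))) for i, cycle in enumerate(cycles)}
)
print(pairs)  # [(1, 6), (2, 4), (3, 5)]
```

Six cycles fall into three reverse pairs, matching the counts \((n-1)! = 6\) and \(\frac{(n-1)!}{2} = 3\) for \(n = 4\).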

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as we did in Example 12.42.


The Brute Force Method

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method. The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.
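The four steps can be sketched in code as follows. This is a minimal illustration, not part of the text's method: the function names and the four-city weights are made up, and the graph is assumed to be complete with at least three vertices.

```python
from itertools import permutations
from math import factorial

def cycle_weight(cycle, weights):
    """Add up the edge weights along a closed route; keys are frozenset({u, v})."""
    return sum(weights[frozenset(edge)] for edge in zip(cycle, cycle[1:]))

def brute_force_tsp(vertices, weights):
    start, *others = vertices
    # Step 1: count the distinct Hamilton cycles and the possible weights.
    cycle_count = factorial(len(vertices) - 1)
    weight_count = cycle_count // 2
    # Step 2: list every Hamilton cycle that starts and ends at `start`.
    cycles = [(start, *middle, start) for middle in permutations(others)]
    # Step 3: find the weight of each cycle.
    weighted = [(cycle_weight(cycle, weights), cycle) for cycle in cycles]
    # Step 4: identify a Hamilton cycle of lowest weight.
    best_weight, best_cycle = min(weighted)
    return best_cycle, best_weight, cycle_count, weight_count

# Hypothetical weights for a complete graph on A, B, C, D (not taken from any figure).
example_weights = {
    frozenset("AB"): 10, frozenset("AC"): 15, frozenset("AD"): 20,
    frozenset("BC"): 35, frozenset("BD"): 25, frozenset("CD"): 30,
}
print(brute_force_tsp(["A", "B", "C", "D"], example_weights))
# (('A', 'B', 'D', 'C', 'A'), 80, 6, 3)
```

The sketch computes all six weights rather than skipping the reverses; halving the work as described in Step 1 is an optimization a hand calculation benefits from more than a program this small does.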

Example 12.44

Applying the Brute Force Method

On the next assignment, the air force officer must leave from Travis Air Force Base, visit Beal, Edwards, and Vandenberg Air Force Bases each exactly once, and return to Travis Air Force Base. There is no need to visit Los Angeles Air Force Base. Use Figure 12.194 to find the shortest route.

Step 1: Since there are 4 vertices, there will be $(4-1)! = 3! = 6$ cycles, but half of them will be the reverse of the others; so, there will be $\frac{(4-1)!}{2} = \frac{6}{2} = 3$ possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.195.

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V. The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE, and VEB. These correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each cycle. You can reduce your work by observing the cycles that are reverses of each other.

1: 84 + 410 + 207 + 396 = 1097

2: 84 + 396 + 207 + 370 = 1057

3: 370 + 410 + 396 + 396 = 1572

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second cycle, T → B → V → E → T, and its reverse, T → E → V → B → T, have the least weight. The solution is that the officer should travel from Travis Air Force Base to Beal Air Force Base, to Vandenberg Air Force Base, to Edwards Air Force Base, and return to Travis Air Force Base, or follow the same route in reverse.


Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.194. There would be $(5-1)! = 4! = 4 \times 3 \times 2 \times 1 = 24$ different arrangements of vertices and $\frac{(5-1)!}{2} = \frac{4!}{2} = \frac{24}{2} = 12$ distances to compare using the brute force method. If you consider 10 Air Force bases, there would be $(10-1)! = 9! = 9 \cdot 8 \cdot 7 \cdot 6 \cdot 5 \cdot 4 \cdot 3 \cdot 2 \cdot 1 = 362{,}880$ different arrangements and $\frac{(10-1)!}{2} = \frac{9!}{2} = \frac{9 \cdot 8 \cdot 7 \cdot 6 \cdot 5 \cdot 4 \cdot 3 \cdot 2 \cdot 1}{2} = 181{,}440$ distances to consider. There must be another way!
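To see how quickly these counts grow, the two formulas can be evaluated directly. The helper name `hamilton_cycle_counts` is only an illustrative choice.

```python
from math import factorial

def hamilton_cycle_counts(n):
    """Return ((n-1)!, (n-1)!/2) for a complete graph with n vertices (n >= 3)."""
    cycles = factorial(n - 1)
    return cycles, cycles // 2

for n in (4, 5, 10):
    cycles, weights = hamilton_cycle_counts(n)
    print(f"n = {n:>2}: {cycles:>7,} cycles, {weights:>7,} weights to compare")
# n =  4:       6 cycles,       3 weights to compare
# n =  5:      24 cycles,      12 weights to compare
# n = 10: 362,880 cycles, 181,440 weights to compare
```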

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method, which always visits the closest or least costly place first. This method finds a Hamilton cycle of relatively low weight in a complete graph in which, at each phase, the next vertex is chosen by comparing the edges between the current vertex and the remaining vertices to find the lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.196.

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex, A, as V₁ for "visited 1st." Then compare the weights of the edges between A and the vertices adjacent to A: $250, $210, $300, $200, and $100, as shown in Figure 12.197. The lowest of these is $100, which is the edge between A and F.

Mark F as V₂ for "visited 2nd." Then compare the weights of the edges between F and the remaining vertices adjacent to F: $170, $330, $150, and $350, as shown in Figure 12.198. The lowest of these is $150, which is the edge between F and D.

Mark D as V₃ for "visited 3rd." Next, compare the weights of the edges between D and the remaining vertices adjacent to D: $120, $310, and $270, as shown in Figure 12.199. The lowest of these is $120, which is the edge between D and B.

So, mark B as V₄ for "visited 4th." Finally, compare the weights of the edges between B and the remaining vertices adjacent to B: $160 and $220, as shown in Figure 12.200. The lower amount is $160, which is the edge between B and E.

Now you can mark E as V₅ and mark the only remaining vertex, which is C, as V₆. This is shown in Figure 12.201. Make a note of the weight of the edge from E to C, which is $180, and from C back to A, which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A. The weight of the cycle is $100 + $150 + $120 + $160 + $180 + $210 = $920. This may or may not be the route with the lowest cost, but there is a good chance it is close, since most of its edges are among the lowest weights on the graph, and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label it V₁ for "visited 1st." Identify the edge of lowest weight between V₁ and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in the previous step as Vₙ, where the subscript n indicates the order in which the vertex is visited. Identify the edge of lowest weight between Vₙ and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is V₁ → V₂ → ⋯ → Vₙ → V₁.
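The procedure above can be sketched as a short function. This is an illustration under stated assumptions: the names `complete_graph` and `nearest_neighbor_cycle` are invented, the four-city weights are hypothetical rather than read from any figure, and ties are broken alphabetically, a choice the steps above do not specify.

```python
def complete_graph(edges):
    """Build a symmetric lookup table weights[u][v] from {(u, v): weight}."""
    graph = {}
    for (u, v), weight in edges.items():
        graph.setdefault(u, {})[v] = weight
        graph.setdefault(v, {})[u] = weight
    return graph

def nearest_neighbor_cycle(start, weights):
    """Greedy tour: from the current vertex, always move to the closest unvisited vertex."""
    route = [start]                      # route[0] plays the role of V1
    unvisited = set(weights) - {start}
    current, total = start, 0
    while unvisited:
        # Step 2: the edge of lowest weight to a vertex not yet visited
        # (sorted() makes ties resolve alphabetically).
        nearest = min(sorted(unvisited), key=lambda v: weights[current][v])
        total += weights[current][nearest]
        route.append(nearest)
        unvisited.remove(nearest)
        current = nearest
    # Step 3: no vertices remain, so close the cycle by returning to the start.
    total += weights[current][start]
    route.append(start)
    return route, total

# Hypothetical weights for a complete graph on A, B, C, D.
weights = complete_graph({
    ("A", "B"): 10, ("A", "C"): 15, ("A", "D"): 20,
    ("B", "C"): 35, ("B", "D"): 25, ("C", "D"): 30,
})
print(nearest_neighbor_cycle("A", weights))  # (['A', 'B', 'D', 'C', 'A'], 80)
```

Each pass through the loop corresponds to one labeling step, so the loop runs once per remaining vertex instead of once per possible cycle.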

Example 12.45

Using the Nearest Neighbor Method

Suppose that the candidate for governor wants to hold rallies around the state but time before the election is very limited. They would like to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.202. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as V₁. The edge of lowest weight between A and the remaining vertices is 85 min between A and D.

Step 2: Label vertex D as V₂. The edge of lowest weight between D and the vertices that remain to be visited, B, C, E, and F, is 70 min between D and F.

Repeat Step 2: Label vertex F as V₃. The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E, is 75 min between F and C.

Repeat Step 2: Label vertex C as V₄. The edge of lowest weight between C and the vertices that remain to be visited, B and E, is 100 min between C and B.

Repeat Step 2: Label vertex B as V₅. The only vertex that remains to be visited is E. The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A. So, a route of relatively low travel time is A to D to F to C to B to E and back to A. The total travel time of this route is 85 min + 70 min + 75 min + 100 min + 95 min + 90 min = 515 min, or 8 hrs 35 min.
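The total in Step 3 can be double-checked with a few lines. The leg times are the six values from the example, in route order; the function name `route_total` is just an illustrative choice.

```python
def route_total(leg_minutes):
    """Return (total minutes, whole hours, leftover minutes) for a list of leg times."""
    total = sum(leg_minutes)
    hours, minutes = divmod(total, 60)
    return total, hours, minutes

# Legs in route order: A-D, D-F, F-C, C-B, B-E, E-A.
legs = [85, 70, 75, 100, 95, 90]
print(route_total(legs))  # (515, 8, 35)
```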


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Authors: Donna Kirk

- Publisher/website: OpenStax

- Book title: Contemporary Mathematics

- Publication date: Mar 22, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Section URL: https://openstax.org/books/contemporary-mathematics/pages/12-9-traveling-salesperson-problem

© Dec 21, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


Travelling Salesman Problem (TSP)


Travelling Salesman Problem (TSP) – Given a set of cities and the distance between every pair of cities as an adjacency matrix, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. The ultimate goal is to minimize the total distance travelled, forming a closed tour or circuit.

The TSP is NP-hard, meaning that no algorithm is known that solves every instance in polynomial time. As the number of cities increases, the number of possible tours grows factorially, making an exhaustive search infeasible for all but small instances. This complexity is one of the reasons why the TSP remains a popular topic of research.

Input –


Output –

Here, the TSP Tour is 0-2-1-3-0 and the cost of the tour is 48.

Minimum weight Hamiltonian Cycle: EACBDE = 32

Wondering how the Hamiltonian Cycle Problem and the Traveling Salesman Problem differ? The Hamiltonian Cycle problem is to find out if there exists a tour that visits each city exactly once. Here, we know that the Hamiltonian Tour exists (due to the graph being complete), and there are indeed many such tours. The problem is to find a minimum weight Hamiltonian Cycle.

There are various approaches to solving the travelling salesman problem: the simple (naïve) approach, the dynamic programming approach, and the greedy approach. Let’s explore each approach in detail.

1. Simple Approach

- Consider city 1 as the starting and ending point. Since the route is cyclic, any city can serve as the starting point.

- Generate all (n-1)! permutations of the remaining cities.

- Find the cost of each permutation and keep track of the minimum cost permutation.

- Return the permutation with minimum cost.

- Time complexity: O(N!), where N is the number of cities.

- Space complexity: O(1) auxiliary space, not counting the array that holds the current permutation.
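The simple approach can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the function name `tsp_naive` and the 4×4 distance matrix are assumptions made for the example, and cities are numbered from 0 so that they index the adjacency matrix directly.

```python
from itertools import permutations

def tsp_naive(dist, start=0):
    """Try every ordering of the other cities and keep the cheapest closed tour."""
    n = len(dist)
    others = [city for city in range(n) if city != start]
    best_cost, best_tour = float("inf"), None
    for order in permutations(others):      # (n-1)! orderings
        tour = (start, *order, start)
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:                # keep track of the minimum so far
            best_cost, best_tour = cost, tour
    return best_tour, best_cost

# Hypothetical symmetric adjacency matrix for 4 cities.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(tsp_naive(dist))  # ((0, 1, 3, 2, 0), 80)
```

Each of the (n-1)! orderings costs O(n) to evaluate, which is where the O(N!) bound above comes from.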