Data Science Tutorials

Data Science and Machine Learning in Python and R

Solving the Travelling Salesman Problem (TSP) with Python

In this tutorial, we will see how to solve the TSP in Python. The following sections are covered.

- Approach to Solving the TSP Problem

- The Routing Model and Index Manager

- The Distance Callback

- Travel Cost and Search Parameters

- Function to Print the Solution

- Putting it all Together

1. Approach to Solving the TSP Problem

To be able to solve a TSP problem in Python, we need the following items:

- List of cities

- List of distances between the cities

- Number of vehicles

- Starting location of the vehicles

List of Cities

In this problem we have a list of 13 cities. They are listed below, each preceded by its index.

0. New York – 1. Los Angeles – 2. Chicago – 3. Minneapolis – 4. Denver – 5. Dallas – 6. Seattle – 7. Boston – 8. San Francisco – 9. St. Louis – 10. Houston – 11. Phoenix – 12. Salt Lake City

List of Distances

It is normally better to represent the list of distances as a square matrix with intersecting cells holding distance between the row and column headers. If we use indexes i and j for this matrix, then data[i][j] is the distance between city i and city j.

Number of Vehicles

In this case, since it is a TSP, the number of vehicles is 1.

Starting Point

In this example, the starting point or ‘depot’ is location 0, that is New York.
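The four inputs above can be collected in a single dictionary. The sketch below is a minimal illustration: the `create_data_model` name, the dictionary keys, and the 4-city distances are assumptions chosen for brevity, not the tutorial's 13-city data.

```python
def create_data_model():
    """Bundle the problem inputs in one dictionary (illustrative values)."""
    data = {}
    # Hypothetical symmetric distances between 4 locations; entry [i][j] is
    # the distance from location i to location j.
    data["distance_matrix"] = [
        [0, 10, 15, 20],
        [10, 0, 35, 25],
        [15, 35, 0, 30],
        [20, 25, 30, 0],
    ]
    data["num_vehicles"] = 1  # a TSP has exactly one vehicle
    data["depot"] = 0         # index of the start and end location
    return data


data = create_data_model()
print(data["distance_matrix"][1][3])  # 25
```

Keeping everything in one structure means the later steps only need to receive a single argument.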

2. The Routing Model and Index Manager

To solve the TSP in Python, you need to create the RoutingIndexManager and the RoutingModel. The RoutingIndexManager manages conversion between the internal solver variables and NodeIndexes. In this way, we can simply use the NodeIndex in our programs.

The RoutingIndexManager takes three parameters:

- number of locations, including the depot (the number of rows of the distance matrix)

- number of vehicles

- the starting node (depot node)

The code below creates the routing model and index manager

3. The Distance Callback

To be able to use the solver, we need to create a distance callback. What is a distance callback? It is a function that takes two locations and returns the distance between them. Since these distances are already available in the distance matrix, the callback can simply look them up. The two arguments passed to the distance callback are routing variable indexes, so we need to convert them to distance matrix NodeIndexes using the IndexToNode() method of the RoutingIndexManager.

The code below does that

4. The Travel Cost and Search Parameters

The cost of travel is the cost to travel the distance between two nodes. In the case of the solver, you need to set an arc cost evaluator function that does this calculation. This function takes as parameter the transit_callback_index returned by the distance_callback. In our case, the travel cost is simply the distance between the locations.

However, in a real world scenario, there may be other factors to be considered.

We also need to set the search parameters as well as the heuristic for finding the first solution. In this case, the strategy is PATH_CHEAPEST_ARC. This heuristic repeatedly adds the edge with the least weight that doesn't lead to a previously visited node.

5. Function to Print the Solution

The function below prints out the solution to the screen by extracting the route from the solution

6. Putting it all together

Finally, call the SolveWithParameters() method of the routing model and then call the print_solution function.
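As a hedged end-to-end sketch of sections 2 to 6, the function below wires the steps together with the OR-Tools Python API. It assumes the `ortools` package is installed; the `solve_tsp` name and its return shape are illustrative choices, not the tutorial's original program.

```python
def solve_tsp(distance_matrix, depot=0):
    """Return (route, total_distance) for a single-vehicle tour, or None."""
    # Imported here so the rest of a script still loads without OR-Tools.
    from ortools.constraint_solver import pywrapcp, routing_enums_pb2

    # Section 2: index manager (locations, vehicles, depot) and routing model.
    manager = pywrapcp.RoutingIndexManager(len(distance_matrix), 1, depot)
    routing = pywrapcp.RoutingModel(manager)

    # Section 3: callback that maps solver indices back to matrix nodes.
    def distance_callback(from_index, to_index):
        from_node = manager.IndexToNode(from_index)
        to_node = manager.IndexToNode(to_index)
        return distance_matrix[from_node][to_node]

    transit_callback_index = routing.RegisterTransitCallback(distance_callback)

    # Section 4: arc cost and first-solution heuristic.
    routing.SetArcCostEvaluatorOfAllVehicles(transit_callback_index)
    search_parameters = pywrapcp.DefaultRoutingSearchParameters()
    search_parameters.first_solution_strategy = (
        routing_enums_pb2.FirstSolutionStrategy.PATH_CHEAPEST_ARC
    )

    # Section 6: solve.
    solution = routing.SolveWithParameters(search_parameters)
    if not solution:
        return None

    # Section 5: walk the route from the start node to the end node.
    route = []
    index = routing.Start(0)
    while not routing.IsEnd(index):
        route.append(manager.IndexToNode(index))
        index = solution.Value(routing.NextVar(index))
    route.append(manager.IndexToNode(index))
    return route, solution.ObjectiveValue()
```

Calling `solve_tsp(matrix)` with a square matrix of integer distances returns the visiting order, starting and ending at the depot, together with the total distance.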

The final output of the program is given below:

kindsonthegenius

- Google OR-Tools

Traveling Salesperson Problem

This section presents an example that shows how to solve the Traveling Salesperson Problem (TSP) for the locations shown on the map below.

The following sections present programs in Python, C++, Java, and C# that solve the TSP using OR-Tools

Create the data

The code below creates the data for the problem.

The distance matrix is an array whose i, j entry is the distance from location i to location j in miles, where the array indices correspond to the locations in the following order:

The data also includes:

- The number of vehicles in the problem, which is 1 because this is a TSP. (For a vehicle routing problem (VRP), the number of vehicles can be greater than 1.)

- The depot: the start and end location for the route. In this case, the depot is 0, which corresponds to New York.

Other ways to create the distance matrix

In this example, the distance matrix is explicitly defined in the program. It's also possible to use a function to calculate distances between locations: for example, the Euclidean formula for the distance between points in the plane. However, it's still more efficient to pre-compute all the distances between locations and store them in a matrix, rather than compute them at run time. See Example: drilling a circuit board for an example that creates the distance matrix this way.

Another alternative is to use the Google Maps Distance Matrix API to dynamically create a distance (or travel time) matrix for a routing problem.

Create the routing model

The following code in the main section of the programs creates the index manager (manager) and the routing model (routing). The method manager.IndexToNode converts the solver's internal indices (which you can safely ignore) to the numbers for locations. Location numbers correspond to the indices for the distance matrix.

The inputs to RoutingIndexManager are:

- The number of rows of the distance matrix, which is the number of locations (including the depot).

- The number of vehicles in the problem.

- The node corresponding to the depot.

Create the distance callback

To use the routing solver, you need to create a distance (or transit) callback: a function that takes any pair of locations and returns the distance between them. The easiest way to do this is using the distance matrix.

The following function creates the callback and registers it with the solver as transit_callback_index.

The callback accepts two indices, from_index and to_index, and returns the corresponding entry of the distance matrix.

Set the cost of travel

The arc cost evaluator tells the solver how to calculate the cost of travel between any two locations — in other words, the cost of the edge (or arc) joining them in the graph for the problem. The following code sets the arc cost evaluator.

In this example, the arc cost evaluator is the transit_callback_index, which is the solver's internal reference to the distance callback. This means that the cost of travel between any two locations is just the distance between them. However, in general the costs can involve other factors as well.

You can also define multiple arc cost evaluators that depend on which vehicle is traveling between locations, using the method routing.SetArcCostEvaluatorOfVehicle(). For example, if the vehicles have different speeds, you could define the cost of travel between locations to be the distance divided by the vehicle's speed (in other words, the travel time).

Set search parameters

The following code sets the default search parameters and a heuristic method for finding the first solution:

The code sets the first solution strategy to PATH_CHEAPEST_ARC, which creates an initial route for the solver by repeatedly adding edges with the least weight that don't lead to a previously visited node (other than the depot). For other options, see First solution strategy.
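To make the idea behind this heuristic concrete, here is a plain-Python sketch that extends a route from the depot by always taking the cheapest arc to an unvisited node. It only illustrates the description above; it is not OR-Tools' internal implementation, and the `cheapest_arc_route` name and the sample matrix are assumptions.

```python
def cheapest_arc_route(distance_matrix, depot=0):
    """Build a tour by repeatedly following the cheapest arc to a new node."""
    n = len(distance_matrix)
    route = [depot]
    visited = {depot}
    while len(route) < n:
        current = route[-1]
        # Cheapest arc out of the current node that reaches an unvisited node.
        nxt = min(
            (j for j in range(n) if j not in visited),
            key=lambda j: distance_matrix[current][j],
        )
        route.append(nxt)
        visited.add(nxt)
    route.append(depot)  # close the tour at the depot
    return route


# Hypothetical 4-location matrix used only for this illustration.
matrix = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(cheapest_arc_route(matrix))  # [0, 1, 3, 2, 0]
```

A route built this way is only a starting point; the solver then tries to improve it with local search.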

Add the solution printer

The function that displays the solution returned by the solver is shown below. The function extracts the route from the solution and prints it to the console.

The function displays the optimal route and its distance, which is given by ObjectiveValue() .

Solve and print the solution

Finally, you can call the solver and print the solution:

This returns the solution and displays the optimal route.

Run the programs

When you run the programs, they display the following output.

In this example, there's only one route because it's a TSP. But in more general vehicle routing problems, the solution contains multiple routes.

Save routes to a list or array

As an alternative to printing the solution directly, you can save the route (or routes, for a VRP) to a list or array. This has the advantage of making the routes available in case you want to do something with them later. For example, you could run the program several times with different parameters and save the routes in the returned solutions to a file for comparison.

The following functions save the routes in the solution to any VRP (possibly with multiple vehicles) as a list (Python) or an array (C++).

You can use these functions to get the routes in any of the VRP examples in the Routing section.

The following code displays the routes.

For the current example, this code returns the following route:

As an exercise, modify the code above to format the output the same way as the solution printer for the program.

Complete programs

The complete TSP programs are shown below.

Example: drilling a circuit board

The next example involves drilling holes in a circuit board with an automated drill. The problem is to find the shortest route for the drill to take on the board in order to drill all of the required holes. The example is taken from TSPLIB, a library of TSP problems.

Here's a scatter chart of the locations for the holes:

The following sections present programs that find a good solution to the circuit board problem, using the solver's default search parameters. After that, we'll show how to find a better solution by changing the search strategy.

The data for the problem consist of 280 points in the plane, shown in the scatter chart above. The program creates the data in an array of ordered pairs corresponding to the points in the plane, as shown below.

Compute the distance matrix

The function below computes the Euclidean distance between any two points in the data and stores it in an array. Because the routing solver works over the integers, the function rounds the computed distances to integers. Rounding doesn't affect the solution in this example, but might in other cases. See Scaling the distance matrix for a way to avoid possible rounding issues.
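As a minimal sketch of this step, the function below builds a rounded Euclidean distance matrix from a list of (x, y) points. The function name and the three sample points are assumptions for illustration; they are not taken from the 280-point data set.

```python
import math


def compute_euclidean_distance_matrix(points):
    """Return a matrix of Euclidean distances rounded to integers."""
    matrix = []
    for (x1, y1) in points:
        row = []
        for (x2, y2) in points:
            # math.hypot gives sqrt(dx**2 + dy**2); the solver needs integers.
            row.append(round(math.hypot(x1 - x2, y1 - y2)))
        matrix.append(row)
    return matrix


# Three illustrative points.
points = [(0, 0), (3, 4), (6, 8)]
print(compute_euclidean_distance_matrix(points))
# [[0, 5, 10], [5, 0, 5], [10, 5, 0]]
```

When coordinates are small, multiplying them by a constant before rounding keeps more precision in the integer distances.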

Add the distance callback

The code that creates the distance callback is almost the same as in the previous example. However, in this case the program calls the function that computes the distance matrix before adding the callback.

Solution printer

The following function prints the solution to the console. To keep the output more compact, the function displays just the indices of the locations in the route.

Main function

The main function is essentially the same as the one in the previous example, but also includes a call to the function that creates the distance matrix.

Running the program

The complete programs are shown in the next section. When you run the program, it displays the following route:

Here's a graph of the corresponding route:

The OR-Tools library finds the above tour very quickly: in less than a second on a typical computer. The total length of the above tour is 2790.

Here are the complete programs for the circuit board example.

Changing the search strategy

The routing solver does not always return the optimal solution to a TSP, because routing problems are computationally intractable. For instance, the solution returned in the previous example is not the optimal route.

To find a better solution, you can use a more advanced search strategy, called guided local search, which enables the solver to escape a local minimum: a solution that is shorter than all nearby routes, but which is not the global minimum. After moving away from the local minimum, the solver continues the search.

The examples below show how to set a guided local search for the circuit board example.

For other local search strategies, see Local search options.

The examples above also enable logging for the search. While logging isn't required, it can be useful for debugging.

When you run the program after making the changes shown above, you get the following solution, which is shorter than the solution shown in the previous section.

For more search options, see Routing Options.

The best algorithms can now routinely solve TSP instances with tens of thousands of nodes. (The record at the time of writing is the pla85900 instance in TSPLIB, a VLSI application with 85,900 nodes. For certain instances with millions of nodes, solutions have been found guaranteed to be within 1% of an optimal tour.)

Python and the Travelling Salesman Problem

Understanding the Travelling Salesman Problem

The Travelling Salesman Problem is a classic algorithmic problem in computer science and operations research. It focuses on optimization. In this context, a salesman is given a list of cities and must determine the shortest route to visit each city once and return to his original location.

Python, with its robust set of libraries and packages, is an excellent language for solving the TSP. This article walks through the process of creating a Python program to solve the TSP.

Step 1: Importing the Required Libraries

For this Python program, we will need to import the following libraries:

Step 2: Creating a List of Cities

Next, we need to create a list of cities. In this example, we generate a list of 50 random cities using numpy:

Step 3: Calculating the Distance Between Cities

Step 4: Solving the Problem

Once we have our distances, we can solve the problem using a technique called Simulated Annealing:
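The following is a minimal sketch of simulated annealing for the TSP, not the article's original program. It uses only the standard library rather than numpy, and the function names, cooling schedule, and parameter values are assumptions picked for illustration.

```python
import math
import random


def tour_length(tour, cities):
    """Total length of the closed tour through the given city coordinates."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def simulated_annealing(cities, temp=100.0, cooling=0.995, steps=10000, seed=0):
    """Approximate a short tour; returns (best_tour, best_length)."""
    rng = random.Random(seed)
    current = list(range(len(cities)))
    rng.shuffle(current)
    current_len = tour_length(current, cities)
    best, best_len = current[:], current_len
    for _ in range(steps):
        # Propose a neighbour by reversing a random segment of the tour.
        i, j = sorted(rng.sample(range(len(cities)), 2))
        candidate = current[:i] + current[i:j + 1][::-1] + current[j + 1:]
        candidate_len = tour_length(candidate, cities)
        delta = candidate_len - current_len
        # Always accept improvements; accept worse tours with probability
        # exp(-delta / temp), which shrinks as the temperature cools.
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            current, current_len = candidate, candidate_len
            if current_len < best_len:
                best, best_len = current[:], current_len
        temp = max(temp * cooling, 1e-9)
    return best, best_len


rng = random.Random(42)
cities = [(rng.random() * 100, rng.random() * 100) for _ in range(50)]
tour, length = simulated_annealing(cities)
print(round(length, 1))
```

The result is an approximation: different seeds, temperatures, or cooling rates can give different tours, so it is worth running a few configurations and keeping the best.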

Python provides a flexible and powerful platform for solving complex problems like the TSP. The ability to leverage Python's robust libraries and concise syntax makes it an ideal choice for tackling such tasks.

Simulated Annealing

Simulated Annealing is a probabilistic technique used for finding an approximate solution to an optimization problem. In the context of the TSP, Simulated Annealing can be applied to find the shortest possible route that a salesman can take to visit each city once and return to his original location.

Travelling Salesman Problem

The Travelling Salesman Problem (TSP) is a well-known algorithmic problem in the field of computational mathematics and computer science. It involves a hypothetical scenario where a salesman must travel between a number of cities, starting and ending his journey at the same city, with the objective of finding the shortest possible route that allows him to visit every city only once. The TSP is a classic example of an NP-hard optimization problem. For more details, you can visit the Wikipedia page .

Travelling Salesman Problem using Dynamic Programming

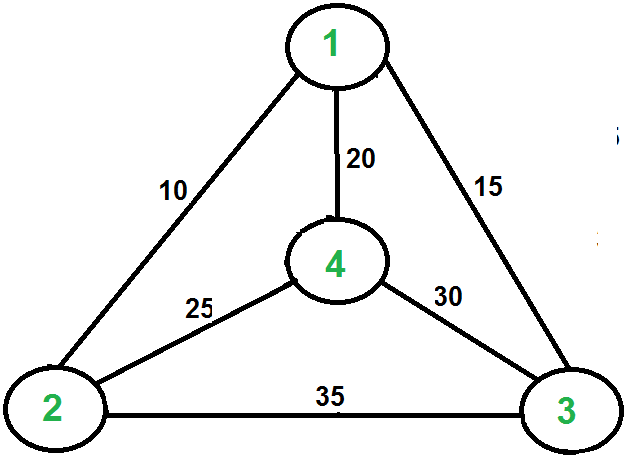

Travelling Salesman Problem (TSP):

Given a set of cities and the distance between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between Hamiltonian Cycle and TSP. The Hamiltonian cycle problem is to find if there exists a tour that visits every city exactly once. Here we know that Hamiltonian Tour exists (because the graph is complete) and in fact, many such tours exist, the problem is to find a minimum weight Hamiltonian Cycle.

For example, consider the graph shown in the figure on the right side. A TSP tour in the graph is 1-2-4-3-1. The cost of the tour is 10+25+30+15, which is 80. The problem is a famous NP-hard problem. There is no known polynomial-time solution for this problem. The following are different solutions for the traveling salesman problem.

Naive Solution:

1) Consider city 1 as the starting and ending point.

2) Generate all (n-1)! permutations of cities.

3) Calculate the cost of every permutation and keep track of the minimum cost permutation.

4) Return the permutation with minimum cost.

Time Complexity: Θ(n!)
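The four steps above can be sketched as follows. This is an illustrative implementation, not the article's original code: cities 1..4 are stored as indices 0..3, and the two edge weights that the text does not quote (1-4 and 2-3) are assumed values.

```python
from itertools import permutations


def tsp_brute_force(dist, start=0):
    """Try every ordering of the other cities; return (min_cost, best_tour)."""
    n = len(dist)
    others = [c for c in range(n) if c != start]
    best_cost, best_tour = float("inf"), None
    for perm in permutations(others):  # (n-1)! orderings
        tour = (start, *perm, start)
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


# Edges 1-2, 1-3, 2-4 and 3-4 follow the tour quoted in the text;
# edges 1-4 (20) and 2-3 (35) are assumptions.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(tsp_brute_force(dist))  # (80, (0, 1, 3, 2, 0))
```

The returned tour 0-1-3-2-0 corresponds to 1-2-4-3-1 in the text's 1-based numbering.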

Dynamic Programming:

Let the given set of vertices be {1, 2, 3, 4, …, n}. Let us consider 1 as the starting and ending point of the output. For every other vertex i (other than 1), we find the minimum cost path with 1 as the starting point, i as the ending point, and all vertices appearing exactly once. Let the cost of this path be cost(i); the cost of the corresponding cycle is then cost(i) + dist(i, 1), where dist(i, 1) is the distance from i to 1. Finally, we return the minimum of all [cost(i) + dist(i, 1)] values. This looks simple so far.

Now the question is how to get cost(i)? To calculate the cost(i) using Dynamic Programming, we need to have some recursive relation in terms of sub-problems.

Let us define C(S, i) to be the cost of the minimum cost path visiting each vertex in set S exactly once, starting at 1 and ending at i. We start with all subsets S of size 2 and calculate C(S, i) for each of them, then we calculate C(S, i) for all subsets S of size 3, and so on. Note that 1 must be present in every subset.

Below is the dynamic programming solution for the problem using top down recursive+memoized approach:-

To maintain the subsets, we can use bitmasks to represent the remaining nodes in our subset. Since bit operations are fast and there are only a few nodes in the graph, bitmasks are a good fit.

For example: –

10100 represents node 2 and node 4 are left in set to be processed

010010 represents node 1 and 4 are left in subset.

NOTE:- ignore the 0th bit since our graph is 1-based
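A hedged sketch of the top-down memoized approach is shown below. Unlike the 1-based convention described above, it uses 0-based city indices, and the bitmask marks the cities already visited rather than the ones remaining; the two views are equivalent. The function name and the two unquoted edge weights (20 and 35) are assumptions.

```python
from functools import lru_cache


def tsp_dp(dist):
    """Held-Karp style top-down DP; returns the minimum tour cost."""
    n = len(dist)
    full = (1 << n) - 1  # bitmask with every city visited

    @lru_cache(maxsize=None)
    def best(mask, i):
        # Minimum cost to finish the tour from city i, given that the
        # cities whose bits are set in mask have already been visited.
        if mask == full:
            return dist[i][0]  # return to the start
        result = float("inf")
        for j in range(n):
            if not mask & (1 << j):  # city j is still unvisited
                result = min(result, dist[i][j] + best(mask | (1 << j), j))
        return result

    return best(1, 0)  # start at city 0 with only bit 0 set


dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(tsp_dp(dist))  # 80
```

Each (mask, i) pair is solved once and cached, which is where the bound on the number of states comes from.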

Time Complexity: O(n^2 * 2^n), where O(n * 2^n) is the maximum number of unique subproblems/states and O(n) is the cost of the transition out of each state.

Auxiliary Space: O(n * 2^n), where n is the number of nodes/cities.

For a set of size n, we consider n-2 subsets, each of size n-1, such that none of the subsets contains the nth vertex. Using the above recurrence relation, we can write a dynamic programming-based solution. There are at most O(n * 2^n) subproblems, and each one takes linear time to solve. The total running time is therefore O(n^2 * 2^n). This is much less than O(n!) but still exponential. The space required is also exponential, so this approach is infeasible even for a moderately larger number of vertices. We will soon be discussing approximate algorithms for the traveling salesman problem.

Various algorithms for solving the Traveling Salesman problem in python!

rohanp/travelingSalesman

Traveling Salesman Problem

Formally, the problem asks to find the minimum distance cycle in a set of nodes in 2D space. Informally, you have a salesman who wants to visit a number of cities and wants to find the shortest path to visit all the cities. This NP-hard problem has no efficient algorithm to find the optimal solution (for now...).

I had a competition with my friends to see who could find the shortest path in the 100 city dataset we were given in a given amount of time. I realized that all TSP algorithms spend a lot of time looping through objects, and Python has notoriously slow for-loops, so I figured that instead of coming up with a fancy algorithm, I could snake the competition by using Numba/Numpy/Cython/Inline C to speed up a simpler algorithm. Let's see how it goes.

Brute Force

Searches every permutation of cycles and returns the shortest one. Simple algorithm that guarantees the optimal solution, but has O(N!) efficiency, and is therefore not feasible to run on a 100 city dataset (at least on my puny computer), regardless of how fast your for loops were.

1. Start at an arbitrary city

2. Go to the next closest unvisited city

3. Repeat (2) until all cities have been visited, save path length

4. Start again at (1) with a different initial city

5. Repeat (1-4) until all possibilities have been exhausted and return the shortest path

While this algorithm is relatively fast O(N^3), it does not come up with very good solutions.
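The steps above can be sketched as below. This is an illustrative version, not the repository's code: the function names are assumptions, and it uses plain Euclidean distance via `math.dist` rather than the squared distances the repository reports.

```python
import math


def tour_length(points, tour):
    """Length of the closed tour; index -1 wraps around to close the cycle."""
    return sum(
        math.dist(points[tour[i - 1]], points[tour[i]]) for i in range(len(tour))
    )


def nearest_neighbor_tour(points, start):
    """From the start city, keep moving to the closest unvisited city."""
    unvisited = set(range(len(points))) - {start}
    tour = [start]
    while unvisited:
        last = points[tour[-1]]
        nxt = min(unvisited, key=lambda c: math.dist(last, points[c]))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def greedy_tsp(points):
    """Run nearest neighbour from every start city and keep the shortest."""
    tours = (nearest_neighbor_tour(points, s) for s in range(len(points)))
    return min(tours, key=lambda t: tour_length(points, t))


square = [(0, 0), (0, 1), (1, 1), (1, 0)]
print(tour_length(square, greedy_tsp(square)))  # 4.0
```

Trying all N start cities, with N steps each and an O(N) scan per step, is what gives the O(N^3) cost mentioned above.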

*The length shown is not the sum of the distances from each city to the next, but rather the sum of the squares of the distances from each city to the next. This applies to all algorithms shown.

1. Start with a random route

2. Perform a swap between two edges

3. Keep the new route if it is shorter

4. Repeat (2-3) for all possible swaps

5. Repeat (1-4) for M possible initial configurations

This algorithm is both faster, O(M*N^2), and produces better solutions. The intuition behind the algorithm is that swapping two edges at a time untangles routes that cross over themselves.
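A minimal sketch of the 2-opt idea is shown below; it is not the repository's implementation. For clarity it recomputes the full tour length for every candidate swap, which is slower than comparing only the two edges that change, and the function names are assumptions.

```python
import math


def tour_length(points, tour):
    """Length of the closed tour; index -1 wraps around to close the cycle."""
    return sum(
        math.dist(points[tour[i - 1]], points[tour[i]]) for i in range(len(tour))
    )


def two_opt(points, tour):
    """Reverse segments while doing so shortens the tour; return the result."""
    tour = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                # Reversing tour[i:j+1] replaces two edges with two others.
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                if tour_length(points, candidate) < tour_length(points, tour) - 1e-12:
                    tour = candidate
                    improved = True
    return tour


# A self-crossing tour around the unit square gets untangled.
square = [(0, 0), (1, 1), (0, 1), (1, 0)]
print(two_opt(square, [0, 1, 2, 3]))  # [0, 2, 1, 3]
```

In the example, the starting tour crosses both diagonals of the square; one segment reversal removes the crossing and leaves the perimeter.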

The 2-opt swap performed much better than greedy; the path it drew looks similar to something a human might draw.

Same as the 2-opt swap, but swapping N nodes at a time instead. This leads to more stochasticity, decreasing the chances of falling into a local minimum while also allowing the algorithm to explore more of the search space, at the cost of a coarser search. This algorithm approaches The Random Algorithm TM as N approaches the number of cities.

In this example I used a 3-opt swap. Interestingly, it performed much worse than both the 2-opt swap and the greedy algorithm. I'm not sure why this is the case.

Optimizations

Although normally Python lists are more efficient than Numpy arrays when indexed, Numba should optimize Numpy array indexing.

A "Numpy aware" JIT compiler.

I tried to use Weave for inline C code for the distance_squared function, since the program spent a lot of time in it, but for some reason this ended up being slower than Numba.

I ended up not getting to this, but it would likely have been similar to Numba-level performance.

I lost the competition :(. I learned that a good algorithm >>>> low-level optimizations. If I could go back I would try to create an algorithm that tried to find a convex route (or as close as possible), since that is what I noticed us humans intuitively try to do when coming up with solutions to TSP, which we are actually quite good at. On the bright side, because I spent so much time struggling to install Numba properly, I finally started to use a real package manager (Conda: it's reallyyy nice, would recommend).

Run Instructions

Dependencies

Edit the import statement in salesman.py to use the algorithm of your choice. For example, to use the algorithm in brute.py, the statement would be from brute import algorithm. Finally, run via

python salesman.py

- Open access

- Published: 24 April 2024

Energy-efficient superparamagnetic Ising machine and its application to traveling salesman problems

- Jia Si ORCID: orcid.org/0000-0003-0737-4905 1 , 2 ,

- Shuhan Yang 1 ,

- Yunuo Cen 1 ,

- Jiaer Chen 1 ,

- Yingna Huang 1 ,

- Zhaoyang Yao 1 ,

- Dong-Jun Kim 1 ,

- Kaiming Cai 1 ,

- Jerald Yoo ORCID: orcid.org/0000-0002-3150-1727 1 ,

- Xuanyao Fong ORCID: orcid.org/0000-0001-5939-7389 1 &

- Hyunsoo Yang ORCID: orcid.org/0000-0003-0907-2898 1

Nature Communications volume 15, Article number: 3457 (2024)

- Electrical and electronic engineering

- Magnetic devices

The growth of artificial intelligence leads to a computational burden in solving non-deterministic polynomial-time (NP)-hard problems. The Ising computer, which aims to solve NP-hard problems, faces challenges such as high power consumption and limited scalability. Here, we experimentally present an Ising annealing computer based on 80 superparamagnetic tunnel junctions (SMTJs) with all-to-all connections, which solves a 70-city traveling salesman problem (TSP, 4761-node Ising problem). By taking advantage of the intrinsic randomness of SMTJs, implementing a global annealing scheme, and using an efficient algorithm, our SMTJ-based Ising annealer outperforms other Ising schemes in terms of power consumption and energy efficiency. Additionally, our approach provides a promising way to solve complex problems with limited hardware resources. Moreover, we propose a cross-bar array architecture for scalable integration using conventional magnetic random-access memories. Our results demonstrate that the SMTJ-based Ising computer with high energy efficiency, speed, and scalability is a strong candidate for future unconventional computing schemes.

Introduction

The demands for future data-intensive and energy-efficient computing tasks overwhelm the computational power of conventional von Neumann architectures 1 . For example, NP-hard problems are often encountered in combinatorial optimizations 2 , resource allocation 3 , cryptography 4 , finance 5 , image processing 6 , tour planning 7 , and job sequencing 8 , and their computational time and hardware resources increase exponentially with the problem size, which makes them very difficult or impossible to solve on conventional computers in a finite time. These problems can be mapped to the Ising model, a mathematical model to characterize interactions between magnetic spins 9 . The dynamics of the model are algorithm-based, i.e., by constructing a proper coupling matrix and allowing the system to evolve utilizing an intrinsic convergence property of the Ising model, the ground state could be obtained as a solution to the corresponding problems. However, as the system might be trapped in many local minima, the annealing process has usually been adopted in Ising computers to address such limitations. It is commonly agreed that adding fluctuations prevents the Ising computer from being stuck at the local minima.

Efficient algorithms and hardware systems for finding an optimal or near-optimal solution of an Ising model at a fast speed and low power have been sought. Adiabatic quantum computing (AQC) 10 , 11 and quantum computing 12 , 13 , 14 , 15 based on superconducting qubits are capable of converging the Ising model by tunneling out of local minima to the global minima. A 100-node Maxcut problem was solved using a quantum computer of 2048 spins with huge power consumption 16 . Besides the high cost and complexity of cryogenic temperature, this proof-of-concept system was limited by the sparse connections only between the nearest neighbors, which leads to sub-optimal outcomes 17 . Simulated annealing based on CMOS implementations was exploited for parallel Ising computing, including central processing units (CPU) 18 , 19 , graphics processing units (GPU) 20 , and field-programmable gate array (FPGA) 21 , 22 . These hardware platforms have reported as many as 16,384 spins; however, they require huge hardware resources for generating random numbers to introduce stochasticity to escape from the local minima 4 , 18 , 23 , 24 . Coherent Ising machine (CIM) is an optical scheme with competitive energy efficiency. However, it requires a long fiber ring cavity and relies on external FPGA for implementing coupling 25 , 26 . The temporal multiplexing process is also time-consuming and hard to expand to large systems. Recently, experiments and simulation works have investigated various devices to emulate the behavior of Ising spins by taking advantage of their intrinsic physics. An 8-spin asynchronous probabilistic computer based on superparamagnetic tunnel junctions for solving integer factorization tasks of values up to 945 was demonstrated 4 . SPICE simulations of 16-city TSP using simulated annealing method were presented 27 . Other works such as 8-spin phase-transition nano-oscillators 28 , multiferroic oxide devices with a high thermal stability 29 , and magnetoresistive random access memory (MRAM) 30 , 31 have also conceptually proved that spin-based devices are suitable for representing Ising units. However, these works have encountered challenges in either partially-connected Ising spins or small scalability which limit the Ising computer from solving practical problems.

The TSP discussed in this paper is a well-known problem that lies far beyond the reach of locally connected Ising models. Other combinatorial optimization problems, such as knapsack problems, coloring problems, and number partitioning, also need all-to-all connections to satisfy specific constraints 9 . In practice, an additional graph embedding process is often required when mapping to 2-dimensional CMOS circuitry, which only considers the coupling between adjacent spins 32 , 33 , 34 . Since the embedding increases the required number of auxiliary spins and changes the spin connections, the annealing accuracy is degraded significantly, especially when the problem size is large. Supporting a fully connected Ising model is therefore highly desirable for dealing with a wide range of problems. Another problem is the rapidly increasing connectivity in large-scale systems, which usually results in huge energy consumption and latency. Since the number of spins that a particular annealing processor can handle limits the scale of the problem that can be solved, how to solve complex problems with limited hardware in an energy-efficient way has also drawn significant attention.

In this work, we experimentally report a scalable Ising computer based on 80 SMTJs with all-to-all connections and successfully solve the 4761-node TSP problem. The intrinsic stochasticity in SMTJ enables ultra-fast and low-power Ising annealing without using extra resources for random number generation and Metropolis determining process 7 . By combining global annealing with intrinsic annealing in SMTJ, the convergence of the Ising problem is guaranteed especially in large-scale Ising problems. The method to determine parameters of global annealing is discussed. With an all-to-all connection among Ising spins, the combinatorial optimization of 9-city TSP is solved with the optimal solution. We further develop the algorithm for constrained TSP (CTSP) with no extra auxiliary Ising bits both in algorithm and hardware, indicating the superiority and flexibility of this Ising computer. Furthermore, we propose an optimization strategy based on graph partitioning (GP) and CTSP and experimentally solved a 70-city TSP, which typically needs 4761 nodes, on our 80-node Ising computer with a near-optimal solution. The system can obtain the lowest power consumption of 0.64 mW as well as high energy efficiency of 39 solutions per second per watt among state-of-art Ising annealers. We have experimentally demonstrated that large-scale Ising problems can be solved by small-scale hardware in an energy-efficient way.

SMTJ-based artificial Ising spin

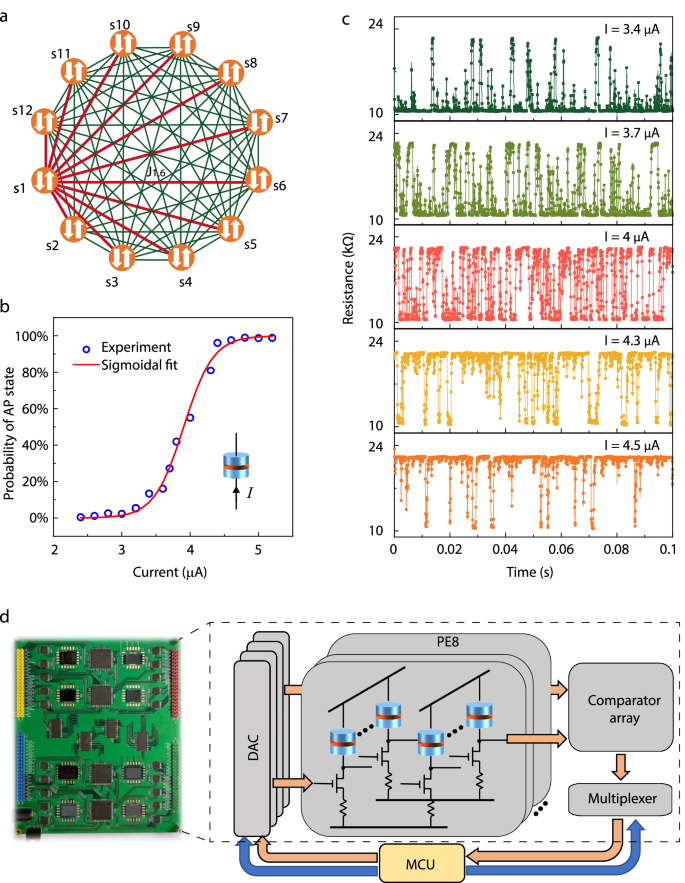

Various NP-hard problems can be solved by constructing corresponding Ising models and observing the ground states during evolution processes. Figure 1a shows an all-to-all connected Ising model, whose Ising Hamiltonian can be written as

where \(H\) is the total energy of the system, \(N\) is the total number of spins, \(s_{i}\) is the \(i\)-th spin, which takes one of two states, “+1” (Ising spin up) or “−1” (Ising spin down), \(J_{i,j}\) is the coupling coefficient between the \(i\)-th and the \(j\)-th spins, and \(h_{i}\) is the external field on the \(i\)-th spin. For a fixed configuration of all spins other than \(s_{k}\) , the probability of \(s_{k}\) staying in the down-state is given by

where \(\Lambda=\frac{\partial H}{\partial {s}_{k}}\) (see Supplementary Note 1 ).
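
The relation between the local gradient \(\Lambda\) and the down-state probability can be checked numerically. The sketch below is not the authors' code: it assumes the common sign convention \(H=-\sum_{i<j}J_{i,j}s_{i}s_{j}-\sum_{i}h_{i}s_{i}\), a symmetric \(J\), and Boltzmann statistics at an inverse temperature `c`, under which the down-state probability takes the form \(1/(1+e^{-2c\Lambda})\). All function names and the toy values are hypothetical.

```python
import math

def ising_energy(J, h, s):
    """H = -sum_{i<j} J[i][j]*s[i]*s[j] - sum_i h[i]*s[i] (assumed sign convention)."""
    n = len(s)
    pair = sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    field = sum(h[i] * s[i] for i in range(n))
    return -pair - field

def local_gradient(J, h, s, k):
    """Lambda = dH/ds_k for a symmetric J under the convention above."""
    return -sum(J[k][j] * s[j] for j in range(len(s)) if j != k) - h[k]

def p_down(J, h, s, k, c):
    """Probability that spin k is -1 with all other spins held fixed (assumed Boltzmann form)."""
    lam = local_gradient(J, h, s, k)
    return 1.0 / (1.0 + math.exp(-2.0 * c * lam))

def p_down_brute(J, h, s, k, c):
    """Same probability, computed directly from the two Boltzmann weights."""
    up = list(s)
    up[k] = 1
    down = list(s)
    down[k] = -1
    w_up = math.exp(-c * ising_energy(J, h, up))
    w_down = math.exp(-c * ising_energy(J, h, down))
    return w_down / (w_up + w_down)

# Arbitrary 3-spin toy problem used only to compare the two routes.
J = [[0.0, 1.0, -0.5],
     [1.0, 0.0, 0.3],
     [-0.5, 0.3, 0.0]]
h = [0.2, -0.1, 0.0]
s = [1, -1, 1]
c = 0.7
```

The closed form and the brute-force ratio agree because \(H\) is linear in \(s_{k}\) once the other spins are fixed, so only \(\Lambda\) enters the ratio of the two weights.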

a All-to-all connected 12-spin Ising model, where \(s\) represents a spin and \(J_{1,6}\) represents the coupling between \(s_{1}\) and \(s_{6}\) . b Sigmoidal fit of the probability of the AP state ( \(p_{\mathrm{AP}}\) ) of an SMTJ under different input currents ( I ): \(p_{\mathrm{AP}}=\frac{1}{1+e^{-4.672\times (I-3.905\,\mu\mathrm{A})}}\) . Inset: diagram of an SMTJ. A tunneling barrier layer is sandwiched between a reference layer and a free layer. c Time-dependent resistance of an SMTJ under different input currents ( I ). d Photograph and schematic diagram of the SMTJ-based Ising computer. The system contains 8 processing elements (PEs), 4 digital-to-analog converters (DACs), a comparator array, a multiplexer and a microcontroller unit (MCU). Each PE has 10 SMTJ computing units. Each computing unit includes a transistor and a resistor that tune the SMTJ into its stochastic regime. Blue lines and orange arrows represent the control and data flow, respectively.

One natural implementation of this Ising spin is based on a stochastic nanomagnet. The inset of Fig. 1b shows a sketch of an SMTJ, consisting of a tunneling barrier sandwiched between a reference layer and a free layer (see Methods section). Because of the small device diameter (~50 nm), the energy barrier of the free layer between the anti-parallel (AP) and parallel (P) states is so low that the retention time of either state is in the range of μs to ms, similar to previous studies 4 , 35 . The SMTJ resistance, measured as a function of time in Fig. 1c , shows preferred AP states at high currents and P states at low currents. When the current ( I ) is ~4 μA, the SMTJ shows an equal chance of AP and P states. The probability of the AP state under different input currents over 0.1 s is fitted in Fig. 1b by a sigmoid function:

\(p_{\mathrm{AP}}=\frac{1}{1+e^{-a(I-b)}}\)

where \(a=4.67\) and \(b=3.9\,\mu\mathrm{A}\) . In order to emulate the Ising spin \(s_{k}\) with our SMTJ device, we only need to make the probability of the down-state of \(s_{k}\) equal to that of the AP state of the SMTJ, namely \(p_{\mathrm{AP}}=p_{\downarrow}\) , with two calibration coefficients. Thus, we can derive the form of the current \(I_{k}\) injected into the SMTJ as (see Supplementary Note 1 ):

where \(c=1/kT\) is the effective inverse temperature, which can be tuned to perform global annealing.
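
The matching condition \(p_{\mathrm{AP}}=p_{\downarrow}\) can be illustrated with a short calculation. The sketch below uses the sigmoid parameters quoted in the Fig. 1b caption and assumes a spin-down probability of the form \(1/(1+e^{-2c\Lambda})\); equating the two exponents then gives a bias current \(I_{k}=b+2c\Lambda/a\). This is a derivation under that stated assumption, not a reproduction of the update rule or of the two calibration coefficients in Supplementary Note 1, and `bias_current` is a hypothetical helper.

```python
import math

A = 4.672   # sigmoid slope in 1/uA, from the fit quoted in the Fig. 1b caption
B = 3.905   # sigmoid midpoint in uA, from the same fit

def p_ap(current_ua):
    """Fitted probability of the AP state at a given bias current (in uA)."""
    return 1.0 / (1.0 + math.exp(-A * (current_ua - B)))

def bias_current(lam, c):
    """Current (uA) that makes p_AP equal the assumed spin-down probability
    1 / (1 + exp(-2*c*lam)); obtained by equating the two exponents."""
    return B + 2.0 * c * lam / A

def p_down_target(lam, c):
    """Assumed Boltzmann form of the spin-down probability."""
    return 1.0 / (1.0 + math.exp(-2.0 * c * lam))
```

With \(\Lambda=0\) the current sits at the sigmoid midpoint, so the device spends equal time in the AP and P states; a larger `c` (lower effective temperature) stretches the current swing and makes the spin more deterministic.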

Intrinsic annealing in SMTJs-based Ising computer

By integrating 80 SMTJs with a peripheral circuit and a microcontroller unit (MCU), we build an 80-node Ising computer (see Supplementary Note 2 ). Each Ising spin in Eq. ( 1 ) is emulated by an SMTJ with intrinsic randomness, where the P (AP) state represents spin-up (down). Figure 1d shows a photograph of the printed circuit board (PCB) and a diagram of the system (see Methods section). The system contains 8 processing elements (PEs); each PE has 10 SMTJ computing units. Each SMTJ computing unit includes a transistor and a resistor that tune the SMTJ into its stochastic regime. During the computing process, the MCU examines the states of all SMTJs by reading the outputs of the comparator arrays through multiplexers and generates new input voltages for the digital-to-analog converters (DACs) according to the updating rule in Eq. ( 4 ) (see Supplementary Note 3 for calibration of the 80 SMTJ computing units).

During the evolution process, an Ising solver can easily be trapped in a local minimum. To avoid such non-optimal solutions, annealing algorithms such as simulated annealing (SA) or quantum annealing (QA) were developed. The general idea of SA is to make the system evolve gradually from a high temperature to a low temperature 7 . The convergence and relaxation of SA can be proven mathematically 36 . During each iteration, a random number is generated to provide stochasticity and is used to determine whether the result of that iteration should be accepted. In QA, quantum fluctuations cause quantum tunneling between states 17 . In both SA and QA, stochasticity needs to be introduced into the annealing process. In contrast, our Ising system utilizes the intrinsic stochastic behavior of the SMTJ to perform the Metropolis process of standard SA in hardware, which greatly reduces the solution time and the hardware resources needed for generating randomness (see Supplementary Note 4 ). Besides, our Ising computer has all-to-all connections, which give it wider application scenarios as well as a better capability of escaping from local minima.
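
For comparison, the software version of SA described above can be sketched in a few lines. This is a generic textbook Metropolis loop, not the hardware scheme of this work: it assumes the sign convention \(H=-\sum_{i<j}J_{i,j}s_{i}s_{j}-\sum_{i}h_{i}s_{i}\), proposes one single-spin flip per step, and draws an explicit random number for every acceptance test. The toy problem and all names are hypothetical.

```python
import math
import random

def energy(J, h, s):
    """Assumed convention: H = -sum_{i<j} J[i][j]*s_i*s_j - sum_i h[i]*s_i."""
    n = len(s)
    pair = sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(h[i] * s[i] for i in range(n))

def metropolis_anneal(J, h, c_schedule, seed=0):
    """Software SA: one proposed single-spin flip per entry of c_schedule."""
    rng = random.Random(seed)
    n = len(h)
    s = [rng.choice((-1, 1)) for _ in range(n)]
    e = energy(J, h, s)
    best_s, best_e = list(s), e
    for c in c_schedule:
        k = rng.randrange(n)
        lam = -sum(J[k][j] * s[j] for j in range(n) if j != k) - h[k]
        delta = -2.0 * lam * s[k]  # energy change if spin k is flipped
        # Metropolis rule: the random number drawn here is the resource that
        # intrinsic device fluctuations are meant to replace in hardware.
        if delta <= 0 or rng.random() < math.exp(-c * delta):
            s[k] = -s[k]
            e += delta
            if e < best_e:
                best_s, best_e = list(s), e
    return best_s, best_e

# Ferromagnetic 6-spin chain (arbitrary toy problem); its ground-state energy is -5.
n = 6
J = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
h = [0.0] * n
schedule = [0.2 + (3.0 - 0.2) * t / 1999 for t in range(2000)]
best_s, best_e = metropolis_anneal(J, h, schedule)
```

Every iteration of this loop costs one random-number draw and one exponential, which is the overhead the text attributes to CMOS implementations of SA.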

Ising mapping of N-city TSP and CTSP

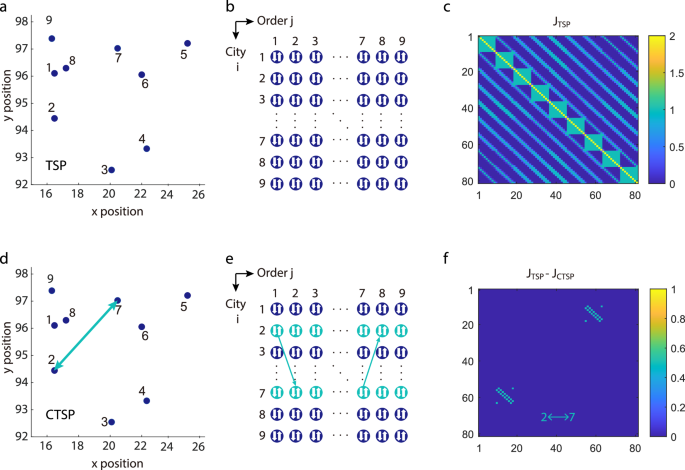

We have applied our Ising computer to the TSP problem, one of the combinatorial optimization problems, which applies to various sectors, such as vehicle routing, logistics, planning, and scheduling. The goal is to find the shortest route that visits all listed cities once and only once given distances between the cities in the list. In order to solve this problem, we first map N -city-TSP to an \({N}^{2}\) -spin Ising model, or \({(N-1)}^{2}\) -spin model assuming a fixed starting city. Figure 2a shows the coordinates of 9 cities and Fig. 2b shows the 81-spin Ising model, whose rows indicate the cities and columns indicate the visiting order. We define the binary spin, s , as \({s}_{i,j}\) = 1 if city i is visited as j -th city or \({s}_{i,j}\) = −1 otherwise. The total Hamiltonian of TSP is expressed by 9

where the first term is a constraint ensuring that only one city is visited at the j -th visit, and the second term ensures that each city is visited only once. \(w\) is a constant small enough ( \(0 < w < 1\) ) not to violate the two constraints of the TSP cycle. \(d_{i,i'}\) is the distance between city \(i\) and city \(i'\) . According to Eqs. ( 1 ) and ( 5 ), the coupling matrix \(J\) of the 81 spins can be obtained, as shown in Fig. 2c (see Supplementary Note 5 ). It shows that spins in the same row or column are strongly coupled, as indicated by the first two terms in Eq. ( 5 ).
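
The structure of this Hamiltonian (two constraint terms plus a weighted distance term) can be made concrete with a small evaluation routine. The sketch below assumes the widely used formulation in terms of binary occupations \(x_{i,j}=(s_{i,j}+1)/2\), with squared penalties for each visiting slot and each city and a cyclic distance term weighted by \(w\). The exact form used in this work may differ, and `tsp_energy` and `tour_to_spins` are hypothetical names.

```python
import math

def tour_to_spins(tour):
    """Spin matrix s[city][slot]: +1 if `city` is visited at `slot`, else -1."""
    n = len(tour)
    s = [[-1] * n for _ in range(n)]
    for slot, city in enumerate(tour):
        s[city][slot] = 1
    return s

def tsp_energy(s, d, w=0.5):
    """Constraint penalties plus w times the cyclic tour length (assumed form)."""
    n = len(d)
    x = [[(s[i][j] + 1) // 2 for j in range(n)] for i in range(n)]
    # First term: exactly one city per visiting slot j.
    slot_penalty = sum((1 - sum(x[i][j] for i in range(n))) ** 2 for j in range(n))
    # Second term: every city i is visited exactly once.
    city_penalty = sum((1 - sum(x[i][j] for j in range(n))) ** 2 for i in range(n))
    # Distance term: city i at slot j followed by city k at slot j+1 (cyclic).
    length = sum(d[i][k] * x[i][j] * x[k][(j + 1) % n]
                 for i in range(n) for k in range(n) if i != k
                 for j in range(n))
    return slot_penalty + city_penalty + w * length

# Four cities on a unit square (illustrative data, not from the paper).
pts = [(0, 0), (1, 0), (1, 1), (0, 1)]
d = [[math.dist(p, q) for q in pts] for p in pts]
valid = tsp_energy(tour_to_spins([0, 1, 2, 3]), d)   # perimeter tour, length 4
empty = tsp_energy([[-1] * 4 for _ in range(4)], d)  # no city visited at all
```

For a valid tour both penalties vanish and the energy reduces to \(w\) times the tour length, so minimizing the energy over valid states minimizes the route.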

a Coordinates of all 9 cities used in this problem, which are the first 9 cities in the dataset Burma14 from TSPLIB. b Ising spin representation for 9-city TSP (81 spins). Rows indicate names of cities and columns indicate the visiting order. Each spin can be 1 (visited) or −1 (not visited) in each iteration. c Color map of the coupling matrix \(J_{\mathrm{TSP}}\) of 9-city TSP; the color bar represents an effective energy in units of kT . Here, k is the Boltzmann constant and T is the temperature. d Constrained TSP (CTSP) with a fixed visiting sequence from city 2 to city 7 or from city 7 to city 2. The arrows represent the visiting sequence. e The Ising spin representation for CTSP with the fixed visiting sequence in d . Arrows represent possible visiting sequences. f Color map of the difference in the coupling matrix between TSP ( \(J_{\mathrm{TSP}}\) ) in a and CTSP ( \(J_{\mathrm{CTSP}}\) ) in d . Arrows represent the fixed visiting sequences from city 2 to city 7 or from city 7 to city 2.

We define CTSP as a TSP in which the visiting order of some cities is enforced during the tour. This is quite useful in real-life scenarios. For example, a courier collects food and drinks at shop A and must deliver hot drinks to B first, even though the total cost is higher than optimal. We propose an algorithm for solving CTSP by adding a negative “distance” to the Hamiltonian. For example, suppose that city A and city B are required to be connected in the CTSP, like city 2 and city 7 in Fig. 2d ; we then add the term

such that the energy of a path in which city A and city B are connected is always lowered by \(\theta\) . When \(\theta\) is sufficiently large, the optimal path must have city 2 and city 7 connected. Thus, the total Hamiltonian of the CTSP is expressed by

Constructing an Ising model for CTSP is exactly the same as for TSP except for the extra allowed visiting sequences, as shown in Fig. 2e . This leads to a modification of the coupling matrix \(J\) according to Eq. ( 7 ) (see the derivation of \(J_{\mathrm{CTSP}}\) in Supplementary Note 6 ). In Fig. 2f we can clearly see the differences between \(J_{\mathrm{CTSP}}\) and \(J_{\mathrm{TSP}}\) . This CTSP algorithm applies to arbitrary constraints on visiting sequences as well as their combinations.
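
The effect of the negative “distance” can be seen on a toy instance. The sketch below works at the level of tours rather than spins, which is a simplification: it assumes that a tour in which cities `a` and `b` are neighbours (in either order) has its cost lowered by \(\theta\), and it finds the best tour by brute force. The coordinates and function names are hypothetical.

```python
import itertools
import math

def tour_length(tour, d):
    """Length of the closed tour."""
    n = len(tour)
    return sum(d[tour[j]][tour[(j + 1) % n]] for j in range(n))

def adjacent(tour, a, b):
    """True if a and b are consecutive in the closed tour, in either order."""
    n = len(tour)
    i = tour.index(a)
    return b in (tour[(i + 1) % n], tour[(i - 1) % n])

def ctsp_cost(tour, d, a, b, theta, w=0.5):
    """Weighted tour length, lowered by theta when a and b are connected (assumed form)."""
    bonus = theta if adjacent(tour, a, b) else 0.0
    return w * tour_length(tour, d) - bonus

def best_tour(d, cost):
    """Brute-force minimum over all tours that start at city 0."""
    n = len(d)
    candidates = ((0,) + p for p in itertools.permutations(range(1, n)))
    return min(candidates, key=cost)

# Five cities in convex position (illustrative): the unconstrained optimum follows
# the hull, so cities 0 and 2 are not neighbours unless the constraint forces it.
pts = [(0, 0), (2, 0), (3, 2), (1, 3), (-1, 2)]
d = [[math.dist(p, q) for q in pts] for p in pts]
free = best_tour(d, lambda t: ctsp_cost(t, d, 0, 2, theta=0.0))
forced = best_tour(d, lambda t: ctsp_cost(t, d, 0, 2, theta=10.0))
```

Here \(\theta=10\) exceeds the largest possible difference in weighted length between two tours of this instance, which is what "sufficiently large" requires; no auxiliary variables are needed to impose the constraint.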

Experimental demonstration of 9-city TSP

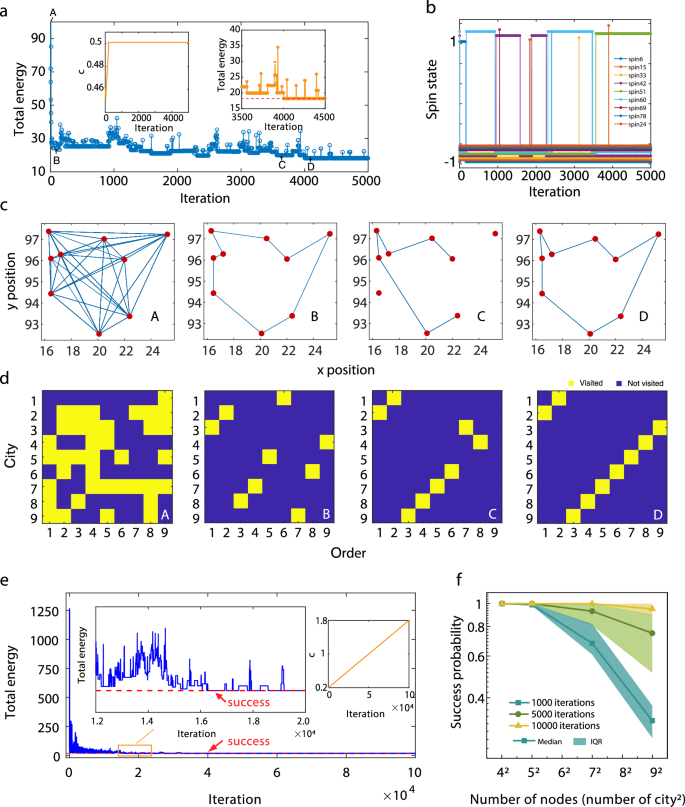

We first run a 9-city TSP in the 80 SMTJ-based Ising computer at a relatively low but non-zero effective temperature to examine the intrinsic annealing in SMTJ. The iteration time is set comparable to the longest retention time of SMTJs to avoid reading previous spin states. In our experiments, we set the iteration time as 0.1 ms. As shown in Fig. 3a , as the effective inverse temperature ( c ) is increased quickly to 0.5, the system converges rapidly to a low energy state within 50 iterations and reaches the ground state after 4000 iterations. It should be noted that the intrinsic stochasticity in SMTJs helps the system escape from local minima without an extra annealing process, as shown in the right inset of Fig. 3a . Figure 3b illustrates the evolution of 9 spins out of 81 spins. The evolution of all 81 spins can be found in Supplementary Note 7 .

a Total energy transition of 9-city TSP with 5000 iterations (the optimal solution with the energy of 18.23 corresponds to the dashed horizontal line). Insets: effective inverse temperature ( c ) and total energy within 3500–4500 iterations. b Evolution of 9 representative SMTJ states in 5000 iterations. An offset is used in the y -axis to show each SMTJ clearly. c Visiting routes of state A, B, C, and D in a . d Corresponding Ising spins of state A, B, C, and D in a . The yellow squares represent ‘visited ( \({s}_{i,j}=1\) )’ and the purple squares represent ‘not visited ( \({s}_{i,j}=-1\) )’. e Total energy transition with increasing c from 0.2 to 1.8. Left inset: zoom-in view of total energy transition with increasing c from 0.392 to 0.52. Right inset: transition of c with iterations. The red dashed line represents the optimal path (success). f Success probability of solving TSP with varying the node size. The data points and shadows represent the median value and the interquartile range (IQR), respectively.

We choose four states in Fig. 3a to inspect the traveling path in Fig. 3c and their Ising spins, namely \(s_{i,j}\) , as shown in Fig. 3d . The yellow square in Fig. 3d represents \(s_{i,j}=1\) (visited) and the blue square represents \(s_{i,j}=-1\) (not visited). In the initial state A, the spin states are randomly set; they then converge to a relatively low energy at state B. State C is an intermediate solution during the annealing process. State D is the optimal solution satisfying the two constraints of the TSP. Because we anneal the system to a relatively low but non-zero temperature, convergence to a sub-optimal state can be guaranteed, and at the same time the intrinsic randomness in the SMTJ helps the system escape from local minima and find the ground state quickly. We test 10 different random initial states, each with 5000 iterations, and find that in all cases the system reaches a relatively small energy, as shown in Supplementary Note 8 . However, there is a probability that the system jumps out of the ground state because of the non-zero temperature. If we continue to observe the evolution over a longer timescale, the system moves back to the global minimum state. In cases where speed and a near-optimal solution matter more than the exact optimum, the number of iterations can be chosen to be small.

Further global annealing of the system to a lower effective temperature may guarantee the convergence of the computation. Here we use linear annealing as an example to examine the convergence of this algorithm in the very-large-iteration limit. The initial temperature should be chosen sufficiently high to ensure that the thermal energy exceeds any energy barrier ( \(\Delta H=H_{\max}-H_{\min}\) ) within the system, while still adhering to the fundamental constraints of the specific Ising model. For a given N-city TSP, \(H_{\max}\) in Eq. ( 5 ) can be estimated as \(w\times N\times \bar{d}\) , assuming that the distance between any two cities equals the average distance \(\bar{d}\) . Similarly, \(H_{\min}\) can be estimated as \(w\times N\times d_{\min}\) . Therefore, the initial \(c\) of the 9-city TSP in our experiment can be estimated as \(c_{\mathrm{initial}} \sim 1/\Delta H=0.07\) , where \(w=0.5\) , \(N=9\) for a total of 9 cities, and \(\bar{d}=4\) and \(d_{\min}=0.8\) are the average and shortest distances between any two cities in Fig. 3c , respectively. We then choose \(c_{\mathrm{initial}}\) = 0.2, which is sufficiently safe for annealing. As the temperature linearly decreases, the dynamical system gradually stabilizes. The final temperature should be low enough, i.e., \(c_{\mathrm{final}} \gg 1/\Delta H\) , to freeze all possible fluctuations. Here we set \(c_{\mathrm{final}}=1.8\) , which is at least one order of magnitude larger than \(1/\Delta H\) . This can also be verified by observing randomly generated states under \(c_{\mathrm{final}}\) for long iterations. Regarding the annealing speed, if several changes in the spin configuration are observed under each value of c , then the annealing speed is valid. Many trials are required to find the proper annealing speed (details in Supplementary Note 8 ).
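
The estimate of \(1/\Delta H\) and the resulting schedule can be reproduced with a few lines of arithmetic. The sketch below plugs in the values quoted in the text ( \(w=0.5\), \(N=9\), \(\bar{d}=4\), \(d_{\min}=0.8\) ) and builds a schedule in which \(c\) is ramped linearly from 0.2 to 1.8. Ramping \(c\) itself linearly, rather than the temperature \(1/c\), and the step count are assumptions made for illustration; the function names are hypothetical.

```python
def delta_h_estimate(w, n_cities, d_mean, d_min):
    """Delta H = H_max - H_min with H_max ~ w*N*d_mean and H_min ~ w*N*d_min."""
    return w * n_cities * d_mean - w * n_cities * d_min

def linear_schedule(c_start, c_end, steps):
    """Effective inverse temperature ramped linearly over `steps` iterations."""
    return [c_start + (c_end - c_start) * t / (steps - 1) for t in range(steps)]

dh = delta_h_estimate(w=0.5, n_cities=9, d_mean=4.0, d_min=0.8)  # about 14.4
c_bound = 1.0 / dh                                               # about 0.07
schedule = linear_schedule(0.2, 1.8, steps=40_000)
ratio_final = schedule[-1] / c_bound                             # about 26
```

The final value of \(c\) is roughly 26 times \(1/\Delta H\), consistent with the statement that \(c_{\mathrm{final}}\) is at least one order of magnitude larger.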

In Fig. 3e , the first global minimum energy appears after 16,500 iterations, and the system converges to the ground state after 40,000 iterations. Temperature schedules can be optimized to reduce the number of iterations, e.g. by increasing the effective temperature in the first few time steps and then decreasing it gradually, or can be learned by reinforcement learning 37 . In practice, we use one memory to store the minimum energy state during the computation, and another memory to record the final energy state. We take the minimum value of these two results as the solution. Figure 3f shows the success probability (defined as finding the optimal path) of TSP with various node sizes. The success probability of the 9-city TSP reaches 95% after \(10^{4}\) iterations. The dependence of the success probability on the parameter \(w\) in Eq. ( 5 ), which determines the relative strength of the constraint terms and the distance term, is also discussed. If \(w\) is too large, then the probability of violations, namely invalid paths, increases, as shown in Supplementary Note 8 . If \(w\) is too small, then the effect of the distance term is small, which results in slower convergence to the ground state.

The advantages of this annealer are threefold: (1) Selective working modes by using different temperature schemes. One is the probabilistic sampling mode working at a constant temperature, which is similar to an asynchronous probabilistic computer 4 ; the other is the annealing mode conducted by reducing the effective temperature. (2) Fast speed and low power consumption to find the ground state because of the intrinsic annealing properties in SMTJ. (3) Global annealing outperforms probabilistic sampling in achieving efficient convergence, especially for large-scale problems.

We have implemented a synchronous design with a lower requirement on the speed of peripheral circuits. This design also effectively mitigates issues such as leakage, sneak currents, and parasitic resistances, which might be encountered in asynchronous hardware with a memristive (or resistive) crossbar array.

Compressing 70-city TSP to 80-node Ising computer

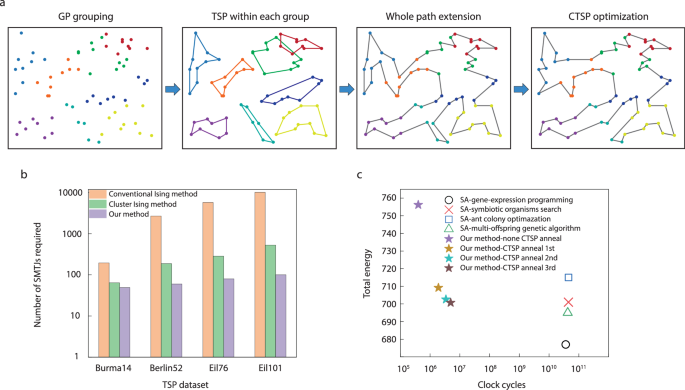

Generally, the number of spins required for an N -city TSP is \((N-1)^{2}\) , which limits the scalability of TSP on state-of-the-art computing systems. Here, we propose a graph Ising compressing algorithm based on CTSP that can significantly reduce the number of spins and interactions for solving a TSP. Figure 4a is an example of how we apply this algorithm to our 80-node SMTJ Ising computer for solving a 70-city TSP (4761 nodes, st70 data set from TSPLIB 38 ). The major steps of this algorithm can be described as follows: (a) divide the cities into several smaller groups by the GP method until the number of cities in each group is less than 10; (b) solve the TSP within each group separately; (c) integrate neighboring groups to obtain an initial path for the whole map; and (d) optimize the path in (c) by a CTSP window sliding over the whole map.
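
The spin counts quoted above follow directly from the \((N-1)^{2}\) scaling. The short sketch below reproduces them and shows one reading of the "less than 10 cities" rule in step (a): with a fixed starting city, 9 cities is the largest group whose spins fit on 80 nodes. The helper names are hypothetical.

```python
def spins_required(n_cities, fixed_start=True):
    """(N-1)^2 spins when the starting city is fixed, N^2 otherwise."""
    m = n_cities - 1 if fixed_start else n_cities
    return m * m

def largest_group(n_spins_available):
    """Largest city count whose fixed-start TSP fits on the available spins."""
    n = 1
    while spins_required(n + 1) <= n_spins_available:
        n += 1
    return n

full_problem = spins_required(70)   # 4761 spins for the 70-city TSP
per_group = spins_required(9)       # 64 spins for a 9-city group
group_limit = largest_group(80)     # 9 cities fit on an 80-node machine
```

A 10-city group would already need 81 spins, one more than the hardware provides.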

a Optimization algorithm for 70-city TSP. b Number of required SMTJs for various problems using different methods. Burma14, berlin52, eil76, and eil101 are TSPs of 14, 52, 76, and 101 cities, respectively. c Comparison of total Ising energy (path) and total clock cycles for the final solution with different SA-based algorithms, including symbiotic organisms search 40 , ant colony optimization 41 , multi-offspring genetic algorithm 42 , and gene-expression programming 7 . Our method is tested on our Ising system and the others are tested on an Intel Core-i7 PC. In this comparison, our system runs at a main frequency of 10 kHz.

It is worth mentioning that GP is also an Ising problem. When converting a global TSP into local TSPs, using GP would be more hardware-friendly for our Ising computer compared to other clustering algorithms. It is based on the idea that the original graph can be separated into multiple sub-graphs depending on the Euclidean distance. The number of spins required for solving GP is ~ N and thus, GP is quite efficient for local TSPs since the problem size can be reduced to ~ \({\left(N-1\right)}^{2}/a\) , where \(a\) is the number of groups, and each TSP can be optimized independently (see GP mapping in Supplementary Note 9 ).
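
Step (a) can be illustrated with a purely classical stand-in. The sketch below does not solve GP as an Ising problem; it swaps in a simple recursive median split along the wider coordinate axis, only to show how a 70-city map breaks into groups of at most 9 cities. The random coordinates and the `partition` helper are hypothetical.

```python
import random

def partition(cities, max_size=9):
    """Recursively bisect `cities` (tuples of index, x, y) until every group
    has at most `max_size` members. Median split along the wider axis; this
    is a stand-in for the Ising-based graph partitioning, not the same method."""
    if len(cities) <= max_size:
        return [cities]
    xs = [x for _, x, _ in cities]
    ys = [y for _, _, y in cities]
    axis = 1 if max(xs) - min(xs) >= max(ys) - min(ys) else 2
    ordered = sorted(cities, key=lambda city: city[axis])
    mid = len(ordered) // 2
    return partition(ordered[:mid], max_size) + partition(ordered[mid:], max_size)

# 70 random cities (illustrative data, not the st70 instance).
rng = random.Random(1)
cities = [(i, rng.uniform(0, 100), rng.uniform(0, 100)) for i in range(70)]
groups = partition(cities)
group_sizes = [len(g) for g in groups]
```

Each resulting group needs at most 64 spins with a fixed starting city, so every local TSP fits on the 80-node machine and can be optimized independently.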

The final step (d) is based on CTSP, where a rectangular window slides over the path and cuts it into several disconnected lines, among which the two longest lines are chosen and the edge cities are connected as a circular path (Supplementary Note 10 ). The CTSP is solved within each window for sub-area optimization without changing the visiting order of edge cities. After this, the two lines at the edge cities are opened and CTSP is carried out again after sliding to the next window. GP-CTSP-based optimization algorithm provides an efficient way of finding near-optimal solutions for large-scale TSP on limited hardware resources.
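
The idea of re-optimizing a window while keeping its edge cities fixed can be sketched classically. The version below is a simplification under stated assumptions: the window covers consecutive positions along the tour instead of a rectangle on the map, and the interior is re-ordered by brute force instead of by CTSP annealing. All names and the random instance are hypothetical.

```python
import itertools
import math
import random

def closed_length(tour, pts):
    """Length of the closed tour."""
    n = len(tour)
    return sum(math.dist(pts[tour[j]], pts[tour[(j + 1) % n]]) for j in range(n))

def open_length(path, pts):
    """Length of an open path."""
    return sum(math.dist(pts[a], pts[b]) for a, b in zip(path, path[1:]))

def refine_window(tour, pts, start, size):
    """Re-order the interior of one window; its two edge cities stay in place."""
    n = len(tour)
    idx = [(start + k) % n for k in range(size)]
    seg = [tour[i] for i in idx]
    first, last, inner = seg[0], seg[-1], seg[1:-1]
    best = min(((first,) + p + (last,) for p in itertools.permutations(inner)),
               key=lambda path: open_length(path, pts))
    new_tour = list(tour)
    for i, city in zip(idx, best):
        new_tour[i] = city
    return new_tour

def sliding_refine(tour, pts, size=6, step=3):
    """Slide the window along the whole tour once."""
    for start in range(0, len(tour), step):
        tour = refine_window(tour, pts, start, size)
    return tour

rng = random.Random(2)
pts = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(12)]
initial = list(range(12))
refined = sliding_refine(initial, pts)
```

Because the edge cities of each window are untouched, the connections to the rest of the tour are preserved and the total length can only stay the same or decrease.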

Figure 4b compares the numbers of spins required for different TSPs by a conventional Ising method 9 , a cluster Ising method 39 , and our method. The required number of spins in our method is relatively unchanged for various TSPs, while that of the other methods increases substantially with the scale of the problem. Figure 4c shows the total path of the 70-city TSP as a function of iteration number using different SA-based algorithms, including symbiotic organisms search 40 , ant colony optimization 41 , multi-offspring genetic algorithm 42 , and gene-expression programming 7 . Finally, we obtain a near-optimal path with a total energy of 700.71, which is slightly higher than the optimal solution of 675. However, the iteration number for an optimized solution is \(4.9\times 10^{6}\) by our method, which is two to three orders of magnitude lower than that of SA-based algorithms running on an Intel Core-i7 CPU 7 with a main frequency of 3 GHz, as shown in Fig. 4c .

Ising computer scaling and cross-bar architecture

The above experimental demonstration shows that our Ising computer with 80 SMTJs is capable of finding a near-optimal solution to a medium-scale NP-hard problem. We then explore the performance as the problem size increases from 70 to 200 cities. The simulation of the complete TSP task is carried out using MATLAB, incorporating a stochastic model of the SMTJ employed in our experiment (details in Supplementary Note 11 ). The solution quality is defined as

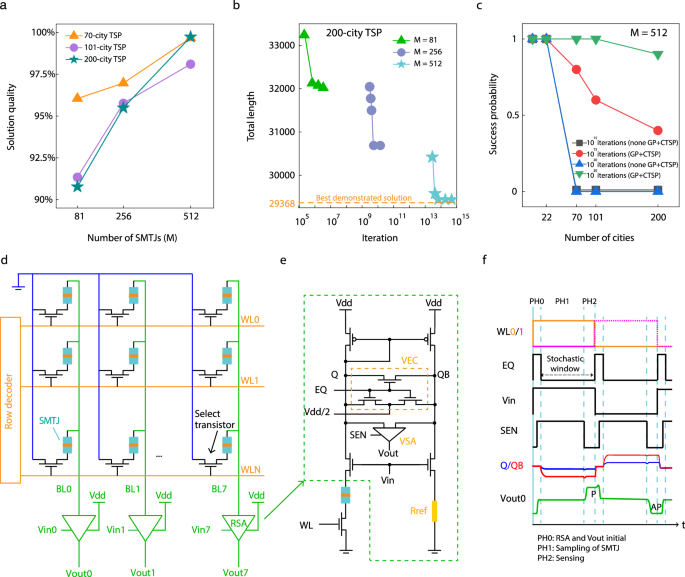

Figure 5a illustrates the solution quality of the best results obtained for each TSP task (see Supplementary Note 12 for the best solutions). Notably, as the number of SMTJs ( M ) increases, higher-quality solutions can be attained. It is worth emphasizing that the shortest path obtained for the 101-city TSP is 640.9755 in our study, shorter than the optimal path of 642.3095 provided by TSPLIB (Eil101.opt.tour). This outcome serves as evidence of the superiority of our method. Using more SMTJs to solve the TSP per sliding window leads to improved optimization in CTSP annealing, resulting in an enhanced solution quality, as depicted in Fig. 5b . However, the time to convergence also increases with the use of more SMTJs. When dealing with a fixed hardware capacity, an appropriate number of SMTJs for CTSP optimization can be assigned, taking into account both the solution quality and the convergence speed. Figure 5c shows the success rate (defined as achieving 95% solution quality) as the problem size increases. The success probability of the 200-city TSP, whose complexity is ~40,000 nodes, can reach as high as 90%, demonstrating the scalability of our method compared to typical TSP (without GP and CTSP) 9 .

a Solution quality of various problems using different numbers of SMTJs ( M ) in the array. The datasets used are St70, Eil101 and KroA200, for 70, 101 and 200 cities, respectively. b Total length of the KroA200 TSP at different convergence speeds using different numbers of SMTJs. The dashed line represents the best demonstrated solution. c Success probability of different TSP algorithms (without/with GP and CTSP) as the number of cities increases, after 50 runs. A total of 512 SMTJs are used. Here we define success as achieving a solution quality of 95%. d SMTJ cross-bar array, which contains a row decoder, SMTJs, select transistors and read sense amplifiers (RSA). BL represents bit line, WL represents word line, and Vin, Vout and Vdd represent the input voltage, output voltage and supply voltage of the RSA. e Circuit of one RSA, which contains a current mirror, a voltage equalization circuit (VEC, with a control signal EQ that initializes the voltages at the Q and QB points, under a reference voltage of Vdd/2), a voltage sense amplifier (VSA, with a control signal SEN), a reference resistance ( \(R_{\mathrm{ref}}=\frac{1}{2}(R_{\mathrm{ap}}+R_{\mathrm{p}})\) , where \(R_{\mathrm{ap}}\) and \(R_{\mathrm{p}}\) represent the SMTJ’s resistance in the AP and P states, respectively), and control transistors. f Signals for writing/reading two adjacent SMTJ cells on one BL, selected by WL0 and WL1 in sequence. All signals are defined in e and f .

We also propose a cross-bar architecture for large-scale Ising computer implementation, which can be integrated by using modern MRAM and CMOS technologies. The core part of this architecture consists of SMTJ bit cells organized as a cross-bar array, integrated with row decoders and read sense amplifiers (RSA), as shown in Fig. 5d . Each SMTJ bit cell contains one select transistor and one SMTJ (1T1SMTJ); the gate of the select transistor is driven by a word line (WL), and the sources of all bit cells are connected to ground. Each bit line is assigned an RSA. The current flowing through the SMTJ can be continuously adjusted by Vin of the RSA, and the state of the SMTJ can be read by the RSA at the same time. Figure 5e illustrates the circuit of the RSA, in which two clamp transistors control the current flow through the bit cell path and the reference path by the gate voltage (Vin), and a current mirror is used to guarantee the same current in the two paths. Different voltages then appear at the Q and QB points depending on whether the resistance of the SMTJ is higher or lower than the reference resistor (Rref). When the voltage sense amplifier (VSA) is enabled, the voltages at the Q and QB points are sensed, allowing the SMTJ state to be determined as either Vdd (P state) or 0 V (AP state). In particular, a voltage equalization circuit (VEC) is designed for initializing the VSA to avoid incorrect readout. Electrical coupling through a resistance change 43 is evaluated to have negligible effects (details in Supplementary Note 11 ). Figure 5f shows the signals used to control and read bit cells. In phase 0 (PH0), one row of SMTJs is selected by WL, and Vin prepared by the peripheral circuit is applied to the corresponding RSA. EQ is set high to initialize Q, QB and Vout to Vdd/2. In phase 1 (PH1), the SMTJ fluctuates from the falling edge to the next rising edge of EQ. Finally, in phase 2 (PH2), the RSAs read the data of one row in parallel at the falling edge of SEN. After the first row has been retrieved, the partial sum starts to be computed. Meanwhile, the same process for the second row can be started, and so on. To avoid reading the previous state, the duration of PH1 should be comparable with the retention time of the SMTJ, which limits the main frequency of the system (see details in Supplementary Note 11 ).

We compare our system with other state-of-the-art Ising solvers, including a CMOS annealer (Intel Core i7 processor) 7 , a quantum annealer (D-Wave 2000Q) 16 , 17 , CIM with FPGA 26 , memristor Hopfield neural networks (mem-HNN) 44 , and phase-transition nano-oscillators (PTNO) 28 , in solving the 4761-node TSP70, as shown in Table 1 . We use experimental data from the literature for benchmarking, and two kinds of SMTJs for comparison. One is our perpendicular-anisotropy SMTJ device, and the other is a recently reported in-plane-anisotropy SMTJ with a retention time of 8 ns 45 , 46 . The major attributes are the main frequency (defined as 1/iteration time), power, time-to-solution, and energy efficiency (defined as solutions per second per watt). As quantum computers, CIM, mem-HNN, and PTNO have only demonstrated ~100-node max-cut problems, we estimate their time-to-solution for TSP70 by assuming that the algorithm and the total number of spins needed to find a near-optimal solution are the same as in our work (details in Supplementary Note 13 ). Here, we set the 80-spin Ising computer as a standard and fix the number of iterations at 400,000 for a good solution to TSP70. Only the Ising computing parts are included in the power consumption.

In Table 1 , although the main frequency of the CPU is the highest among all candidates, its energy efficiency is lower than that of our SMTJ-based approach. This is due to the redundant logic and the data transfer delay between the memory and PEs in a conventional von Neumann architecture. The SMTJ-based approach currently outperforms the quantum annealer in both power consumption and time-to-solution. The power of the quantum annealer is huge and needs to be optimized further for real applications. CIM is another promising architecture with a fast speed and acceptable power consumption. Current CIM systems are proof-of-concept systems that are not yet optimized for energy efficiency. Mem-HNN has a relatively fast speed assuming 180-nm CMOS technology. However, the required number of devices is large, which limits the integration density. The PTNO approach uses capacitors or resistors to mimic spin coupling, and its main frequency would be limited by the system scale and parasitic effects. It is reported that the ideal main frequency would decrease from 500 to 87 MHz when the system scale increases from 8 nodes to 100 nodes 28 . Our SMTJ-based Ising computer outperforms the other approaches with a low power consumption of 0.64 mW (details in Supplementary Note 13 ).

We experimentally demonstrate perpendicular MTJs with a retention time of ~0.1 ms and solve TSP70 Ising problems at an energy efficiency of 39 solutions per second per watt. Furthermore, we simulate an Ising computer with 4 Kb SMTJs using 40 nm commercial CMOS technology. The simulated energy efficiency for solving TSP70 by using the same SMTJ can reach 68 solutions per second per watt. By using the reported in-plane SMTJ 45 and advanced CMOS, the system could obtain the highest energy efficiency of \(5.4\times 10^{3}\) solutions per second per watt, which is an improvement of several orders of magnitude over other approaches. This result suggests that an SMTJ-based Ising computer can be a good candidate for solving dense Ising problems in a highly energy-efficient and fast way.
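
The experimental efficiency figure can be cross-checked from numbers quoted elsewhere in the text. The sketch below assumes that time-to-solution is simply the fixed iteration count (400,000) times the 0.1 ms iteration time, and that energy efficiency is the reciprocal of time-to-solution times power. Whether the benchmark was computed exactly this way is not stated here, so treat this as a consistency check rather than the authors' procedure.

```python
iterations = 400_000          # iteration count fixed for TSP70 in the benchmark
iteration_time_s = 0.1e-3     # 0.1 ms per iteration (10 kHz main frequency)
power_w = 0.64e-3             # reported power consumption, 0.64 mW

time_to_solution_s = iterations * iteration_time_s        # 40 s
energy_per_solution_j = time_to_solution_s * power_w      # 0.0256 J
efficiency = 1.0 / energy_per_solution_j                  # solutions per second per watt
```

The result is about 39 solutions per second per watt, matching the figure quoted for the perpendicular SMTJ system.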

In summary, we have experimentally demonstrated an intrinsic all-to-all Ising computer based on 80 SMTJs and solved a 9-city TSP with the optimal solution. Furthermore, a compression strategy based on CTSP and GP is proposed to experimentally solve a 4761-node 70-city TSP on an 80-node system with a near-optimum solution as well as ultra-low energy consumption. A cross-bar architecture is then proposed for large-scale Ising computers, and a 200-city TSP task is simulated. Our system provides a feasible route to fast, energy-efficient, and scalable Ising computing schemes for solving NP-hard problems.
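The node counts quoted here are consistent with the standard Ising encoding of TSP in which one city is fixed as the start, leaving an (N − 1) × (N − 1) grid of city/position spins. The sketch below assumes that encoding, which this section does not restate; `tsp_spin_count` and `fits_on_hardware` are hypothetical helpers.

```python
def tsp_spin_count(n_cities: int) -> int:
    """Spins needed for an N-city TSP when one city is fixed as the start.

    Assumes the (N - 1) x (N - 1) city/position encoding.
    """
    if n_cities < 2:
        raise ValueError("a tour needs at least two cities")
    return (n_cities - 1) ** 2


def fits_on_hardware(n_cities: int, n_nodes: int = 80) -> bool:
    """True if the uncompressed problem fits on an n_nodes Ising machine."""
    return tsp_spin_count(n_cities) <= n_nodes


if __name__ == "__main__":
    for n in (9, 10, 70):
        print(n, tsp_spin_count(n), fits_on_hardware(n))
```

Under this assumption a 9-city problem needs 64 spins and fits on 80 nodes directly, whereas a 70-city problem needs 4761 spins, which is why a compression strategy is required to run it on the same hardware.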

Sample growth and device fabrication

Thin film samples of substrate/[W (3)/Ru (10)]₂/W (3)/Pt (3)/Co (0.25)/Pt (0.2)/[Co (0.25)/Pt (0.5)]₅/Co (0.6)/Ru (0.85)/Co (0.6)/Pt (0.2)/Co (0.3)/Pt (0.2)/Co (0.5)/W (0.3)/CoFeB (0.9)/MgO (1.1)/CoFeB (1.5)/Ta (3)/Ru (7)/Ta (5) were deposited via DC (metallic layers) and RF magnetron (MgO layer) sputtering on Si substrates with 300 nm of thermal oxide, at room temperature and a base pressure of less than \(2\times {10}^{-8}\) Torr. The numbers in parentheses are thicknesses in nanometers. To fabricate the superparamagnetic tunnel junctions, bottom electrode structures with a width of 10 µm were first patterned via photolithography and Ar ion milling. MTJ pillar structures with a diameter of ~50 nm, chosen to obtain superparamagnetic behavior, were patterned by e-beam lithography. The Si₃N₄ encapsulation layer was deposited in situ by RF magnetron sputtering after ion milling, without breaking vacuum. Top electrode structures with a width of 10 µm were then patterned via photolithography, and top electrodes of Ta (5 nm)/Cu (40 nm) were deposited by DC magnetron sputtering.

MTJ characterization by probe station

The setup includes a source meter (Keithley 2400) for supplying DC bias currents and a data acquisition card (NI-DAQmx USB-6363) for the read operation. A single SMTJ operation cycle comprises two steps (i.e., bias and read). A small DC input current with an amplitude of 1–20 μA is applied to the SMTJ. Simultaneously, the DAQ card reads the voltage signal across the SMTJ at a maximum sampling rate of 2 MHz. The MTJ switching probability varies with the amplitude of the applied current. The retention time of the MTJ is determined from random telegraph noise measurements over 250 ms. The expectation value of the event time τ is determined by fitting an exponential function to the experimental results.
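The exponential fit described above can be illustrated on synthetic data. This sketch assumes that dwell times in one state follow a single exponential distribution and fits the empirical survival curve S(t) = exp(−t/τ) by least squares on ln S. The helper names, the event count, and the synthetic data are illustrative; they are not the measured traces or the authors' analysis code.

```python
import math
import random


def simulate_dwell_times(tau_s, n_events, seed=0):
    """Draw synthetic dwell times from an exponential distribution with mean tau_s."""
    rng = random.Random(seed)
    return [rng.expovariate(1.0 / tau_s) for _ in range(n_events)]


def fit_retention_time(dwell_times):
    """Estimate tau by fitting S(t) = exp(-t / tau) to the empirical survival curve.

    The fit is a least-squares line through the origin on ln S(t) versus t.
    """
    times = sorted(dwell_times)
    n = len(times)
    if n < 2:
        raise ValueError("need at least two dwell times")
    # After the k-th shortest dwell (0-based), n - k - 1 events are still "alive".
    # The longest dwell is dropped because its survival fraction is zero.
    xs = times[:-1]
    ys = [math.log((n - k - 1) / n) for k in range(n - 1)]
    slope = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return -1.0 / slope


if __name__ == "__main__":
    true_tau = 1e-4  # ~0.1 ms, the order of magnitude quoted in the text
    dwells = simulate_dwell_times(true_tau, n_events=2500)
    print(f"fitted tau = {fit_retention_time(dwells):.3e} s")
```

For a real trace, the dwell times would first be extracted by thresholding the sampled voltage and measuring the intervals between transitions, separately for each resistance state.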

80 SMTJ arrays and peripheral circuits are integrated on a 12 cm × 15 cm PCB, controlled by an MCU (Arduino Mega 2560 Rev3). Four 12-bit rail-to-rail DACs (AD5381) with 160 output channels in total are used to generate analog DC inputs for the PE and comparator arrays. Half of the DAC output channels provide stimulation to the gate terminals of the NMOS transistors (2N7002DW-G), and the others provide reference voltages to the comparators (AD8694). The drain voltages of the NMOS transistors are compared with the reference voltages, and the outputs are generated in parallel. The outputs of the comparator arrays are read by the MCU through four multiplexers (FST16233) and are then used to calculate new inputs for the DACs. The supply voltages of the PCB and the SMTJs are 5 V and 0.8 V, respectively. The value of the resistors in each computing unit can be chosen to adjust the center of the sigmoidal curves.
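The feedback loop described above (read the comparator outputs, compute new inputs, write them to the DACs) can be emulated in software. The sketch assumes the common probabilistic-bit model, in which each unit takes the value +1 with a probability given by a sigmoid of its local field. In the hardware, that stochastic sigmoid comes from the SMTJ and comparator rather than from a pseudo-random generator. The function names, the annealing schedule, and the coupling values are illustrative and are not the authors' firmware.

```python
import math
import random


def ising_energy(J, h, m):
    """E = -sum_{i<j} J[i][j] m_i m_j - sum_i h[i] m_i for spins m_i in {-1, +1}."""
    n = len(m)
    pair = sum(J[i][j] * m[i] * m[j] for i in range(n) for j in range(i + 1, n))
    field = sum(h[i] * m[i] for i in range(n))
    return -(pair + field)


def pbit_sweep(J, h, m, beta, rng):
    """Update every unit once, in order, from its sigmoidal response."""
    n = len(m)
    for i in range(n):
        # Local field: what the controller would compute from the read-out states.
        local = h[i] + sum(J[i][j] * m[j] for j in range(n) if j != i)
        # Probability of +1; tanh form avoids overflow for large beta * local.
        p_up = 0.5 * (1.0 + math.tanh(beta * local))
        m[i] = 1 if rng.random() < p_up else -1
    return m


def anneal(J, h, betas, seed=0):
    """Run one sweep per inverse temperature in `betas`, starting from random spins."""
    rng = random.Random(seed)
    m = [rng.choice((-1, 1)) for _ in h]
    for beta in betas:
        pbit_sweep(J, h, m, beta, rng)
    return m


if __name__ == "__main__":
    # Toy all-to-all ferromagnet with 4 spins (assumed couplings, not a TSP instance).
    J = [[0 if i == j else 1 for j in range(4)] for i in range(4)]
    h = [0, 0, 0, 0]
    betas = [0.1 * (k + 1) for k in range(50)]  # linear ramp from 0.1 to 5.0
    spins = anneal(J, h, betas)
    print(spins, ising_energy(J, h, spins))
```

Raising `beta` over the run plays the role of annealing: early sweeps explore freely, while late sweeps lock the spins into a low-energy configuration.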

Data availability

The data generated during this study are available within the article and the Supplementary Information file. Source data are provided with this paper.

Code availability

The code that supports this study is available from the corresponding author upon request.

References

1. Theis, T. N. & Wong, H. S. P. The End of Moore’s Law: A New Beginning for Information Technology. Comput. Sci. Eng. 19, 41–50 (2017).

2. Shim, Y., Jaiswal, A. & Roy, K. Ising computation based combinatorial optimization using spin-Hall effect (SHE) induced stochastic magnetization reversal. J. Appl. Phys. 121, 193902 (2017).

3. Tindell, K. W., Burns, A. & Wellings, A. J. Allocating hard real-time tasks: An NP-Hard problem made easy. J. Real.-Time Syst. 4, 145–165 (1992).

4. Borders, W. A. et al. Integer factorization using stochastic magnetic tunnel junctions. Nature 573, 390–393 (2019).

5. Tatsumura, K., Hidaka, R., Yamasaki, M., Sakai, Y. & Goto, H. A Currency Arbitrage Machine Based on the Simulated Bifurcation Algorithm for Ultrafast Detection of Optimal Opportunity. In 2020 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2020). https://doi.org/10.1109/ISCAS45731.2020.9181114.

6. Cohen, E., Carmi, M., Heiman, R., Hadar, O. & Cohen, A. Image restoration via ising theory and automatic noise estimation. In 2013 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB) 1–5 (IEEE, 2013). https://doi.org/10.1109/BMSB.2013.6621708.

7. Zhou, A.-H. et al. Traveling-Salesman-Problem Algorithm Based on Simulated Annealing and Gene-Expression Programming. Information 10, 7 (2018).

8. Garza-Santisteban, F. et al. A Simulated Annealing Hyper-heuristic for Job Shop Scheduling Problems. In 2019 IEEE Congress on Evolutionary Computation (CEC) 57–64 (IEEE, 2019). https://doi.org/10.1109/CEC.2019.8790296.

9. Lucas, A. Ising formulations of many NP problems. Front. Physics 2, 5 (2014).

10. Albash, T. & Lidar, D. A. Adiabatic quantum computation. Rev. Mod. Phys. 90, 015002 (2018).

11. Dickson, N. G. & Amin, M. H. S. Does Adiabatic Quantum Optimization Fail for NP-Complete Problems? Phys. Rev. Lett. 106, 050502 (2011).

12. Heim, B., Ronnow, T. F., Isakov, S. V. & Troyer, M. Quantum versus classical annealing of Ising spin glasses. Science 348, 215–217 (2015).

13. Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363 (1998).

14. Martoňák, R., Santoro, G. E. & Tosatti, E. Quantum annealing of the traveling-salesman problem. Phys. Rev. E 70, 057701 (2004).

15. Okuyama, T., Hayashi, M. & Yamaoka, M. An Ising Computer Based on Simulated Quantum Annealing by Path Integral Monte Carlo Method. In 2017 IEEE International Conference on Rebooting Computing (ICRC) 1–6 (IEEE, 2017). https://doi.org/10.1109/ICRC.2017.8123652.

16. Hamerly, R. et al. Experimental investigation of performance differences between Coherent Ising Machines and a quantum annealer. Sci. Adv. 5, eaau0823 (2019).

17. Johnson, M. W. et al. Quantum annealing with manufactured spins. Nature 473, 194–198 (2011).

18. Yamaoka, M. et al. A 20k-Spin Ising Chip to Solve Combinatorial Optimization Problems With CMOS Annealing. IEEE J. Solid State Circuits 51, 303–309 (2016).

19. Yamaoka, M. et al. 24.3 20k-spin Ising chip for combinational optimization problem with CMOS annealing. In 2015 IEEE International Solid-State Circuits Conference - (ISSCC) Digest of Technical Papers 1–3 (IEEE, 2015). https://doi.org/10.1109/ISSCC.2015.7063111.

20. Davendra, D., Metlicka, M. & Bialic-Davendra, M. CUDA Accelerated 2-OPT Local Search for the Traveling Salesman Problem. In Novel Trends in the Traveling Salesman Problem (eds. Davendra, D. & Bialic-Davendra, M.) (IntechOpen, 2020). https://doi.org/10.5772/intechopen.93125.

21. Tatsumura, K., Dixon, A. R. & Goto, H. FPGA-Based Simulated Bifurcation Machine. In 2019 29th International Conference on Field Programmable Logic and Applications (FPL) 59–66 (IEEE, 2019). https://doi.org/10.1109/FPL.2019.00019.

22. Tatsumura, K., Yamasaki, M. & Goto, H. Scaling out Ising machines using a multi-chip architecture for simulated bifurcation. Nat. Electron 4, 208–217 (2021).

23. Mathew, S. K. et al. μRNG: A 300–950 mV, 323 Gbps/W All-Digital Full-Entropy True Random Number Generator in 14 nm FinFET CMOS. IEEE J. Solid-State Circuits 51, 1695–1704 (2016).

24. Pervaiz, A. Z., Sutton, B. M., Ghantasala, L. A. & Camsari, K. Y. Weighted p-Bits for FPGA Implementation of Probabilistic Circuits. IEEE Trans. Neural Netw. Learn. Syst. 30, 1920–1926 (2019).

25. McMahon, P. L. et al. A fully programmable 100-spin coherent Ising machine with all-to-all connections. Science 354, 614–617 (2016).

26. Inagaki, T. et al. A coherent Ising machine for 2000-node optimization problems. Science 354, 603–606 (2016).

27. Sutton, B., Camsari, K. Y., Behin-Aein, B. & Datta, S. Intrinsic optimization using stochastic nanomagnets. Sci. Rep. 7, 44370 (2017).

28. Dutta, S. et al. An Ising Hamiltonian solver based on coupled stochastic phase-transition nano-oscillators. Nat. Electron 4, 502–512 (2021).

29. Sharmin, S., Shim, Y. & Roy, K. Magnetoelectric oxide based stochastic spin device towards solving combinatorial optimization problems. Sci. Rep. 7, 11276 (2017).

30. Faria, R., Camsari, K. Y. & Datta, S. Implementing Bayesian networks with embedded stochastic MRAM. AIP Adv. 8, 045101 (2018).

31. Zand, R., Camsari, K. Y., Datta, S. & DeMara, R. F. Composable Probabilistic Inference Networks Using MRAM-based Stochastic Neurons. J. Emerg. Technol. Comput. Syst. 15, 1–22 (2019).

32. Choi, V. Minor-embedding in adiabatic quantum computation: I. The parameter setting problem. Quantum Inf. Process 7, 193–209 (2008).

33. Sugie, Y. et al. Minor-embedding heuristics for large-scale annealing processors with sparse hardware graphs of up to 102,400 nodes. Soft Comput 25, 1731–1749 (2021).

34. Cai, J., Macready, W. G. & Roy, A. A practical heuristic for finding graph minors. Preprint at http://arxiv.org/abs/1406.2741 (2014).

35. Chaves-O’Flynn, G. D., Wolf, G., Sun, J. Z. & Kent, A. D. Thermal Stability of Magnetic States in Circular Thin-Film Nanomagnets with Large Perpendicular Magnetic Anisotropy. Phys. Rev. Appl. 4, 024010 (2015).

36. Mitra, D., Romeo, F. & Sangiovanni-Vincentelli, A. Convergence and finite-time behavior of simulated annealing. Adv. Appl. Probab. 18, 747–771 (1986).

37. Mills, K., Ronagh, P. & Tamblyn, I. Finding the ground state of spin Hamiltonians with reinforcement learning. Nat. Mach. Intell. 2, 509–517 (2020).

38. MP-TESTDATA - The TSPLIB Symmetric Traveling Salesman Problem Instances. http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/tsp/ (2013).

39. Dan, A., Shimizu, R., Nishikawa, T., Bian, S. & Sato, T. Clustering Approach for Solving Traveling Salesman Problems via Ising Model Based Solver. In 2020 57th ACM/IEEE Design Automation Conference (DAC) 1–6 (IEEE, 2020). https://doi.org/10.1109/DAC18072.2020.9218695.

40. Ezugwu, A. E.-S., Adewumi, A. O. & Frîncu, M. E. Simulated annealing based symbiotic organisms search optimization algorithm for traveling salesman problem. Expert Syst. Appl. 77, 189–210 (2017).

41. Mohsen, A. M. Annealing Ant Colony Optimization with Mutation Operator for Solving TSP. Comput. Intell. Neurosci. 2016, 1–13 (2016).

42. Wang, J., Ersoy, O. K., He, M. & Wang, F. Multi-offspring genetic algorithm and its application to the traveling salesman problem. Appl. Soft Comput. 43, 415–423 (2016).

43. Talatchian, P. et al. Mutual control of stochastic switching for two electrically coupled superparamagnetic tunnel junctions. Phys. Rev. B 104, 054427 (2021).

44. Cai, F. et al. Power-efficient combinatorial optimization using intrinsic noise in memristor Hopfield neural networks. Nat. Electron 3, 409–418 (2020).

45. Hayakawa, K. et al. Nanosecond Random Telegraph Noise in In-Plane Magnetic Tunnel Junctions. Phys. Rev. Lett. 126, 117202 (2021).

46. Safranski, C. et al. Demonstration of Nanosecond Operation in Stochastic Magnetic Tunnel Junctions. Nano Lett. 21, 2040–2045 (2021).

Acknowledgements

This work was supported by the National Research Foundation (NRF), Prime Minister’s Office, Singapore, under its Competitive Research Programme (NRF-000214-00 to H.Y.), the Advanced Research and Technology Innovation Center (ARTIC to H.Y.), the National University of Singapore under Grant (project number: A-0005947-19-00 to H.Y.), and the Ministry of Education, Singapore, under Tier 2 (T2EP50123-0025 to H.Y.). We thank Yuqi Su and Chne-Wuen Tsai from the National University of Singapore and Zhi-Da Song from Peking University for useful discussions.

Author information

Authors and affiliations.

Department of Electrical and Computer Engineering, National University of Singapore, Singapore, Singapore

Jia Si, Shuhan Yang, Yunuo Cen, Jiaer Chen, Yingna Huang, Zhaoyang Yao, Dong-Jun Kim, Kaiming Cai, Jerald Yoo, Xuanyao Fong & Hyunsoo Yang

Key Laboratory for the Physics and Chemistry of Nanodevices and Center for Carbon-based Electronics, School of Electronics, Peking University, Beijing, China

Contributions

J.S. and H.Y. conceived and designed the experiments. J.S. designed, fabricated, and coded the hardware system. D.K. and S.Y. fabricated the devices. J.S., S.Y., and K.C. performed device measurements. Z.Y. bonded the components on the PCB. J.S. designed the SMTJ-based Ising system. J.S., J.C., Y.C., Y.H., and X.F. developed the optimization algorithm and performed simulations. J.S., S.Y., Y.C., J.Y., X.F., and H.Y. analyzed the data. J.S. and H.Y. wrote the manuscript. H.Y. proposed and supervised this work. All authors discussed the results and revised the manuscript.

Corresponding author

Correspondence to Hyunsoo Yang .

Ethics declarations

Competing interests.