Understanding & Using Time Travel

Snowflake Time Travel enables accessing historical data (i.e. data that has been changed or deleted) at any point within a defined period. It serves as a powerful tool for performing the following tasks:

Restoring data-related objects (tables, schemas, and databases) that might have been accidentally or intentionally deleted.

Duplicating and backing up data from key points in the past.

Analyzing data usage/manipulation over specified periods of time.

Introduction to Time Travel

Using Time Travel, you can perform the following actions within a defined period of time:

Query data in the past that has since been updated or deleted.

Create clones of entire tables, schemas, and databases at or before specific points in the past.

Restore tables, schemas, and databases that have been dropped.

When querying historical data in a table or non-materialized view, the current table or view schema is used. For more information, see Usage notes for AT | BEFORE.

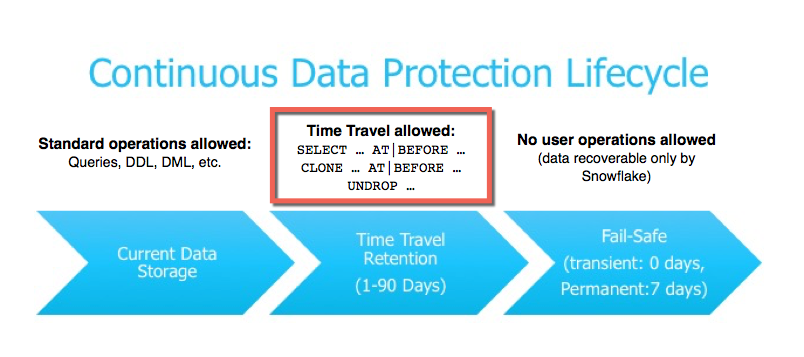

After the defined period of time has elapsed, the data is moved into Snowflake Fail-safe and these actions can no longer be performed.

A long-running Time Travel query will delay moving any data and objects (tables, schemas, and databases) in the account into Fail-safe, until the query completes.

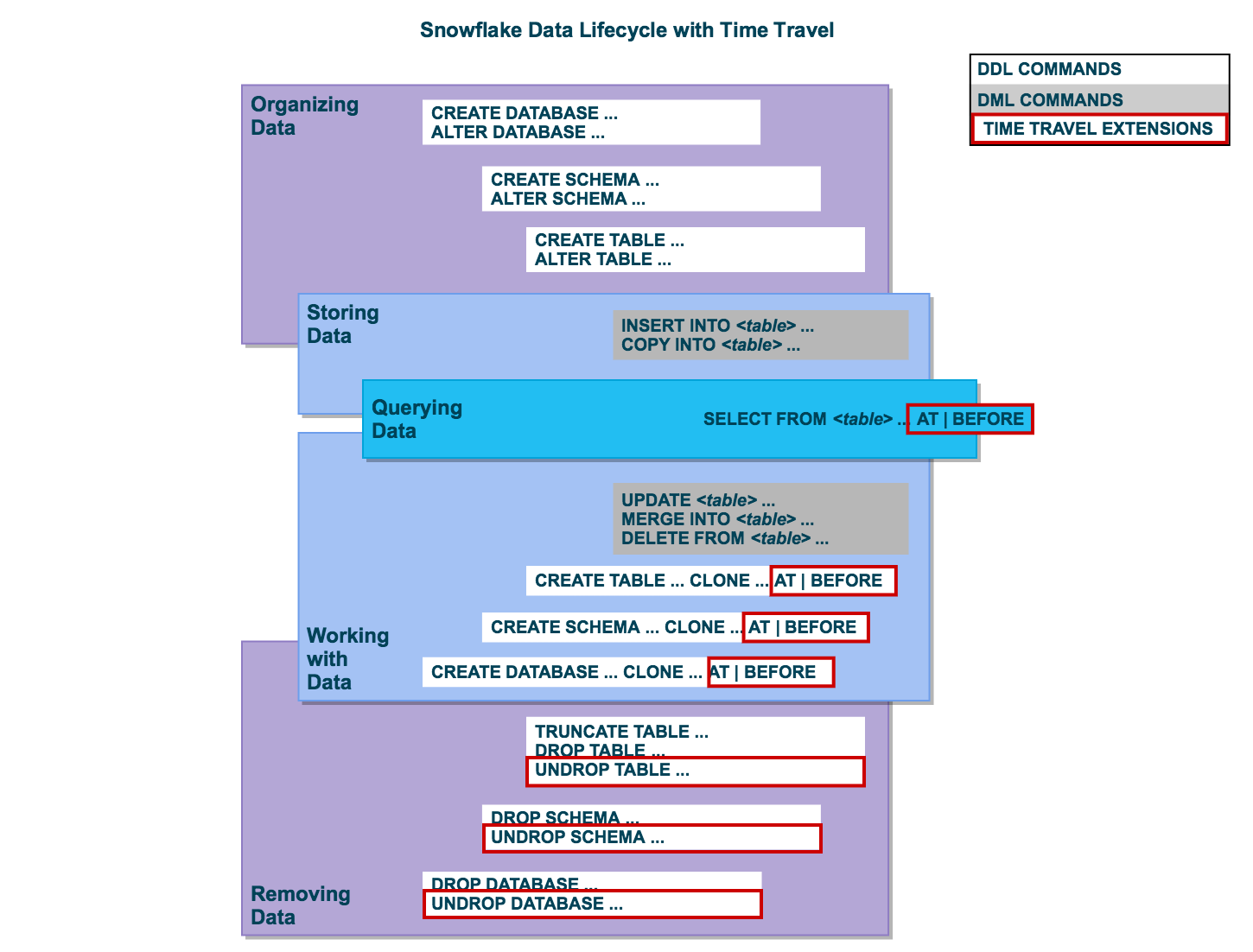

Time Travel SQL Extensions

To support Time Travel, the following SQL extensions have been implemented:

AT | BEFORE clause which can be specified in SELECT statements and CREATE … CLONE commands (immediately after the object name). The clause uses one of the following parameters to pinpoint the exact historical data you wish to access:

TIMESTAMP

OFFSET (time difference in seconds from the present time)

STATEMENT (identifier for statement, e.g. query ID)

UNDROP command for tables, schemas, and databases.

Data Retention Period

A key component of Snowflake Time Travel is the data retention period.

When data in a table is modified, including deletion of data or dropping an object containing data, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved and, therefore, Time Travel operations (SELECT, CREATE … CLONE, UNDROP) can be performed on the data.

The standard retention period is 1 day (24 hours) and is automatically enabled for all Snowflake accounts:

For Snowflake Standard Edition, the retention period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. databases, schemas, and tables).

For Snowflake Enterprise Edition (and higher):

For transient databases, schemas, and tables, the retention period can be set to 0 (or unset back to the default of 1 day). The same is also true for temporary tables.

For permanent databases, schemas, and tables, the retention period can be set to any value from 0 up to 90 days.

A retention period of 0 days for an object effectively disables Time Travel for the object.

When the retention period ends for an object, the historical data is moved into Snowflake Fail-safe:

Historical data is no longer available for querying.

Past objects can no longer be cloned.

Past objects that were dropped can no longer be restored.

To specify the data retention period for Time Travel:

The DATA_RETENTION_TIME_IN_DAYS object parameter can be used by users with the ACCOUNTADMIN role to set the default retention period for your account.

The same parameter can be used to explicitly override the default when creating a database, schema, or individual table.

The data retention period for a database, schema, or table can be changed at any time.

The MIN_DATA_RETENTION_TIME_IN_DAYS account parameter can be set by users with the ACCOUNTADMIN role to set a minimum retention period for the account. This parameter does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. However, it may change the effective data retention time. When this parameter is set at the account level, the effective minimum data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).
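As a minimal sketch of how the two account-level parameters interact, the statements below set a default and a minimum and then create a table that requests a shorter period. The table name `retention_demo` and the chosen values are hypothetical, and values above 1 day assume Snowflake Enterprise Edition (or higher).

```sql
-- Both account-level parameters require the ACCOUNTADMIN role.
USE ROLE ACCOUNTADMIN;

-- Account-wide default retention period, in days.
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- Account-wide minimum retention period, in days.
ALTER ACCOUNT SET MIN_DATA_RETENTION_TIME_IN_DAYS = 5;

-- Hypothetical table that requests 2 days.
-- Its effective retention is MAX(2, 5) = 5 days.
CREATE TABLE retention_demo (id NUMBER) DATA_RETENTION_TIME_IN_DAYS = 2;
```

The table's own DATA_RETENTION_TIME_IN_DAYS value stays at 2; only the effective retention period is raised by the account minimum.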

Enabling and Disabling Time Travel

No tasks are required to enable Time Travel. It is automatically enabled with the standard, 1-day retention period.

However, you may wish to upgrade to Snowflake Enterprise Edition to enable configuring longer data retention periods of up to 90 days for databases, schemas, and tables. Note that extended data retention requires additional storage, which will be reflected in your monthly storage charges. For more information about storage charges, see Storage Costs for Time Travel and Fail-safe.

Time Travel cannot be disabled for an account. A user with the ACCOUNTADMIN role can set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that all databases (and subsequently all schemas and tables) created in the account have no retention period by default; however, this default can be overridden at any time for any database, schema, or table.

A user with the ACCOUNTADMIN role can also set the MIN_DATA_RETENTION_TIME_IN_DAYS at the account level. This parameter setting enforces a minimum data retention period for databases, schemas, and tables. Setting MIN_DATA_RETENTION_TIME_IN_DAYS does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. It may, however, change the effective data retention period for objects. When MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).

Time Travel can be disabled for individual databases, schemas, and tables by specifying DATA_RETENTION_TIME_IN_DAYS with a value of 0 for the object. However, if DATA_RETENTION_TIME_IN_DAYS is set to a value of 0, and MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level and is greater than 0, the higher value setting takes precedence.

Before setting DATA_RETENTION_TIME_IN_DAYS to 0 for any object, consider whether you wish to disable Time Travel for the object, particularly as it pertains to recovering the object if it is dropped. When an object with no retention period is dropped, you will not be able to restore the object.

As a general rule, we recommend maintaining a value of (at least) 1 day for any given object.

If the Time Travel retention period is set to 0, any modified or deleted data is moved into Fail-safe (for permanent tables) or deleted (for transient tables) by a background process. This may take a short time to complete. During that time, the TIME_TRAVEL_BYTES in table storage metrics might contain a non-zero value even when the Time Travel retention period is 0 days.
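The following sketch disables Time Travel for one table and then checks its storage metrics. The table name `my_table` is hypothetical, and the query assumes your role can read the SNOWFLAKE.ACCOUNT_USAGE.TABLE_STORAGE_METRICS view, which can lag behind the current state.

```sql
-- Disable Time Travel for a single (hypothetical) table.
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- TIME_TRAVEL_BYTES may remain non-zero until the background process finishes.
SELECT table_catalog,
       table_schema,
       table_name,
       time_travel_bytes,
       failsafe_bytes
FROM snowflake.account_usage.table_storage_metrics
WHERE table_name = 'MY_TABLE';
```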

Specifying the Data Retention Period for an Object

By default, the maximum retention period is 1 day (i.e. one 24-hour period). With Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

When creating a table, schema, or database, the account default can be overridden using the DATA_RETENTION_TIME_IN_DAYS parameter in the command.

If a retention period is specified for a database or schema, the period is inherited by default for all objects created in the database/schema.

A minimum retention period can be set on the account using the MIN_DATA_RETENTION_TIME_IN_DAYS parameter. If this parameter is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).
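For illustration, the statements below override the account default at creation time and rely on inheritance for one table. All object names are hypothetical, and the values above 1 day assume Enterprise Edition (or higher) and permanent objects.

```sql
-- Database-level override of the account default.
CREATE DATABASE sales_db DATA_RETENTION_TIME_IN_DAYS = 30;

-- Schema-level override; objects created in this schema inherit 7 days.
CREATE SCHEMA sales_db.staging DATA_RETENTION_TIME_IN_DAYS = 7;

-- No explicit value: inherits 7 days from the schema.
CREATE TABLE sales_db.staging.orders (order_id NUMBER, order_date DATE);

-- Explicit value: overrides the inherited 7 days for this table only.
CREATE TABLE sales_db.staging.audit_log (event VARCHAR)
  DATA_RETENTION_TIME_IN_DAYS = 90;
```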

Changing the Data Retention Period for an Object

If you change the data retention period for a table, the new retention period impacts all data that is active, as well as any data currently in Time Travel. The impact depends on whether you increase or decrease the period:

Increasing the retention period causes the data currently in Time Travel to be retained for the longer time period.

For example, if you have a table with a 10-day retention period and increase the period to 20 days, data that would have been removed after 10 days is now retained for an additional 10 days before moving into Fail-safe.

Note that this doesn’t apply to any data that is older than 10 days and has already moved into Fail-safe.

Decreasing the retention period reduces the amount of time data is retained in Time Travel:

For active data modified after the retention period is reduced, the new shorter period applies.

For data that is currently in Time Travel:

If the data is still within the new shorter period, it remains in Time Travel. If the data is outside the new period, it moves into Fail-safe.

For example, if you have a table with a 10-day retention period and you decrease the period to 1 day, data from days 2 to 10 will be moved into Fail-safe, leaving only the data from day 1 accessible through Time Travel.

However, the process of moving the data from Time Travel into Fail-safe is performed by a background process, so the change is not immediately visible. Snowflake guarantees that the data will be moved, but does not specify when the process will complete; until the background process completes, the data is still accessible through Time Travel.

If you change the data retention period for a database or schema, the change only affects active objects contained within the database or schema. Any objects that have been dropped (for example, tables) remain unaffected.

For example, if you have a schema s1 with a 90-day retention period and table t1 is in schema s1, table t1 inherits the 90-day retention period. If you drop table s1.t1, t1 is retained in Time Travel for 90 days. Later, if you change the schema’s data retention period to 1 day, the retention period for the dropped table t1 is unchanged. Table t1 will still be retained in Time Travel for 90 days.

To alter the retention period of a dropped object, you must undrop the object, then alter its retention period.
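Continuing the s1.t1 example, a sketch of that sequence might look like the following. It assumes schema s1 is in the current database and that no other table named t1 currently exists in s1.

```sql
-- Make s1 the current schema, then restore the dropped table.
USE SCHEMA s1;
UNDROP TABLE t1;

-- Now that t1 is active again, its retention period can be changed.
ALTER TABLE t1 SET DATA_RETENTION_TIME_IN_DAYS = 1;
```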

To change the retention period for an object, use the appropriate ALTER <object> command. For example, to change the retention period for a table:
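A minimal sketch, using hypothetical object names (`mytable`, `myschema`, `mydb`) and values that assume Enterprise Edition (or higher):

```sql
-- Change the retention period for a table.
ALTER TABLE mytable SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- The same parameter can be set on a schema or a database.
ALTER SCHEMA myschema SET DATA_RETENTION_TIME_IN_DAYS = 14;
ALTER DATABASE mydb SET DATA_RETENTION_TIME_IN_DAYS = 7;
```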

Changing the retention period for your account or individual objects changes the value for all lower-level objects that do not have a retention period explicitly set. For example:

If you change the retention period at the account level, all databases, schemas, and tables that do not have an explicit retention period automatically inherit the new retention period.

If you change the retention period at the schema level, all tables in the schema that do not have an explicit retention period inherit the new retention period.

Keep this in mind when changing the retention period for your account or any objects in your account because the change might have Time Travel consequences that you did not anticipate or intend. In particular, we do not recommend changing the retention period to 0 at the account level.
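Before changing a higher-level value, it can help to check where a table's current value comes from. The sketch below uses a hypothetical table `mytable`; the `level` column of the SHOW PARAMETERS output indicates where the value was set.

```sql
-- Inspect the current value and the level at which it was set.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE mytable;

-- Remove the explicit table-level value so the table inherits again
-- from its schema, database, or the account.
ALTER TABLE mytable UNSET DATA_RETENTION_TIME_IN_DAYS;
```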

Dropped Containers and Object Retention Inheritance

Currently, when a database is dropped, the data retention period for child schemas or tables, if explicitly set to be different from the retention of the database, is not honored. The child schemas or tables are retained for the same period of time as the database.

Similarly, when a schema is dropped, the data retention period for child tables, if explicitly set to be different from the retention of the schema, is not honored. The child tables are retained for the same period of time as the schema.

To honor the data retention period for these child objects (schemas or tables), drop them explicitly before you drop the database or schema.
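As a sketch, assuming a hypothetical table `my_db.my_schema.long_retention_table` whose retention period is longer than that of its database:

```sql
-- Drop the child table first so its own retention period is honored.
DROP TABLE my_db.my_schema.long_retention_table;

-- Then drop the container; its remaining children follow the database's period.
DROP DATABASE my_db;
```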

Querying Historical Data

When any DML operations are performed on a table, Snowflake retains previous versions of the table data for a defined period of time. This enables querying earlier versions of the data using the AT | BEFORE clause.

This clause supports querying data either exactly at or immediately preceding a specified point in the table’s history within the retention period. The specified point can be time-based (e.g. a timestamp or time offset from the present) or it can be the ID for a completed statement (e.g. SELECT or INSERT).

For example:

The following query selects historical data from a table as of the date and time represented by the specified timestamp:

SELECT * FROM my_table AT(TIMESTAMP => 'Fri, 01 May 2015 16:20:00 -0700'::timestamp_tz);

The following query selects historical data from a table as of 5 minutes ago:

SELECT * FROM my_table AT(OFFSET => -60*5);

The following query selects historical data from a table up to, but not including any changes made by the specified statement:

SELECT * FROM my_table BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

If the TIMESTAMP, OFFSET, or STATEMENT specified in the AT | BEFORE clause falls outside the data retention period for the table, the query fails and returns an error.
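One common use of a historical query is comparing a past state with the current one. The sketch below, which reuses the `my_table` name from the examples above and an arbitrary one-hour offset, lists rows that existed an hour ago but no longer appear in the table in the same form.

```sql
-- Rows present one hour ago that have since been deleted or changed.
SELECT * FROM my_table AT(OFFSET => -3600)
MINUS
SELECT * FROM my_table;
```

Because MINUS compares whole rows, an updated row shows up here with its old values; this is a quick diagnostic, not a full change log.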

Cloning Historical Objects

In addition to queries, the AT | BEFORE clause can be used with the CLONE keyword in the CREATE command for a table, schema, or database to create a logical duplicate of the object at a specified point in the object’s history.

The following CREATE TABLE statement creates a clone of a table as of the date and time represented by the specified timestamp:

CREATE TABLE restored_table CLONE my_table AT(TIMESTAMP => 'Sat, 09 May 2015 01:01:00 +0300'::timestamp_tz);

The following CREATE SCHEMA statement creates a clone of a schema and all its objects as they existed 1 hour before the current time:

CREATE SCHEMA restored_schema CLONE my_schema AT(OFFSET => -3600);

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed prior to the completion of the specified statement:

CREATE DATABASE restored_db CLONE my_db BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

The cloning operation for a database or schema fails:

- If the specified Time Travel time is beyond the retention time of any current child (e.g., a table) of the entity. As a workaround for child objects that have been purged from Time Travel, use the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter of the CREATE <object> … CLONE command. For more information, see Child objects and data retention time.

- If the specified Time Travel time is at or before the point in time when the object was created.

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed four days ago, skipping any tables that have a data retention period of less than four days:

CREATE DATABASE restored_db CLONE my_db
  AT(TIMESTAMP => DATEADD(days, -4, current_timestamp)::timestamp_tz)
  IGNORE TABLES WITH INSUFFICIENT DATA RETENTION;

Dropping and Restoring Objects

Dropping Objects

When a table, schema, or database is dropped, it is not immediately overwritten or removed from the system. Instead, it is retained for the data retention period for the object, during which time the object can be restored. Once dropped objects are moved to Fail-safe, you cannot restore them.

To drop a table, schema, or database, use the following commands:

DROP TABLE

DROP SCHEMA

DROP DATABASE

After dropping an object, creating an object with the same name does not restore the object. Instead, it creates a new version of the object. The original, dropped version is still available and can be restored.

Restoring a dropped object restores the object in place (i.e. it does not create a new object).

Listing Dropped Objects

Dropped tables, schemas, and databases can be listed using the following commands with the HISTORY keyword specified:

SHOW TABLES

SHOW SCHEMAS

SHOW DATABASES

SHOW TABLES HISTORY LIKE 'load%' IN mytestdb.myschema;
SHOW SCHEMAS HISTORY IN mytestdb;
SHOW DATABASES HISTORY;

The output includes all dropped objects and an additional DROPPED_ON column, which displays the date and time when the object was dropped. If an object has been dropped more than once, each version of the object is included as a separate row in the output.

After the retention period for an object has passed and the object has been purged, it is no longer displayed in the SHOW <object_type> HISTORY output.

Restoring Objects

A dropped object that has not been purged from the system (i.e. the object is displayed in the SHOW <object_type> HISTORY output) can be restored using the following commands:

UNDROP TABLE

UNDROP SCHEMA

UNDROP DATABASE

Calling UNDROP restores the object to its most recent state before the DROP command was issued.

UNDROP TABLE mytable;
UNDROP SCHEMA myschema;
UNDROP DATABASE mydatabase;

If an object with the same name already exists, UNDROP fails. You must rename the existing object, which then enables you to restore the previous version of the object.

Access Control Requirements and Name Resolution

Similar to dropping an object, a user must have OWNERSHIP privileges for an object to restore it. In addition, the user must have CREATE privileges on the object type for the database or schema where the dropped object will be restored.

Restoring tables and schemas is only supported in the current schema or current database, even if a fully-qualified object name is specified.

Example: Dropping and Restoring a Table Multiple Times

In the following example, the mytestdb.public schema contains two tables: loaddata1 and proddata1. The loaddata1 table is dropped and recreated twice, creating three versions of the table:

- Current version

- Second (i.e. most recent) dropped version

- First dropped version

The example then illustrates how to restore the two dropped versions of the table:

- First, the current table with the same name is renamed to loaddata3. This enables restoring the most recent version of the dropped table, based on the timestamp.

- Then, the most recent dropped version of the table is restored.

- The restored table is renamed to loaddata2 to enable restoring the first version of the dropped table.

- Lastly, the first version of the dropped table is restored.

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

DROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

CREATE TABLE loaddata1 (c1 number);
INSERT INTO loaddata1 VALUES (1111), (2222), (3333), (4444);

DROP TABLE loaddata1;

CREATE TABLE loaddata1 (c1 varchar);

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | Fri, 13 May 2016 19:05:51 -0700 |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata3;

UNDROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata2;

UNDROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA2 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+


Data retention with time travel and fail-safe

This document describes time travel and fail-safe data retention for datasets. During the time travel and fail-safe periods, data that you have changed or deleted in any table in the dataset continues to be stored in case you need to recover it.

Time travel

You can access data from any point within the time travel window, which covers the past seven days by default. Time travel lets you query data that was updated or deleted, restore a table or dataset that was deleted, or restore a table that expired.
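As a minimal GoogleSQL sketch of such a query, the statement below reads a table as it existed one hour ago. The dataset and table names are hypothetical, and the chosen point in time must fall within the time travel window.

```sql
-- Read the (hypothetical) table as of one hour ago.
SELECT *
FROM `mydataset.mytable`
  FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR);
```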

Configure the time travel window

You can set the duration of the time travel window, from a minimum of two days to a maximum of seven days. Seven days is the default. You set the time travel window at the dataset level, which then applies to all of the tables within the dataset.

You can configure the time travel window to be longer in cases where it is important to have a longer time to recover updated or deleted data, and to be shorter where it isn't required. Using a shorter time travel window lets you save on storage costs when using the physical storage billing model. These savings don't apply when using the logical storage billing model.

For more information on how the storage billing model affects cost, see Billing.

How the time travel window affects table and dataset recovery

A deleted table or dataset uses the time travel window duration that was in effect at the time of deletion.

For example, if you have a time travel window duration of two days and then increase the duration to seven days, tables deleted before that change are still only recoverable for two days. Similarly, if you have a time travel window duration of five days and you reduce that duration to three days, any tables that were deleted before the change are still recoverable for five days.

Because time travel windows are set at the dataset level, you can't change the time travel window of a deleted dataset until it is undeleted.

If you reduce the time travel window duration, delete a table, and then realize that you need a longer period of recoverability for that data, you can create a snapshot of the table from a point in time prior to the table deletion. You must do this while the deleted table is still recoverable. For more information, see Create a table snapshot using time travel.

Specify a time travel window

You can use the Google Cloud console, the bq command-line tool, or the BigQuery API to specify the time travel window for a dataset.

For instructions on how to specify the time travel window for a new dataset, see Create datasets.

For instructions on how to update the time travel window for an existing dataset, see Update time travel windows.
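In addition to the tools listed above, GoogleSQL DDL exposes a `max_time_travel_hours` dataset option. The sketch below assumes a hypothetical dataset named `mydataset` and a three-day window; the value is given in hours and is expected to be a multiple of 24 between 48 (two days) and 168 (seven days).

```sql
-- Set the time travel window of an existing (hypothetical) dataset to 3 days.
ALTER SCHEMA mydataset
SET OPTIONS (max_time_travel_hours = 72);
```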

If the timestamp specifies a time outside the time travel window, or from before the table was created, then the query fails and returns an error like the following:

Time travel and row-level access

If a table has, or has had, row-level access policies, then only a table administrator can access historical data for the table.

The following Identity and Access Management (IAM) permission is required: bigquery.rowAccessPolicies.overrideTimeTravelRestrictions

The following BigQuery role provides the required permission:

The bigquery.rowAccessPolicies.overrideTimeTravelRestrictions permission can't be added to a custom role.

Run the following command to get the equivalent Unix epoch time:

Use the UNIX time returned by the preceding command (1691164834000 in this example) in the bq command-line tool. Run the following command to restore a copy of the deleted table deletedTableID to another table, restoredTable, within the same dataset, myDatasetID:

BigQuery provides a fail-safe period. During the fail-safe period, deleted data is automatically retained for an additional seven days after the time travel window, so that the data is available for emergency recovery. Data is recoverable at the table level. Data is recovered for a table from the point in time represented by the timestamp of when that table was deleted. The fail-safe period is not configurable.

You can't query or directly recover data in fail-safe storage. To recover data from fail-safe storage, contact Cloud Customer Care.

If you set your storage billing model to use physical bytes, the total storage costs you are billed for include the bytes used for time travel and fail-safe storage. If you set your storage billing model to use logical bytes, the total storage costs you are billed for do not include the bytes used for time travel or fail-safe storage. You can configure the time travel window to balance storage costs with your data retention needs.

If you use physical storage, you can see the bytes used by time travel and fail-safe by looking at the TIME_TRAVEL_PHYSICAL_BYTES and FAIL_SAFE_PHYSICAL_BYTES columns in the TABLE_STORAGE and TABLE_STORAGE_BY_ORGANIZATION views.
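A sketch of such a lookup follows. The `region-us` qualifier is an assumption; substitute the region that holds your datasets.

```sql
-- Tables ranked by the physical bytes they hold in time travel storage.
SELECT
  table_schema,
  table_name,
  time_travel_physical_bytes,
  fail_safe_physical_bytes
FROM `region-us`.INFORMATION_SCHEMA.TABLE_STORAGE
ORDER BY time_travel_physical_bytes DESC;
```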

Limitations

- Time travel only provides access to historical data for the duration of the time travel window. To preserve table data for non-emergency purposes for longer than the time travel window, use table snapshots.

- If a table has, or has previously had, row-level access policies, then time travel can only be used by table administrators. For more information, see Time travel and row-level access.

- Time travel does not restore table metadata.
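To keep a point-in-time copy beyond the time travel window, as the first limitation suggests, a table snapshot can be created from a historical version of the table. The sketch below uses hypothetical dataset and table names and an arbitrary 30-day expiration.

```sql
-- Snapshot the (hypothetical) table as it existed one hour ago
-- and keep the snapshot for 30 days.
CREATE SNAPSHOT TABLE mydataset.mytable_snapshot
CLONE mydataset.mytable
  FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR)
OPTIONS (
  expiration_timestamp = TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL 30 DAY)
);
```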

What's next

- Learn how to query and recover time travel data.

- Learn more about table snapshots.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies.

Last updated 2024-04-24 UTC.

Time Travel Debugging is now available in WinDbg Preview

- James Pinkerton

We are excited to announce that Time Travel Debugging (TTD) features are now available in the latest version of WinDbg Preview. About a month ago, we released WinDbg Preview, which provides great new debugging user experiences. We are now publicly launching a preview version of TTD for the first time and are looking forward to your feedback.

What is TTD?

Wouldn’t it be great to go back in time and fix a problem? We can’t help you go back in time to fix poor life choices, but we can help you go back in time to fix code problems.

Time Travel Debugging (TTD) is a reverse debugging solution that allows you to record the execution of an app or process, replay it both forwards and backwards and use queries to search through the entire trace. Today’s debuggers typically allow you to start at a specific point in time and only go forward. TTD improves debugging since you can go back in time to better understand the conditions that lead up to the bug. You can also replay it multiple times to learn how best to fix the problem.

TTD is as easy as 1 – 2 – 3.

- Record: Record the app or process on a machine that can reproduce the bug. This creates a Trace file (.RUN extension) which has all of the information to reproduce the bug.

- Replay: Open the Trace file in WinDbg Preview and replay the code execution both forward and backward as many times as necessary to understand the problem.

- Analyze: Run queries & commands to identify common code issues and have full access to memory and locals to understand what is going on.

Getting Started

I know you are all excited and ready to start using TTD. Here are a few things you should know to get started.

- Install: You can use TTD by installing WinDbg Preview (build 10.0.16365.1002 or greater) from the Store at https://aka.ms/WinDbgPreview if you have Windows 10 Anniversary Update or newer.

- Feedback: This is a preview release of TTD, so we are counting on your feedback as we continue to finish the product. We are using the Feedback Hub to help us prioritize what improvements to make. The Windows Insider website has a great overview on how to give good feedback at https://insider.windows.com/en-us/how-to-feedback.

- Questions: We expect you will have some questions as you work with TTD, so feel free to post them on this blog or send them in the Feedback Hub and we will do our best to answer. We’ll be posting a TTD FAQ on our blog shortly.

- Documentation: We’ve got some initial documentation at https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/time-travel-debugging-overview and will be improving our content based upon customer feedback and usage. You can give us feedback or propose edits on the docs.microsoft.com documentation by hitting “Comments” or “Edit” on any page.

- Blogs: Watch for more in-depth TTD updates and tips in the future on our team’s blog at https://blogs.msdn.microsoft.com/windbg.

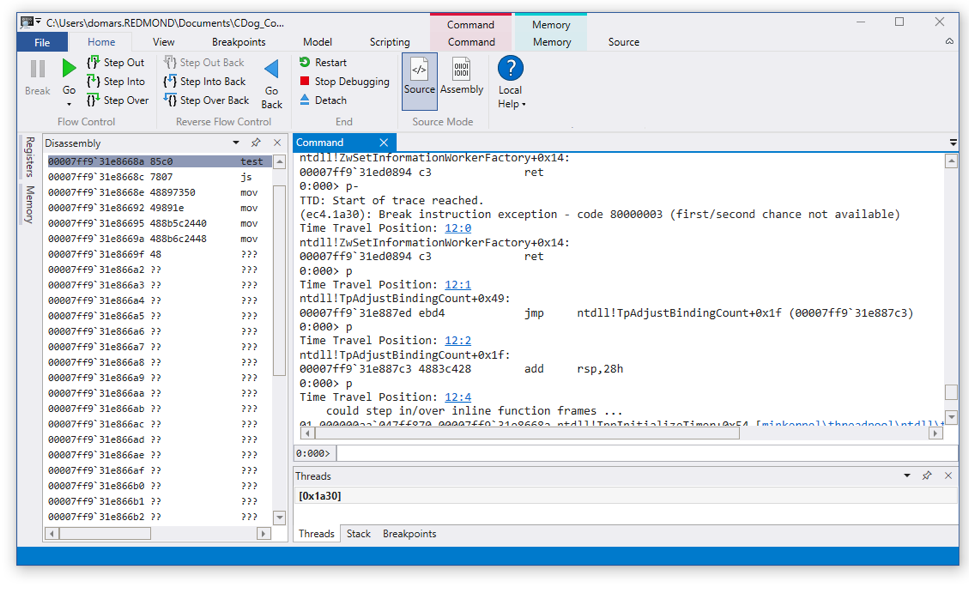

How to use TTD

You use TTD directly in the WinDbg Preview app. We have added all of the key TTD features into WinDbg Preview to provide a familiar debugging experience, which makes it intuitive to go backwards and forwards in time during your debugging session.

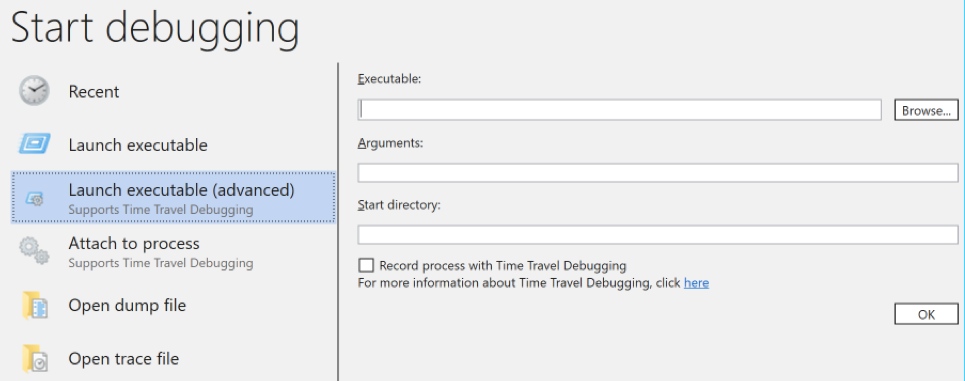

Record a Trace

WinDbg Preview makes it easy to record a trace. Simply click File >> Start Debugging and point to the app or process. You will have an option to Record during attach and launch. See https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/time-travel-debugging-overview for more information.

Replay a Trace

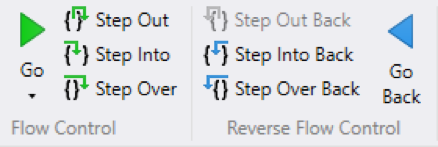

Once the Trace is complete, we automatically load and index the Trace for faster replay and memory lookups. Then simply use the WinDbg ribbon buttons or commands to step forwards and backwards through the code.

Basic TTD Commands

You can use the ribbon or enter the following TTD commands in WinDbg Preview. See https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/time-travel-debugging-overview for a complete list of TTD commands.

Final Thoughts

We are very excited to get TTD into the hands of our developers; but there are a few things to remember.

- TTD is a preview, so we will be regularly improving performance and features

- This only runs on Windows 10 since WinDbg Preview is a Store app

- See docs.microsoft.com documentation for TTD known issues and compatibility

Welcome to the world of time travel. Our goal is to improve the lives of developers by making debugging easier to increase product quality. Please send us feedback and feature requests in the Feedback Hub to let us know how we are doing!

This blog post is older than a year. The information provided below may be outdated.

Time travel debugging: It’s a blast! (from the past)

The Microsoft Security Response Center (MSRC) works to assess vulnerabilities that are externally reported to us as quickly as possible, but time can be lost if we have to confirm details of the repro steps or environment with the researcher to reproduce the vulnerability. Microsoft has made our “Time Travel Debugging” (TTD) tool publicly available to make it easy for security researchers to provide full repro, shortening investigations and potentially contributing to higher bounties (see “Report quality definitions for Microsoft’s Bug Bounty programs”). We use it internally, too; it has allowed us to find root cause for complex software issues in half the time it would take with a regular debugger.

If you’re wondering where you can get the TTD tool and how to use it, this blogpost is for you.

Understanding time travel debugging

Whether you call it “Timeless debugging”, “record-replay debugging”, “reverse-debugging”, or “time travel debugging”, it’s the same idea: the ability to record the execution of a program. Once you have this recording, you can navigate forward or backward, and you can share it with colleagues. Even better, an execution trace is a deterministic recording; everybody looking at it sees the same behavior at the same time. When a developer receives a TTD trace, they do not even need to reproduce the issue to travel in the execution trace; they can just navigate through the trace file.

There are usually three key components associated with time travel debugging:

- A recorder that you can picture as a video camera,

- A trace file that you can picture as the recording file generated by the camera,

- A replayer that you can picture as a movie player.
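To make the camera analogy concrete, here is a toy sketch of the record-and-replay idea in Python. It is not how TTD works internally; the `run`, `recording_source`, and `replay` names are made up for this illustration. The recorder logs every nondeterministic input the "program" consumes, and the replayer feeds the log back so the same execution can be reproduced without the live source.

```python
import random

def run(next_value):
    """The 'program' under observation: sums three values from an input source."""
    return sum(next_value() for _ in range(3))

# Recorder: run the program live and log every nondeterministic input it reads.
trace = []

def recording_source():
    value = random.randint(1, 100)
    trace.append(value)  # the list plays the role of the trace file
    return value

live_result = run(recording_source)

# Replayer: feed the logged inputs back instead of touching the live source.
def replay(trace_data):
    logged = iter(trace_data)
    return run(lambda: next(logged))

replayed_result = replay(trace)
print(live_result, replayed_result)
```

Because the replay reads only from the trace, anyone holding the trace gets the same result on every run, which is the property that makes a recording shareable.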

Good ol’ debuggers

Debuggers aren’t new, and the process of debugging an issue has not drastically changed for decades. The process typically works like this:

- Observing the behavior under a debugger. In this step, you recreate an environment like that of the finder of the bug. It can be as easy as running a simple proof-of-concept program on your machine and observing a bug-check, or it can be as complex as setting up an entire infrastructure with specific software configurations just to be able to exercise the code at fault. And that’s if the bug report is accurate and detailed enough to properly set up the environment.

- Understanding why the issue happened. This is where the debugger comes in. What you expect of a debugger, regardless of architecture and platform, is to be able to precisely control the execution of your target (stepping over and stepping in at various granularity levels: instruction, source-code line), set breakpoints, and edit the memory as well as the processor context. This basic set of features enables you to get the job done. The cost is usually high though: a lot of reproducing the issue over and over, a lot of stepping in, and a lot of “Oops… I should not have stepped over, let’s restart”. Wasteful and inefficient.

Whether you’re the researcher reporting a vulnerability or a member of the team confirming it, Time Travel Debugging can help the investigation to go quickly and with minimal back and forth to confirm details.

High-level overview

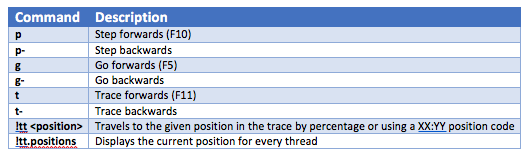

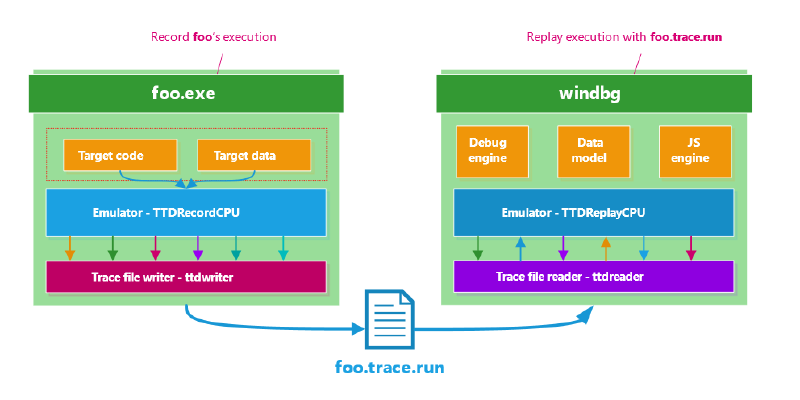

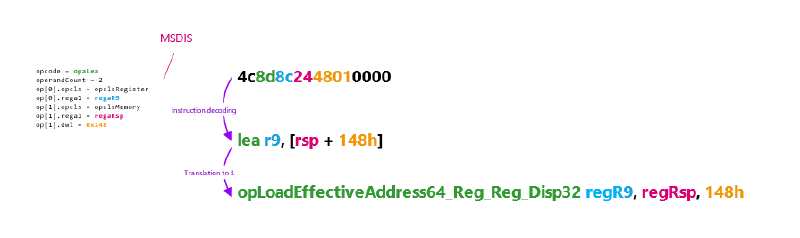

The technology that Microsoft has developed is called “TTD” for time-travel debugging. Born out of Microsoft Research around 2006 (cf. “Framework for Instruction-level Tracing and Analysis of Program Executions”), it was later improved and productized by Microsoft’s debugging team. The project relies on code emulation to record every event that replay will need to reproduce the exact same execution: the exact same sequence of instructions with the exact same inputs and outputs. The data that the emulator tracks include memory reads, register values, thread creation, module loads, etc.

Recording / Replaying

The recording software CPU, TTDRecordCPU.dll, is injected into the target process and hijacks the control flow of the threads. The emulator decodes native instructions into an internal custom intermediate language (modeled after simple RISC instructions), caches blocks, and executes them. From then on, it carries the execution of those threads forward and dispatches callbacks whenever an event happens, such as when an instruction has been translated. Those callbacks allow the trace file writer component to collect the information needed for the software CPU to replay the execution based off the trace file.

The replay software CPU, TTDReplayCPU.dll, shares most of the same codebase as the record CPU, except that instead of reading the target memory it loads data directly from the trace file. This allows you to replay with full fidelity the execution of a program without needing to run the program.

The trace file

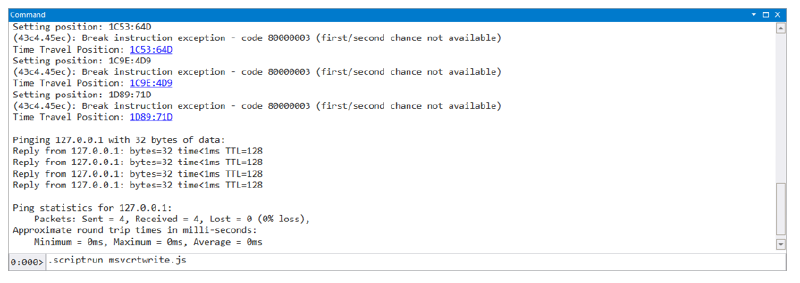

The trace file is a regular file on your file system that ends with the ‘run’ extension. The file uses a custom file format and compression to optimize the file size. You can also view this file as a database filled with rich information. To give the debugger very fast access to the information it requires, WinDbg Preview creates an index file the first time you open a trace file. It usually takes a few minutes to create. Usually, this index is about one to two times as large as the original trace file. As an example, a tracing of the program ping.exe on my machine generates a trace file of 37MB and an index file of 41MB. There are about 1,973,647 instructions (about 132 bits per instruction). Note that, in this instance, the trace file is so small that the internal structures of the trace file account for most of the space overhead. A larger execution trace usually contains about 1 to 2 bits per instruction.

Recording a trace with WinDbg Preview

Now that you’re familiar with the pieces of TTD, here’s how to use them.

Get TTD: TTD is currently available on Windows 10 through the “WinDbg Preview” app that you can find in the Microsoft Store: https://www.microsoft.com/en-us/p/windbg-preview/9pgjgd53tn86?activetab=pivot:overviewtab .

Once you install the application, the “Time Travel Debugging - Record a trace” tutorial will walk you through recording your first execution trace.

Building automations with TTD

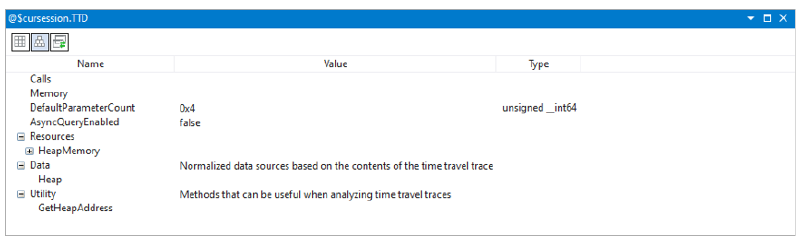

A recent improvement to the Windows debugger is the addition of the debugger data model and the ability to interact with it via JavaScript (as well as C++). The details of the data model are out of scope for this blog, but you can think of it as a way to both consume and expose structured data to the user and debugger extensions. TTD extends the data model by introducing very powerful and unique features available under both the @$cursession.TTD and @$curprocess.TTD nodes.

TTD.Calls is a function that allows you to answer questions like “Give me every position where foo!bar has been invoked” or “Is there a call to foo!bar that returned 10 in the trace”. Better yet, like every collection in the data model, you can query the results with LINQ operators. Here is what a TTD.Calls object looks like:

The API completely hides away ISA specific details, so you can build queries that are architecture independent.

TTD.Calls: Reconstructing stdout

To demo how powerful and easy it is to leverage these features, we record the execution of “ping.exe 127.0.0.1” and from the recording rebuild the console output.

Building this in JavaScript is very easy:

- Iterate over every call to msvcrt!write ordered by the time position,

- Read several bytes (the amount is in the third argument) pointed to by the second argument,

- Display the accumulated results.

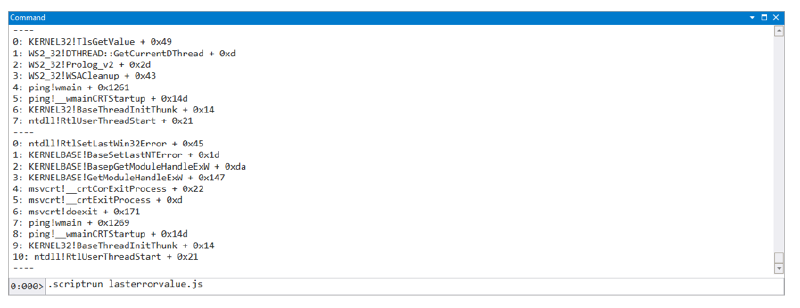

TTD.Memory: Finding every thread that touched the LastErrorValue

TTD.Memory is a powerful API that allows you to query the trace file for certain types (read, write, execute) of memory access over a range of memory. Every resulting object of a memory query looks like the sample below:

This result identifies the type of memory access done, the time stamp for start and finish, the thread accessing the memory, the memory address accessed, where it has been accessed and what value has been read/written/executed.

To demonstrate its power, let’s create another script that collects the call-stack every time the application writes to the LastErrorValue in the current thread’s environment block:

- Iterate over every memory write access to &@$teb->LastErrorValue ,

- Travel to the destination, dump the current call-stack,

- Display the results.

Note that there are more TTD-specific objects you can use to get information related to events that happened in a trace, the lifetime of threads, and so on. All of those are documented on the “Introduction to Time Travel Debugging objects” page.

Wrapping up

Time Travel Debugging is a powerful tool for security software engineers and can also be beneficial for malware analysis, vulnerability hunting, and performance analysis. We hope you found this introduction to TTD useful and encourage you to use it to create execution traces for the security issues that you are finding. The trace files generated by TTD compress very well; we recommend using 7zip (it usually shrinks the file to about 10% of the original size) before uploading a trace to your favorite file storage service.

Axel Souchet

Microsoft Security Response Center (MSRC)

Can I edit memory during replay time?

No. As the recorder only saves what is needed to replay a particular execution path in your program, it doesn’t save enough information to be able to re-simulate a different execution.

Why don’t I see the bytes when a file is read?

The recorder knows only what it has emulated, which means that if another entity (the NT kernel here, but it could also be another process writing into a shared memory section) writes data to memory, there is no way for the emulator to know about it. As a result, if the target program never reads those values back, they will never appear in the trace file. If they are read later, then their values will be available at that point when the emulator fetches the memory again. This is an area the team is planning on improving soon, so watch this space 😊.

Do I need private symbols or source code?

You don’t need source code or private symbols to use TTD. The recorder consumes native code and doesn’t need anything extra to do its job. If private symbols and source code are available, the debugger will consume them and provide the same experience as when debugging with source and symbols.

Can I record kernel-mode execution?

TTD is for user-mode execution only.

Does the recorder support self-modifying code?

Yes, it does!

Are there any known incompatibilities?

There are some, and you can read about them in “Things to look out for”.

Do I need WinDbg Preview to record traces?

Yes. As of today, the TTD recorder ships only as part of “WinDbg Preview”, which is only downloadable from the Microsoft Store.

Time travel debugging

- Time Travel Debugging - Overview - https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/time-travel-debugging-overview

- Time Travel Debugging: Root Causing Bugs in Commercial Scale Software - https://www.youtube.com/watch?v=l1YJTg_A914

- Defrag Tools #185 - Time Travel Debugging – Introduction - https://channel9.msdn.com/Shows/Defrag-Tools/Defrag-Tools-185-Time-Travel-Debugging-Introduction

- Defrag Tools #186 - Time Travel Debugging – Advanced - https://channel9.msdn.com/Shows/Defrag-Tools/Defrag-Tools-186-Time-Travel-Debugging-Advanced

- Time Travel Debugging and Queries - https://github.com/Microsoft/WinDbg-Samples/blob/master/TTDQueries/tutorial-instructions.md

- Framework for Instruction-level Tracing and Analysis of Program Executions - https://www.usenix.org/legacy/events/vee06/full_papers/p154-bhansali.pdf

- VulnScan – Automated Triage and Root Cause Analysis of Memory Corruption Issues - https://blogs.technet.microsoft.com/srd/2017/10/03/vulnscan-automated-triage-and-root-cause-analysis-of-memory-corruption-issues/

- What’s new in WinDbg Preview - https://mybuild.techcommunity.microsoft.com/sessions/77266

Javascript / WinDbg / Data model

- WinDbg Javascript examples - https://github.com/Microsoft/WinDbg-Samples

- Introduction to Time Travel Debugging objects - https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/time-travel-debugging-object-model

- WinDbg Preview - Data Model - https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/windbg-data-model-preview


Photo by Zulfa Nazer on Unsplash

Using BigQuery Time Travel

In this practical BigQuery exercise, we’re going to look at BigQuery Time Travel and see how it can help us when working with data. It’s not as powerful as Marty McFly’s DeLorean in Back to the Future (nobody knows what your future data will look like), but a useful tool in our toolset nevertheless.

First of all, what is BigQuery Time Travel? It allows for retrieving the state of a particular table at a given point within a time window, which is set by default to 7 days.

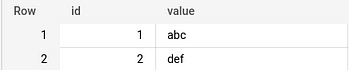

Let’s have a look at an example. At 11:00 AM we’ll create the following table.

Then, several minutes later, we make some changes to that table, say, insert a row.

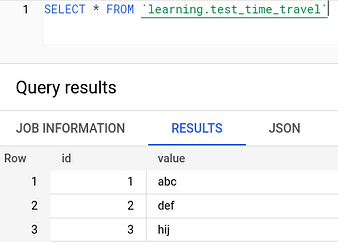

We can now confirm that we have the extra row. But what if we’d like to query the table as of earlier?

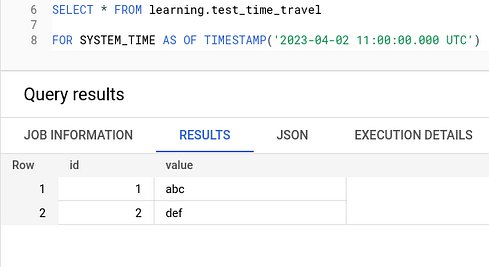

Using the approach above we can query the table at any particular point in the time travel window, set by default to 7 days, but configurable to be between 2 and 7 days.
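Since the query itself isn't reproduced in the text here, below is a small Python sketch that assembles such a query as a string. The `as_of_query` helper and the table name are my own placeholders; only the `FOR SYSTEM_TIME AS OF` clause and the `TIMESTAMP_SUB` / `CURRENT_TIMESTAMP` functions are BigQuery's.

```python
def as_of_query(table: str, minutes_ago: int) -> str:
    """Build a query that reads `table` as it was `minutes_ago` minutes in the past."""
    if minutes_ago < 0:
        raise ValueError("minutes_ago must not be negative")
    return (
        f"SELECT * FROM `{table}` "
        "FOR SYSTEM_TIME AS OF "
        f"TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {minutes_ago} MINUTE)"
    )

# Placeholder table name; substitute your own project, dataset and table.
sql = as_of_query("my_project.my_dataset.my_table", 10)
print(sql)
```

The helper only formats text, so use it with trusted table names; the resulting string can be pasted into the BigQuery console or passed to whichever client you use.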

Changing the time travel window

The time travel window is set at the dataset level, so it affects all the tables in that dataset. The default time travel window (7 days) can be overridden either at dataset creation time or on an existing dataset.
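As a rough sketch of overriding the window on an existing dataset, the Python helper below builds the DDL statement. It assumes the dataset option is named `max_time_travel_hours` and is expressed in hours, which is my recollection of the BigQuery DDL and worth confirming against the official documentation; the helper name and dataset name are placeholders.

```python
def time_travel_window_ddl(dataset: str, days: int) -> str:
    """Build an ALTER SCHEMA statement that sets the time travel window in whole days."""
    if not 2 <= days <= 7:
        raise ValueError("the time travel window must be between 2 and 7 days")
    hours = days * 24  # assumption: the option takes hours, in whole-day steps
    return f"ALTER SCHEMA `{dataset}` SET OPTIONS (max_time_travel_hours = {hours})"

ddl = time_travel_window_ddl("my_dataset", 3)
print(ddl)
```

Validating the range up front keeps an out-of-range value from reaching BigQuery at all.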

Practical considerations

The FOR SYSTEM_TIME AS OF clause comes after the table you’d like to apply Time Travel to, so a join query would look as follows.
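Here is an illustrative join, held in a Python string so it can be handed to any client. The `orders` and `customers` tables and the `customer_id` column are invented for the example; the point is only where the clause sits.

```python
# Hypothetical tables: only `orders` is read as of one hour ago,
# while `customers` is read in its current state.
join_sql = """
SELECT *
FROM `my_dataset.orders`
  FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR)
JOIN `my_dataset.customers`
  USING (customer_id)
""".strip()

print(join_sql)
```

Each table in the join gets its own clause, so historical and current tables can be mixed in a single query.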

Also, if you’d like to copy the table at a particular point in time, that can be done using the bq utility (part of the gcloud CLI).

Note that the timestamp there is the UNIX epoch in milliseconds, which can be obtained as follows.
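One way to get that number outside of BigQuery is a few lines of Python. This is a minimal sketch; the `to_epoch_millis` helper and the example date are mine.

```python
from datetime import datetime, timezone

def to_epoch_millis(moment: datetime) -> int:
    """Convert a timezone-aware datetime to milliseconds since the UNIX epoch."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid local-time surprises")
    return int(moment.timestamp() * 1000)

# Example point in time, chosen arbitrarily for illustration.
snapshot = datetime(2022, 3, 17, 10, 30, 0, tzinfo=timezone.utc)
millis = to_epoch_millis(snapshot)
print(millis)
```

Insisting on a timezone-aware value avoids accidentally interpreting the timestamp in the local time zone of whatever machine runs the script.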

In this short practical exercise, we’ve looked at BigQuery Time Travel, a very handy tool to aid us in querying previous states of a particular table. I found it very helpful when debugging data pipelines.

Thanks for reading and stay tuned for more practical BigQuery tips.


Support Constantin Lungu by becoming a sponsor. Any amount is appreciated!

How-To Geek

How to Change the Time on Windows 10

Yes, time travel is indeed possible with Windows 10!


Microsoft's Windows 10 operating system allows you to adjust your clock manually as well as automatically using a Settings option. We'll show you how to do just that.

Changing your PC's date and time may affect certain apps that rely on those options. If you experience any problems with those apps after changing the time, consider going back to the original date and time settings.

If you'd like to manually specify your PC's time, use the built-in Settings option to do that.

Start by launching Settings on your PC. Press the Windows+i keys and the app will open.

On the Settings window, choose "Time & Language."

On the "Date & Time" page that opens, make sure both "Set Time Automatically" and "Set Time Zone Automatically" options are disabled.

Beneath "Set the Date and Time Manually," click "Change."

You'll see a "Change Date and Time" window. Here, click the current date and time options and set them to your choice. When that's done, at the bottom, click "Change."

Your PC now uses your newly-specified time, and you're all set.

If you don't wish to use the manually-specified time on your PC, you can get Windows 10 to automatically adjust the clock. Your PC syncs with Microsoft's time servers to fetch the current time for your region.

This feature should be enabled by default, but you or another user may have disabled it in the past. To enable it again, first, open Settings by pressing Windows+i. On the Settings window, click "Time & Language."

On the "Date & Time" page, turn on both "Set Time Automatically" and "Set Time Zone Automatically" options.

And that's it. Your PC will get the current time from Microsoft's servers and use that as the system time.

Did you know you can change the format of the date and time on your Windows 10 PC? It lets you view your clock in your preferred format. Try it out if you're interested!


Change the system clock to any specified time

Time Travel can change the system clock with just one click. It is the fastest way to change the system clock multiple times in a short period. It is a great tool for testing any time-specific tasks on the computer.

Change the running speed of the system clock

Time Travel can change the running speed of the system clock. In Sci-Fi terms, Time Travel can stretch time. It can make an hour pass by in a second, or a second pass by in an hour.
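The arithmetic behind a sped-up or slowed-down clock can be sketched in a few lines of Python. This is only a model of the idea and says nothing about how the Time Travel program is implemented; the `ScaledClock` class is hypothetical and never touches the real system clock.

```python
from datetime import datetime, timedelta

class ScaledClock:
    """Virtual clock that advances `speed` times as fast as real time."""

    def __init__(self, real_start: datetime, virtual_start: datetime, speed: float):
        self.real_start = real_start
        self.virtual_start = virtual_start
        self.speed = speed

    def now(self, real_now: datetime) -> datetime:
        # virtual time = virtual start + (real time elapsed) * speed
        elapsed = real_now - self.real_start
        return self.virtual_start + elapsed * self.speed

real_start = datetime(2024, 1, 1, 12, 0, 0)
clock = ScaledClock(real_start, virtual_start=real_start, speed=3600)

# One real second later, the virtual clock has advanced by one hour.
print(clock.now(real_start + timedelta(seconds=1)))
```

A speed of 1 keeps the virtual clock in step with real time, and a speed below 1 stretches a real hour into a shorter virtual interval.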

Keep track of the current time during run time

Time Travel also keeps track of the real time throughout its operation. It allows the user to adjust the system time at will and stay aware of the real time.

Automatically reset system clock upon exiting

Time Travel will automatically reset the system time back to the current local time after exiting the program. It only affects the system time while it is running, and it will not change the time setting after exiting the program.

Automatically save and load previous configuration

Time Travel will remember the settings from the previous run and load them when it starts. The program does not need to be configured each time it is used.

Preset configurations

Time Travel has a load preset function, where a list of configurations can be loaded into the program for the convenience of the user. The user can also create a preset within the program and export the list for future use.

Customization

Want a feature that is not listed above? Contact us for more information. We offer many different customization and consulting services.

Overview of Time Travel and its functions

- Download TimeTravel.zip and unzip it.

- Run TimeTravel.exe. (It needs to be run as administrator. Right-click the TimeTravel.exe file -> Run as administrator.)

- Click the Change button to change the running speed of the computer clock.

- Click the Set Time button to change the computer clock to the specified time.

- The Resume From Last Shutdown Time button restarts from the last shutdown time. This is the computer time, not real time. If the computer shuts down accidentally, the program remembers the last running computer time.

- The Sync with Real Time button changes the computer clock to the real time. The computer clock runs at the same speed as real time only if Current Running Speed is 1.

- When you close or exit the program, your computer clock will be set to your current local time automatically.



BigQuery Time Travel: How to access Historical Data? | Easy Steps

By: Ishwarya M Published: March 21, 2022

BigQuery is one of the most popular and highly efficient analytics platforms that allows you to store, process, and analyze Big Data. In addition, BigQuery can process over 100 trillion rows and run more than 10,000 queries at the same time across the organization. It not only has advanced processing capabilities but also is highly scalable and fault-tolerant, which enables users to seamlessly implement advanced Analytics. One such advanced fault-tolerant feature is BigQuery Time Travel, which allows you to travel back in time to retrieve previously deleted or modified data.

In this article, you will learn about BigQuery, BigQuery Time Travel, and how to time travel to retrieve the deleted BigQuery data.

Table of Contents

- Key Features of BigQuery

- What is BigQuery Time Travel?

- How to access historical data using BigQuery Time Travel

Prerequisites

Fundamental knowledge of data analysis using SQL.

What is BigQuery?

Developed by Google in 2010, BigQuery is a Cloud-based data warehousing platform that allows you to store, manage, process, and analyze Big Data. BigQuery’s fully managed Serverless Architecture will enable you to implement high-end Analytics operations like Geospatial Analysis and Business Intelligence. It not only allows you to implement Big Data Analytics but also empowers you to build end-to-end Machine Learning Models.

Since BigQuery has a Serverless Infrastructure, you can focus on analyzing Big Data and building Machine Learning models instead of concentrating on resource and infrastructure management. Furthermore, developers and data professionals can use client libraries of popular programming languages like Python, Java, and JavaScript, as well as BigQuery’s REST API and RPC API for transforming, managing, and analyzing BigQuery data.

Key Features of Google BigQuery

The serverless Data Warehouse has various built-in capabilities that help with data analysis and provide deep insights. Take a look at some of BigQuery’s most important features.

- Fully Managed by Google: The Data Warehouse infrastructure is managed by Google. It keeps track of, updates, monitors, and distributes all of your data and information. Google will be notified if your task fails.

- Easy Implementation: BigQuery is simple to implement because it doesn’t require any additional software, cluster deployment, virtual machines, or tools. BigQuery is a serverless Data Warehouse that is very cost-effective. To evaluate and solve queries, all you have to do is upload or directly stream your data and run SQL.

- Speed: BigQuery can process a large number of rows in a matter of seconds. It can also perform terabyte-scale queries in seconds and petabyte-scale queries in minutes.

Simplify BigQuery ETL with Hevo’s No-code Data Pipeline

Hevo Data is a No-code Data Pipeline that offers a fully managed solution to set up Data Integration for 100+ Data Sources (including 40+ Free sources) and will let you directly load data from sources like Google Data Studio to a Data Warehouse or the Destination of your choice. It will automate your data flow in minutes without writing any line of code. Its fault-tolerant architecture makes sure that your data is secure and consistent. Hevo provides you with a truly efficient and fully automated solution to manage data in real-time and always have analysis-ready data.

Let’s look at some of the salient features of Hevo:

- Fully Managed: It requires no management and maintenance as Hevo is a fully automated platform.

- Data Transformation: It provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Real-Time: Hevo offers real-time data migration. So, your data is always ready for analysis.

- Schema Management: Hevo can automatically detect the schema of the incoming data and map it to the destination schema.

- Connectors: Hevo supports 100+ Integrations to SaaS platforms, FTP/SFTP, Files, Databases, BI tools, and Native REST API & Webhooks Connectors. It supports various destinations including Google BigQuery, Amazon Redshift, Snowflake, Firebolt, Data Warehouses; Amazon S3 Data Lakes; Databricks; and MySQL, SQL Server, TokuDB, MongoDB, PostgreSQL Databases to name a few.

- Secure: Hevo has a fault-tolerant architecture that ensures that the data is handled in a secure, consistent manner with zero data loss.

- Hevo Is Built To Scale: As the number of sources and the volume of your data grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Live Monitoring: Advanced monitoring gives you a one-stop view to watch all the activities that occur within Data Pipelines.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

Users can use BigQuery to process and analyze large amounts of data in order to implement end-to-end Cloud Analytics. However, there is a possibility that data can be deleted accidentally or through human error while working on BigQuery. As a result of such unpredictable errors, users will permanently lose their data if they haven’t backed up or kept a copy of the deleted data.

To eliminate this complication, BigQuery allows users to retrieve and restore the deleted data within seven days of deletion. In other words, BigQuery allows users to travel back in time to retrieve historical data that was deleted within the past seven days. With a simple SQL query, users can retrieve the deleted data by providing the table name and the timestamp for which the table's contents should be returned.

- With the BigQuery Time Travel feature, you can easily query and fetch historical data that has been stored, updated, or deleted before. BigQuery’s SQL clause named FOR SYSTEM_TIME AS OF helps you to time travel for a maximum of seven days in the past to access the modified or deleted data.

- However, there are some limitations of the FOR SYSTEM_TIME AS OF clause.

- The deleted table that you want to retrieve must have previously been saved or stored in BigQuery; it cannot be an external table.

- To use the FOR SYSTEM_TIME AS OF clause for retrieving historical table data, there is no limit on table size, and the deleted BigQuery table can have an unlimited number of rows and columns. In other words, FOR SYSTEM_TIME AS OF fetches the deleted data from BigQuery irrespective of the table size.

- The source table of the FROM clause that includes FOR SYSTEM_TIME AS OF in the BigQuery Time Travel query syntax should not be an ARRAY scan, including a flattened array or even the output of the UNNEST operator. The syntax should not include a common table expression defined by a WITH clause.

- The timestamp_expression in a BigQuery Time Travel query syntax must always be a constant expression and should not be in the form of subqueries and UDFs (User Defined Functions). The timestamp_expression should not be in the form of correlated references, which refers to columns that appear at a higher level of the query statement, like in the SELECT clause.

- Finally, the timestamp_expression must resolve to a single point in time rather than a range with start and end times, and that point must never be more than seven days in the past.

- The FOR SYSTEM_TIME AS OF clause allows you to switch to the previous versions of the specific table definition and rows with respect to the given timestamp or time expression. On providing the particular timestamp in the syntax of the SQL query, you can easily travel back to the given time for fetching the historical data. The below-given command is the sample SQL query to fetch and return historical data in BigQuery.

- On executing the query given above, you fetch the data of the table named in the query as it was exactly one hour earlier. The query returns the version from one hour ago because the FOR SYSTEM_TIME AS OF expression uses INTERVAL 1 HOUR. In the above SQL query, we just passed the fetching interval to return data from the particular table based on the given interval. However, you can also set a specific timestamp to point back in time for fetching historical data precisely according to the given timestamp.

- For returning historical values in a table at an absolute or specific point in time, you can follow the sample SQL query given below.

- By following the above command, you are able to fetch the aggregate values from the table named table_a with respect to the given timestamp in the point_in_time_timestmap parameter. You should always make sure that the parameter is given in the form yyyy-mm-dd hh:mm:ss. For example, the point_in_time_timestmap should resemble 2022-03-17 10:30:00.

- If the timestamp you provide is more than seven days in the past or earlier than the table's creation time, the SQL query will fail to execute and return the error message given below.

- If you use the CREATE OR REPLACE TABLE statement for replacing the active and existing table, you could still use the FOR SYSTEM_TIME AS OF clause to query the previous version of the table based on the recent interval command.

- When the existing table is accidentally deleted, the query fails and returns an error message, as given below.

- However, you can restore an active table by retrieving data from a specific point in time based on the timestamp, as explained in the above steps.

- Instead of just retrieving deleted data, you can use the BigQuery Time Travel feature to directly copy the modified or deleted historical data into a new table. This direct copying of deleted data into a new table works even if the table was deleted or expired, as long as you retrieve and restore historical data within seven days of deletion.

- For copying deleted historical data into the new table, you should use decorators in the SQL query.

- There are three types of decorators: “tableid@TIME”, “tableid@-TIME_OFFSET”, and “tableid@0”.

- The tableid@TIME decorator retrieves historical data as of a specific time, given in milliseconds since the Unix epoch, while the tableid@-TIME_OFFSET decorator fetches data as of a relative offset, in milliseconds, before the current time. To fetch the oldest available historical data (within the seven-day window), use the tableid@0 decorator.

- Execute the code given below to fetch the historical data from a deleted table and copy it into the new table.

- In the above code, bq is the BigQuery command-line tool, which here copies data from one table to another. With this command, you fetch data from a table named “table1” and copy it into the table called “table1_restored”. Consequently, the command retrieves the one-hour-old data from table1 and stores it in the table1_restored table. The offset is treated as one hour because it is given in milliseconds: 3600000 ms equals one hour.
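
The decorator arithmetic described in these steps can be sketched in Python. This is only an illustration under stated assumptions: the helper names (`absolute_decorator`, `relative_decorator`, `restore_command`) and the `mydataset` dataset are hypothetical, and the sketch assumes the copy is done with the bq tool's `cp` command. It builds the strings only and does not contact BigQuery.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
ONE_MS = timedelta(milliseconds=1)
WINDOW = timedelta(days=7)  # time travel window described in the text


def absolute_decorator(table_id: str, when: datetime) -> str:
    """Build tableid@TIME, where TIME is milliseconds since the Unix epoch."""
    if when.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    return f"{table_id}@{(when - EPOCH) // ONE_MS}"


def relative_decorator(table_id: str, age: timedelta) -> str:
    """Build tableid@-TIME_OFFSET, where the offset is milliseconds before now."""
    if not timedelta(0) < age <= WINDOW:
        raise ValueError("offset must be positive and at most seven days")
    return f"{table_id}@-{age // ONE_MS}"


def restore_command(source: str, destination: str, age: timedelta) -> list[str]:
    """Argument list for copying an older snapshot into a new table (assumed: bq cp)."""
    return ["bq", "cp", relative_decorator(source, age), destination]


if __name__ == "__main__":
    # Hypothetical dataset and table names, one-hour offset as in the text.
    args = restore_command(
        "mydataset.table1", "mydataset.table1_restored", timedelta(hours=1)
    )
    print(" ".join(args))
```

With a one-hour offset, the relative decorator comes out as `mydataset.table1@-3600000`, which matches the 3600000 ms figure in the walkthrough. Rejecting offsets beyond seven days up front mirrors the retention limit, so a bad value is caught before any command is run.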

By following the above-given steps, you can easily travel back in time to retrieve the deleted or modified data in BigQuery.
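
For the FOR SYSTEM_TIME AS OF steps, a small Python sketch can show how the two query forms might be assembled and how the seven-day limit can be checked before a query is sent. The function names and the `mydataset.table_a` path are hypothetical, the timestamp is assumed to be in UTC, and the code only produces SQL text rather than running it.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

WINDOW = timedelta(days=7)  # oldest point in time the text says can be queried


def interval_query(table: str, hours: int) -> str:
    """Query the table as it was `hours` hours ago (relative form)."""
    if not 0 < hours <= 7 * 24:
        raise ValueError("interval must be between one hour and seven days")
    return (
        f"SELECT * FROM `{table}` "
        f"FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {hours} HOUR)"
    )


def point_in_time_query(
    table: str, when: datetime, now: Optional[datetime] = None
) -> str:
    """Query the table as of an absolute, timezone-aware timestamp."""
    now = now or datetime.now(timezone.utc)
    if when > now:
        raise ValueError("timestamp is in the future")
    if now - when > WINDOW:
        raise ValueError("timestamp is more than seven days in the past")
    # Format as yyyy-mm-dd hh:mm:ss, normalised to UTC (an assumption of this sketch).
    stamp = when.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    return f"SELECT * FROM `{table}` FOR SYSTEM_TIME AS OF TIMESTAMP '{stamp}'"


if __name__ == "__main__":
    print(interval_query("mydataset.table_a", 1))
```

The `now` parameter exists only so the window check can be exercised with a fixed clock; in normal use it can be left out.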

In this article, you learned about BigQuery and how to use time travel to retrieve deleted BigQuery data. The BigQuery Time Travel feature allows users to recover lost data by going back in time and bringing it back. BigQuery also has other advanced features for cloud analytics, which you can explore later. If you want to export data from a source of your choice into a destination such as BigQuery, Hevo Data is one option.

Hevo Data, a No-code Data Pipeline, provides you with a consistent and reliable solution to manage data transfer between a variety of sources and a wide variety of desired destinations with a few clicks. With its strong integration with 100+ sources (including 40+ free sources), Hevo Data allows you not only to export data from your desired data sources and load it to the destination of your choice, like BigQuery, but also to transform and enrich your data to make it analysis-ready, so that you can focus on your key business needs and perform insightful analysis using BI tools.

Ishwarya has experience working with B2B SaaS companies in the data industry, and her passion for data science drives her to produce informative content that helps individuals comprehend the intricacies of data integration and analysis.

Justin Smith-Ruiu

A New Time-Travel App, Reviewed

We all know by now that the time-reversal invariance governing statistical mechanics at the microlevel maps by a simple equation onto the macroworld, making “time travel” a wholly unsurprising possibility … but damn! The first time you go back there’s just nothing like it.

I know all these first-person accounts of ChronoSwooping have become a cliché here on Substack, where, let’s face it, anyone can write pretty much whatever they want no matter how self-indulgent and derivative. Nonetheless I think I have some unusual insights to share, which derive from my own experience but which may offer some general lessons as to the nature and significance of time travel, both the original and long-prohibited “body-transit” method as well as the newer and more streamlined ChronoSwoop.

This is not only because I spent some years in the archives of the Stadzbybliotiēka of the Margravate of East K****, poring over the notebooks in which Quast first landed on the Quast equation, while in parallel jotting down sundry philosophical reflexions about the nature of Divine Tempus—as he called it—that have largely been neglected by other researchers. It is also because I have used the ChronoSwoop app in ways that are expressly prohibited by its makers, and indeed by the federal government. In light of this, while I am writing this product review for Substack and in the emerging “Substack style,” until the law changes or I depart permanently from the chronological present, I will be posting this piece only on the Hinternet-based Substack oglinda (Romanian for “looking-glass,” a hacking neologism supposedly coined by Guccifer 3.0), which I’m told is undetectable, remaining entirely unknown even to the original company’s founders. Fingers crossed.

Perhaps some readers on this oglinda will appreciate a brief summary of what’s been happening in the world of time travel since Quast first came up with his equation in 1962. I don’t know what sort of information has been circulating down here, and I don’t want anyone to feel left behind.

The early 1960s witnessed great leaps forward not just in time-travel technology, but in the technology of teletransportation as well—which is to say dematerialization of the body, and its rematerialization elsewhere, but without any measurable “metachrony.” By late 1966 poorly regulated teletransporters had begun to pop up on the state fair circuit, tempting daredevils into ever more foolish stunts. But this practice was curtailed already the following year, when, expecting to reappear kneeling before his sweetheart Deb at the stables with a ring in his hand, Roy Bouwsma, aka “the Omaha Kid,” got rematerialized instead with the stable door cutting directly through the center of his body from groin to skull—one half of him flopping down at Deb’s feet, the other half falling, like some neat bodily cross section carefully made for students of anatomy, into the stable with Deb’s confused horse Clem.

But while this atrocious moment, broadcast live on KMTV, nipped the new craze in the bud, the technology underlying it had already been adapted for use in what was then called “Tempus-Gliding,” which had the merely apparent advantage of concealing from those in the present any potential accident in the rematerialization of the voyager to the past. Of course, accidents continued to happen, and news of them eventually made its way back from past to present, bringing about all sorts of familiar paradoxes in the spacetime continuum. Tempus-Gliding, like any metachronic technology relying on body-transit, was a door thrown wide open to all the crazy scenarios we know from the time-travel tropes in science fiction going back at least to H. G. Wells: adults returning to the past and meeting themselves as children, meeting their parents before they were even born, causing themselves never to have been born and so suddenly to vanish, and so on. By the end of the 1960s people, and sometimes entire families, entire lineages, were vanishing as a daily occurrence (just recall the 1969 Harris family reunion in Provo!). You could almost never say exactly why, since the traveler to the past who would unwittingly wipe out all his descendants often had yet, in the present, ever to even try Tempus-Gliding.

A campaign to end the practice quickly gained speed. By 1973 the “Don’t Mess With Spacetime” bumper stickers were everywhere, and by the following year Tempus-Gliding was outlawed—which is to say, as is always the case in such matters, that only outlaws continued to Tempus-Glide. Scattered disappearances continued, public outcry against illicit Tempus-Gliding became more widespread. In 1983 Nancy Reagan made an unforgettable guest appearance on Diff’rent Strokes to help get out the message about the dangers of illegal body-transit. (“More than 40,000 young lives are lost each year to illegal Metachron gangs.” “What you talkin’ ’bout Mrs. Reagan?”) By the late 1980s a combination of tough-on-crime measures and transformations in youth culture largely ended the practice, and time travel would likely have remained as dormant as moon-travel if it had not in the last decade been so smoothly integrated into our new mobile technologies, and in a way that overcomes the paradoxes and inconveniences of Tempus-Gliding. It does so, namely, by taking the body out of the trip altogether.

This is the mode of time travel, of course, that has shaped a significant subcurrent of science fiction scenarios, notably Chris Marker’s La Jetée (1962), later adapted into the better known Bruce Willis vehicle 12 Monkeys (1995). While these films might seem exceptional, they also share something important with the great majority of what may be called time-travel tales avant la lettre, in which, typically, a man such as Rip Van Winkle goes to sleep for a very long time and wakes up in “the future.” The “zero form” of time travel, we are reminded, is simply to live, which is to say to travel forward in time at a slow and steady rate that only appears to be sped up or “warped” through deep sleep.

Be that as it may, when the new app-based time-travel technologies began to emerge in the late 2010s—relying as they did on a loophole in the 1974 law against time travel that defined it strictly as “metachronic body-transit”—they were all confronted by the hard limit on innovation already predicted by Quast, who remained committed until the end to the impossibility in principle of future-directed time-travel. “If you want to get to the future, you’re just going to have to wait,” Quast wrote in an entry in his Hefte dated 6 October, 1959 (SB-1omk 21.237). “To live in time is already to travel in time. So be patient” [ In der Zeit zu leben, das ist schon in der Zeit zu reisen. Hab also Geduld ]. Rumors of future-transit apps downloadable from ultra-sketchy oglindas have been circulating for years, but I’ve never seen any, and having studied Quast’s work I have come to believe that they are a theoretical impossibility.

The earliest apps, popping up mostly from anonymous sources, were mostly perceived as too dangerous and illicit to gain widespread appeal. “We’ve got that legal cannabis here in California now,” Whoopi Goldberg said on an episode of The View in September 2019. “If I want to take a little trip, I’m sorry but there’s edibles for that. I’m not messing with spacetime [ audience laughter ].” In an echo of the panic leading to the prohibition of Tempus-Gliding in the early 1970s, the government began to issue PSAs sensitizing the public to the serious psychological trauma that a return to our own pasts can trigger. “This is not lighthearted fun,” the messaging went. “Metachronism can ruin your life.”

The campaign against these new technologies would probably have killed them, or at least pushed them so far down into the oglindas as to occlude them from the public’s consciousness, if in 2021, at the worst moment of the pandemic, the ChronoSwoop company had not appeared as if out of nowhere and dropped its addictive new app with its signature “Swoop left/Swoop right” functions. Key to ChronoSwoop’s success was the discovery that users will draw significantly more pleasure from being cast into random moments in the past (Swoop left) than from being permitted to choose particular moments they have deemed significant in the post-hoc construction of their autobiographical self-narrative. And if you find yourself thrown back into an unpleasant or dull moment, then a single swift Swoop right will bring you immediately back into the present. You can of course go into your settings and laboriously reconfigure the app to permit you to choose your precise dates, but the great miracle of ChronoSwoop’s success is that almost no one bothers to do this. The people want their time travel to come with streamlined, easy interfaces. They want to move through the past like they move through their feeds: going nowhere in particular, with no clear purpose.