Overview of Snowflake Time Travel

Consider a scenario where, instead of dropping a backup table, you accidentally dropped the actual table, or where, instead of updating a set of records, you accidentally updated all the records in the table (because you forgot the WHERE clause in your UPDATE statement).

What would be your next action after realizing your mistake? You would probably wish you could go back to a point in time before you executed the incorrect statement, so that you could undo the mistake.

Snowflake provides exactly this feature: you can get back to the data as it was at a particular point in time. This feature is called Time Travel.

Let us understand more about Snowflake Time Travel in this article with examples.

1. What is Snowflake Time Travel?

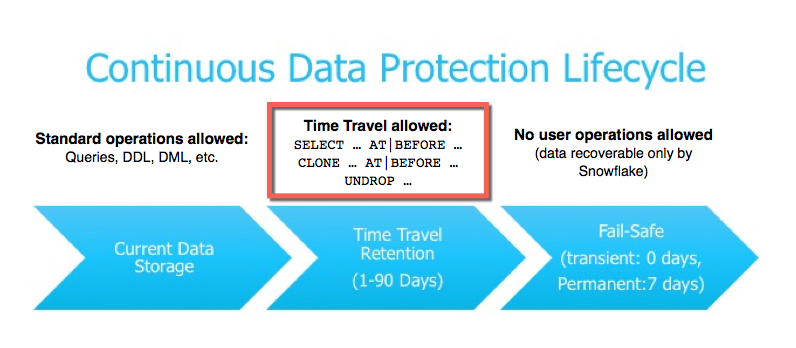

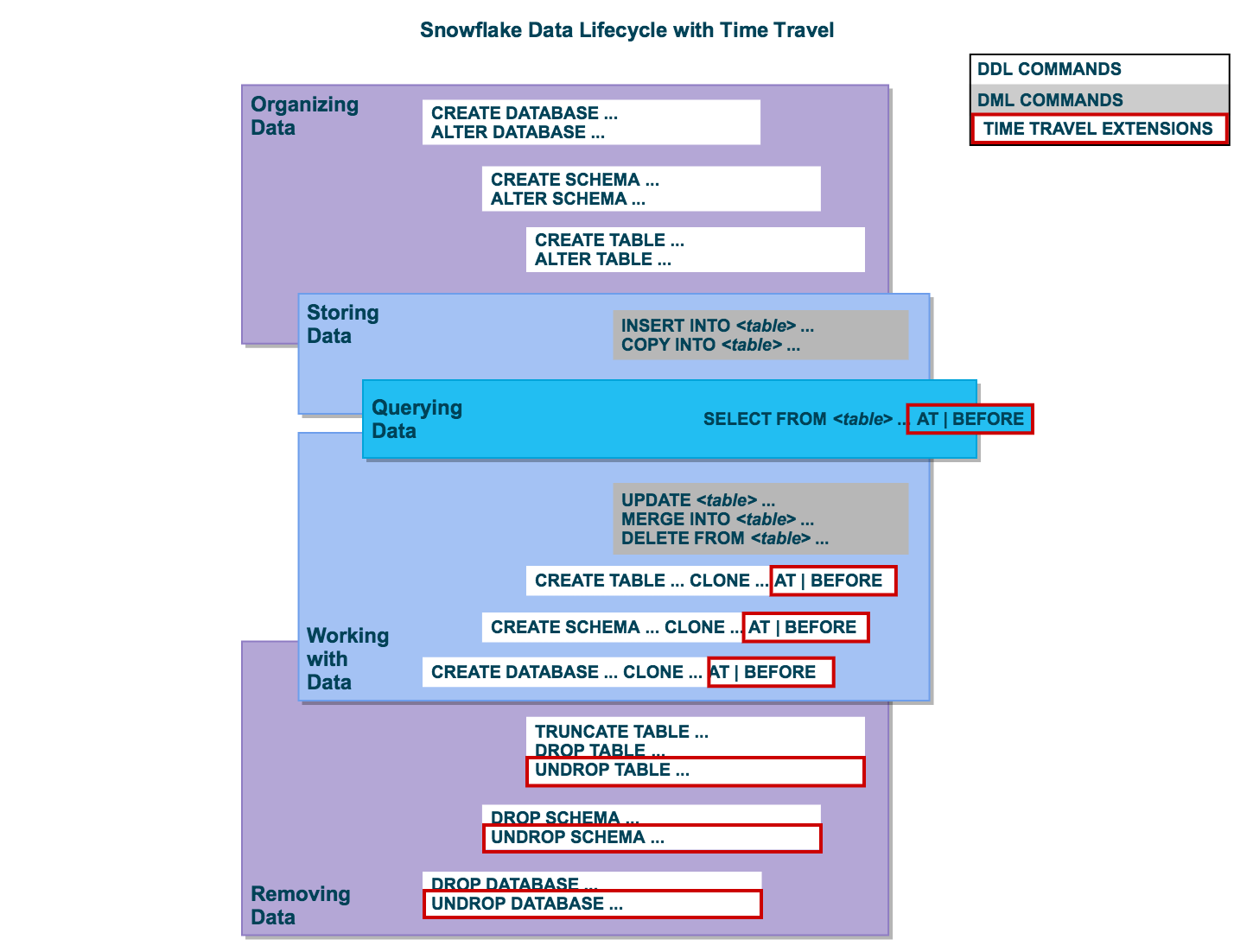

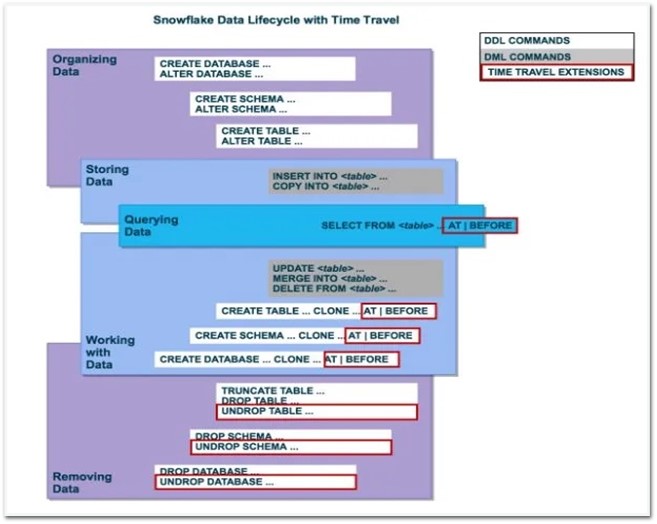

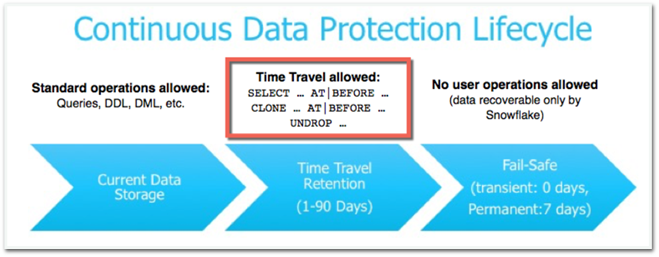

Snowflake Time Travel enables accessing historical data that has been changed or deleted at any point within a defined period. It is a powerful CDP (Continuous Data Protection) feature which ensures the maintenance and availability of your historical data.

The following actions can be performed using Snowflake Time Travel within a defined period of time:

- Restore tables, schemas, and databases that have been dropped.

- Query data in the past that has since been updated or deleted.

- Create clones of entire tables, schemas, and databases at or before specific points in the past.

Once the defined period of time has elapsed, the data is moved into Snowflake Fail-Safe and these actions can no longer be performed.

2. Restoring Dropped Objects

A dropped object can be restored within the Snowflake Time Travel retention period using the “UNDROP” command.

Suppose the table ‘Employee’ has been dropped accidentally instead of a backup table.

It can be restored with a single UNDROP command.

Databases and Schemas can also be restored using the UNDROP command.

Calling UNDROP restores the object to its most recent state before the DROP command was issued.
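As a minimal sketch of the restore described above, the statements below undrop the Employee table and, for comparison, a schema and a database. The names `my_schema` and `my_db` are hypothetical placeholders, not objects from this article.

```sql
-- Restore the accidentally dropped table (must still be within its retention period).
UNDROP TABLE Employee;

-- The same command works for schemas and databases.
-- my_schema and my_db are hypothetical names used only for illustration.
UNDROP SCHEMA my_schema;
UNDROP DATABASE my_db;
```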

3. Querying Historical Objects

When unwanted DML operations are performed on a table, the Snowflake Time Travel feature enables querying earlier versions of the data using the AT | BEFORE clause.

The AT | BEFORE clause is specified in the FROM clause immediately after the table name and it determines the point in the past from which historical data is requested for the object.

Let us understand with an example. Consider the table Employee. The table has a field IS_ACTIVE which indicates whether an employee is currently working in the Organization.

The employee ‘Michael’ has left the organization and the field IS_ACTIVE needs to be updated as FALSE. But instead you have updated IS_ACTIVE as FALSE for all the records present in the table.

There are three different ways you could query the historical data using AT | BEFORE Clause.

3.1. OFFSET

“OFFSET” is the time difference in seconds from the present time, written as a negative number for a point in the past (for example, -300 for five minutes ago).

The following query selects historical data from a table as of 5 minutes ago.
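A sketch of such a query against the Employee table might look like this. It assumes the table already existed five minutes ago; otherwise Snowflake reports that Time Travel data is not available.

```sql
-- Read the Employee table as it was 5 minutes (300 seconds) ago.
SELECT *
FROM Employee AT(OFFSET => -60*5);
```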

3.2. TIMESTAMP

Use “TIMESTAMP” to get the data at or before a particular date and time.

The following query selects historical data from a table as of the date and time represented by the specified timestamp.
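For illustration, a TIMESTAMP-based query could be written as below. The timestamp value is an arbitrary example and has to fall within the table's retention period.

```sql
-- Read the Employee table as of a specific date and time.
-- The timestamp literal is an arbitrary example value.
SELECT *
FROM Employee AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);
```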

3.3. STATEMENT

“STATEMENT” takes the identifier of a statement (for example, its query ID) as the reference point.

The following query selects historical data from a table up to, but not including any changes made by the specified statement.

The query ID used here belongs to the UPDATE statement executed earlier. It can be obtained from the query history (“Open History”) in the Snowflake web interface.
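A sketch of the STATEMENT form is shown below. The `<query_id>` placeholder stands for the ID of the erroneous UPDATE; it is not a real value and must be replaced with the ID from your own query history.

```sql
-- Read the Employee table as it was just before the given statement ran.
-- Replace <query_id> with the query ID of the unwanted UPDATE.
SELECT *
FROM Employee BEFORE(STATEMENT => '<query_id>');
```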

4. Cloning Historical Objects

We have seen how to query the historical data. In addition, the AT | BEFORE clause can be used with the CLONE keyword in the CREATE command to create a logical duplicate of the object at a specified point in the object’s history.

The following queries show how to clone a table using AT | BEFORE clause in three different ways using OFFSET, TIMESTAMP and STATEMENT.
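The three variants can be sketched as follows. The clone names, the timestamp, and the `<query_id>` placeholder are assumptions chosen for illustration.

```sql
-- Clone the table as it was 5 minutes ago.
CREATE TABLE Employee_clone_offset CLONE Employee
  AT(OFFSET => -60*5);

-- Clone the table as of a specific timestamp (arbitrary example value).
CREATE TABLE Employee_clone_ts CLONE Employee
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- Clone the table as it was just before a given statement ran.
CREATE TABLE Employee_clone_stmt CLONE Employee
  BEFORE(STATEMENT => '<query_id>');
```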

To restore the data in the table to a historical state, create a clone using AT | BEFORE clause, drop the actual table and rename the cloned table to the actual table name.
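One possible way to carry out these three steps is sketched below. `Employee_restored` is a hypothetical name and `<query_id>` is a placeholder for the ID of the unwanted statement. It is worth checking the clone's contents before dropping anything.

```sql
-- 1. Clone the table as it was before the unwanted statement.
CREATE TABLE Employee_restored CLONE Employee
  BEFORE(STATEMENT => '<query_id>');

-- 2. Drop the table that contains the unwanted changes.
DROP TABLE Employee;

-- 3. Give the clone the original name.
ALTER TABLE Employee_restored RENAME TO Employee;
```

The dropped table itself stays recoverable with UNDROP for its retention period, provided the name is free at that point.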

5. Data Retention Period

A key component of Snowflake Time Travel is the data retention period.

When data in a table is modified, deleted or the object containing data is dropped, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved.

Time Travel operations can be performed on the data during this data retention period of the object. When the retention period ends for an object, the historical data is moved into Snowflake Fail-safe.

6. How to find the Time Travel Data Retention period of Snowflake Objects?

The SHOW PARAMETERS command can be used to find the Time Travel retention period of Snowflake objects.

The following commands return the data retention period of databases, schemas, and tables.

The DATA_RETENTION_TIME_IN_DAYS parameter specifies the number of days to retain the old version of deleted/updated data.
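As a sketch, the retention period can be inspected at each level like this. `my_db` and `my_schema` are hypothetical names; Employee is the table used throughout this article.

```sql
-- Retention period of a database (my_db is a hypothetical name).
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN DATABASE my_db;

-- Retention period of a schema (my_schema is a hypothetical name).
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN SCHEMA my_db.my_schema;

-- Retention period of a table.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE Employee;
```

The `value` column of the output holds the retention period in days, and the `level` column indicates where that value was set.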

In this example, the output for the table Employee shows DATA_RETENTION_TIME_IN_DAYS set to 1.

7. How to set custom Time-Travel Data Retention period for Snowflake Objects?

Time travel is automatically enabled with the standard, 1-day retention period. However, you may wish to upgrade to Snowflake Enterprise Edition or higher to enable configuring longer data retention periods of up to 90 days for databases, schemas, and tables.

You can configure the data retention period of a table while creating the table as shown below.
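A minimal sketch follows. The column list is an assumption (only IS_ACTIVE is mentioned in this article), and the 90-day value requires Enterprise Edition or higher.

```sql
-- Create a table with a custom Time Travel retention period.
-- Column names other than IS_ACTIVE are hypothetical.
CREATE TABLE Employee (
    ID        NUMBER,
    NAME      VARCHAR,
    IS_ACTIVE BOOLEAN
)
DATA_RETENTION_TIME_IN_DAYS = 90;
```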

To modify the data retention period of an existing table, use the following syntax:
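For the Employee table and a 30-day period, the statement would look roughly like this (values above 1 day assume Enterprise Edition or higher):

```sql
-- Change the retention period of an existing table to 30 days.
ALTER TABLE Employee SET DATA_RETENTION_TIME_IN_DAYS = 30;
```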

In this example, the data retention period of the table is altered to 30 days.

A retention period of 0 days for an object effectively disables Time Travel for the object.

8. Data Retention Period Rules and Inheritance

Changing the retention period for your account or individual objects changes the value for all lower-level objects that do not have a retention period explicitly set. For example:

- If you change the retention period at the account level, all databases, schemas, and tables that do not have an explicit retention period automatically inherit the new retention period.

- If you change the retention period at the schema level, all tables in the schema that do not have an explicit retention period inherit the new retention period.
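The inheritance rules above can be sketched as follows. The object names are hypothetical, and the 14-day value assumes Enterprise Edition or higher.

```sql
-- Set the retention period at the schema level (hypothetical names).
ALTER SCHEMA my_db.my_schema SET DATA_RETENTION_TIME_IN_DAYS = 14;

-- Remove any explicit setting on a table so that it inherits from the schema.
ALTER TABLE my_db.my_schema.Employee UNSET DATA_RETENTION_TIME_IN_DAYS;

-- Check which value the table now uses and at which level it was set.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE my_db.my_schema.Employee;
```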

Currently, when a database is dropped, the data retention period for child schemas or tables, if explicitly set to be different from the retention of the database, is not honored. The child schemas or tables are retained for the same period of time as the database.

- To honor the data retention period for these child objects (schemas or tables), drop them explicitly before you drop the database or schema.

Time Travel snowflake: The Ultimate Guide to Understand, Use & Get Started 101

By: Harsh Varshney Published: January 13, 2022

To empower your business decisions with data, you need Real-Time High-Quality data from all of your data sources in a central repository. Traditional On-Premise Data Warehouse solutions have limited Scalability and Performance, and they require constant maintenance. Snowflake is a more Cost-Effective and Instantly Scalable solution with industry-leading Query Performance. It’s a one-stop-shop for Cloud Data Warehousing and Analytics, with full SQL support for Data Analysis and Transformations. One of the highlighting features of Snowflake is Snowflake Time Travel.

Snowflake Time Travel allows you to access Historical Data (that is, data that has been updated or removed) at any point within a defined retention period. It is an effective tool for doing the following tasks:

- Restoring Data-Related Objects (Tables, Schemas, and Databases) that may have been removed by accident or on purpose.

- Duplicating and Backing up Data from previous periods of time.

- Analyzing Data Manipulation and Consumption over a set period of time.

In this article, you will learn about Snowflake Time Travel and the operations you are likely to perform with it, along with simple SQL code to carry them out.

What is Snowflake?

Snowflake is a Cloud Data Warehouse solution built on the customer’s preferred Cloud Provider’s infrastructure (AWS, Azure, or GCP). Snowflake’s SQL dialect adheres to the ANSI Standard and includes typical Analytics and Windowing Capabilities; SnowSQL is its command-line client. There are some differences in Snowflake’s syntax compared with other databases, but there are also many parallels.

Snowflake’s integrated development environment (IDE) is entirely Web-based. Visit XXXXXXXX.us-east-1.snowflakecomputing.com. After logging in, you are taken to the primary Online GUI, which works as an IDE, where you can begin interacting with your Data Assets. Each query tab in the Snowflake interface is referred to as a “Worksheet”. These Worksheets, like the tab history function, are automatically saved and can be viewed at any time.

Key Features of Snowflake

- Query Optimization: By using Clustering and Partitioning, Snowflake may optimize a query on its own. With Snowflake, Query Optimization isn’t something to be concerned about.

- Secure Data Sharing: Data can be exchanged securely from one account to another using Snowflake Database Tables, Views, and UDFs.

- Support for File Formats: JSON, Avro, ORC, Parquet, and XML are all Semi-Structured data formats that Snowflake can import. It has a VARIANT column type that lets you store Semi-Structured data.

- Caching: Snowflake has a caching strategy that allows the results of the same query to be quickly returned from the cache when the query is repeated. Snowflake uses persisted query results to avoid re-running the query when nothing has changed.

- SQL and Standard Support: Snowflake offers both standard and extended SQL support, as well as Advanced SQL features such as Merge, Lateral View, Statistical Functions, and many others.

- Fault Resistant: Snowflake provides exceptional fault-tolerant capabilities to recover the Snowflake object in the event of a failure (tables, views, database, schema, and so on).

For further information, see the official Snowflake website.

What is Snowflake Time Travel Feature?

Snowflake Time Travel is a feature that lets you access data as it existed at an earlier point within the retention period. For example, if you have an Employee table and you inadvertently drop it, you can use Time Travel to restore it, or to query the data as it was 5 minutes ago. It is an effective tool for the following tasks:

- Query Data that has been changed or deleted in the past.

- Make clones of complete Tables, Schemas, and Databases at or before certain dates.

- Restore Tables, Schemas, and Databases that have been dropped.

How to Enable & Disable Snowflake Time Travel Feature?

1) Enable Snowflake Time Travel

No setup is required to enable Snowflake Time Travel. It is turned on by default, with a one-day retention period. However, if you want to configure longer Data Retention Periods of up to 90 days for Databases, Schemas, and Tables, you’ll need Snowflake Enterprise Edition or higher. Please keep in mind that longer Data Retention requires more storage, which will be reflected in your monthly Storage Fees. See Storage Costs for Time Travel and Fail-safe in the Snowflake documentation for further information on storage fees.

For Snowflake Time Travel, the example below builds a table with 90 days of retention.

To shorten the retention term for a certain table, the below query can be used.
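The two statements described above might look like the sketch below. The table name `mytable` and its columns are hypothetical, and the 30-day value is an arbitrary choice for the shortened period.

```sql
-- Create a table with 90 days of Time Travel retention
-- (requires Enterprise Edition or higher).
CREATE TABLE mytable (col1 NUMBER, col2 DATE)
  DATA_RETENTION_TIME_IN_DAYS = 90;

-- Later, shorten the retention period for that table to 30 days.
ALTER TABLE mytable SET DATA_RETENTION_TIME_IN_DAYS = 30;
```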

2) Disable Snowflake Time Travel

Snowflake Time Travel cannot be turned off for an account, but it can be turned off for individual Databases, Schemas, and Tables by setting the object’s DATA_RETENTION_TIME_IN_DAYS to 0.

Users with the ACCOUNTADMIN role can also set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that by default, all Databases (and, by extension, all Schemas and Tables) created in the account have no retention period. However, this default can be overridden at any time for any Database, Schema, or Table.
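A sketch of both levels, using hypothetical object names:

```sql
-- Disable Time Travel for a single table (mytable is a hypothetical name).
ALTER TABLE mytable SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Set the account-level default to 0 (requires the ACCOUNTADMIN role).
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Override the account default for one database (hypothetical name).
ALTER DATABASE important_db SET DATA_RETENTION_TIME_IN_DAYS = 1;
```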

3) What are Data Retention Periods?

Data Retention Time is an important part of Snowflake Time Travel. When data in a table is modified, including deleting data or dropping an object that contains data, Snowflake preserves the state of the data before the change. The Data Retention Period sets the number of days for which this historical data is preserved, allowing Time Travel operations (SELECT, CREATE … CLONE, UNDROP) to be performed on it.

All Snowflake Accounts have a standard retention period of one day (24 hours), which is automatically enabled:

- In Snowflake Standard Edition, the Retention Period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. Databases, Schemas, and Tables).

- In Snowflake Enterprise Edition (and higher), the Retention Period for transient Databases, Schemas, and Tables can be set to 0 (or unset back to the default of 1 day). The same is true of temporary Tables.

- In Snowflake Enterprise Edition (and higher), the Retention Period for permanent Databases, Schemas, and Tables can be set to any value from 0 to 90 days.

4) What are Snowflake Time Travel SQL Extensions?

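The following SQL extensions have been added to support Snowflake Time Travel:

- The AT | BEFORE clause, which can be specified in SELECT statements and CREATE … CLONE commands immediately after the object name. The clause takes one of the following parameters to pinpoint the historical data you want:

  - TIMESTAMP (a specific date and time)

  - OFFSET (time difference in seconds from the present time)

  - STATEMENT (identifier for statement, e.g. query ID)

- The UNDROP command for Tables, Schemas, and Databases.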

How Many Days Does Snowflake Time Travel Work?

How to Specify a Custom Data Retention Period for Snowflake Time Travel

In Standard Edition, the maximum Retention Time is 1 day (i.e. one 24-hour period), which is also the default. In Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

- When creating a Table, Schema, or Database, the account default can be overridden using the DATA_RETENTION_TIME_IN_DAYS parameter in the command.

- If a Database or Schema has a Retention Period, that period is inherited by default by all objects created in the Database/Schema.

The Data Retention Time can be set in the way it has been set in the example below.
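As an illustrative sketch of overriding and inheriting the retention period at creation time (all object names, columns, and day counts are assumptions; values above 1 day need Enterprise Edition or higher):

```sql
-- The database overrides the account default.
CREATE DATABASE sales_db DATA_RETENTION_TIME_IN_DAYS = 30;

-- A schema created without the parameter inherits 30 days from the database.
CREATE SCHEMA sales_db.staging;

-- A table can override the inherited value again.
CREATE TABLE sales_db.staging.orders (order_id NUMBER, order_date DATE)
  DATA_RETENTION_TIME_IN_DAYS = 7;
```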

How to Modify Data Retention Period for Snowflake Objects?

When you alter a Table’s Data Retention Period, the new Retention Period affects all active data as well as any data in Time Travel. Whether you lengthen or shorten the period has an impact:

1) Increasing Retention

This causes the data in Snowflake Time Travel to be saved for a longer amount of time.

For example, if you increase the retention time from 10 to 20 days on a Table, data that would have been removed after 10 days is now kept for an additional 10 days before being moved to Fail-safe. This does not apply to data that is more than 10 days old and has already been moved into Fail-safe.

2) Decreasing Retention

- Reduces the amount of time data is retained in Time Travel.

- For active data modified after the Retention Period is reduced, the new shorter period applies.

- For data that is currently in Time Travel, if the data is still within the new shorter period, it remains in Time Travel.

- If the data is outside the new period, it is moved into Fail-safe.

For example, If you have a table with a 10-day Retention Term and reduce it to one day, data from days 2 through 10 will be moved to Fail-Safe, leaving just data from day 1 accessible through Time Travel.

However, since the data is moved from Snowflake Time Travel to Fail-Safe via a background operation, the change is not immediately obvious. Snowflake ensures that the data will be migrated, but does not say when the process will be completed; the data is still accessible using Time Travel until the background operation is completed.

Use the appropriate ALTER <object> Command to adjust an object’s Retention duration. For example, the below command is used to adjust the Retention duration for a table:
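A short sketch, using a hypothetical table name, that changes the period and then checks the result:

```sql
-- Adjust the retention period of a table (mytable is a hypothetical name).
ALTER TABLE mytable SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- The retention_time column of SHOW TABLES reflects the new value.
SHOW TABLES LIKE 'mytable';
```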

How to Query Snowflake Time Travel Data?

When you make any DML actions on a table, Snowflake saves prior versions of the Table data for a set amount of time. Using the AT | BEFORE Clause, you can Query previous versions of the data.

This Clause allows you to query data at or immediately before a specified point in the Table’s history within the Retention Period. The specified point can be time-based (e.g., a Timestamp or a Time Offset from the present) or the ID of a completed statement (e.g. a SELECT or INSERT).

- You can select Historical Data from a Table as of the Date and Time indicated by a Timestamp.

- You can select Historical Data from a Table as of 5 minutes ago, using an Offset.

- You can select Historical Data from a Table up to, but not including, any changes made by a specified statement.
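The three forms listed above can be sketched as follows. `my_table`, the timestamp, and the `<query_id>` placeholder are illustrative assumptions.

```sql
-- As of a specific timestamp (arbitrary example value).
SELECT * FROM my_table
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- As of 5 minutes ago.
SELECT * FROM my_table AT(OFFSET => -60*5);

-- Up to, but not including, the changes made by a given statement.
SELECT * FROM my_table BEFORE(STATEMENT => '<query_id>');
```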

How to Clone Historical Data in Snowflake?

In addition to queries, the AT | BEFORE Clause can be combined with the CLONE keyword in the CREATE command for a Table, Schema, or Database to create a logical duplicate of the object at a specific point in its history.

For example:

- The CREATE TABLE command below generates a Clone of a Table as of the Date and Time indicated by the Timestamp:

- The following CREATE SCHEMA command produces a Clone of a Schema and all of its Objects as they were an hour ago:

- The CREATE DATABASE command produces a Clone of a Database and all of its Objects as they were before the specified statement was completed:
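A sketch of the three clone commands described in the list above. All object names, the timestamp, and the `<query_id>` placeholder are assumptions for illustration.

```sql
-- Clone a table as of a specific timestamp (arbitrary example value).
CREATE TABLE restored_table CLONE my_table
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- Clone a schema and all of its objects as they were an hour ago.
CREATE SCHEMA restored_schema CLONE my_schema
  AT(OFFSET => -3600);

-- Clone a database as it was before the given statement completed.
CREATE DATABASE restored_db CLONE my_db
  BEFORE(STATEMENT => '<query_id>');
```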

Using UNDROP Command with Snowflake Time Travel: How to Restore Objects?

The following commands can be used to restore a dropped object that has not been purged from the system (i.e. the object is still visible in the SHOW <object_type> HISTORY output):

- UNDROP DATABASE

- UNDROP TABLE

- UNDROP SCHEMA

UNDROP restores the object to its most recent state before the DROP command was issued.

A Database can be restored using the UNDROP DATABASE command.

Similarly, you can UNDROP Tables and Schemas.
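For illustration, with hypothetical object names:

```sql
-- List databases, including dropped ones that can still be restored.
SHOW DATABASES HISTORY;

-- Restore a dropped database, schema, and table (hypothetical names).
UNDROP DATABASE my_db;
UNDROP SCHEMA my_schema;
UNDROP TABLE my_table;
```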

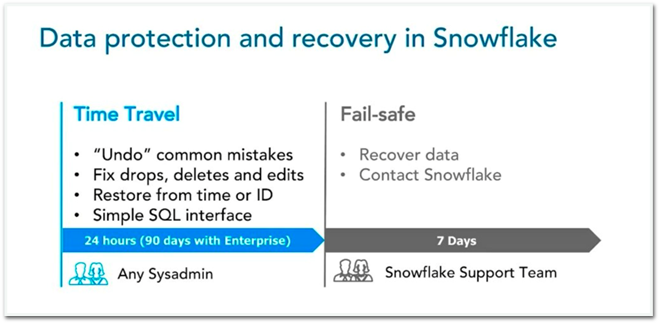

Snowflake Fail-Safe vs Snowflake Time Travel: What is the Difference?

Fail-safe ensures that Historical Data is protected in the event of a System Failure or other Catastrophic Event, such as a Hardware Failure or a Security Incident. Snowflake Time Travel, by contrast, allows you to access Historical Data (that is, data that has been updated or removed) yourself at any point within the Retention Period.

Fail-safe provides a (non-configurable) 7-day period during which Historical Data may be recoverable by Snowflake. This period begins as soon as the Snowflake Time Travel Retention Period expires.

This article has introduced the main features of Snowflake Time Travel to help you protect and make the most of your data.

Harsh has experience in research analysis and a passion for data, software architecture, and writing technical content. He has written more than 100 articles on data integration and infrastructure.

Snowflake Time Travel in a Nutshell

Yeah, the title is a bit clickbaity, so if you are too sensitive, please stop reading, because the article won’t explain to you all the details of the mentioned feature of Snowflake. But despite that, it will show some interesting things that are not mentioned in the documentation and it will help to answer at least one question in the certification exam. So, I think it’s worth giving it a few minutes of your time.

Snowflake is an advanced data platform provided as Software-as-a-Service (SaaS). It enables data storage, processing, and analytic solutions that are faster, easier to use, and far more flexible than traditional offerings. Snowflake isn’t a service built on top of Hadoop or Spark or any other “big data” technology, it is a completely new SQL query engine designed for the cloud and cloud-only. To the user, Snowflake provides all of the functionality of an enterprise analytic database, along with many additional special features and unique capabilities.

In this article, we won’t go into explaining what Snowflake is but will be more specific about one of the cool features of this data platform, which is Time Travel. Let me know in the comments if you want a brief overview of this product or maybe some explanation of its other features.

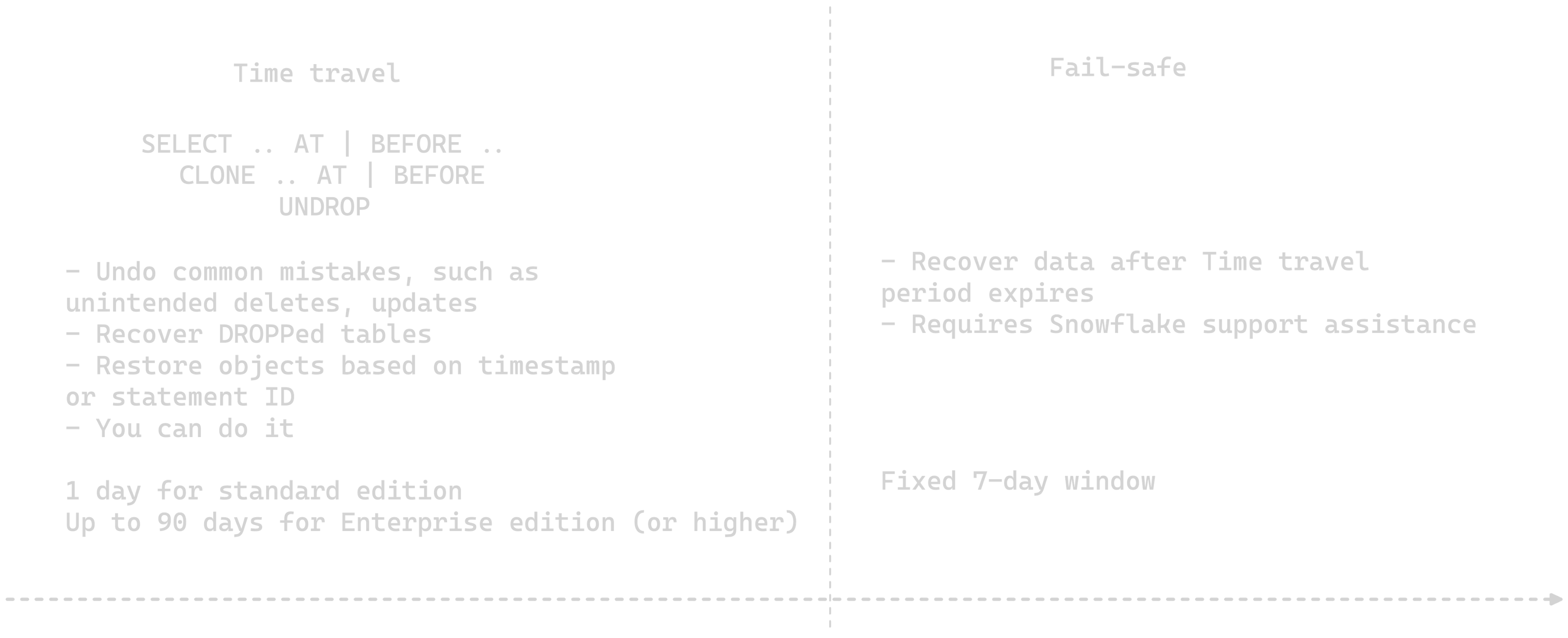

Time travel

Snowflake Time Travel enables querying data as it was saved at a particular point in time and rolling back to the corresponding version. It means that intentional or unintentional changes to the underlying data can be reverted. Time Travel is a very powerful feature that allows you to:

- Query data in the past that has since been updated or deleted.

- Create clones of entire tables, schemas, and databases at or before specific points in the past.

- Restore tables, schemas, and databases that have been dropped.

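To support Time Travel, the following SQL extensions have been implemented:

- AT | BEFORE clause, which can be specified in SELECT statements and CREATE … CLONE commands immediately after the object name. It takes one of the following parameters:

  - TIMESTAMP (a specific date and time)

  - OFFSET (time difference in seconds from the present time)

  - STATEMENT (identifier for statement, e.g. query ID)

- UNDROP command for tables, schemas, and databases.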

A key component of Snowflake Time Travel is the data retention period. When data in a table is modified, including deletion of data or dropping an object containing data, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved and, therefore, Time Travel operations (SELECT, CREATE … CLONE, UNDROP) can be performed on the data.

By default, the data retention period is set to 1 day and Snowflake recommends keeping this setting as is to be able to recover from unintentional data modifications. This period can also be extended up to 90 days (on Enterprise Edition or higher), but keep in mind that Time Travel incurs additional storage costs. Setting the data retention period to 0 disables Time Travel. The retention period can be set at the account, database, schema, or table level.

After the retention period expires, the data is moved into Snowflake Fail-safe and can no longer be restored by a regular user. Only Snowflake Support can restore data in Fail-safe.

Querying historical data

When any DML operations are performed on a table, Snowflake retains previous versions of the table data for a defined period of time. This enables querying earlier versions of the data using the AT | BEFORE clause. Now let’s see the examples. For the sake of this article, we will create a separate DB in Snowflake, a table and fill it with some seed values. Here we go:
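The original setup script is not reproduced here, so the following is only a sketch of what it could look like. The database name `timetravel_demo`, the table `users`, and the seed rows are all hypothetical.

```sql
-- Hypothetical demo objects; none of these names come from the original article.
CREATE DATABASE IF NOT EXISTS timetravel_demo;
USE DATABASE timetravel_demo;
USE SCHEMA public;

CREATE OR REPLACE TABLE users (
    id   NUMBER,
    name VARCHAR
);

-- Seed values.
INSERT INTO users (id, name) VALUES
    (1, 'Alice'),
    (2, 'Bob'),
    (3, 'Carol'),
    (4, 'Dave');

SELECT * FROM users ORDER BY id;
```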

And we have our seed records. Now let’s add some duplicates.
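Assuming a hypothetical `users` table with `id` and `name` columns, duplicates could be introduced like this:

```sql
-- Insert rows that already exist, creating duplicates (hypothetical table and values).
INSERT INTO users (id, name) VALUES
    (1, 'Alice'),
    (2, 'Bob');
```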

Having duplicates in our table isn’t a good idea, so we can use Time Travel to see how the data looked before the duplicates appeared. As mentioned before, there are a few different methods to do that; let’s check them all.
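A sketch of the OFFSET variant, assuming a hypothetical `users` table that was created more than five minutes ago and received its duplicates less than five minutes ago:

```sql
-- Look at the table as it was 5 minutes ago, before the duplicates were inserted.
SELECT *
FROM users AT(OFFSET => -60*5)
ORDER BY id;
```

If the offset points to a time before the table existed, Snowflake returns an error instead of an empty result, so the offset may need adjusting.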

As you can see we are back to the first version of the data! Next queries will return the same result.

It is also possible to view the data as it was before a change by finding the Query ID of the statement that introduced the change. We can find it on the “Query History” tab in “Activity”.
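As an alternative to the web interface, the query ID can also be looked up with SQL. The sketch below assumes a hypothetical `users` table and that the offending statement was an INSERT into it; `<query_id>` is a placeholder for the ID returned by the first query.

```sql
-- Find recent INSERT statements against the table and their query IDs.
SELECT query_id, query_text, start_time
FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY())
WHERE query_text ILIKE 'INSERT INTO users%'
ORDER BY start_time DESC
LIMIT 5;

-- Query the table as it was just before that statement ran.
SELECT *
FROM users BEFORE(STATEMENT => '<query_id>')
ORDER BY id;
```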

Cloning historical data

There is another feature in Snowflake that is worth investigating: Zero-Copy Cloning. Basically, it creates a copy of a database, schema, or table. A snapshot of the data present in the source object is taken when the clone is created and is made available to the cloned object. The cloned object is writable and independent of the clone source; that is, changes made to either the source object or the clone are not reflected in the other. The cloning is zero-copy, meaning that at the time of clone creation no data is copied and the newly created table references the existing micro-partitions of the source table. This topic deserves an article of its own, so for now a brief intro will do and we will dig deeper later.

Cloning with Time Travel works using the same parameters:
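A sketch of two such clones is shown below. The source table `users` is hypothetical, the clone names follow the `restored_table` naming that appears later in this article, and `<query_id>` is a placeholder.

```sql
-- Clone the table as it was 5 minutes ago.
CREATE TABLE restored_table CLONE users
  AT(OFFSET => -60*5);

-- Clone the table as it was just before a given statement ran.
CREATE TABLE restored_table_2 CLONE users
  BEFORE(STATEMENT => '<query_id>');

SELECT * FROM restored_table ORDER BY id;
```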

The results of SELECTs are the same:

Dropping and Undropping

When a table, schema, or database is dropped, it is not immediately overwritten or removed from the system. Instead, it is retained for the data retention period for the object, during which time the object can be restored.

To drop a table, schema, or database, the following commands are used:

- DROP TABLE

- DROP SCHEMA

- DROP DATABASE

To undrop a table, schema, or database:

- UNDROP TABLE

- UNDROP SCHEMA

- UNDROP DATABASE

This is actually where the fun comes. Let’s start with a simple example and then go into the woods.

Simple example:

The dropped tables can be seen by using the command:
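A minimal sketch of the drop, inspect, and undrop cycle, using the `restored_table` name from this article:

```sql
-- Drop the table.
DROP TABLE restored_table;

-- List tables including dropped ones; dropped tables have a non-NULL dropped_on value.
SHOW TABLES HISTORY LIKE 'restored_table%';

-- Bring the table back.
UNDROP TABLE restored_table;
```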

In the results of SHOW TABLES HISTORY, the column “dropped_on” shows the last time the table was dropped. If the value for the corresponding table in this column is NULL, it means that the table is up and running. Dropping and undropping the same table multiple times will not create additional records in this view; only the timestamp in “dropped_on” will be updated.

But if a table is dropped and then a new table is created with the same name, the UNDROP command will fail, stating that the table exists.

As you can see in the screenshot above there are two tables restored_table_2, but one of them has a timestamp value in the column “dropped_on”. This is because the first time I ran the query to create this table I used the wrong schema, so I ran CREATE OR REPLACE TABLE … command, which actually dropped a wrong table and created a new one with the same name. So if now I try to UNDROP the old table with wrong records, the query will fail as stated earlier.

But it doesn’t mean that the data from the previous table is lost. As we saw earlier, we still can see 2 tables in SHOW TABLES HISTORY. In order to restore the original (or in this case wrong) table, the newly created table has to be renamed:

And now the UNDROP will work.
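The rename-then-undrop sequence can be sketched like this. The new name `restored_table_2_new` is a hypothetical choice.

```sql
-- Move the current table out of the way so the name is free again.
ALTER TABLE restored_table_2 RENAME TO restored_table_2_new;

-- Now the dropped table with the original name can be restored.
UNDROP TABLE restored_table_2;

-- Inspect the recovered rows.
SELECT * FROM restored_table_2;
```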

And we can see the wrong records I added to this table:

Now, I hope you won’t do such a thing, but here we saw what happens if you drop a table and then create a totally new one with the same name: we can still recover the old table. So what happens if you drop this new table and then create yet another one with the same name? Will you be able to recover the data from the oldest table? The answer is yes. It will take some effort, but it is possible. This is what our SHOW TABLES HISTORY looks like right now: we have 5 tables, and all are up and running:

Let’s drop restored_table and create it again using the same command and add a few new records to it:

And now we go further and drop again restored_table and create a new one.

To restore the first table that was dropped we will need to go through a set of renamings. Our original table had 4 rows. Let’s go. Restore the second table.

Restore the original table and rename it to v1
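The chain of renamings can be sketched as follows. It relies on UNDROP restoring the most recently dropped version of a name each time; the `_v1`, `_v2`, and `_v3` suffixes are illustrative.

```sql
-- The live restored_table is the third version; move it aside.
ALTER TABLE restored_table RENAME TO restored_table_v3;

-- Restore the second table (the most recently dropped version) and move it aside.
UNDROP TABLE restored_table;
ALTER TABLE restored_table RENAME TO restored_table_v2;

-- Restore the original table and rename it to v1.
UNDROP TABLE restored_table;
ALTER TABLE restored_table RENAME TO restored_table_v1;

-- All versions should now be listed with dropped_on set to NULL.
SHOW TABLES HISTORY LIKE 'restored_table%';
```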

And here we are, with all the versions of our data restored. As you can see, in Snowflake there is little need to create separate versions of your tables by hand, because you can always go back in time. But be careful with the data retention period: once it has expired, you cannot get your data back that easily.

In the end, Time Travel is a very powerful tool for:

- Restoring data-related objects (tables, schemas, and databases) that might have been accidentally or intentionally deleted.

- Duplicating or backing up data from key points in the past.

- Analyzing data usage/manipulation over specified periods of time.

Hope you find it useful 😉 see ya in the next one 😉

Photo by Maddy Baker on Unsplash



Time Travel in Snowflake

Talati adit anil.

June 01, 2023

Consider a scenario where you accidentally dropped the actual table or, instead of deleting a set of records, you updated all the records present in the table. What will you do? How will you restore data that has already been deleted or altered? You are probably wishing you could go back in time and undo the incorrectly executed statements. Snowflake provides this feature: you can get back the data as it existed at a particular time. This feature of Snowflake is called Time Travel.

Introduction

Snowflake Time Travel is a very important tool that allows users to access Historical Data (i.e. data that has been updated or removed) at any point in time in the past. It is a powerful Continuous Data Protection (CDP) feature that ensures the maintenance and availability of historical data.

Key Features

- Query Optimization: As a user, we should not be concerned about optimizing queries because Snowflake on its own optimizes queries by using Clustering and Partitioning.

- Secure Data Sharing: Using Snowflake Database, Tables, Views, and UDFs, data can be shared securely from one account to another.

- Support for File Formats: Supports almost all file formats: JSON, Avro, ORC, Parquet, and XML are all Semi-Structured data formats that Snowflake can import. Column type — Variant lets the user store Semi-Structured data.

- Caching: Snowflake's caching strategy returns results quickly for repeated queries because it stores query results in a result cache.

- Fault Resistant: In the event of a failure, Snowflake provides exceptional fault-tolerant capabilities to recover tables, views, databases, schemas, and so on.

- To query past data.

- To make clones of complete Tables, Schemas, and Databases at or before certain dates.

- To restore deleted Tables, Schemas, and Databases.

- To restore original data that was updated accidentally.

- To check consumption over a period of time.

- Cloning and Backing up data from previous times.

How to Enable & Disable Time Travel in Snowflake?

Enable Time Travel

No additional configuration is required to enable Time Travel; it is enabled by default, with a one-day retention period. To configure longer data retention periods, however, we need to upgrade to Snowflake Enterprise Edition, where the retention period can be set to a maximum of 90 days. Storage charges increase with the retention period. The below query builds a table with a retention period of 90 days:
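A minimal sketch of such a statement, assuming a hypothetical table emp_history with illustrative columns (neither the name nor the columns come from the original article):

```sql
-- A 90-day retention period requires Enterprise Edition or higher.
CREATE TABLE emp_history (
    id   INTEGER,
    name STRING
)
DATA_RETENTION_TIME_IN_DAYS = 90;
```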

The retention period can also be changed using the ‘alter’ query as below:
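For instance, assuming a hypothetical existing table emp_history and an illustrative value of 30 days:

```sql
-- Change the retention period of an existing table.
ALTER TABLE emp_history SET DATA_RETENTION_TIME_IN_DAYS = 30;
```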

Disable Time Travel

Time Travel cannot be turned off for an account, but it can be turned off for individual databases, schemas, and tables by setting the DATA_RETENTION_TIME_IN_DAYS parameter to 0 using the below query:
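As a sketch, with hypothetical object names (emp_history, my_db, my_schema) standing in for your own:

```sql
-- Disable Time Travel for a single table.
ALTER TABLE emp_history SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- The same parameter can be set on a schema or a database.
ALTER SCHEMA my_db.my_schema SET DATA_RETENTION_TIME_IN_DAYS = 0;
ALTER DATABASE my_db SET DATA_RETENTION_TIME_IN_DAYS = 0;
```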

Query Time Travel Data

Whenever a Data Manipulation Language (DML) statement is executed on a table, Snowflake saves the prior versions of the table data for a period of time determined by the retention period. A previous version of the data can be queried using the AT | BEFORE clause. AT returns the data as of the specified point, including any changes made by a statement or transaction at exactly that point, whereas BEFORE returns the data as it was immediately before the specified point. The following SQL extensions have been added to facilitate Snowflake Time Travel:

- CLONE: To create a logical duplicate of the object at a specific point in its history.

- TIMESTAMP: An exact point in time (date and time) to travel to.

- OFFSET: The time difference from the current time, given in seconds as a negative number.

- STATEMENT: The ID of a statement (query ID) to use as the reference point, for example the DML statement that changed the data.

- UNDROP: If a table is dropped accidentally, it can be restored using the UNDROP command.

The below query generates a Clone of a Table from the given Date and Time as indicated by the Timestamp:
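A minimal sketch, assuming a hypothetical source table emp, a hypothetical target name emp_restored, and an illustrative timestamp that must fall within the retention period:

```sql
-- Clone the table as it existed at the given date and time.
CREATE TABLE emp_restored CLONE emp
  AT (TIMESTAMP => '2023-05-30 09:00:00'::TIMESTAMP_LTZ);
```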

The below query creates a Clone of a Schema and all its Objects as they were an hour ago:
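For instance, with hypothetical schema names my_schema and restored_schema:

```sql
-- -3600 seconds = one hour before the current time.
CREATE SCHEMA restored_schema CLONE my_schema
  AT (OFFSET => -3600);
```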

The below query pulls Historical Data from a Table from a given Timestamp:
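A sketch of such a query, assuming a hypothetical table emp and an illustrative timestamp:

```sql
-- Read the table as it existed at the given date and time.
SELECT *
FROM emp
  AT (TIMESTAMP => '2023-05-30 09:00:00'::TIMESTAMP_LTZ);
```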

The below query pulls Historical Data from a Table as it was 5 minutes ago:
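As a sketch, assuming a hypothetical table emp:

```sql
-- The offset is expressed in seconds: -60*5 is five minutes before now.
SELECT *
FROM emp
  AT (OFFSET => -60*5);
```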

The below query collects Historical Data from a Table up to, but not including, the changes made by the given statement (Statement ID):
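A sketch with a hypothetical table emp; the statement ID shown is only a placeholder and must be replaced with a real query ID from your own query history:

```sql
-- Read the table as it was immediately before the given statement ran.
SELECT *
FROM emp
  BEFORE (STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');
```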

The below query is used to restore Database EMP:
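Assuming the database EMP was dropped within its retention period and no other database currently uses that name, the statement is simply:

```sql
UNDROP DATABASE EMP;
```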

The following graphic from the Snowflake documentation summarizes all the above points visually:

Data Retention

Snowflake preserves the previous state of the data when DML operations are performed. By default, all Snowflake accounts have a standard retention duration of one day which is automatically enabled.

- For Snowflake Standard Edition, the Retention Period can be adjusted to 0 from default 1 day for all objects (Temporary & Permanent).

- Snowflake Enterprise Edition (or higher) gives more flexibility: the retention period for permanent Databases, Schemas, and Tables can be set to any value between 0 and 90 days, whereas for temporary objects it can only be set to 0 from the default of 1 day.

The below query sets a retention period of 90 days while creating the table:
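A minimal sketch, assuming a hypothetical table orders_archive with illustrative columns; the second statement is one way to check the value that was applied:

```sql
-- Set the retention period at creation time (Enterprise Edition or higher for 90 days).
CREATE TABLE orders_archive (
    order_id   INTEGER,
    order_date DATE
)
DATA_RETENTION_TIME_IN_DAYS = 90;

-- Inspect the retention parameter on the new table.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE orders_archive;
```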

Snowflake provides another feature called Fail-safe, which protects historical data in case of a failure. Fail-safe provides a period of 7 days, beginning when the Time Travel retention period ends, during which historical data may still be recovered by Snowflake. Recovering data through Fail-safe can take from several hours to several days, and it involves cost.

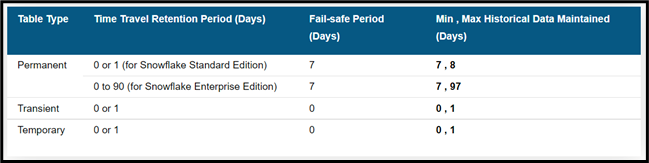

The number of days historical data is maintained is based on the table type and the Fail-safe period for the table. Transient and temporary tables have no Fail-safe period.

Storage fees are incurred for maintaining historical data during both the Time Travel and Fail-safe periods. The fees are calculated for each 24 hours (i.e. 1 day) from the time the data changed. The number of days historical data is maintained is based on the table type and retention period set for the table.

Snowflake minimizes the amount of storage required for historical data by maintaining only the information required to restore the individual table rows that were updated or deleted. As a result, storage usage is calculated as a percentage of the table that changed. In most cases, Snowflake does not keep a full copy of the data. Full copies of tables are maintained only when tables are dropped or truncated.

Temporary and Transient Tables

To manage the storage costs Snowflake provides two table types: TEMPORARY & TRANSIENT, which do not incur the same fees as standard (i.e. permanent) tables:

- Transient tables can have a Time Travel retention period of either 0 or 1 day.

- Temporary tables can also have a Time Travel retention period of 0 or 1 day; however, this retention period ends as soon as the table is dropped or the session in which the table was created ends.

- Transient and temporary tables have no Fail-safe period.

- The maximum additional fees incurred for Time Travel and Fail-safe by these types of tables are limited to 1 day.

The above table illustrates the different scenarios, based on table type.


Snowflake Time Travel is a powerful feature that enables users to examine data usage and manipulations over a specific period of time. Time Travel queries are ordinary SQL statements extended with the AT | BEFORE clause, so they are easy to understand and execute. Users can restore deleted objects, make duplicates, make a Snowflake backup, and recover historical data.


CREATE <object> … CLONE

Creates a copy of an existing object in the system. This command is primarily used for creating zero-copy clones of databases, schemas, and tables; however, it can also be used to quickly and easily create clones of other schema objects, such as external stages, file formats, and sequences, as well as database roles.

The command is a variation of the object-specific CREATE <object> commands with the addition of the CLONE keyword.

Clone objects using Time Travel

For databases, schemas, and non-temporary tables, CLONE supports an additional AT | BEFORE clause for cloning using Time Travel.

For databases and schemas, CLONE supports the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter to skip any tables that have been purged from Time Travel (for example, transient tables with a one day data retention period).

Time Travel parameters

The AT | BEFORE clause accepts one of the following parameters:

TIMESTAMP => timestamp

Specifies an exact date and time to use for Time Travel. The value must be explicitly cast to a TIMESTAMP.

OFFSET => time_difference

Specifies the difference in seconds from the current time to use for Time Travel, in the form -N where N can be an integer or arithmetic expression (e.g. -120 is 120 seconds, -30*60 is 1800 seconds or 30 minutes).

STATEMENT => id

Specifies the query ID of a statement to use as the reference point for Time Travel. This parameter supports any statement of one of the following types:

DML (e.g. INSERT, UPDATE, DELETE)

TCL (BEGIN, COMMIT transaction)

The query ID must reference a query that has been executed within the last 14 days. If the query ID references a query over 14 days old, the following error is returned:

To work around this limitation, use the timestamp for the referenced query.
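A rough sketch of that workaround is shown below. It assumes your role can read the SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY view, reuses a placeholder query ID, and uses an illustrative timestamp in place of the value returned by the first query; the start time only approximates the point that the STATEMENT parameter would have used:

```sql
-- Look up when the referenced statement ran.
SELECT query_id, start_time
FROM snowflake.account_usage.query_history
WHERE query_id = '8e5d0ca9-005e-44e6-b858-a8f5b37c5726';

-- Use the returned start time (illustrative value here) instead of the query ID.
CREATE TABLE orders_clone_restore CLONE orders
  BEFORE (TIMESTAMP => '2024-05-01 12:00:00'::TIMESTAMP_LTZ);
```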

IGNORE TABLES WITH INSUFFICIENT DATA RETENTION

Ignore tables that no longer have historical data available in Time Travel to clone. If the time in the past specified in the AT | BEFORE clause is beyond the data retention period for any child table in a database or schema, skip the cloning operation for the child table. For more information, see Child Objects and Data Retention Time.

General Usage Notes

A clone is writable and is independent of its source (i.e. changes made to the source or clone are not reflected in the other object).

Parameters that are explicitly set on a source database, schema, or table are retained in any clones created from the source container or child objects.

To create a clone, your current role must have the following privilege(s) on the source object:

OWNERSHIP on the database role and the CREATE DATABASE ROLE privilege on the target database.

If you specify the WITH MANAGED ACCESS clause, the required privileges depend on whether the source schema is a managed or unmanaged schema. For details, see CREATE SCHEMA privileges.

In addition, to clone a schema or an object within a schema, your current role must have required privileges on the container object(s) for both the source and the clone.

For database roles:

A database role is cloned when you run the CREATE DATABASE … CLONE command to clone a database. However, if you clone other database objects, such as a schema or table, database roles in the database are not cloned with the schema or table.

If the database role is already cloned to the target database, the command fails. If this occurs, drop the database role from the target database and try the CLONE command again.

For databases and schemas, cloning is recursive:

Cloning a database clones all the schemas and other objects in the database.

Cloning a schema clones all the contained objects in the schema.

However, the following object types are not cloned:

External tables

Internal (Snowflake) stages

For databases, schemas, and tables, a clone does not contribute to the overall data storage for the object until operations are performed on the clone that modify existing data or add new data, such as:

Adding, deleting, or modifying rows in a cloned table.

Creating a new, populated table in a cloned schema.

Cloning a table replicates the structure, data, and certain other properties (e.g. STAGE FILE FORMAT) of the source table.

A cloned table does not include the load history of the source table. One consequence of this is that data files that were loaded into a source table can be loaded again into its clones.

Although a cloned table replicates the source table’s clustering keys, the new table starts with Automatic Clustering suspended – even if Automatic Clustering is not suspended for the source table.

The CREATE TABLE … CLONE and CREATE EVENT TABLE … CLONE syntax includes the COPY GRANTS keywords, which affect a new table clone as follows:

If the COPY GRANTS keywords are used, then the new object inherits any explicit access privileges granted on the original table but does not inherit any future grants defined for the object type in the schema.

If the COPY GRANTS keywords are not used, then the new object clone does not inherit any explicit access privileges granted on the original table but does inherit any future grants defined for the object type in the schema (using the GRANT <privileges> … ON FUTURE syntax).

If the statement is replacing an existing table of the same name, then the grants are copied from the table being replaced. If there is no existing table of that name, then the grants are copied from the source table being cloned.

Regarding metadata:

Customers should ensure that no personal data (other than for a User object), sensitive data, export-controlled data, or other regulated data is entered as metadata when using the Snowflake service. For more information, see Metadata Fields in Snowflake.

CREATE OR REPLACE <object> statements are atomic. That is, when an object is replaced, the old object is deleted and the new object is created in a single transaction.

Additional Rules that Apply to Cloning Objects

An object clone inherits the name and structure of the source object current at the time the CREATE <object> CLONE statement is executed or at a specified time/point in the past using Time Travel. An object clone inherits any other metadata, such as comments or table clustering keys, that is current in the source object at the time the statement is executed, regardless of whether Time Travel is used.

A database or schema clone includes all child objects active at the time the statement is executed or at the specified time/point in the past. A snapshot of the table data represents the state of the source data when the statement is executed or at the specified time/point in the past. Child objects inherit the name and structure of the source child objects at the time the statement is executed.

Cloning a database or schema does not clone objects of the following types in the database or schema:

A database or schema clone includes only pipe objects that reference external (Amazon S3, Google Cloud Storage, or Microsoft Azure) stages; internal (Snowflake) pipes are not cloned.

The default state of a pipe clone is as follows:

When AUTO_INGEST = FALSE, a cloned pipe is paused by default.

When AUTO_INGEST = TRUE, a cloned pipe is set to the STOPPED_CLONED state. In this state, pipes do not accumulate event notifications as a result of newly staged files. When a pipe is explicitly resumed, it only processes data files triggered as a result of new event notifications.

A pipe clone in either state can be resumed by executing an ALTER PIPE … RESUME statement.

Cloning a database or schema affects tags in that database or schema as follows:

Tag associations in the source object (e.g. table) are maintained in the cloned objects.

For a database or a schema:

The tags stored in that database or schema are also cloned.

When a database or schema is cloned, tags that reside in that schema or database are also cloned.

If a table or view exists in the source schema/database and has references to tags in the same schema or database, the cloned table or view is mapped to the corresponding cloned tag (in the target schema/database) instead of the tag in the source schema or database.

A Java UDF can be cloned when the database or schema containing the Java UDF is cloned. To be cloned, the Java UDF must meet certain conditions. For more information, see Limitations on Cloning.

When cloning a database, schema, or table, a snapshot of the data in each table is taken and made available to the clone. The snapshot represents the state of the source data either at the time the statement is executed or at the specified time/point in the past (using Time Travel).

Objects such as views, streams, and tasks include object references in their definition. For example:

A view contains a stored query that includes table references.

A stream points to a source table.

A task or alert calls a stored procedure or executes a SQL statement that references other objects.

When one of these objects is cloned, either in a cloned database or schema or as an individual object, for those object types that support cloning, the clone inherits references to other objects from the definition of the source object. For example, a clone of a view inherits the stored query from the source view, including the table references in the query.

Pay close attention to whether any object names in the definition of a source object are fully or partially qualified. A fully-qualified name includes the database and schema names. Any clone of the source object includes these parts in its own definition.

For example:
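A minimal sketch of this behavior, using hypothetical names (db1, db2, sch1, orders, v_orders) that do not come from the original text:

```sql
-- The view definition uses a fully-qualified table name.
CREATE VIEW db1.sch1.v_orders AS
  SELECT * FROM db1.sch1.orders;

-- Clone the whole database, including the view.
CREATE DATABASE db2 CLONE db1;

-- db2.sch1.v_orders keeps the stored query as written, so it still reads
-- from db1.sch1.orders rather than from db2.sch1.orders.
```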

If you intend to point a view to tables with the same names in other databases or schemas, we suggest creating a new view rather than cloning an existing view. This guidance also pertains to other objects that reference objects in their definition.

Certain limitations apply to cloning operations. For example, DDL statements that affect the source object during a cloning operation can alter the outcome or cause errors.

Cloning is not instantaneous, particularly for large objects (databases, schemas, tables), and does not lock the object being cloned. As such, a clone does not reflect any DML statements applied to table data, if applicable, while the cloning operation is still running.

For more information about this and other use cases that might affect your cloning operations, see Cloning considerations.

Notes for Cloning with Time Travel (Databases, Schemas, Tables, and Event Tables Only)

The AT | BEFORE clause clones a database, schema, or table as of a specified time in the past or based on a specified SQL statement:

The AT keyword specifies that the request is inclusive of any changes made by a statement or transaction with timestamp equal to the specified parameter. The BEFORE keyword specifies that the request refers to a point immediately preceding the specified parameter.

Cloning using STATEMENT is equivalent to using TIMESTAMP with a value equal to the recorded execution time of the SQL statement (or its enclosing transaction), as identified by the specified statement ID.

An error is returned if:

The object being cloned did not exist at the point in the past specified in the AT | BEFORE clause.

The historical data required to clone the object or any of its child objects (e.g. tables in cloned schemas or database) has been purged.

As a workaround for child objects that have been purged from Time Travel, use the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter of the CREATE <object> … CLONE command. For more information, see Child objects and data retention time.

If any child object in a cloned database or schema did not exist at the point in the past specified in the AT | BEFORE clause, the child object is not cloned.

For more information, see Understanding & Using Time Travel.

Troubleshoot cloning objects using Time Travel

The following scenarios can help you troubleshoot issues that can occur when cloning an object using Time Travel.

This error can be returned for the following reasons:

Clone a database and all objects within the database at its current state:

CREATE DATABASE mytestdb_clone CLONE mytestdb;

Clone a schema and all objects within the schema at its current state:

CREATE SCHEMA mytestschema_clone CLONE testschema;

Clone a table at its current state:

CREATE TABLE orders_clone CLONE orders;

Clone a schema as it existed before the date and time in the specified timestamp:

CREATE SCHEMA mytestschema_clone_restore CLONE testschema BEFORE (TIMESTAMP => TO_TIMESTAMP(40 * 365 * 86400));

Clone a table as it existed exactly at the date and time of the specified timestamp:

CREATE TABLE orders_clone_restore CLONE orders AT (TIMESTAMP => TO_TIMESTAMP_TZ('04/05/2013 01:02:03', 'mm/dd/yyyy hh24:mi:ss'));

Clone a table as it existed immediately before the execution of the specified statement (i.e. query ID):

CREATE TABLE orders_clone_restore CLONE orders BEFORE (STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

Clone a database and all its objects as they existed four days ago and skip any tables that have a data retention period of less than four days:

CREATE DATABASE restored_db CLONE my_db AT (TIMESTAMP => DATEADD(days, -4, current_timestamp)::timestamp_tz) IGNORE TABLES WITH INSUFFICIENT DATA RETENTION;


What is Snowflake Time Travel?

Snowflake Time Travel allows you to access previous snapshots of your data objects, such as tables, schemas, and databases. You can access these snapshots at any point within the specified data retention period.

Time Travel in Snowflake, combined with the UNDROP SQL command, helps you recover from unintended, accidental operations on the database, such as DELETE FROM, TRUNCATE TABLE, or DROP TABLE commands.

In this article, we're going to kick things off by diving into Snowflake's Time Travel functionality and see how pairing it with the CLONE command can help us recover dropped objects. We'll also explore setting the data retention period for data objects, what Fail-safe is, and how it works. In the end, we will explore how we can extend these concepts to code, by coupling code and data together in a stateful data system. We'll do so just by using Git, so you can safely restore any previous snapshot of your objects from your data warehouse based on a Git commit.

How to restore a dropped object?

If you're anxiously Googling because you dropped your production table: you can use the Snowflake UNDROP TABLE command. Calling UNDROP restores the most recent version of the table, as it was just before the DROP command was issued:
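As a sketch, with my_table standing in for the name of the dropped table:

```sql
-- Restores the most recently dropped table with this name in the current schema.
UNDROP TABLE my_table;
```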

You can call UNDROP on schema or database objects as well:
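For instance, using the hypothetical names my_schema and my_database:

```sql
UNDROP SCHEMA my_schema;

UNDROP DATABASE my_database;
```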

Viewing dropped objects

The SHOW TABLES Snowflake command lists all current tables within a schema. To also see the dropped variations of a table, you can use the SHOW TABLES HISTORY command. This command also works with schemas or database objects:
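A short sketch of the three variants, assuming hypothetical names my_db and my_schema for the schema-scoped form:

```sql
-- Current and dropped tables in a given schema.
SHOW TABLES HISTORY IN SCHEMA my_db.my_schema;

-- The same HISTORY keyword works for schemas and databases.
SHOW SCHEMAS HISTORY;
SHOW DATABASES HISTORY;
```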

The output of these commands includes all dropped objects, along with an additional DROPPED_ON column that indicates the timestamp when each object was dropped. If an object was dropped more than once, the output will list each instance of the object as a separate row.

It's important to note that while tables, schemas, and databases can be restored in Snowflake, views dropped through a DROP VIEW command cannot be restored and must be recreated. To prevent the loss of your view objects, we recommend saving the definition of your views in a version control system like Git.

Time Travel syntax

To query previous versions of an object, Snowflake provides several methods, including:

- AT(TIMESTAMP => '<TIMESTAMP>')

- AT(OFFSET => -<NUMBER_OF_SECONDS>)

- BEFORE(STATEMENT => '<STATEMENT_ID>')

You can find the STATEMENT_ID of a particular query from the Query History tab.

For example, to retrieve data from 10 minutes ago, you can use:
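A minimal sketch, assuming a hypothetical table my_table:

```sql
-- The offset is in seconds: -60*10 is ten minutes before the current time.
SELECT *
FROM my_table
  AT (OFFSET => -60*10);
```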

You can also calculate the TIMESTAMP for a specific point in the past using SQL timestamp functions. For instance, to access data from 10 minutes ago, you can use:
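One way to write this, again assuming a hypothetical table my_table; the explicit cast is added because the TIMESTAMP parameter expects a timestamp value:

```sql
SELECT *
FROM my_table
  AT (TIMESTAMP => DATEADD(minute, -10, CURRENT_TIMESTAMP())::TIMESTAMP_LTZ);
```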

To use the BEFORE(STATEMENT => '<STATEMENT_ID>') method for querying a previous version of an object before a specific operation, you can retrieve the STATEMENT_ID from the Query History view of that particular query that changed the state of the object. This functionality is particularly useful for pinpointing and restoring data to its state prior to a specific change or operation.

Cloning past snapshots of an object

You can materialize any previous snapshot in the database by using the CLONE keyword in the CREATE command. For example:
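As a sketch, with hypothetical names my_table and my_table_restored and an illustrative ten-minute offset:

```sql
-- Create a new table from the state my_table had ten minutes ago.
CREATE TABLE my_table_restored CLONE my_table
  AT (OFFSET => -60*10);
```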

This feature is especially useful after running a TRUNCATE TABLE or DELETE statement (e.g., DELETE FROM my_table WHERE <condition>).

Similarly, you can restore schemas or databases using the CLONE command:
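A sketch with hypothetical object names and an illustrative one-hour offset:

```sql
-- Clone a schema and all of its objects as they were one hour ago.
CREATE SCHEMA my_schema_restored CLONE my_schema
  AT (OFFSET => -3600);

-- Clone a database and all of its objects as they were one hour ago.
CREATE DATABASE my_db_restored CLONE my_db
  AT (OFFSET => -3600);
```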

The command might fail for databases and schemas if they contain child objects (schemas or tables) whose Time Travel retention period has expired. To mitigate this issue, use the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter:
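For instance, assuming hypothetical databases my_db and my_db_restored and an illustrative four-day look-back:

```sql
-- Tables whose retention period is shorter than four days are skipped
-- instead of causing the whole clone to fail.
CREATE DATABASE my_db_restored CLONE my_db
  AT (TIMESTAMP => DATEADD(days, -4, CURRENT_TIMESTAMP())::TIMESTAMP_TZ)
  IGNORE TABLES WITH INSUFFICIENT DATA RETENTION;
```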

Data retention period

The standard retention period is 1 day and is automatically applied to all objects within an account. For Snowflake Enterprise or higher tiers, this retention period can be increased up to 90 days (excluding transient or temporary tables). To modify the retention period of an object, the DATA_RETENTION_TIME_IN_DAYS configuration can be used:
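A minimal sketch, assuming hypothetical objects my_table and my_schema and illustrative values (values above 1 require Enterprise Edition or higher):

```sql
-- Change the retention period of an existing table.
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- The same parameter can be set on a schema, where child objects
-- without an explicit value inherit it.
ALTER SCHEMA my_schema SET DATA_RETENTION_TIME_IN_DAYS = 14;
```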

Only users with the ACCOUNTADMIN role can specify the DATA_RETENTION_TIME_IN_DAYS at the account level, which will then act as the default value for all objects within the account.

A user with the ACCOUNTADMIN role can also set a minimum data retention time at the account level with the MIN_DATA_RETENTION_TIME_IN_DAYS parameter. This setting enforces a minimum data retention period across all databases, schemas, and tables within the account. When MIN_DATA_RETENTION_TIME_IN_DAYS is configured at the account level, the data retention period for an object within the account becomes the maximum of DATA_RETENTION_TIME_IN_DAYS and MIN_DATA_RETENTION_TIME_IN_DAYS parameters.
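As a sketch of how the two parameters interact, assuming a hypothetical table my_table and illustrative values (the account-level statement requires the ACCOUNTADMIN role, as stated above):

```sql
-- Enforce at least 7 days of Time Travel across the account.
ALTER ACCOUNT SET MIN_DATA_RETENTION_TIME_IN_DAYS = 7;

-- A table explicitly configured with a shorter period...
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- ...still gets an effective retention of MAX(1, 7) = 7 days.
```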

To override the account default when creating a new table, schema, or database, include the DATA_RETENTION_TIME_IN_DAYS parameter in the creation command:
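For instance, with hypothetical names, illustrative columns, and illustrative values:

```sql
CREATE DATABASE my_db DATA_RETENTION_TIME_IN_DAYS = 30;

CREATE SCHEMA my_db.my_schema DATA_RETENTION_TIME_IN_DAYS = 14;

CREATE TABLE my_db.my_schema.my_table (
    id INTEGER
)
DATA_RETENTION_TIME_IN_DAYS = 7;
```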

It's also possible to disable Time Travel for an object by setting the retention period to 0:
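As a sketch, assuming a hypothetical table my_table:

```sql
-- With a retention period of 0, no historical data is kept for this table.
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 0;
```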

Once an object's retention period comes to an end, its historical data enters the Snowflake Fail-safe phase. During this phase:

- You won't be able to query historical data anymore.

- Cloning past objects is off the table.

- Restoring objects that were previously dropped becomes impossible.

Fail-safe acts as a safety net, offering a fixed 7-day window where Snowflake might still be able to recover historical data. This critical period kicks in right after the Time Travel retention period ends, giving you one last chance at data recovery, albeit with Snowflake's assistance and under specific conditions. You can read more about the steps required here.

Time Travel example

Here's a Snowflake Time Travel query you can use to restore a previous version of a table where some rows were accidentally updated and deleted:
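The original statements are not reproduced here, so the following is only a sketch of a setup consistent with the output shown next. The table name my_table and its columns id and id_squared are assumptions:

```sql
-- Hypothetical starting point: five rows with id and its square.
CREATE OR REPLACE TABLE my_table (id INTEGER, id_squared INTEGER);

INSERT INTO my_table (id, id_squared)
VALUES (0, 0), (1, 1), (2, 4), (3, 9), (4, 16);

SELECT id_squared FROM my_table ORDER BY id;
```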

Output: id_squared: [0, 1, 4, 9, 16]
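An accidental statement that would lead to the output shown next could look like the sketch below, assuming a hypothetical table my_table with columns id and id_squared:

```sql
-- The WHERE clause was forgotten, so every row is overwritten.
UPDATE my_table SET id_squared = 1;

SELECT id_squared FROM my_table ORDER BY id;
```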

Output: id_squared: [1, 1, 1, 1, 1]

You'll need to find the QUERY_ID of the UPDATE statement from the QUERY HISTORY view:
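One possible way to do this, assuming a hypothetical table my_table and using the INFORMATION_SCHEMA.QUERY_HISTORY table function; '<query_id>' is a placeholder for the value returned by the first query:

```sql
-- Find the most recent UPDATE against the table.
SELECT query_id, query_text, start_time
FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY())
WHERE query_text ILIKE 'UPDATE my_table%'
ORDER BY start_time DESC
LIMIT 1;

-- Clone the table as it was immediately before that statement ran.
CREATE TABLE my_table_restored CLONE my_table
  BEFORE (STATEMENT => '<query_id>');
```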

Once you have the snapshot of your table cloned, you can proceed to swap the two tables if needed:
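A sketch of the swap, assuming the hypothetical names my_table (damaged) and my_table_restored (cloned snapshot):

```sql
-- Exchange the two tables in a single statement: my_table now holds the
-- restored data and my_table_restored holds the damaged version.
ALTER TABLE my_table SWAP WITH my_table_restored;
```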

Here's another Snowflake Time Travel example for when a table is accidentally dropped or renamed:
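A minimal sketch of the dropped-table case, assuming a hypothetical table my_table; an accidental rename needs no Time Travel at all and can be reverted with a second ALTER TABLE … RENAME TO statement:

```sql
-- The accident.
DROP TABLE my_table;

-- The fix, as long as the retention period has not expired.
UNDROP TABLE my_table;
```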

In these examples, Time Travel allows you to "go back in time" and to correct mistakes such as accidental updates, deletions, or even structural changes to your tables.

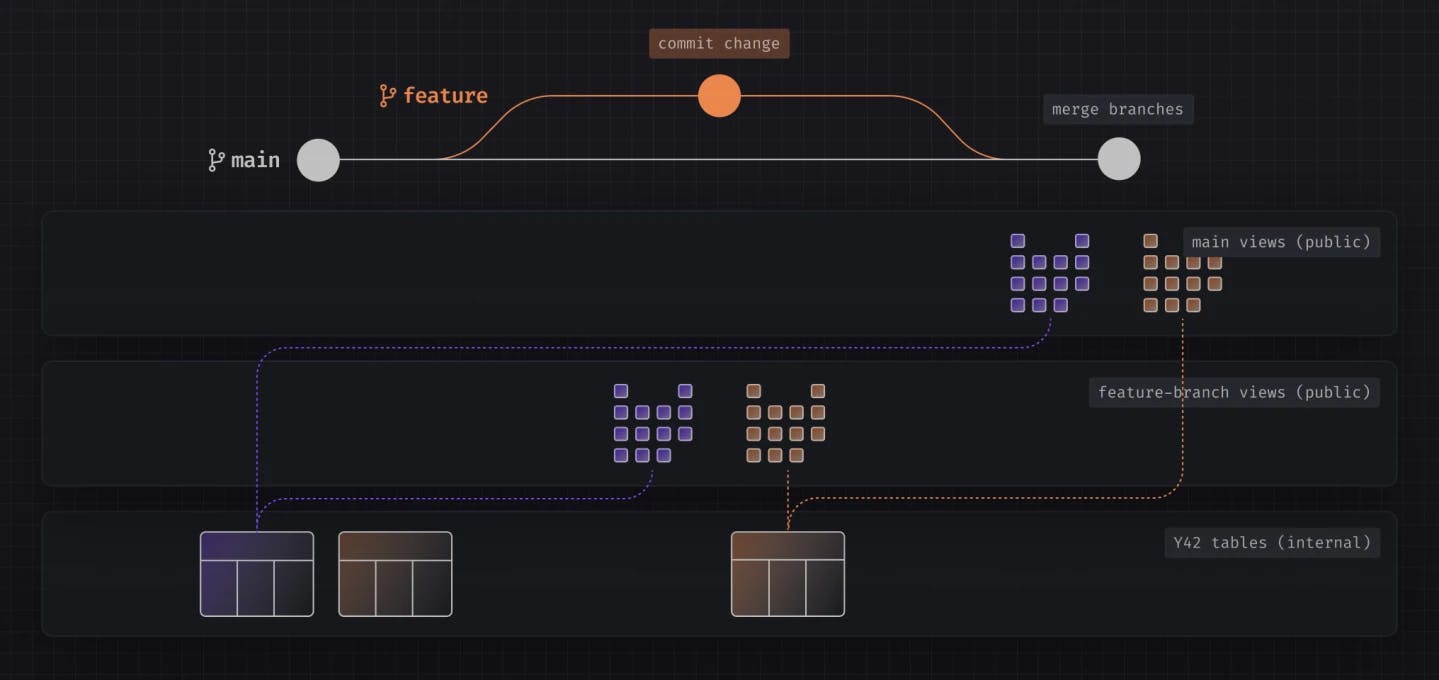

Snowflake Time Travel alternative: Git-like version control for data

Time Travel is a powerful feature for restoring data objects based on a timestamp value or statement ID. In real-world scenarios, it might be difficult to determine which statement or timestamp you want to revert your schema to. Multiple people can issue statements concurrently on the same schema, or even on the same table, in a short timeframe. This complicates Time Travel.

A better solution would be to couple code and data together, restoring the state of your schema based on a commit ID. Y42 provides such a stateful solution that allows you to version control code and manage both data and code together using just Git. This means if you want to roll back your database to a specific version of your code, the data from that point in time is also automatically restored alongside your code changes. This is achieved by maintaining two separate layers in the database, with pointers to all previous snapshots of an object attached to individual commit IDs.

If you want to learn more about what powers this mechanism, you can read about Virtual Data Builds here.

Conclusions

Snowflake offers robust tools to protect your data objects against accidental operations. I still remember the days when you had to perform daily backups, and restoring such a backup would take minutes or hours, depending on the S3 storage class the backup resided in. Then, you'd have to backfill the missing data from when the backup was taken to the current timestamp. Snowflake Time Travel puts all that in the rearview mirror with simple, intuitive SQL commands that allow restoring objects from any time (within a specific timeframe). However, it does so without the ability to tie the object's version from the database to a change in your codebase that caused it. This is where Y42 comes into play. Y42 enables you to maintain your data warehouse and codebase in sync at all times. With Y42, you can deploy or revert code changes, and the state of the data warehouse is automatically synced in a frictionless, zero-copy operation, empowering everyone to make changes to their production system with confidence.

