
WebCodecs is a flexible web API for encoding and decoding audio and video.

w3c/webcodecs


The WebCodecs API allows web applications to encode and decode audio and video.

Many Web APIs use media codecs internally to support APIs for particular uses:

- HTMLMediaElement and Media Source Extensions

- WebAudio (decodeAudioData)

- MediaRecorder

But there’s no general way to flexibly configure and use these media codecs. Because of this, many web applications have resorted to implementing media codecs in JavaScript or WebAssembly, despite the disadvantages:

- Increased bandwidth to download codecs already in the browser.

- Reduced performance

- Reduced power efficiency

WebCodecs is great for:

- Live streaming

- Cloud gaming

- Media file editing and transcoding

See the explainer for more info.

Code samples

Please see https://w3c.github.io/webcodecs/samples/

WebCodecs registries

This repository also contains two registries:

The WebCodecs Codec Registry provides the means to identify and avoid collisions among codec strings used in WebCodecs and provides a mechanism to define codec-specific members of WebCodecs codec configuration dictionaries. Codec-specific registrations entered in the registry are also maintained in the repository. Please refer to the registry for a comprehensive list.

The WebCodecs VideoFrame Metadata Registry enumerates the metadata fields that can be attached to VideoFrame objects via the VideoFrameMetadata dictionary. Metadata registrations entered in the registry may be maintained in this repository or elsewhere. Please refer to the registry for a comprehensive list.


WebCodecs API

Video decoding and display

Demuxes (using mp4box.js) and decodes an mp4 file, paints to canvas ASAP

Audio And Video Player

Demuxes, decodes, and renders synchronized audio and video.

Animated GIF Renderer

Using ImageDecoder to implement an animated GIF renderer.

Capture To File

Reading from camera, encoding via WebCodecs, and creating a WebM file on disk.

WebCodecs in Worker

Capture from camera, encode and decode in a worker


Video processing with WebCodecs

Manipulating video stream components.

Modern web technologies provide ample ways to work with video. Media Stream API, Media Recording API, Media Source API, and WebRTC API add up to a rich tool set for recording, transferring, and playing video streams. While solving certain high-level tasks, these APIs don't let web programmers work with individual components of a video stream such as frames and unmuxed chunks of encoded video or audio. To get low-level access to these basic components, developers have been using WebAssembly to bring video and audio codecs into the browser. But given that modern browsers already ship with a variety of codecs (which are often accelerated by hardware), repackaging them as WebAssembly seems like a waste of human and computer resources.

WebCodecs API eliminates this inefficiency by giving programmers a way to use media components that are already present in the browser. Specifically:

- Video and audio decoders

- Video and audio encoders

- Raw video frames

- Image decoders

The WebCodecs API is useful for web applications that require full control over the way media content is processed, such as video editors, video conferencing, video streaming, etc.

Video processing workflow

Frames are the centerpiece in video processing. Thus in WebCodecs most classes either consume or produce frames. Video encoders convert frames into encoded chunks. Video decoders do the opposite.

VideoFrame also plays nicely with other Web APIs by being a CanvasImageSource and having a constructor that accepts a CanvasImageSource. It can therefore be used in functions like drawImage() and texImage2D(), and it can be constructed from canvases, bitmaps, video elements, and other video frames.

The WebCodecs API works well in tandem with the classes from the Insertable Streams API, which connect WebCodecs to media stream tracks.

- MediaStreamTrackProcessor breaks media tracks into individual frames.

- MediaStreamTrackGenerator creates a media track from a stream of frames.

WebCodecs and web workers

By design WebCodecs API does all the heavy lifting asynchronously and off the main thread. But since frame and chunk callbacks can often be called multiple times a second, they might clutter the main thread and thus make the website less responsive. Therefore it is preferable to move handling of individual frames and encoded chunks into a web worker.

To help with that, ReadableStream provides a convenient way to automatically transfer all frames coming from a media track to the worker. For example, MediaStreamTrackProcessor can be used to obtain a ReadableStream for a media stream track coming from the web camera. The stream is then transferred to a web worker, where frames are read one by one and queued into a VideoEncoder.

With HTMLCanvasElement.transferControlToOffscreen even rendering can be done off the main thread. And if the high-level tools turn out to be inconvenient, VideoFrame itself is transferable and may be moved between workers.

WebCodecs in action

It all starts with a VideoFrame. There are three ways to construct video frames:

- From an image source like a canvas, an image bitmap, or a video element.

- With MediaStreamTrackProcessor, to pull frames from a MediaStreamTrack.

- From the frame's binary pixel representation in a BufferSource.
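As a rough illustration of the BufferSource route, the sketch below builds a solid-color frame from raw RGBA bytes. The helper names (rgbaByteLength, makeSolidFrame) and the injectable VideoFrameCtor parameter are assumptions made for this example; in a browser the default is the real VideoFrame constructor.

```javascript
// Bytes needed for a tightly packed RGBA image (4 bytes per pixel).
function rgbaByteLength(width, height) {
  return width * height * 4;
}

// Builds a solid-color frame from raw RGBA pixels.
// VideoFrameCtor is a hypothetical injection point so the helper can be
// exercised outside a browser; by default it is the WebCodecs VideoFrame.
function makeSolidFrame(width, height, rgba, timestampUs,
                        VideoFrameCtor = globalThis.VideoFrame) {
  const pixels = new Uint8Array(rgbaByteLength(width, height));
  for (let i = 0; i < pixels.length; i += 4) {
    pixels.set(rgba, i); // copy [r, g, b, a] into each pixel slot
  }
  return new VideoFrameCtor(pixels, {
    format: "RGBA",
    codedWidth: width,
    codedHeight: height,
    timestamp: timestampUs, // microseconds
  });
}
```

In a browser, `makeSolidFrame(320, 240, [255, 0, 0, 255], 0)` would return a red frame. From an image source the equivalent is `new VideoFrame(canvas, { timestamp: 0 })`. Either way, the frame should be released with close() once it has been used.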

No matter where they are coming from, frames can be encoded into EncodedVideoChunk objects with a VideoEncoder.

Before encoding, VideoEncoder needs to be given two JavaScript objects:

- Init dictionary with two functions for handling encoded chunks and errors. These functions are developer-defined and can't be changed after they're passed to the VideoEncoder constructor.

- Encoder configuration object, which contains parameters for the output video stream. You can change these parameters later by calling configure().

The configure() method will throw NotSupportedError if the config is not supported by the browser. You are encouraged to call the static method VideoEncoder.isConfigSupported() with the config to check beforehand whether the config is supported and wait for its promise.
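The setup described above could look roughly like the following sketch. The configuration values, the createEncoder helper, and its injectable VideoEncoderCtor parameter are illustrative assumptions; only the `output`/`error` init keys, configure(), and the static isConfigSupported() come from the API itself.

```javascript
// Example configuration; the values are illustrative, not recommendations.
const encoderConfig = {
  codec: "vp8",
  width: 640,
  height: 480,
  bitrate: 2_000_000, // bits per second
  framerate: 30,
};

// Checks support first, then creates and configures the encoder.
// Resolves to null when the browser reports the config as unsupported.
async function createEncoder(config, onChunk, onError,
                             VideoEncoderCtor = globalThis.VideoEncoder) {
  const { supported } = await VideoEncoderCtor.isConfigSupported(config);
  if (!supported) return null;

  const encoder = new VideoEncoderCtor({
    output: onChunk, // called with each EncodedVideoChunk
    error: onError,  // called when the codec implementation fails
  });
  encoder.configure(config);
  return encoder;
}
```

Checking support before constructing the encoder keeps the unsupported case on an ordinary code path instead of an error path.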

After the encoder has been set up, it's ready to accept frames via the encode() method. Both configure() and encode() return immediately without waiting for the actual work to complete. This allows several frames to queue for encoding at the same time, while encodeQueueSize shows how many requests are waiting in the queue for previous encodes to finish. Errors are reported either by immediately throwing an exception, when the arguments or the order of method calls violates the API contract, or by calling the error() callback for problems encountered in the codec implementation. If encoding completes successfully, the output() callback is called with a new encoded chunk as an argument. Another important detail is that frames need to be told when they are no longer needed by calling close().
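A minimal sketch of that encode step follows. The encodeFrame helper, the key frame interval of 150, and the queue limit of 2 are assumptions chosen for illustration; encodeQueueSize, encode() with its keyFrame option, and close() are the API pieces described above.

```javascript
// Submits one frame for encoding and releases it afterwards.
// Returns false when the frame was dropped because the encoder is backed up.
// keyInterval and maxQueue are illustrative defaults, not API requirements.
function encodeFrame(encoder, frame, frameIndex, keyInterval = 150, maxQueue = 2) {
  if (encoder.encodeQueueSize > maxQueue) {
    frame.close(); // too many pending encodes: drop this frame
    return false;
  }
  const keyFrame = frameIndex % keyInterval === 0;
  encoder.encode(frame, { keyFrame });
  frame.close(); // the encoder holds its own reference to the frame data
  return true;
}
```

Dropping frames when the queue grows suits live sources; an offline transcoder would more likely wait for the queue to drain instead.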

Finally it's time to finish encoding code by writing a function that handles chunks of encoded video as they come out of the encoder. Usually this function would be sending data chunks over the network or muxing them into a media container for storage.
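One possible shape for such a chunk handler is sketched below. The packet layout and the `send` callback are hypothetical; byteLength, copyTo(), type, timestamp, and duration are the EncodedVideoChunk members being relied on.

```javascript
// Copies an encoded chunk into a plain object that can be sent or muxed.
// The packet shape is an assumption made for this example.
function chunkToPacket(chunk) {
  const data = new Uint8Array(chunk.byteLength);
  chunk.copyTo(data); // copy the encoded bytes out of the chunk
  return {
    type: chunk.type,           // "key" or "delta"
    timestamp: chunk.timestamp, // microseconds
    duration: chunk.duration,
    data,
  };
}

// Returns a function suitable as the encoder's output callback.
// `send` is a hypothetical function that ships the packet somewhere
// (network, muxer, another worker).
function makeChunkHandler(send) {
  return (chunk, metadata) => send(chunkToPacket(chunk), metadata);
}
```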

If at some point you'd need to make sure that all pending encoding requests have been completed, you can call flush() and wait for its promise.

Setting up a VideoDecoder is similar to what's been done for the VideoEncoder: two functions are passed when the decoder is created, and codec parameters are given to configure().

The set of codec parameters varies from codec to codec. For example, the H.264 codec might need a binary blob of AVCC, unless it's encoded in the so-called Annex B format (encoderConfig.avc = { format: "annexb" }).

Once the decoder is initialized, you can start feeding it with EncodedVideoChunk objects. To create a chunk, you'll need:

- A BufferSource of encoded video data.

- The chunk's start timestamp in microseconds (media time of the first encoded frame in the chunk).

- The chunk's type: key if the chunk can be decoded independently from previous chunks, or delta if the chunk can only be decoded after one or more previous chunks have been decoded.

Also any chunks emitted by the encoder are ready for the decoder as is. All of the things said above about error reporting and the asynchronous nature of encoder's methods are equally true for decoders as well.
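Put together, decoding a list of encoded samples might look like the sketch below. The decodeSamples helper, the sample shape ({ isKey, timestampUs, data }), and the `ctors` parameter are assumptions for illustration; in a browser `ctors` defaults to the global scope, where VideoDecoder and EncodedVideoChunk live.

```javascript
// Decodes a list of encoded samples and resolves once all are processed.
// `samples` uses a hypothetical shape, e.g. as produced by a demuxer.
// `ctors` lets the constructors be swapped out when running outside a browser.
function decodeSamples(samples, config, onFrame, onError, ctors = globalThis) {
  const decoder = new ctors.VideoDecoder({ output: onFrame, error: onError });
  decoder.configure(config);

  for (const sample of samples) {
    const chunk = new ctors.EncodedVideoChunk({
      type: sample.isKey ? "key" : "delta",
      timestamp: sample.timestampUs, // microseconds
      data: sample.data,             // BufferSource of encoded bytes
    });
    decoder.decode(chunk); // returns immediately; frames arrive via onFrame
  }
  return decoder.flush(); // promise that settles when the queue is drained
}
```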

Now it's time to show how a freshly decoded frame can be shown on the page. It's better to make sure that the decoder output callback (handleFrame()) returns quickly. In the example below, it only adds a frame to the queue of frames ready for rendering. Rendering happens separately, and consists of two steps:

- Waiting for the right time to show the frame.

- Drawing the frame on the canvas.

Once a frame is no longer needed, call close() to release the underlying memory before the garbage collector gets to it. This reduces the average amount of memory used by the web application.
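The queue-then-render approach can be sketched as follows. The names timeUntilFrame and renderNext, and the injectable `now` clock, are assumptions for this example; `ctx` is expected to be a 2D canvas context, and frame timestamps are in microseconds.

```javascript
// Milliseconds to wait before a frame with the given timestamp is due.
function timeUntilFrame(timestampUs, baseTimeMs, nowMs) {
  const mediaTimeMs = nowMs - baseTimeMs; // playback time elapsed so far
  return Math.max(0, timestampUs / 1000 - mediaTimeMs);
}

const pendingFrames = [];

// Decoder output callback: only queues the frame, so it returns quickly.
function handleFrame(frame) {
  pendingFrames.push(frame);
}

// Renders the oldest queued frame when it is due, then releases it.
// Resolves to false if the queue was empty.
async function renderNext(ctx, baseTimeMs, now = () => performance.now()) {
  const frame = pendingFrames.shift();
  if (!frame) return false;
  const waitMs = timeUntilFrame(frame.timestamp, baseTimeMs, now());
  await new Promise((resolve) => setTimeout(resolve, waitMs)); // step 1: wait
  ctx.drawImage(frame, 0, 0);                                  // step 2: draw
  frame.close();
  return true;
}
```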

Use the Media Panel in Chrome DevTools to view media logs and debug WebCodecs.

The demo below shows how animation frames from a canvas are:

- captured at 25fps into a ReadableStream by MediaStreamTrackProcessor

- transferred to a web worker

- encoded into H.264 video format

- decoded again into a sequence of video frames

- and rendered on the second canvas using transferControlToOffscreen()

Other demos

Also check out our other demos:

- Decoding gifs with ImageDecoder

- Capture camera input to a file

- MP4 playback

- Other samples

Using the WebCodecs API

Feature detection

To check for WebCodecs support:

Keep in mind that the WebCodecs API is only available in secure contexts, so detection will fail if self.isSecureContext is false.
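A small detection helper might look like this. The function name and its three return strings are assumptions for illustration; the underlying checks are simply whether VideoEncoder is exposed on the global scope and whether the context is secure.

```javascript
// Reports why WebCodecs is or is not usable in the given global scope.
// `scope` defaults to the current global object (window or worker).
function detectWebCodecs(scope = globalThis) {
  if (scope.isSecureContext === false) {
    return "insecure-context"; // WebCodecs is never exposed here
  }
  return "VideoEncoder" in scope ? "supported" : "unsupported";
}

console.log(`WebCodecs: ${detectWebCodecs()}`);
```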

The Chrome team wants to hear about your experiences with the WebCodecs API.

Tell us about the API design

Is there something about the API that doesn't work like you expected? Or are there missing methods or properties that you need to implement your idea? Have a question or comment on the security model? File a spec issue on the corresponding GitHub repo, or add your thoughts to an existing issue.

Report a problem with the implementation

Did you find a bug with Chrome's implementation? Or is the implementation different from the spec? File a bug at new.crbug.com. Be sure to include as much detail as you can, simple instructions for reproducing, and enter Blink>Media>WebCodecs in the Components box. Glitch works great for sharing quick and easy repros.

Show support for the API

Are you planning to use the WebCodecs API? Your public support helps the Chrome team to prioritize features and shows other browser vendors how critical it is to support them.

Send emails to [email protected] or send a tweet to @ChromiumDev using the hashtag #WebCodecs and let us know where and how you're using it.

Hero image by Denise Jans on Unsplash .

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2020-10-13 UTC.


webrtcHacks

[10 years of] guides and information for WebRTC developers

Guide, Standards, Technology · insertable streams, streams, TransformStream, VideoEncoder, VideoFrame, w3c, webcodecs, WebGPU, WebNN, webtransport, WHATWG · François Daoust · March 14, 2023

Real-Time Video Processing with WebCodecs and Streams: Processing Pipelines (Part 1)

WebRTC used to be about capturing some media and sending it from Point A to Point B. Machine Learning has changed this. Now it is common to use ML to analyze and manipulate media in real time for things like virtual backgrounds, augmented reality, noise suppression, intelligent cropping, and much more. To better accommodate this growing trend, the web platform has been exposing its underlying platform to give developers more access. The result is not only more control within existing APIs, but also a bunch of new APIs like Insertable Streams, WebCodecs, Streams, WebGPU, and WebNN.

So how do all these new APIs work together? That is exactly what W3C specialists François Daoust and Dominique Hazaël-Massieux (Dom) decided to find out. In case you forgot, W3C is the World Wide Web Consortium that standardizes the Web. François and Dom are long-time standards guys with a deep history of helping to make the web what it is today.

This is the first of a two-part series of articles that explores the future of real-time video processing with WebCodecs and Streams. This first section provides a review of the steps and pitfalls in a multi-step video processing pipeline using existing and the newest web APIs. Part two will explore the actual processing of video frames.

I am thrilled about the depth and insights these guides provide on these cutting-edge approaches – enjoy!

About Processing Pipelines

Contents: Note: these APIs are new and may not work in your browser · Timing stats · What about the canvas, do we need WebCodecs · WebCodecs advantages · Note on containerized media · Processing streams using… streams · Backpressure · Creating a pipeline · From scratch · From a camera or a WebRTC track · From a WebTransport stream · What about WebTransport · What about data channels · Transforming a stream · To a <canvas> element · To a <video> element · Notes and caveats · To the cloud with WebTransport · Handling backpressure · Multiple workers · Learning: use a single worker for now · Measuring performance · How to process VideoFrames

Editor: Chad Hart

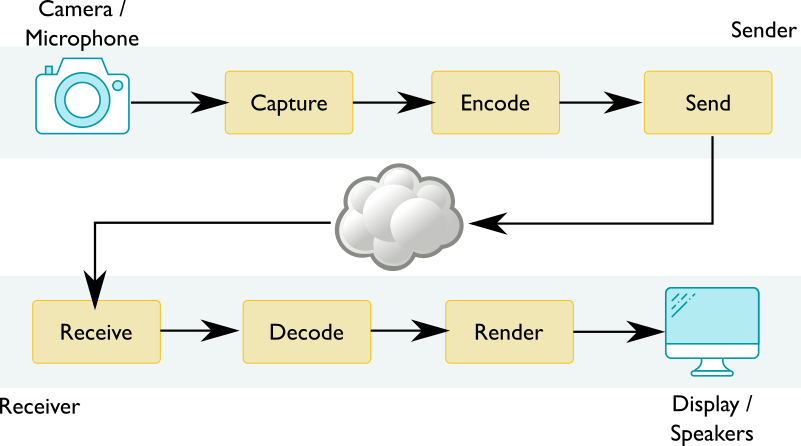

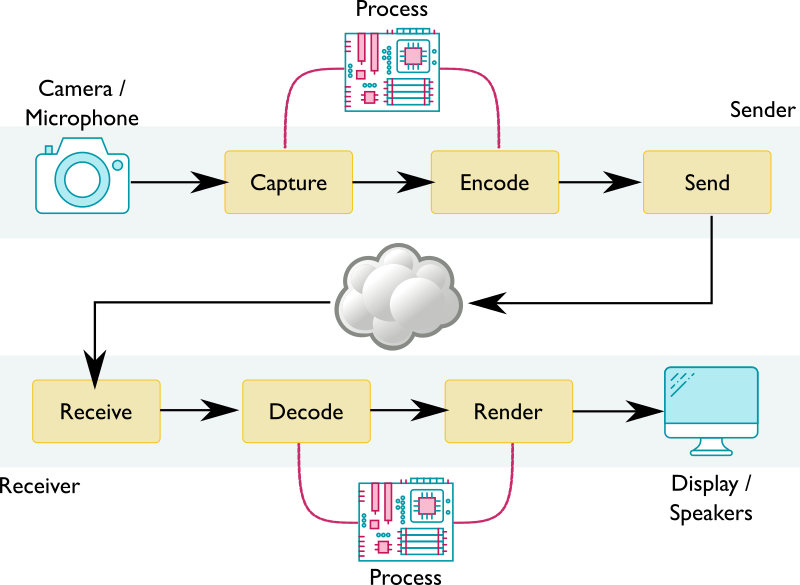

In simple WebRTC video conferencing scenarios, audio and video streams captured on one device are sent to another device, possibly going through some intermediary server. The capture of raw audio and video streams from microphones and cameras relies on getUserMedia. Raw media streams then need to be encoded for transport and sent over to the receiving side. Received streams must be decoded before they can be rendered. The resulting video pipeline is illustrated below. Web applications do not see these separate encode/send and receive/decode steps in practice – they are entangled in the core WebRTC API and under the control of the browser.

If you want to add the ability to do something like remove users’ backgrounds, the most scalable and privacy-respecting option is to do it client-side before the video stream is sent to the network. This operation needs access to the raw pixels of the video stream. Said differently, it needs to take place between the capture and encode steps. Similarly, on the receiving side, you may want to give users options like adjusting colors and contrast, which also require raw pixel access between the decode and render steps. As illustrated below, this adds extra processing steps to the resulting video pipeline.

This made Dominique Hazaël-Massieux and me wonder how web applications can build such media processing pipelines.

The main problem is raw frames from a video stream cannot casually be exposed to web applications. Raw frames are:

- large – several MB per frame,

- plentiful – 25 frames per second or more,

- not easily exposable – GPU to CPU read-back often needed, and

- varied – browsers need to deal with a variety of pixel formats (RGBA, YUV, etc.) and color spaces under the hood.

As such, whenever possible, web technologies that manipulate video streams on the web (HTMLMediaElement, WebRTC, getUserMedia, Media Source Extensions) treat them as opaque objects and hide the underlying pixels from applications. This makes it difficult for web applications to create a media processing pipeline in practice.

Fortunately, the VideoFrame interface in WebCodecs may help, especially if you couple this with the MediaStreamTrackProcessor object defined in MediaStreamTrack Insertable Media Processing using Streams, which creates a bridge between WebRTC and WebCodecs. WebCodecs lets you access and process raw pixels of media frames. Actual processing can use one of many technologies, starting with good ol’ JavaScript and including WebAssembly, WebGPU, or the Web Neural Network API (WebNN).

After processing, you could get back to WebRTC land through the same bridge. That said, WebCodecs can also put you in control of the encode/decode steps in the pipeline through its VideoEncoder and VideoDecoder interfaces. These can give you full control over all individual steps in the pipeline:

- For transporting the processed image somewhere while keeping latency low, you could consider WebTransport or WebRTC’s RTCDataChannel.

- For rendering, you could render directly to a canvas through drawImage, using WebGPU, or via a <video> element through VideoTrackGenerator (also defined in MediaStreamTrack Insertable Media Processing using Streams).

Inspired by sample code created by Bernard Aboba – co-editor of the WebCodecs and WebTransport specifications and co-chair of the WebRTC Working Group in W3C – Dominique and I decided to spend a bit of time exploring the creation of processing media pipelines. First, we wanted to better grasp media concepts such as video pixel formats and color spaces – we probably qualify as web experts, but we are not media experts and we tend to view media streams as opaque beasts as well. Second, we wanted to assess whether technical gaps remain. Finally, we wanted to understand where and when copies get made and gather some performance metrics along the way.

This article describes our approach, provides highlights of our resulting demo code, and shares our learnings. The code should not be seen as authoritative or even correct (though we hope it is); it is just the result of a short journey in the world of media processing. Also, note the technologies under discussion are still nascent and do not yet support interoperability across browsers. Hopefully, this will change soon!

Note: We did not touch on audio for lack of time. Audio frames take less memory, but there are many more of them per second and they are more sensitive to timing hiccups. Audio frames are processed with the Web Audio API. It would be very interesting to add audio to the mix, be it only to explore audio/video synchronization needs.

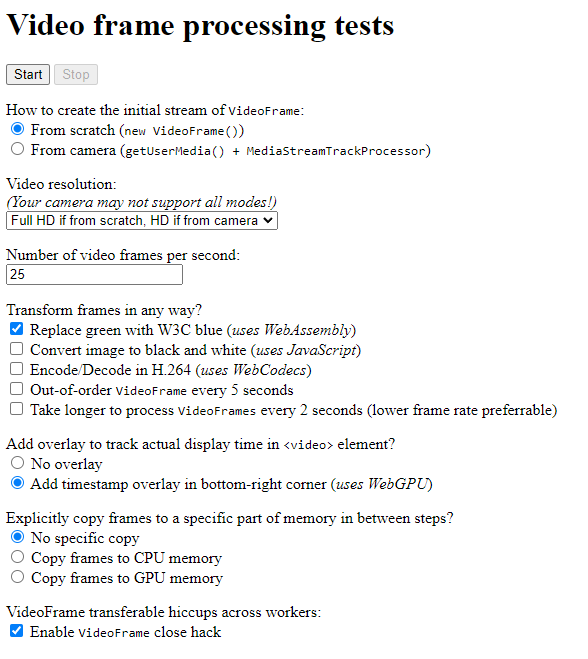

Our demo explores the creation of video processing pipelines, captures performance metrics, evaluates the impacts of choosing a specific technology to process frames, and provides insights about where operations get done and when copies are made. The processing operations loop through all pixels in the frame and “do something with them” (what they actually do is of little interest here). Different processing technologies are used for testing purposes, not because they would necessarily be a good choice for the problem at hand.

The demo lets the user:

- Choose a source of input to create an initial stream of VideoFrame objects: either a Nyan-cat-like animation created from scratch using OffscreenCanvas, or a live stream generated from a camera. The user may also choose the resolution and framerate of the video stream.

- Process video frames to replace green with blue using WebAssembly.

- Process video frames to turn them into black and white using pure JavaScript.

- Add an H.264 encoding/decoding transformation stage using WebCodecs.

- Introduce slight delays in the stream using regular JavaScript.

- Add an overlay to the bottom right part of the video that encodes the frame’s timestamp. The overlay is added using WebGPU and WGSL.

- Add intermediary steps to force copies of the frame to CPU memory or GPU memory, to evaluate the impact of the frame’s location in memory on transformations.

Once you hit the “Start” button, the pipeline runs and the resulting stream is displayed on the screen in a <video> element. And… that’s it, really! What mattered to us was the code needed to achieve that and the insights we gained from gathering performance metrics and playing with parameters. Let’s dive into that!

Technologies discussed in this article and used in the demo are still “emerging” (at least as of March 2023). The demo currently only runs in Google Chrome Canary with WebGPU enabled (“Unsafe WebGPU” flag set in chrome://flags/). Hopefully, the demo can soon run in other browsers too. Video processing with WebCodecs is available in the technical preview of Safari (16.4) and is under development in Firefox. WebGPU is also under development in Safari and Firefox. A greater unknown is support for MediaStreamTrack Insertable Media Processing using Streams in other browsers. For example, see this tracking bug in Firefox.

Timing statistics are reported at the bottom of the page once the run ends, and as objects to the console (this requires opening the dev tools panel). Provided the overlay was present, display times for each frame are reported too.

We’ll discuss more on this in the Measuring Performance section.

The role of WebCodecs in client-side processing

WebCodecs is the core of the demo and the key technology we’re using to build a media pipeline. Before we dive more into this, it may be useful to reflect on the value of using WebCodecs in this context. Other approaches could work just as well.

In fact, client-side processing of raw video frames has been possible on the web ever since the <video> and <canvas> elements were added to HTML, with the following recipe:

- Render the video onto a <video> element.

- Draw the contents of the <video> element onto a <canvas> with drawImage on a recurring basis, e.g. using requestAnimationFrame or the more recent requestVideoFrameCallback that notifies applications when a video frame has been presented for composition and provides them with metadata about the frame.

- Process the contents of the <canvas> whenever it gets updated.

We did not integrate this approach in our demo. Among other things, the performance here would depend on having the processing happen out of the main thread. We would need to use an OffscreenCanvas to process contents in a worker, possibly coupled with a call to grabFrame to send the video frame to the worker.

One drawback to the Canvas approach is that there is no guarantee that all video frames get processed. Applications can tell how many frames they missed if they hook onto requestVideoFrameCallback by looking at the presentedFrames counter, but missed frames were, by definition, missed. Another drawback is that some of the code (drawImage or grabFrame) needs to run on the main thread to access the <video> element.

WebGL and WebGPU also provide mechanisms to import video frames as textures directly from a <video> element, e.g. through the importExternalTexture method in WebGPU. This approach works well if the processing logic can fully run on the GPU.

WebCodecs gives applications a direct handle to a video frame and mechanisms to encode/decode them. This allows applications to create frames from scratch, or from an incoming stream, provided that the stream is in non-containerized form.

One important note – media streams are usually encapsulated in a media container. The container may include other streams along with timing and other metadata. While media streams in WebRTC scenarios do not use containers, most stored media files and media streamed on the web use adaptive streaming technologies (e.g. DASH, HLS) that are in a containerized form (e.g. MP4, ISOBMFF). WebCodecs can only be used on non-containerized streams. Applications that want to use WebCodecs with containerized media need to ship additional logic on their own to extract the media streams from their container (and/or to add streams to a container). For more information about media container formats, we recommend The Definitive Guide to Container File Formats by Armin Trattnig.

So, having a direct handle on a video frame seems useful to create a media processing pipeline. It gives a handle to the atomic chunk of data that will be processed at each step.

Pipe chains

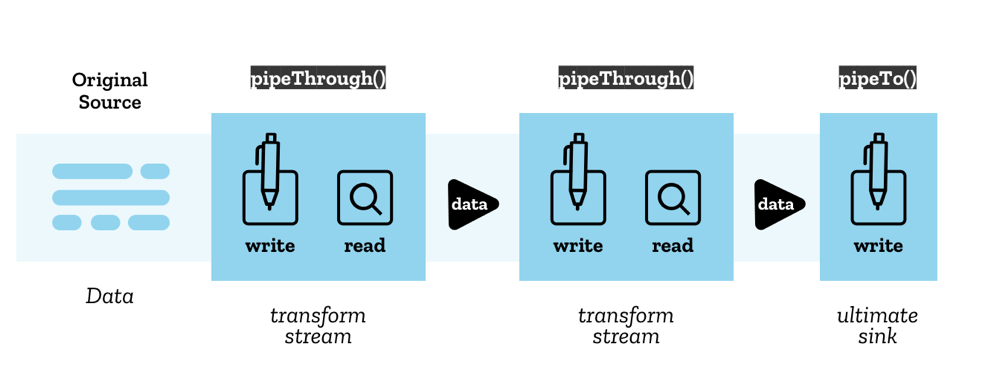

WHATWG Streams are specifically designed to create pipe chains to process such atomic chunks. This is illustrated in the Streams API concepts MDN page:

WHATWG Streams are also used as the underlying structure by some of the technologies under consideration, such as WebTransport, VideoTrackGenerator, and MediaStreamTrackProcessor.

Finally, Streams provide backpressure and queuing mechanisms out of the box. As defined in the WHATWG Streams standard, backpressure is the process of “normalizing flow from the original source according to how fast the chain can process chunks.”

When a step in a chain is unable to accept more chunks in its queue, it sends a signal that propagates backward through the pipe chain and up to the source to tell it to adjust its rate of production of new chunks. With backpressure, there is no need to worry about overflowing queues: the flow naturally adapts to the maximum speed at which processing can run.

Broadly speaking, creating a media processing pipeline using streams translates to:

- Create a stream of VideoFrame objects – somehow

- Use TransformStream to create processing steps – compose them as needed

- Send/Render the resulting stream or VideoFrame objects – somehow

The devil is, of course, in the “somehow”. Some technologies can ingest or digest a stream of VideoFrame objects directly – not all of them can. Connectors are needed.
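The three steps can be sketched with nothing but WHATWG streams. To keep the example runnable anywhere streams exist, plain objects stand in for VideoFrame objects; the helper names and the “grayscale”/“overlay” labels are assumptions for illustration, not the demo's actual code.

```javascript
// Step 1: a source producing stand-in "frames" (plain objects, not VideoFrame).
function createFrameSource(count) {
  let i = 0;
  return new ReadableStream({
    pull(controller) {
      if (i >= count) {
        controller.close();
        return;
      }
      controller.enqueue({ timestamp: i * 40_000, label: "raw" }); // 25 fps
      i++;
    },
  });
}

// Step 2: a processing step that tags each frame it has handled.
function createStep(name) {
  return new TransformStream({
    transform(frame, controller) {
      controller.enqueue({ ...frame, label: `${frame.label}>${name}` });
    },
  });
}

// Step 3: a sink that collects the resulting frames.
async function runPipeline(count) {
  const seen = [];
  await createFrameSource(count)
    .pipeThrough(createStep("grayscale"))
    .pipeThrough(createStep("overlay"))
    .pipeTo(new WritableStream({ write(frame) { seen.push(frame); } }));
  return seen;
}
```

Because the source only produces a frame when `pull()` is called, it automatically slows down whenever a later step falls behind.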

Pipelining is like a game of dominoes

We found it useful to visualize possibilities through a game of dominoes:

The left side of each domino is a type of input. The right side is a type of output. There are three main types of dominoes:

- generators,

- transformers, and

- consumers.

As long as you match the input of a domino with the output of the preceding one, you may assemble them any way you like to create pipelines. Let’s look at them in more detail:

Generating a stream

You may create a VideoFrame from the contents of a canvas (or a buffer of bytes for that matter). Then, to generate a stream, just write the frame to a WritableStream at a given rate. In our code, this is implemented in the worker-getinputstream.js file. The logic creates a Nyan-cat-like animation with the W3C logo. As we will describe later, we make use of the WHATWG Streams backpressure mechanism by waiting for the writer to be ready:
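The following is a minimal sketch of that pattern, not the code from worker-getinputstream.js. The writeFrames helper and its makeFrame callback are assumptions; in a browser, makeFrame would typically return `new VideoFrame(canvas, { timestamp })`.

```javascript
// Writes `count` frames to a WritableStream at a fixed interval,
// pausing whenever the stream signals backpressure.
async function writeFrames(writable, makeFrame, count, frameIntervalMs) {
  const writer = writable.getWriter();
  for (let i = 0; i < count; i++) {
    // `ready` only resolves while the stream's queue has room.
    await writer.ready;
    // Not awaited on purpose: stream errors resurface through
    // `writer.ready` and `writer.close()`.
    writer.write(makeFrame(i)).catch(() => {});
    await new Promise((resolve) => setTimeout(resolve, frameIntervalMs));
  }
  await writer.close();
}
```

A typical way to expose the result as a stream is to write into the `writable` side of `new TransformStream()` and hand its `readable` side to the rest of the pipeline.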

In WebRTC contexts, the source of a video stream is usually a MediaStreamTrack obtained from the camera through a call to getUserMedia, or received from a peer. The MediaStreamTrackProcessor object (MSTP) can be used to convert the MediaStreamTrack to a stream of VideoFrame objects.

Note: MediaStreamTrackProcessor is only exposed in worker contexts… in theory, but Chrome currently exposes it on the main thread and only there.
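A hedged sketch of the conversion and of a read loop follows. The helper names and the injectable ProcessorCtor parameter are assumptions; the `{ track }` init and the `readable` property are what MediaStreamTrackProcessor exposes.

```javascript
// Converts a MediaStreamTrack into a ReadableStream of VideoFrame objects.
function trackToFrameStream(track,
                            ProcessorCtor = globalThis.MediaStreamTrackProcessor) {
  if (typeof ProcessorCtor !== "function") {
    throw new Error("MediaStreamTrackProcessor is not available in this context");
  }
  return new ProcessorCtor({ track }).readable;
}

// Reads frames one by one, closing each after `handle` is done with it.
// Resolves to the number of frames read when the stream ends.
async function forEachFrame(readable, handle) {
  const reader = readable.getReader();
  let count = 0;
  while (true) {
    const { done, value: frame } = await reader.read();
    if (done) return count;
    try {
      await handle(frame);
    } finally {
      frame.close(); // release the frame even if the handler throws
    }
    count++;
  }
}
```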

WebTransport creates WHATWG streams, so there is no need to run any stream conversion. That said, it is fairly inefficient to transport raw decoded frames given their size. That is why all media streams travel encoded through the cloud! As such, the WebTransportReceiveStream will typically contain encoded chunks, to be interpreted as EncodedVideoChunk objects. To get back to a stream of VideoFrame objects, each chunk needs to go through a VideoDecoder. Simple chunk encoding/decoding logic (without WebTransport) can be found in the worker-transform.js file.

The demo does not integrate WebTransport yet. We encourage you to check Bernard Aboba’s WebCodecs/WebTransport sample. Both the sample and approach presented here are limited in that only one stream is used to send/receive encoded frames. Real-life applications would likely be more complex to avoid head-of-line blocking issues. They would likely use multiple transport streams in parallel, up to one per frame. On the receiving end, frames received on individual streams then need to be reordered and merged to re-create a unique stream of encoded frames. The IETF Media over QUIC (moq) Working Group develops such a low-latency media delivery solution (over raw QUIC or WebTransport).

RTCDataChannel could also be used to transport encoded frames, with the caveat that some adaptation logic would be needed to connect RTCDataChannel with Streams.

Once you have a stream of VideoFrame objects, video processing can be structured as a TransformStream that takes a VideoFrame as input and produces an updated VideoFrame as output. Transform streams can be chained as needed, although it is always a good idea to keep the number of steps that need to access pixels to a minimum, since accessing pixels in a video frame typically means looping through millions of them (i.e. 1920 * 1080 = 2 073 600 pixels for a video frame in full HD).
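To give a feel for such a per-pixel step, here is a sketch that converts RGBA pixels to grayscale inside a TransformStream. It is not the demo's code: frames are stand-in objects of the assumed shape `{ data, timestamp }`, where `data` holds RGBA bytes (in a browser the bytes would first be copied out of a VideoFrame), and the luma weights are the common BT.601 ones.

```javascript
// Converts RGBA pixels to grayscale in place, one pixel (4 bytes) at a time.
function toGrayscale(rgba) {
  for (let i = 0; i < rgba.length; i += 4) {
    const luma = Math.round(
      0.299 * rgba[i] + 0.587 * rgba[i + 1] + 0.114 * rgba[i + 2]
    );
    rgba[i] = rgba[i + 1] = rgba[i + 2] = luma; // alpha (i + 3) is untouched
  }
  return rgba;
}

// Wraps the pixel loop in a processing step that can be piped through.
function createGrayscaleStep() {
  return new TransformStream({
    transform(frame, controller) {
      toGrayscale(frame.data);
      controller.enqueue(frame);
    },
  });
}

// For a full HD frame the loop above runs this many times:
const fullHdPixels = 1920 * 1080; // 2 073 600
```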

Note: Part 2 explores technologies that can be used under the hood to process the pixels. We also review performance considerations.

Sending/Rendering a stream

Some apps only need to extract information from the stream – like in the case of gesture detection. However, in most cases, the final stream needs to be rendered or sent somewhere.

A VideoFrame can be directly drawn onto a canvas. Simple!

Rendering frames to a canvas gives the applications full control over when to display those frames. This seems particularly useful when a video stream needs to be synchronized with something else, e.g. overlays and/or audio. One drawback is that, if the goal is to end up with a media player, you will have to re-implement that media player from scratch. That means adding controls, support for tracks, accessibility, etc. This is no easy task…

A stream of VideoFrame objects cannot be injected into a <video> element. Fortunately, a VideoTrackGenerator (VTG) can be used to convert the stream into a MediaStreamTrack that can then be injected into a <video> element.

Only for Workers

Note: VideoTrackGenerator is only exposed in worker contexts… in theory, but as for MediaStreamTrackProcessor, Chrome currently exposes it on the main thread and only there.

VideoTrackGenerator is the new MediaStreamTrackGenerator

Also note: VideoTrackGenerator used to be called MediaStreamTrackGenerator. Implementation in Chrome has not yet caught up with the new name, so our code still uses the old name!
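As a sketch of that last connector, the helper below pipes a stream of frames into a generator. It uses the older MediaStreamTrackGenerator shape, as the article's code does: constructed with `{ kind: "video" }`, exposing `writable`, and being a MediaStreamTrack itself. The helper name and the injectable GeneratorCtor parameter are assumptions; with the renamed VideoTrackGenerator, the track is instead exposed through a `track` property.

```javascript
// Pipes a stream of video frames into a track generator.
// Returns the generator (usable as a MediaStreamTrack with the legacy API)
// and a promise that settles when the piping ends.
function frameStreamToTrack(frames,
                            GeneratorCtor = globalThis.MediaStreamTrackGenerator) {
  const generator = new GeneratorCtor({ kind: "video" });
  const done = frames.pipeTo(generator.writable);
  return { track: generator, done };
}
```

In a page, the returned track could then be attached with `video.srcObject = new MediaStream([track])`.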

WebTransport can be used to send the resulting stream to the cloud. As noted before, it would require too much bandwidth to send unencoded video frames in a WebTransportSendStream . They need to be encoded first, using the VideoEncoder interface defined in WebCodecs. Simple frame encoding/decoding logic (without WebTransport) can be found in the worker-transform.js file.

Streams come geared with a backpressure mechanism. Signals propagate through the pipe chain and up to the source when the queue is building up to indicate it might be time to slow down or drop one or more frames. This mechanism is very convenient to avoid accumulating large decoded video frames in the pipeline that could exhaust memory. One second of full HD video at 25 frames per second takes roughly 200 MB of memory once decoded (at 4 bytes per pixel).
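The memory figure can be checked with a little arithmetic. The sketch below assumes 4 bytes per pixel (RGBA); the I420 line (1.5 bytes per pixel on average) is added for comparison and is not a figure from the article.

```javascript
// Bytes needed to hold `seconds` of decoded video in memory.
function decodedBytes(width, height, fps, seconds, bytesPerPixel) {
  return width * height * bytesPerPixel * fps * seconds;
}

const rgbaBytes = decodedBytes(1920, 1080, 25, 1, 4);   // 207 360 000 (~207 MB)
const i420Bytes = decodedBytes(1920, 1080, 25, 1, 1.5); //  77 760 000 (~78 MB)

console.log(`RGBA: ${(rgbaBytes / 1e6).toFixed(1)} MB per second of video`);
console.log(`I420: ${(i420Bytes / 1e6).toFixed(1)} MB per second of video`);
```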

The API also makes it possible for web applications to implement their own buffering strategy. If you need to process a live feed in real-time, you may want to drop frames that cannot be processed in time. Alternatively, if you need to transform recorded media then you can slow down and process all frames, no matter how long it takes.

One structural limitation is that backpressure signals only propagate through the pipeline in parts where WHATWG streams are used. They stop whenever the signals bump into something else. For instance, MediaStreamTrack does not expose a WHATWG streams interface. As a result, if a MediaStreamTrackProcessor is used in a pipeline, it receives backpressure signals but signals do not propagate beyond it. The buffering strategy is imposed: the oldest frame will be removed from the queue when room is needed for a new frame.
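The imposed strategy described above (evict the oldest frame to make room for a new one) can be sketched as a small bounded queue. This is an illustration only, not how MediaStreamTrackProcessor is implemented: the class name, `maxSize`, and the `dropped` counter are assumptions, and frames are only assumed to expose a `close()` method as VideoFrame does.

```javascript
// Illustrative bounded queue that evicts the oldest frame when full.
// Hypothetical class; frames are assumed to expose close(), like VideoFrame.
class DropOldestQueue {
  constructor(maxSize) {
    this.maxSize = maxSize; // must be at least 1
    this.frames = [];
    this.dropped = 0;
  }

  push(frame) {
    if (this.frames.length >= this.maxSize) {
      const oldest = this.frames.shift();
      oldest.close(); // release the evicted frame's resources
      this.dropped += 1;
    }
    this.frames.push(frame);
  }

  shift() {
    return this.frames.shift();
  }
}
```

With `maxSize` set to 2, pushing three frames leaves the two most recent ones in the queue and closes the first.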

In other words, if you ever end up with a VideoTrackGenerator followed by a MediaStreamTrackProcessor in a pipeline, backpressure signals will be handled by the MediaStreamTrackProcessor and will not propagate to the source before the VideoTrackGenerator. You should not need to create such a pipeline, but we accidentally ended up with that configuration while writing the demo. Keep in mind that this is not equivalent to an identity transform.

Workers, TransformStream and VideoFrame

So far, we have assembled dominoes without being explicit about where the underlying code is going to run. With the notable exception of getUserMedia, all the components that we have discussed can run in workers. Running them outside of the main thread is either good practice or mandated, as in the case of VideoTrackGenerator and MediaStreamTrackProcessor – though note these interfaces are actually only available on the main thread in Chrome’s current implementation.

Now if we are going to have threads, why restrict yourself to one worker when you can create more? Even though a media pipeline describes a sequence of steps, it seems useful at first sight to try and run different steps in parallel.

To run a processing step in a worker, the worker needs to gain access to the initial stream of VideoFrame objects, which may have been created in another worker. Workers typically do not share memory, but the postMessage API may be used for cross-worker communication. A VideoFrame is not a simple object, but it is defined as a transferable object, which essentially means that it can be sent from one worker to another efficiently, without requiring a copy of the underlying frame data.

Note: Transfer detaches the object being transferred, which means that the transferred object can no longer be used by the worker that issued the call to postMessage.
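The detaching behavior can be observed with any transferable object. The sketch below uses an ArrayBuffer as a stand-in for a VideoFrame and `structuredClone` as a stand-in for `postMessage` (both follow the same transfer rules), so that it can run outside of a worker setup; it assumes a runtime where `structuredClone` is available.

```javascript
// ArrayBuffer stands in for VideoFrame, structuredClone for postMessage.
const pixels = new ArrayBuffer(1920 * 1080 * 4); // one full HD frame, 4 bytes per pixel
const received = structuredClone(pixels, { transfer: [pixels] });

console.log(received.byteLength); // 8294400
console.log(pixels.byteLength);   // 0: the sender's buffer is now detached
```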

One approach to run processing steps in separate workers would be to issue a call to postMessage for each and every VideoFrame at the end of a processing step to pass it over to the next step. From a performance perspective, while postMessage is not necessarily slow, the API is event-based and events still introduce delays. A better approach would be to pass the stream of VideoFrame objects once and for all when the pipeline is created. This is possible because ReadableStream, WritableStream and TransformStream are all transferable objects as well. Code to connect an input and output stream to another worker could then become:
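A minimal sketch of such a hand-off follows. The helper name and the message shape (`type`, `streams`) are assumptions made for illustration; only the use of the `postMessage` transfer list comes from the text above.

```javascript
// Hypothetical helper: hands both ends of a pipeline segment over to a worker.
// `input` would be a ReadableStream and `output` a WritableStream.
function connectStreamsToWorker(worker, input, output) {
  worker.postMessage(
    { type: "start", streams: { input, output } }, // assumed message shape
    [input, output] // transfer list: both streams move to the worker
  );
}
```

On the worker side, a message handler could then read `event.data.streams` and run something like `input.pipeThrough(someTransform).pipeTo(output)`, where `someTransform` is whatever processing step that worker is responsible for.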

Now, the fact that streams get transferred does not mean that the chunks that get read from or written to these streams are themselves transferred. Chunks are rather serialized. The nuance is thin (and should have a very minimal impact on performance) but particularly important for VideoFrame objects. Why? Because a VideoFrame needs to be explicitly closed through a call to its close method to free the underlying media resource that the VideoFrame points to.

When a VideoFrame is transferred, its close method is automatically called on the sender side. When a VideoFrame is serialized, even though the underlying media resource is not cloned, the VideoFrame object itself is cloned, and the close method now needs to be called twice: once on the sender side and once on the receiver side. The receiver side is not an issue: calling close() there is to be expected. However, there is a problem on the sender’s side: a call like controller.enqueue(frame) in a TransformStream attached to a readable stream transferred to another worker will trigger the serialization process, but that process happens asynchronously and there is no way to tell when it is done. In other words, on the sender side, code cannot simply be:
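A minimal sketch of the kind of code in question, with a transformer that closes the frame right after enqueueing it (the transformer name is hypothetical, and the object would be passed to `new TransformStream(...)` in a browser):

```javascript
// Pattern to avoid when the readable side of the TransformStream has been
// transferred to another worker.
const closeTooEarly = {
  transform(frame, controller) {
    controller.enqueue(frame); // serialization of the frame happens later
    frame.close();             // too early: the frame may not have been cloned yet
  },
};
```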

If you do that, the browser will rightfully complain when it effectively serializes the frame that it cannot clone it because the frame has already been closed. And yet the sender needs to close the frame at some point. If you don’t, one of two things could happen:

- the browser will either report a warning that it bumped into dangling VideoFrame instances (which suggests a memory leak) or

- the pipeline simply freezes after a couple of frames are processed.

The pipeline freeze happens, for example, when the VideoFrame is tied to hardware-decoded data. Hardware decoders use a very limited memory buffer, so they pause until the memory of already decoded frames gets freed. This is a known issue. There are ongoing discussions to extend WHATWG streams with a new mechanism that would allow them to explicitly transfer ownership of the frame, so that the sender side does not need to worry about the frame anymore. See for example the Transferring Ownership Streams Explained proposal.

Note: Closing the frame synchronously as in the code above sometimes works in practice in Chrome depending on the underlying processing pipeline. We found it hard to reproduce the exact conditions that make the browser decide to clone the frame right away or delay it. As far as we can tell, the code should not work in any case.

For now, it is probably best to stick to touching streams of VideoFrame objects from one and only one worker. The demo does use more than one worker. It keeps track of frame instances to close at the end of the processing pipeline. However, we did that simply because we did not know initially that creating multiple workers would be problematic and require such a hack.
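The bookkeeping hack mentioned above could look roughly like the sketch below. It is not the demo's code: the class and method names are hypothetical, frames are keyed by their `timestamp`, and the way the last step reports completion back to the sender (for instance a `postMessage` carrying the timestamp) is an assumption.

```javascript
// Hypothetical registry of frames that were enqueued but cannot be closed yet.
class PendingFrames {
  constructor() {
    this.byTimestamp = new Map();
  }

  // Called by the sender right before controller.enqueue(frame).
  track(frame) {
    this.byTimestamp.set(frame.timestamp, frame);
  }

  // Called once the end of the pipeline reports that it is done with a frame.
  release(timestamp) {
    const frame = this.byTimestamp.get(timestamp);
    if (!frame) return false;
    frame.close();
    this.byTimestamp.delete(timestamp);
    return true;
  }

  get size() {
    return this.byTimestamp.size;
  }
}
```

The sender keeps its copy of each frame alive until the matching `release` call, which avoids closing a frame before the browser has serialized it.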

The timestamp property of a VideoFrame instance provides a good identifier for individual frames, and allows applications to track them throughout the pipeline. The timestamp even survives encoding with a VideoEncoder and decoding with a VideoDecoder.

In the suggested pipeline model, a transformation step is a TransformStream that operates on encoded or decoded frames. The time taken to run the transformation step is thus simply the time taken by the transform function, or more precisely the time taken until the function calls controller.enqueue(transformedChunk) to send the updated frame down the pipe. The demo features a generic InstrumentedTransformStream class that extends TransformStream to record start and end times for each frame in a static cache. The class is a drop-in replacement for TransformStream:
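The demo's class itself is not reproduced here. As a rough illustration of the same measurement idea, the sketch below wraps a transformer object instead of subclassing TransformStream: it records a start time when `transform` is called and an end time when the step calls `controller.enqueue`. The function name, the record shape, and the injectable `now` clock are all assumptions.

```javascript
// Hypothetical helper, not the demo's InstrumentedTransformStream class.
// Assumes the wrapped transformer defines transform() and that chunks
// carry a timestamp property (as VideoFrame and EncodedVideoChunk do).
function instrumentTransformer(name, transformer, records = [], now = () => performance.now()) {
  return {
    ...transformer,
    transform(chunk, controller) {
      const record = { step: name, id: chunk.timestamp, start: now(), end: undefined };
      records.push(record);
      // Proxy the controller so that the end time is taken at enqueue().
      const timedController = {
        get desiredSize() { return controller.desiredSize; },
        enqueue(out) { record.end = now(); controller.enqueue(out); },
        error(reason) { controller.error(reason); },
        terminate() { controller.terminate(); },
      };
      return transformer.transform(chunk, timedController);
    },
  };
}
```

A step would then be created with something like `new TransformStream(instrumentTransformer("overlay", { transform(frame, controller) { /* ... */ } }, records))`, and `records` read once the run is over.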

Recorded times then get entered in an instance of a generic StepTimesDB class to compute statistics such as minimum, maximum, average, and median times taken by each step, as well as time spent waiting in queues.
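The statistics listed above are straightforward to compute from a list of per-frame durations. The function below is a minimal sketch and not the demo's StepTimesDB class; its name and return shape are assumptions.

```javascript
// Summarize per-frame durations (in milliseconds) for one processing step.
function summarize(durations) {
  if (durations.length === 0) return null;
  const sorted = [...durations].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median = sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
  const sum = sorted.reduce((acc, d) => acc + d, 0);
  return {
    count: sorted.length,
    min: sorted[0],
    max: sorted[sorted.length - 1],
    avg: sum / sorted.length,
    median,
  };
}

console.log(summarize([22, 20, 30, 24]));
// { count: 4, min: 20, max: 30, avg: 24, median: 23 }
```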

This works well for the part of the pipeline that uses WHATWG Streams, but as soon as the pipeline uses opaque streams, such as when frames are fed into a VideoTrackGenerator, we lose the ability to track individual frames. In particular, there is no easy way to tell when a video frame is actually displayed in a <video> element. The requestVideoFrameCallback function reports many interesting timestamps, but not the timestamp of the frame that has been presented for composition.

The workaround implemented in the demo encodes the frame’s timestamp in an overlay in the bottom-right corner of the frame and then copies the relevant part of frames rendered to the <video> element to a <canvas> element whenever the requestVideoFrameCallback callback is called to decode the timestamp. This does not work perfectly – frames can be missed in between calls to the callback function, but it is better than nothing.

Note: requestVideoFrameCallback is supported in Chrome and Safari but not in Firefox for now.

It is useful for statistical purposes to track the time when the frame is rendered. For example, one could evaluate jitter effects. Or you could use this data for synchronization purposes, like if video needs to be synchronized with an audio stream and/or other non-video overlays. Frames can of course be rendered to a canvas instead. The application can then keep control over when a frame gets displayed to the user (ignoring the challenges of reimplementing a media player).

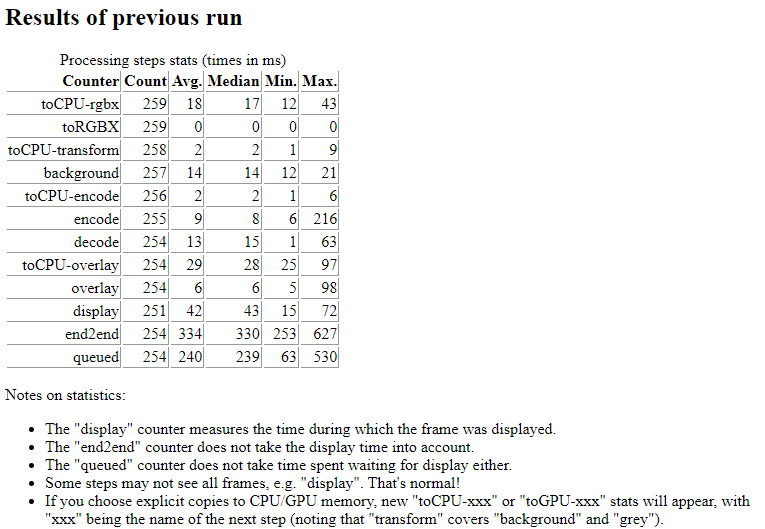

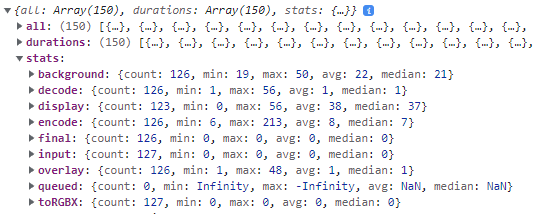

A typical example of statistics returned to the console at the end of a demo run is provided below:

The times are per processing step and per frame. The statistics include the average times taken by each step per frame. For this example: background removal took 22ms, adding the overlay 1ms, encoding 8ms, decoding 1ms, and frames stayed on display for 38ms.

This article explored the creation of a real-time video processing pipeline using WebCodecs and Streams, along with considerations on handling backpressure, managing the VideoFrame lifecycle, and measuring performance. The next step is to actually start processing the VideoFrame objects that such a pipeline would expose. Please stay tuned, this is the topic of part 2!

Author: François Daoust

Attributions

– WHATWG Stream logo: https://resources.whatwg.org/logo-streams.svg Licensed under a Creative Commons Attribution 4.0 International License: https://streams.spec.whatwg.org/#ipr

– Film strip: https://www.flaticon.com/free-icons/film Film icons created by Freepik – Flaticon


What is WebCodecs API?

Tue Apr 12 2022

There are several online editors for editing and processing audio and video files on the internet these days. Such websites eliminate the need for downloading and installing heavy-duty software on your computer. All you need to do is search for online editing tools, and Google will provide you with all the websites providing this service.

From the programmer’s perspective, building such web applications can be difficult. There are a lot of features that they need to implement to make a functional online video editor. And without a doubt, APIs will come into play.

Let’s look at one of the APIs that will help a developer build an online video editor.

WebCodecs API

It is a powerful web API that lets developers access the individual frames of a video. It also helps developers to encode and decode audio and video files. It does all this by working on a separate thread. This way, the site responsiveness is not compromised by the processing load.

The API provides access to a lot of features, for instance,

- Raw video frames

- Image decoder

- Audio/Video encoders and decoders

The media codecs that WebCodecs exposes are already used under the hood by several other APIs. For instance, the Web Audio API, MediaRecorder API, WebRTC API, etc., all rely on these codecs internally to process audio and video.

The WebCodecs API consists of many different interfaces, each with its own browser support. At the time of writing, almost none of them are supported by Firefox and Safari.

Let’s look at a few interfaces provided by the API.

AudioDecoder

This interface helps decode audio right inside your web application.

VideoDecoder

Like the AudioDecoder interface, this interface helps to decode video files inside your web browser.

AudioData

This interface represents unencoded audio data.

EncodedAudioChunk

This interface represents a chunk of encoded audio data.

New Safari features will make iPhone web apps feel more like native apps

Apple’s Safari browser in iOS and iPadOS 16.4 is gaining support for features like web push notifications, making iPhone web apps work more like native apps.

The browser now supports new technologies like web push notifications to improve the experience of using Progressive Web Apps (aka web apps) on the iPhone.

Apple also has relaxed some of the restrictions related to third-party browsers, which are now permitted to add web apps to the Home Screen.

Safari is adopting several new web features

- Safari will support push notifications from web apps such as Google Maps, Uber and Instagram. Once approved, web notifications will show up in the Notification Center and on the Lock Screen, just like notifications from native apps.

- Third-party web browsers like Google Chrome can now add web apps to the Home Screen. Before iOS 16.4, only Safari could do that.

- Extension syncing will ensure you use the same Safari extensions on your iPhone, iPad and Mac, making the user experience more consistent.

Web push notifications

Web apps won’t be permitted to send you notifications without permission. Web notifications will only work for web apps you’ve added to your Home Screen. Furthermore, web developers must explicitly enable support for this feature.

Additionally, you’ll need to turn on notifications in the web app’s settings and respond positively to a prompt asking whether you’d like to allow notifications.

Brady Eidson and Jen Simmons, WebKit blog :

A web app that has been added to the Home Screen can request permission to receive push notifications as long as that request is in response to direct user interaction—such as tapping on a Subscribe button provided by the web app. iOS or iPadOS will prompt the user to give the web app permission to send notifications.

From that moment, notifications from said web app will appear in the Notification Center, Daily Summaries, on the Lock Screen and your paired Apple Watch alongside notifications from native apps (this is already supported on macOS).

Web apps will display the number of unread notifications on their icon badge, just like native apps. You’ll be able to manage web notifications and how they appear in your notification settings, just like you would notifications for native apps.

You will also be able to filter web notifications using Focus modes.

Adding web apps to the Home Screen

With iOS 16.4, you can add a web app to the Home Screen using a third-party browser like Chrome. Before iOS 16.4, only Safari could add a web app to the Home Screen. You’ll choose Add to Home Screen from the share sheet, which will bring up an interface to add a web app to your Home Screen.

Saving a web app to the Home Screen ensures it launches in fullscreen, with no browser interface visible. Another significant change: Such web apps will launch in the third-party browser that added them to the Home Screen.

Previously, these things opened exclusively in Safari.

Developers can even provide an iOS-sized icon for their web app to appear on the Home Screen. Yet another exciting change: you can add multiple instances of the same app to the Home Screen.

iPhone web apps are about to become much more powerful

Summing up, Safari in iOS 16.4 and iPadOS 16.4 has adopted several web technologies, including the Push API, Notifications API, Badging API, WebCodecs API, Screen Wake Lock API, Import Maps, Media Queries, Service Workers, additional codecs for video processing (AV1), device orientation APIs and more.

The move will make web apps on the iPhone behave much more like their native counterparts. Web apps will be able to automatically adapt their interface between portrait and landscape modes, prevent your device from going to sleep when using a web app, and more. All told, there are 135 new features for Safari in iOS 16.4.


Web video codec guide

This guide introduces the video codecs you're most likely to encounter or consider using on the web, summaries of their capabilities and any compatibility and utility concerns, and advice to help you choose the right codec for your project's video.

Due to the sheer size of uncompressed video data, it's necessary to compress it significantly in order to store it, let alone transmit it over a network. Imagine the amount of data needed to store uncompressed video:

- A single frame of high definition (1920x1080) video in full color (4 bytes per pixel) is 8,294,400 bytes.

- At a typical 30 frames per second, each second of HD video would occupy 248,832,000 bytes (~249 MB).

- A minute of HD video would need 14.93 GB of storage.

- A fairly typical 30 minute video conference would need about 447.9 GB of storage, and a 2-hour movie would take almost 1.79 TB (that is, 1790 GB).
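The figures in the list above follow from one multiplication. As a quick check, the sketch below recomputes them; the function name and the decimal gigabyte conversion (1 GB = 10^9 bytes) are choices made for this example.

```javascript
// Size in bytes of uncompressed video with the given properties.
function uncompressedBytes({ width, height, bytesPerPixel, fps, seconds }) {
  return width * height * bytesPerPixel * fps * seconds;
}

const hd = { width: 1920, height: 1080, bytesPerPixel: 4, fps: 30 };
const toGB = (bytes) => (bytes / 1e9).toFixed(2);

console.log(uncompressedBytes({ ...hd, seconds: 1 }));              // 248832000
console.log(toGB(uncompressedBytes({ ...hd, seconds: 60 })));       // 14.93
console.log(toGB(uncompressedBytes({ ...hd, seconds: 30 * 60 })));  // 447.90
console.log(toGB(uncompressedBytes({ ...hd, seconds: 2 * 3600 }))); // 1791.59
```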

Not only is the required storage space enormous, but the network bandwidth needed to transmit an uncompressed video like that would be enormous, at 249 MB/sec—not including audio and overhead. This is where video codecs come in. Just as audio codecs do for the sound data, video codecs compress the video data and encode it into a format that can later be decoded and played back or edited.

Most video codecs are lossy, in that the decoded video does not precisely match the source. Some details may be lost; the amount of loss depends on the codec and how it's configured, but as a general rule, the more compression you achieve, the more loss of detail and fidelity will occur. Some lossless codecs do exist, but they are typically used for archival and storage for local playback rather than for use on a network.

Common codecs

The following video codecs are those which are most commonly used on the web. For each codec, the containers (file types) that can support them are also listed. Each codec provides a link to a section below which offers additional details about the codec, including specific capabilities and compatibility issues you may need to be aware of.

Factors affecting the encoded video

As is the case with any encoder, there are two basic groups of factors affecting the size and quality of the encoded video: specifics about the source video's format and contents, and the characteristics and configuration of the codec used while encoding the video.

The simplest guideline is this: anything that makes the encoded video look more like the original, uncompressed, video will generally make the resulting data larger as well. Thus, it's always a tradeoff of size versus quality. In some situations, a greater sacrifice of quality in order to bring down the data size is worth that lost quality; other times, the loss of quality is unacceptable and it's necessary to accept a codec configuration that results in a correspondingly larger file.

Effect of source video format on encoded output

The degree to which the format of the source video will affect the output varies depending on the codec and how it works. If the codec converts the media into an internal pixel format, or otherwise represents the image using a means other than simple pixels, the format of the original image doesn't make any difference. However, things such as frame rate and, obviously, resolution will always have an impact on the output size of the media.

Additionally, all codecs have their strengths and weaknesses. Some have trouble with specific kinds of shapes and patterns, or aren't good at replicating sharp edges, or tend to lose detail in dark areas, or any number of possibilities. It all depends on the underlying algorithms and mathematics.

The degree to which these affect the resulting encoded video will vary depending on the precise details of the situation, including which encoder you use and how it's configured. In addition to general codec options, the encoder could be configured to reduce the frame rate, to clean up noise, and/or to reduce the overall resolution of the video during encoding.

Effect of codec configuration on encoded output

The algorithms used to encode video typically use one or more of a number of general techniques to perform their encoding. Generally speaking, any configuration option that is intended to reduce the output size of the video will probably have a negative impact on the overall quality of the video, or will introduce certain types of artifacts into the video. It's also possible to select a lossless form of encoding, which will result in a much larger encoded file but with perfect reproduction of the original video upon decoding.

In addition, each encoder utility may have variations in how they process the source video, resulting in differences in the output quality and/or size.

The options available when encoding video, and the values to be assigned to those options, will vary not only from one codec to another but depending on the encoding software you use. The documentation included with your encoding software will help you to understand the specific impact of these options on the encoded video.

Compression artifacts

Artifacts are side effects of a lossy encoding process in which the lost or rearranged data results in visibly negative effects. Once an artifact has appeared, it may linger for a while, because of how video is displayed. Each frame of video is presented by applying a set of changes to the currently-visible frame. This means that any errors or artifacts will compound over time, resulting in glitches or otherwise strange or unexpected deviations in the image that linger for a time.

To resolve this, and to improve seek time through the video data, periodic key frames (also known as intra-frames or i-frames) are placed into the video file. The key frames are full frames, which are used to repair any damage or artifact residue that's currently visible.

Aliasing is a general term for anything that upon being reconstructed from the encoded data does not look the same as it did before compression. There are many forms of aliasing; the most common ones you may see include:

Color edging

Color edging is a type of visual artifact that presents as spurious colors introduced along the edges of colored objects within the scene. These colors have no intentional color relationship to the contents of the frame.

Loss of sharpness

The act of removing data in the process of encoding video requires that some details be lost. If enough compression is applied, parts or potentially all of the image could lose sharpness, resulting in a slightly fuzzy or hazy appearance.

Lost sharpness can make text in the image difficult to read, as text—especially small text—is very detail-oriented content, where minor alterations can significantly impact legibility.

Lossy compression algorithms can introduce ringing, an effect where areas outside an object are contaminated with colored pixels generated by the compression algorithm. This happens when an algorithm uses blocks that span a sharp boundary between an object and its background. This is particularly common at higher compression levels.

Note the blue and pink fringes around the edges of the star above (as well as the stepping and other significant compression artifacts). Those fringes are the ringing effect. Ringing is similar in some respects to mosquito noise, except that while the ringing effect is more or less steady and unchanging, mosquito noise shimmers and moves.

Ringing is another type of artifact that can make it particularly difficult to read text contained in your images.

Posterizing

Posterization occurs when the compression results in the loss of color detail in gradients. Instead of smooth transitions through the various colors in a region, the image becomes blocky, with blobs of color that approximate the original appearance of the image.

Note the blockiness of the colors in the plumage of the bald eagle in the photo above (and the snowy owl in the background). The details of the feathers are largely lost due to these posterization artifacts.

Contouring or color banding is a specific form of posterization in which the color blocks form bands or stripes in the image. This occurs when the video is encoded with too coarse a quantization configuration. As a result, the video's contents show a "layered" look, where instead of smooth gradients and transitions, the transitions from color to color are abrupt, causing strips of color to appear.
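The effect of too coarse a quantization can be illustrated on a single color channel. The sketch below is a deliberate simplification (real codecs typically quantize transform coefficients rather than pixel values directly), and the function name and the choice of 4 levels are assumptions for this example: a smooth 256-step ramp collapses into 4 flat bands.

```javascript
// Quantize an 8-bit channel value to a given number of evenly spaced levels.
function quantize(value, levels) {
  const step = 255 / (levels - 1);
  return Math.round(Math.round(value / step) * step);
}

const gradient = Array.from({ length: 256 }, (_, i) => i); // smooth ramp 0..255
const banded = gradient.map((v) => quantize(v, 4));

console.log(new Set(banded).size); // 4
console.log(banded[100]);          // 85
```

Every input value between 43 and 127 maps to 85 here, which is what shows up on screen as a visible band instead of a gradual transition.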

In the example image above, note how the sky has bands of different shades of blue, instead of being a consistent gradient as the sky color changes toward the horizon. This is the contouring effect.

Mosquito noise

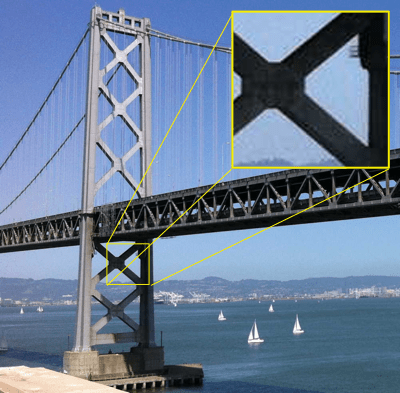

Mosquito noise is a temporal artifact which presents as noise or edge busyness that appears as a flickering haziness or shimmering that roughly follows outside the edges of objects with hard edges or sharp transitions between foreground objects and the background. The effect can be similar in appearance to ringing.

The photo above shows mosquito noise in a number of places, including in the sky surrounding the bridge. In the upper-right corner, an inset shows a close-up of a portion of the image that exhibits mosquito noise.

Mosquito noise artifacts are most commonly found in MPEG video, but can occur whenever a discrete cosine transform (DCT) algorithm is used; this includes, for example, JPEG still images.

Motion compensation block boundary artifacts

Compression of video generally works by comparing two frames and recording the differences between them, one frame after another, until the end of the video. This technique works well when the camera is fixed in place, or the objects in the frame are relatively stationary, but if there is a great deal of motion in the frame, the number of differences between frames can be so great that compression doesn't do any good.

Motion compensation is a technique that looks for motion (either of the camera or of objects in the frame of view) and determines how many pixels the moving object has moved in each direction. Then that shift is stored, along with a description of the pixels that have moved that can't be described just by that shift. In essence, the encoder finds the moving objects, then builds an internal frame of sorts that looks like the original but with all the objects translated to their new locations. In theory, this approximates the new frame's appearance. Then, to finish the job, the remaining differences are found, then the set of object shifts and the set of pixel differences are stored in the data representing the new frame. This object that describes the shift and the pixel differences is called a residual frame.
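The shift-plus-differences idea can be shown on a toy example. The sketch below works on a single row of pixels and a single horizontal shift, which is far simpler than what real encoders do; all function names are hypothetical, and the exhaustive search using the sum of absolute differences is one common way to pick the shift, not something the text above prescribes.

```javascript
// Shift a row by dx pixels, filling uncovered pixels with 0.
function shiftRow(row, dx) {
  return row.map((_, i) => (i - dx >= 0 && i - dx < row.length ? row[i - dx] : 0));
}

// Find the shift that best predicts `curr` from `prev`, then keep what the
// shift alone cannot explain as per-pixel differences.
function estimateMotion(prev, curr, maxShift) {
  let best = { dx: 0, cost: Infinity };
  for (let dx = -maxShift; dx <= maxShift; dx++) {
    const predicted = shiftRow(prev, dx);
    const cost = predicted.reduce((sum, p, i) => sum + Math.abs(curr[i] - p), 0);
    if (cost < best.cost) best = { dx, cost };
  }
  const predicted = shiftRow(prev, best.dx);
  return { dx: best.dx, residual: curr.map((c, i) => c - predicted[i]) };
}

// Rebuild the current row from the previous row, the shift and the differences.
function reconstruct(prev, { dx, residual }) {
  return shiftRow(prev, dx).map((p, i) => p + residual[i]);
}

const prev = [0, 0, 9, 9, 0, 0, 0, 0];
const curr = [0, 0, 0, 0, 9, 9, 1, 0]; // object moved 2 pixels right, one pixel changed
const encoded = estimateMotion(prev, curr, 3);

console.log(encoded.dx);                 // 2
console.log(encoded.residual.join(",")); // 0,0,0,0,0,0,1,0
```

The stored data is a shift of 2 plus a residual that is almost entirely zeros, which compresses much better than the raw pixel differences between the two rows.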

There are two general types of motion compensation: global motion compensation and block motion compensation. Global motion compensation generally adjusts for camera movements such as tracking, dolly movements, panning, tilting, rolling, and up and down movements. Block motion compensation handles localized changes, looking for smaller sections of the image that can be encoded using motion compensation. These blocks are normally of a fixed size, in a grid, but there are forms of motion compensation that allow for variable block sizes, and even for blocks to overlap.

There are, however, artifacts that can occur due to motion compensation. These occur along block borders, in the form of sharp edges that produce false ringing and other edge effects. These are due to the mathematics involved in the coding of the residual frames, and can be easily noticed before being repaired by the next key frame.

Reduced frame size

In certain situations, it may be useful to reduce the video's dimensions in order to improve the final size of the video file. While the immediate loss of size or smoothness of playback may be a negative factor, careful decision-making can result in a good end result. If a 1080p video is reduced to 720p prior to encoding, the resulting video can be much smaller while having much higher visual quality; even after scaling back up during playback, the result may be better than encoding the original video at full size and accepting the quality hit needed to meet your size requirements.

Reduced frame rate

Similarly, you can remove frames from the video entirely and decrease the frame rate to compensate. This has two benefits: it makes the overall video smaller, and that smaller size allows motion compensation to accomplish even more for you. For example, instead of computing motion differences for two frames that are two pixels apart due to inter-frame motion, skipping every other frame could lead to computing a difference that comes out to four pixels of movement. This lets the overall movement of the camera be represented by fewer residual frames.

The absolute minimum frame rate that a video can be before its contents are no longer perceived as motion by the human eye is about 12 frames per second. Less than that, and the video becomes a series of still images. Motion picture film is typically 24 frames per second, while standard definition television is about 30 frames per second (slightly less, but close enough) and high definition television is between 24 and 60 frames per second. Anything from 24 FPS upward will generally be seen as satisfactorily smooth; 30 or 60 FPS is an ideal target, depending on your needs.

In the end, the decisions about what sacrifices you're able to make are entirely up to you and/or your design team.

Codec details

The AOMedia Video 1 (AV1) codec is an open format designed by the Alliance for Open Media specifically for internet video. It achieves higher data compression rates than VP9 and H.265/HEVC, and as much as 50% higher rates than AVC. AV1 is fully royalty-free and is designed for use by both the <video> element and by WebRTC.

AV1 currently offers three profiles: main, high, and professional, with increasing support for color depths and chroma subsampling. In addition, a series of levels are specified, each defining limits on a range of attributes of the video. These attributes include frame dimensions, image area in pixels, display and decode rates, average and maximum bit rates, and limits on the number of tiles and tile columns used in the encoding/decoding process.

For example, AV1 level 2.0 offers a maximum frame width of 2048 pixels and a maximum height of 1152 pixels, but its maximum frame size in pixels is 147,456, so you can't actually have a 2048x1152 video at level 2.0. It's worth noting, however, that at least for Firefox and Chrome, the levels are actually ignored at this time when performing software decoding, and the decoder just does the best it can to play the video given the settings provided. For compatibility's sake going forward, however, you should stay within the limits of the level you choose.
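The level 2.0 example above can be expressed as a simple check against all three limits. The sketch below only encodes the three numbers quoted in the text; the object and function names are hypothetical, and a real level check involves more attributes (rates, tiles) than shown here.

```javascript
// Limits for AV1 level 2.0 as quoted above.
const AV1_LEVEL_2_0 = { maxWidth: 2048, maxHeight: 1152, maxPixels: 147456 };

// A frame size fits a level only if width, height AND total area are within limits.
function fitsLevel(width, height, level) {
  return (
    width <= level.maxWidth &&
    height <= level.maxHeight &&
    width * height <= level.maxPixels
  );
}

console.log(fitsLevel(2048, 1152, AV1_LEVEL_2_0)); // false: 2,359,296 pixels
console.log(fitsLevel(426, 240, AV1_LEVEL_2_0));   // true: 102,240 pixels
```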

The primary drawback to AV1 at this time is that it is very new, and support is still in the process of being integrated into most browsers. Additionally, encoders and decoders are still being optimized for performance, and hardware encoders and decoders are still mostly in development rather than production. For this reason, encoding a video into AV1 format takes a very long time, since all the work is done in software.

For the time being, because of these factors, AV1 is not yet ready to be your first choice of video codec, but you should watch for it to be ready to use in the future.

AVC (H.264)

The MPEG-4 specification suite's Advanced Video Coding (AVC) standard is specified by the identical ITU H.264 specification and the MPEG-4 Part 10 specification. It's a motion compensation based codec that is widely used today for all sorts of media, including broadcast television, RTP videoconferencing, and as the video codec for Blu-Ray discs.

AVC is highly flexible, with a number of profiles with varying capabilities; for example, the Constrained Baseline Profile is designed for use in videoconferencing and mobile scenarios, using less bandwidth than the Main Profile (which is used for standard definition digital TV in some regions) or the High Profile (used for Blu-Ray Disc video). Most of the profiles use 8-bit color components and 4:2:0 chroma subsampling; the High 10 Profile adds support for 10-bit color, and advanced forms of High 10 add 4:2:2 and 4:4:4 chroma subsampling.

AVC also has special features such as support for multiple views of the same scene (Multiview Video Coding), which allows, among other things, the production of stereoscopic video.

AVC is a proprietary format, however, and numerous patents are owned by multiple parties regarding its technologies. Commercial use of AVC media requires a license, though the MPEG LA patent pool does not require license fees for streaming internet video in AVC format as long as the video is free for end users.

Non-web browser implementations of WebRTC (any implementation which doesn't include the JavaScript APIs) are required to support AVC as a codec in WebRTC calls. While web browsers are not required to do so, some do.

In HTML content for web browsers, AVC is broadly compatible and many platforms support hardware encoding and decoding of AVC media. However, be aware of its licensing requirements before choosing to use AVC in your project!

Firefox support for AVC is dependent upon the operating system's built-in or preinstalled codecs for AVC and its container in order to avoid patent concerns.

H.263

ITU's H.263 codec was designed primarily for use in low-bandwidth situations. In particular, its focus is for video conferencing on PSTN (Public Switched Telephone Networks), RTSP, and SIP (IP-based videoconferencing) systems. Despite being optimized for low-bandwidth networks, it is fairly CPU intensive and may not perform adequately on lower-end computers. The data format is similar to that of MPEG-4 Part 2.

H.263 has never been widely used on the web. Variations on H.263 have been used as the basis for other proprietary formats, such as Flash video or the Sorenson codec. However, no major browser has ever included H.263 support by default. Certain media plugins have enabled support for H.263 media.

Unlike most codecs, H.263 defines fundamentals of an encoded video in terms of the maximum number of bits per frame (picture), or BPPmaxKb. During encoding, a value is selected for BPPmaxKb, and no frame of the video may exceed it. The final bit rate will depend on this, the frame rate, the compression, and the chosen resolution and block format.
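As a rough illustration of how the per-frame cap bounds the overall bit rate, the sketch below multiplies the cap by the frame rate. It assumes that BPPmaxKb is expressed in units of 1024 bits; that unit, the `maxBitsPerSecond` helper, and the sample values are assumptions made for the example, not details given in this guide.

```typescript
// Assumption: one BPPmaxKb unit corresponds to 1024 bits.
const BITS_PER_BPP_UNIT = 1024;

// Ceiling on the bit rate: every frame is at most bppMaxKb * 1024 bits,
// and framesPerSecond of them are sent each second.
function maxBitsPerSecond(bppMaxKb: number, framesPerSecond: number): number {
  return bppMaxKb * BITS_PER_BPP_UNIT * framesPerSecond;
}

// Illustrative values: a cap of 64 units at 30 frames per second.
console.log(maxBitsPerSecond(64, 30)); // 1966080
```

This is only a ceiling. The actual bit rate is normally much lower, because most frames compress to well under the cap.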

H.263 has been superseded by H.264 and is therefore considered a legacy media format which you generally should avoid using if you can. The only real reason to use H.263 in new projects is if you require support on very old devices on which H.263 is your best choice.

H.263 is a proprietary format, with patents held by a number of organizations and companies, including Telenor, Fujitsu, Motorola, Samsung, Hitachi, Polycom, and Qualcomm. To use H.263, you are legally obligated to obtain the appropriate licenses.

HEVC (H.265)

The High Efficiency Video Coding (HEVC) codec is defined by ITU's H.265 as well as by MPEG-H Part 2 (the still in-development follow-up to MPEG-4). HEVC was designed to support efficient encoding and decoding of video at sizes up to very high resolutions (including 8K video), with a structure specifically designed to let software take advantage of modern processors. Theoretically, HEVC can achieve compressed file sizes half that of AVC but with comparable image quality.

For example, each coding tree unit (CTU)—similar to the macroblock used in previous codecs—consists of a tree of luma values for each sample as well as a tree of chroma values for each chroma sample used in the same coding tree unit, as well as any required syntax elements. This structure supports easy processing by multiple cores.

An interesting feature of HEVC is that the main profile supports only 8-bit per component color with 4:2:0 chroma subsampling. Also interesting is that 4:4:4 video is handled specially. Instead of having the luma samples (representing the image's pixels in grayscale) and the Cb and Cr samples (indicating how to alter the grays to create color pixels), the three channels are instead treated as three monochrome images, one for each color, which are then combined during rendering to produce a full-color image.

HEVC is a proprietary format and is covered by a number of patents. Licensing is managed by MPEG LA; fees are charged to developers rather than to content producers and distributors. Be sure to review the latest license terms and requirements before making a decision on whether or not to use HEVC in your app or website!

Chrome supports HEVC for devices with hardware support on Windows 8+, Linux, and ChromeOS, and for all devices on macOS Big Sur 11+ and Android 5.0+.

Edge (Chromium) supports HEVC for devices with hardware support on Windows 10 1709+ when the HEVC video extensions from the Microsoft Store are installed, and has the same support status as Chrome on other platforms. Edge (Legacy) only supports HEVC for devices with a hardware decoder.

Mozilla will not support HEVC while it is encumbered by patents.

Opera and other Chromium based browsers have the same support status as Chrome.

Safari supports HEVC for all devices on macOS High Sierra or later.

MP4V-ES

The MPEG-4 Video Elementary Stream (MP4V-ES) format is part of the MPEG-4 Part 2 Visual standard. While MPEG-4 Part 2 video in general sees little use because it lacks compelling value relative to other codecs, MP4V-ES does have some usage on mobile. MP4V is essentially H.263 encoding in an MPEG-4 container.

Its primary purpose is to be used to stream MPEG-4 audio and video over an RTP session. However, MP4V-ES is also used to transmit MPEG-4 audio and video over a mobile connection using 3GP .

You almost certainly don't want to use this format, since it isn't supported in a meaningful way by any major browsers, and is quite obsolete. Files of this type should have the extension .mp4v, but sometimes are inaccurately labeled .mp4.

Firefox supports MP4V-ES in 3GP containers only.

Chrome does not support MP4V-ES; however, ChromeOS does.

MPEG-1 Part 2 Video

MPEG-1 Part 2 Video was unveiled at the beginning of the 1990s. Unlike the later MPEG video standards, MPEG-1 was created solely by MPEG, without the ITU's involvement.

Because any MPEG-2 decoder can also play MPEG-1 video, it's compatible with a wide variety of software and hardware devices. There are no active patents remaining in relation to MPEG-1 video, so it may be used free of any licensing concerns. However, few web browsers support MPEG-1 video without the support of a plugin, and with plugin use deprecated in web browsers, these are generally no longer available. This makes MPEG-1 a poor choice for use in websites and web applications.

MPEG-2 Part 2 Video

MPEG-2 Part 2 is the video format defined by the MPEG-2 specification, and is also occasionally referred to by its ITU designation, H.262. It is very similar to MPEG-1 video—in fact, any MPEG-2 player can automatically handle MPEG-1 without any special work—except it has been expanded to support higher bit rates and enhanced encoding techniques.

The goal was to allow MPEG-2 to compress standard definition television, so interlaced video is also supported. The standard definition compression rate and the quality of the resulting video met needs well enough that MPEG-2 is the primary video codec used for DVD video media.

MPEG-2 has several profiles available with different capabilities. Each profile is then available at four levels, each of which raises the limits on attributes of the video such as frame rate, frame dimensions, and bit rate. Most profiles use Y'CbCr with 4:2:0 chroma subsampling, but more advanced profiles support 4:2:2 as well. For example, the ATSC specification for television used in North America supports MPEG-2 video in high definition using the Main Profile at High Level, allowing 4:2:0 video at both 1920 x 1080 (30 FPS) and 1280 x 720 (60 FPS), at a maximum bit rate of 80 Mbps.

However, few web browsers support MPEG-2 without the support of a plugin, and with plugin use deprecated in web browsers, these are generally no longer available. This makes MPEG-2 a poor choice for use in websites and web applications.

Theora

Theora, developed by Xiph.org, is an open and free video codec which may be used without royalties or licensing. Theora is comparable in quality and compression rates to MPEG-4 Part 2 Visual and AVC, making it a very good if not top-of-the-line choice for video encoding. But its status as being free from any licensing concerns and its relatively low CPU resource requirements make it a popular choice for many software and web projects. The low CPU impact is particularly useful since there are no hardware decoders available for Theora.

Theora was originally based upon the VP3 codec by On2 Technologies. The codec and its specification were released under the LGPL license and entrusted to Xiph.org, which then developed it into the Theora standard.

One drawback to Theora is that it only supports 8 bits per color component, with no option to use 10 or more in order to avoid color banding. That said, 8 bits per component is still the most commonly used color format today, so this is only a minor inconvenience in most cases. Also, Theora can only be used in an Ogg container. The biggest drawback of all, however, is that it is not supported by Safari, leaving Theora unavailable not only on macOS but also on the many millions of iPhones and iPads.

The Theora Cookbook offers additional details about Theora as well as the Ogg container format it is used within.

Edge supports Theora with the optional Web Media Extensions add-on.

VP8

The Video Processor 8 (VP8) codec was initially created by On2 Technologies. Following their purchase of On2, Google released VP8 as an open and royalty-free video format under a promise not to enforce the relevant patents. In terms of quality and compression rate, VP8 is comparable to AVC.

If supported by the browser, VP8 allows video with an alpha channel, allowing the video to play with the background able to be seen through the video to a degree specified by each pixel's alpha component.

There is good browser support for VP8 in HTML content, especially within WebM files. This makes VP8 a good candidate for your content, although VP9 is an even better choice if available to you. Web browsers are required to support VP8 for WebRTC, but not all browsers that do so also support it in HTML audio and video elements.

VP9

Video Processor 9 (VP9) is the successor to the older VP8 standard developed by Google. Like VP8, VP9 is entirely open and royalty-free. Its encoding and decoding performance is comparable to or slightly faster than that of AVC, but with better quality. VP9's encoded video quality is comparable to that of HEVC at similar bit rates.

VP9's main profile supports only 8-bit color depth with 4:2:0 chroma subsampling, but its other profiles include support for deeper color and the full range of chroma subsampling modes. It supports several HDR implementations, and offers substantial freedom in selecting frame rates, aspect ratios, and frame sizes.

VP9 is widely supported by browsers, and hardware implementations of the codec are fairly common. VP9 is one of the two video codecs mandated by WebM (the other being VP8). Note however that Safari support for WebM and VP9 was only introduced in version 14.1, so if you choose to use VP9, consider offering a fallback format such as AVC or HEVC for iPhone, iPad, and Mac users.
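One way to apply the fallback advice is to list the available encodings in order of preference and pick the first one the browser reports it can play. The sketch below is a minimal illustration under stated assumptions: the `VideoSource` type, the `pickFirstSupported` helper, the file names, and the exact MIME strings are hypothetical examples, and the support check is passed in as a function so that the selection logic stays independent of any particular browser API.

```typescript
// Hypothetical description of one encoded version of a video.
interface VideoSource {
  url: string;      // illustrative file name, not a real resource
  mimeType: string; // container and codec, as a MIME type string
}

// Preferred format first, fallback after it.
const candidates: VideoSource[] = [
  { url: "clip.webm", mimeType: 'video/webm; codecs="vp9"' },
  { url: "clip.mp4", mimeType: 'video/mp4; codecs="avc1.42E01E"' },
];

// Returns the first source whose type the supplied check accepts,
// or undefined if none is supported.
function pickFirstSupported(
  sources: VideoSource[],
  isSupported: (mimeType: string) => boolean,
): VideoSource | undefined {
  return sources.find((source) => isSupported(source.mimeType));
}

// In a browser, the check could be based on HTMLMediaElement.canPlayType(),
// which returns "" when the type cannot be played:
//   const video = document.createElement("video");
//   const chosen = pickFirstSupported(candidates, (t) => video.canPlayType(t) !== "");
```

In plain HTML, the same effect is normally achieved by listing several `<source>` elements inside a `<video>` element and letting the browser choose the first one it can play.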

VP9 is a good choice if you are able to use a WebM container (and can provide fallback video when needed). This is especially true if you wish to use an open codec rather than a proprietary one.

Color spaces supported: Rec. 601, Rec. 709, Rec. 2020, SMPTE C, SMPTE-240M (obsolete; replaced by Rec. 709), and sRGB.

All versions of Chrome, Edge, Firefox, Opera, and Safari

Firefox only supports VP8 in MSE when no H.264 hardware decoder is available. Use MediaSource.isTypeSupported() to check for availability.
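The availability check mentioned above might look like the following sketch. `MediaSource.isTypeSupported()` is the real static method; the `mseSupports` wrapper, the `MediaSourceLike` type, and the sample MIME string are assumptions added for illustration. The wrapper looks up `MediaSource` on the global object at call time so that it returns `false`, rather than throwing, in environments without Media Source Extensions.

```typescript
// Minimal structural type for the part of MediaSource this sketch uses.
type MediaSourceLike = { isTypeSupported(type: string): boolean };

// Hypothetical helper: reports whether MSE can handle the given type.
// The second parameter defaults to the global MediaSource (if any) and can be
// replaced with a stand-in object, which is convenient for testing.
function mseSupports(
  mimeType: string,
  mediaSource: MediaSourceLike | undefined =
    (globalThis as unknown as { MediaSource?: MediaSourceLike }).MediaSource,
): boolean {
  return mediaSource !== undefined && mediaSource.isTypeSupported(mimeType);
}

// Example call with an illustrative VP8-in-WebM type string.
const canUseVp8InMse = mseSupports('video/webm; codecs="vp8"');
console.log(canUseVp8InMse);
```

The result depends on the browser and on the hardware decoders present, so the check is best made at run time, just before creating a source buffer.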

Choosing a video codec

The decision as to which codec or codecs to use begins with a series of questions to prepare yourself:

- Do you wish to use an open format, or are proprietary formats also to be considered?

- Do you have the resources to produce more than one format for each of your videos? The ability to provide a fallback option vastly simplifies the decision-making process.

- Are there any browsers you're willing to sacrifice compatibility with?

- How old is the oldest version of web browser you need to support? For example, do you need to work on every browser shipped in the past five years, or just the past one year?

In the sections below, we offer recommended codec selections for specific use cases. For each use case, you'll find up to two recommendations. If the codec which is considered best for the use case is proprietary or may require royalty payments, then two options are provided: first, an open and royalty-free option, followed by the proprietary one.

If you are only able to offer a single version of each video, you can choose the format that's most appropriate for your needs. The first one is recommended as being a good combination of quality, performance, and compatibility. The second option will be the most broadly compatible choice, at the expense of some amount of quality, performance, and/or size.

Recommendations for everyday videos